As AI gets smarter, agents now handle complex tasks. They make decisions across entire workflows with a lot of efficiency and good intent, but never with perfect accuracy. It’s easy for an agent to drift off-task, over-explain, under-explain, misread a prompt, or create headaches for whatever comes next. Sometimes the result ends up off-topic, incomplete, or even unsafe. As these agents begin to take on real work, we need a mechanism for checking their output before letting it go forward. That is one reason CrewAI has introduced task guardrails. Guardrails set the same expectations for every task: length, tone, quality, format, and accuracy are made explicit by rules. If the agent’s output drifts from those rules, the guardrail rejects it and makes the agent try again, which keeps the workflow steady. Guardrails help agents stay on track, consistent, and reliable from start to finish.

What Are Task Guardrails?

In CrewAI, task guardrails are validation checks applied to a particular task. They run immediately after an AI agent finishes producing the output for that task. If the output conforms to your rules, the workflow proceeds to the next step. If not, execution stops or the task is retried, depending on your configuration.

Think of a guardrail as a filter. The agent completes its work, but before that work can affect other tasks, the guardrail reviews it. Does it follow the expected format? Does it include the required keywords? Is it long enough? Is it relevant? Does it meet safety criteria? Only when the work has passed these checks does the workflow continue.


CrewAI has two types of guardrails to help you ensure compliance in your workflows:

1. Function-Based Guardrails

This is the most frequently used approach. You write a Python function that checks the agent’s output. The function returns a tuple:

- (True, validated output) if the output is valid

- (False, feedback message) if the output isn’t valid

Function-based guardrails are best suited to rule-based scenarios such as:

- Word count

- Required phrases

- JSON formatting

- Format validation

- Checking for keywords

For example, you might say: “Output must include the phrase electric kettle and be at least 150 words long.”
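As a rough illustration of the rule-based style, the sketch below checks that an output is valid JSON containing a set of required keys. It is plain Python rather than CrewAI code: `FakeOutput` is a hypothetical stand-in for the task output object (only a `raw` text attribute is assumed), and the key names are made up for the example. The function returns a pair: the parsed data on success, or a feedback message on failure.

```python
import json
from dataclasses import dataclass
from typing import Any, Tuple


@dataclass
class FakeOutput:
    """Hypothetical stand-in for an agent's task output; only `raw` is assumed."""
    raw: str


REQUIRED_KEYS = {"title", "description"}  # illustrative keys, not from CrewAI


def validate_json_output(result: FakeOutput) -> Tuple[bool, Any]:
    """Pass only if the raw text is a JSON object containing REQUIRED_KEYS."""
    try:
        data = json.loads(result.raw)
    except json.JSONDecodeError as exc:
        return (False, f"Output is not valid JSON: {exc.msg}")

    if not isinstance(data, dict):
        return (False, "Output must be a JSON object")

    missing = REQUIRED_KEYS - set(data)
    if missing:
        return (False, f"Missing required keys: {sorted(missing)}")

    return (True, data)


ok, payload = validate_json_output(
    FakeOutput('{"title": "Kettle", "description": "Boils water."}')
)
bad, feedback = validate_json_output(FakeOutput('{"title": "Kettle"}'))
print(ok, payload)
print(bad, feedback)
```

Returning the feedback string on failure matters because that message is what a retry can use to correct the next attempt.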

2. LLM-Based Guardrails

These guardrails use an LLM to assess whether an agent’s output satisfies less rigid criteria, such as:

- Tone

- Style

- Creativity

- Subjective quality

- Professionalism

Instead of writing code, you provide a text description such as: “Ensure the writing is friendly, doesn’t use slang, and feels appropriate for a general audience.” The model then examines the output and decides whether or not it passes.
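Conceptually, an LLM-based guardrail wraps the criteria and the agent’s output into a prompt for a judging model and turns the verdict into pass or fail. The sketch below shows that shape in plain Python under stated assumptions: `judge` is a hypothetical callable standing in for a real LLM call, the PASS/FAIL reply convention is invented for this example, and none of it is CrewAI’s actual implementation.

```python
from typing import Callable, Tuple

CRITERIA = (
    "Ensure the writing is friendly, doesn't use slang, "
    "and feels appropriate for a general audience."
)


def llm_guardrail(output: str, judge: Callable[[str], str]) -> Tuple[bool, str]:
    """Ask a judging model whether `output` meets CRITERIA.

    `judge` is a hypothetical function: prompt text in, model reply out.
    The reply is assumed to start with PASS or FAIL, then a short reason.
    """
    prompt = (
        f"Criteria: {CRITERIA}\n\n"
        f"Text to review:\n{output}\n\n"
        "Reply with PASS or FAIL on the first line, then a one-line reason."
    )
    reply = judge(prompt).strip()
    verdict, _, reason = reply.partition("\n")
    if verdict.strip().upper().startswith("PASS"):
        return (True, output)
    return (False, reason.strip() or "Rejected by judge")


def fake_judge(prompt: str) -> str:
    """Toy stand-in for an LLM so the sketch runs offline: flags one slang word."""
    reviewed = prompt.split("Text to review:\n", 1)[1]
    if "gonna" in reviewed.lower():
        return "FAIL\nContains slang."
    return "PASS\nLooks fine."


print(llm_guardrail("This kettle is gonna change your mornings.", fake_judge))
print(llm_guardrail("This kettle heats water quickly and quietly.", fake_judge))
```

In a real setup the judge would be a model call, so the verdict is probabilistic; that is the trade-off for being able to check tone and style at all.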

Both of these types are powerful. You can even combine them for layered validation.

Why Use Task Guardrails?

Guardrails exist in AI workflows for several important reasons. Here’s how they’re typically used:

1. Quality Control

AI-produced outputs can vary in quality: one prompt may yield an excellent response while the next misses the point entirely. Guardrails help regulate quality by setting minimum output standards. If an output is too short, unrelated to the request, or poorly organized, the guardrail ensures action is taken.

2. Safety and Compliance

Some workflows require strict accuracy. This is especially true in healthcare, finance, legal, or enterprise use cases. Guardrails help prevent hallucinations and unsafe or non-compliant outputs that violate guidelines. CrewAI has a built-in “hallucination guardrail” that checks whether content is fact-based to improve safety.

3. Reliability and Predictability

In multi-step workflows, one bad output can cause everything downstream to break. A malformed output from one agent can cause the next agent’s step to crash. Guardrails protect against invalid outputs, keeping pipelines reliable and predictable.

4. Automated Retry Logic

If you don’t want to fix outputs manually, you can have CrewAI retry automatically. If the guardrail fails, CrewAI can regenerate the output up to a configurable limit, for example up to two more times. This feature makes workflows more resilient and reduces the amount of supervision they need.

How Do Task Guardrails Work?

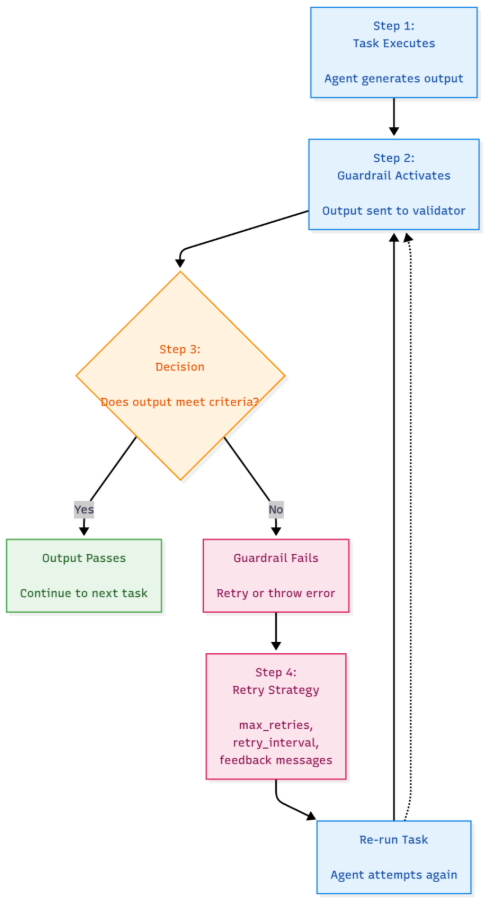

CrewAI’s task guardrails follow a straightforward yet powerful process. The agent executes the task and generates output, then the guardrail activates and receives that output. The guardrail checks the output against the rules you configured. If the output passes the check, the workflow continues. If it fails, the guardrail either triggers a retry or raises an error. You can customize retries by defining the maximum retries, retry intervals, and custom messages. CrewAI logs every attempt and provides visibility into exactly what happened at each step of the workflow. This loop keeps the system stable, improves accuracy, and makes the overall workflow more reliable.
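The loop described above can be sketched in a few lines of plain Python. This is a conceptual model, not CrewAI’s internals: `run_with_guardrail`, `generate`, and the attempt log are hypothetical names, and the agent is simulated by a function that receives the previous feedback.

```python
from typing import Any, Callable, List, Optional, Tuple

Guardrail = Callable[[str], Tuple[bool, Any]]


def run_with_guardrail(
    generate: Callable[[Optional[str]], str],
    guardrail: Guardrail,
    max_retries: int = 3,
) -> Tuple[str, List[str]]:
    """Generate output, validate it, and retry with feedback on failure.

    Returns the accepted output plus a log of what happened on each attempt.
    Raises RuntimeError once the initial attempt and all retries have failed.
    """
    log: List[str] = []
    feedback: Optional[str] = None
    for attempt in range(1, max_retries + 2):  # 1 initial try + max_retries
        output = generate(feedback)
        passed, payload = guardrail(output)
        if passed:
            log.append(f"attempt {attempt}: passed")
            return output, log
        feedback = str(payload)
        log.append(f"attempt {attempt}: failed ({feedback})")
    raise RuntimeError(f"Guardrail failed after {max_retries} retries: {feedback}")


def min_words(text: str) -> Tuple[bool, Any]:
    """Toy rule: require at least five words."""
    count = len(text.split())
    return (True, text) if count >= 5 else (False, f"only {count} words")


def toy_agent(feedback: Optional[str]) -> str:
    """Simulated agent: writes more once it has been told the draft was short."""
    return "A fast, quiet electric kettle." if feedback else "Electric kettle."


output, attempts = run_with_guardrail(toy_agent, min_words)
print(output)
print(attempts)
```

Passing the failure message back into the next attempt is the design choice that makes a retry more than a blind re-roll.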

Main Features & Best Practices

Function vs LLM Guardrails

Use function-based guardrails for explicit rules. Use LLM-based guardrails for subjective judgments.

Chaining Guardrails

You can run multiple guardrails, for example:

- Length check

- Keyword check

- Tone check

- Format check

The workflow continues only if all of them pass.
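One way to picture chaining is a helper that runs guardrails in order and stops at the first failure. The sketch below is plain Python with hypothetical names (`chain`, `length_check`, `keyword_check`) and an arbitrary 10-word threshold; it illustrates the all-must-pass idea rather than CrewAI’s own chaining mechanism.

```python
from typing import Any, Callable, Tuple

Guardrail = Callable[[str], Tuple[bool, Any]]


def length_check(text: str) -> Tuple[bool, Any]:
    """Illustrative rule: at least 10 words."""
    count = len(text.split())
    return (True, text) if count >= 10 else (False, f"Too short: {count} words")


def keyword_check(text: str) -> Tuple[bool, Any]:
    """Illustrative rule: must mention 'electric kettle'."""
    if "electric kettle" in text.lower():
        return (True, text)
    return (False, "Missing required phrase: 'electric kettle'")


def chain(*guardrails: Guardrail) -> Guardrail:
    """Combine guardrails into one that passes only if every check passes."""
    def combined(text: str) -> Tuple[bool, Any]:
        for check in guardrails:
            passed, payload = check(text)
            if not passed:
                return (False, payload)  # report the first failing rule
        return (True, text)
    return combined


validate = chain(length_check, keyword_check)
print(validate("Our electric kettle boils a full litre of water in under three minutes."))
print(validate("Our new kettle boils a full litre of water in under three minutes."))
```

Ordering cheap, deterministic checks first means an expensive tone check would only run on outputs that already satisfy the basic rules.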

Hallucination Guardrail

For more fact-based workflows, use CrewAI’s built-in hallucination guardrail. It compares the output with the reference context and flags unsupported claims.

Retry Strategies

Set your retry limits with care. Fewer retries make the workflow stricter; more retries give the agent more room for creativity.

Logging and Observability

CrewAI shows:

- What failed

- Why it failed

- Which try succeeded

This can help you tune your guardrails.

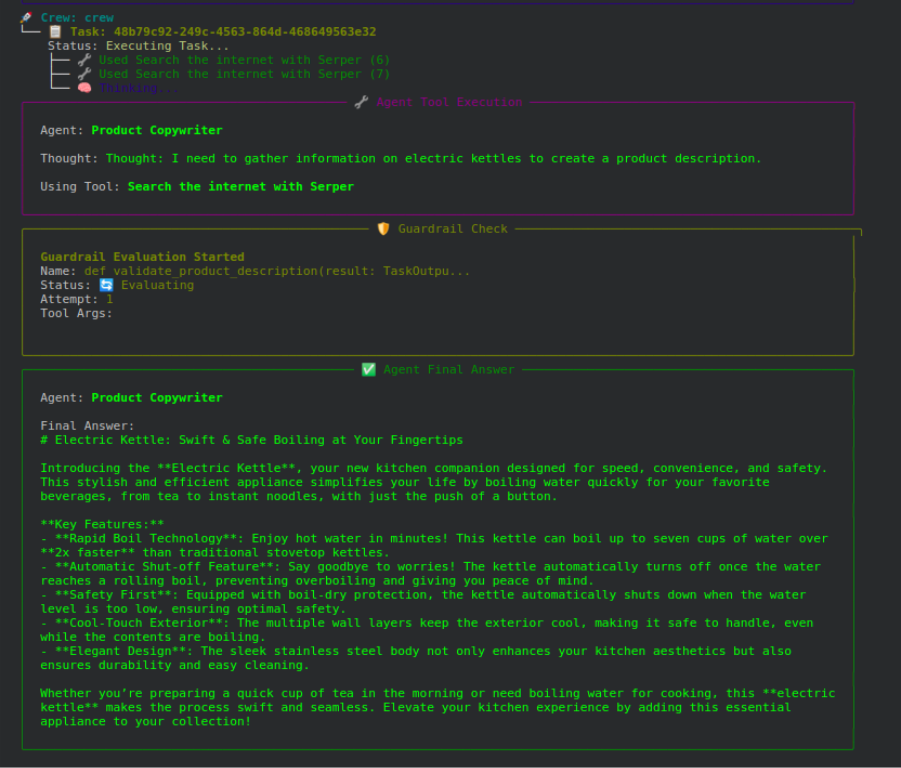

Hands-on Example: Validating a Product Description

In this example, we demonstrate how a guardrail checks a product description before accepting it. The expectations are clear: the description must be at least 150 words long, contain the phrase “electric kettle,” and follow the required format.

Step 1: Set Up and Imports

In this step, we install CrewAI, import the library, and load the API keys. This sets up the environment variables so that the agent can run and connect to the tools it needs.

%pip install -U -q crewai crewai-tools

from crewai import Agent, Task, LLM, Crew, TaskOutput
from crewai_tools import SerperDevTool
from datetime import date
from typing import Tuple, Any
import os, getpass, warnings

warnings.filterwarnings("ignore")

# Prompt for the keys so they are not hard-coded in the notebook
SERPER_API_KEY = getpass.getpass('Enter your SERPER_API_KEY: ')
OPENAI_API_KEY = getpass.getpass('Enter your OPENAI_API_KEY: ')

if SERPER_API_KEY and OPENAI_API_KEY:
    os.environ['SERPER_API_KEY'] = SERPER_API_KEY
    os.environ['OPENAI_API_KEY'] = OPENAI_API_KEY
    print("API keys set successfully!")

Step 2: Define Guardrail Function

Next, you define a function that validates the agent’s output. It checks that the output contains “electric kettle” and counts the total number of words. If it doesn’t find the expected text, or if the output is too short, it returns a failure response. If the description is valid, it returns success.

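def validate_product_description(result: TaskOutput) -> Tuple[bool, Any]:
    # Normalize the raw output so the phrase check is case-insensitive
    text = result.raw.lower().strip()
    word_count = len(text.split())

    if "electric kettle" not in text:
        return (False, "Missing required phrase: 'electric kettle'")

    # Length rule: the 150-word minimum comes from the requirements above;
    # the exact failure message and success payload are assumed.
    if word_count < 150:
        return (False, f"Description too short: {word_count} words (minimum 150)")

    return (True, result.raw.strip())

Step 3: Define Agent and Task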

Finally, you define the agent that will write the description. You give it a role and a goal. Next, you define the task and attach the guardrail to it. The task will retry up to 3 times if the agent’s output fails.

llm = LLM(model="gpt-4o-mini", api_key=OPENAI_API_KEY)

# Agent that writes the product copy
product_writer = Agent(
    role="Product Copywriter",
    goal="Write high-quality product descriptions",
    backstory="An expert marketer skilled in persuasive descriptions.",
    tools=[SerperDevTool()],
    llm=llm,
    verbose=True
)

# Task with the guardrail attached; failed outputs are retried
product_task = Task(
    description="Write a detailed product description for {product_name}.",
    expected_output="A 150+ word description mentioning the product name.",
    agent=product_writer,
    markdown=True,
    guardrail=validate_product_description,
    max_retries=3
)

crew = Crew(
    agents=[product_writer],
    tasks=[product_task],
    verbose=True
)

Step 4: Execute the Workflow

You start the task. The agent writes the product description. The guardrail evaluates it. If the evaluation fails, the agent writes a new description. This continues until the output passes or the maximum number of retries is reached.

results = crew.kickoff(inputs={"product_name": "electric kettle"})
print("\n Final Summary:\n", results)

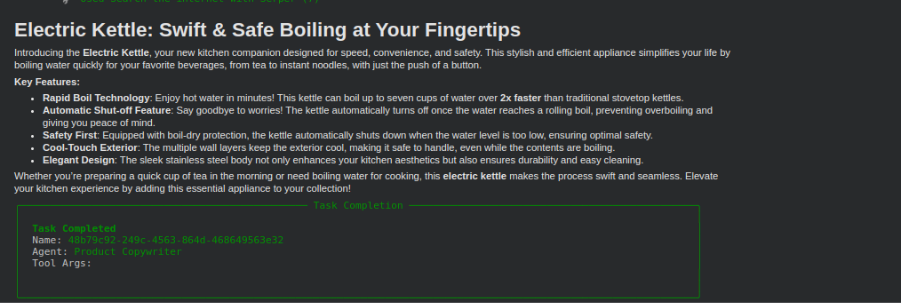

Step 5: Display the Output

Finally, you display the output that passed the guardrail checks. This is the validated product description that meets all requirements.

from IPython.display import display, Markdown

display(Markdown(results.raw))

Some Practical Suggestions

- Be very clear in your expected_output.

- Don’t make guardrails too strict.

- Log reasons for failure.

- Use guardrails early to avoid downstream damage.

- Test edge cases.

Guardrails should protect your workflow, not block it.
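To make the “test edge cases” tip concrete, the sketch below exercises a product-description validator on a few boundary inputs. It is a standalone approximation: `check_description` is a hypothetical helper that takes a plain string rather than a CrewAI `TaskOutput`, and the 150-word threshold follows the hands-on example.

```python
from typing import Tuple

MIN_WORDS = 150


def check_description(text: str) -> Tuple[bool, str]:
    """Standalone version of the example's rules: phrase present, 150+ words."""
    normalized = text.lower().strip()
    if "electric kettle" not in normalized:
        return (False, "Missing required phrase: 'electric kettle'")
    word_count = len(normalized.split())
    if word_count < MIN_WORDS:
        return (False, f"Too short: {word_count} words (minimum {MIN_WORDS})")
    return (True, text.strip())


filler_148 = " ".join(["great"] * 148)  # plus the 2-word phrase = 150 words
filler_147 = " ".join(["great"] * 147)  # plus the 2-word phrase = 149 words

edge_cases = {
    "empty string": "",
    "phrase only": "electric kettle",
    "exactly 150 words": f"Electric kettle {filler_148}",
    "149 words": f"Electric kettle {filler_147}",
    "long but missing phrase": " ".join(["kettle"] * 200),
    "mixed-case phrase": f"ELECTRIC Kettle {filler_148}",
}

for name, text in edge_cases.items():
    passed, detail = check_description(text)
    print(f"{name}: {'pass' if passed else 'fail'}")
```

Boundary values such as exactly 150 and 149 words are where an off-by-one comparison would show up first.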


Conclusion

Task guardrails are one of the most important features in CrewAI. They promote safety, accuracy, and consistency across multi-agent workflows. Because guardrails validate outputs before they move downstream, they are a foundational feature for building AI systems that are both powerful and dependably accurate. Whether you are building an automated writer, an analysis pipeline, or a decision framework, guardrails add a quality layer that helps keep everything in alignment. Ultimately, guardrails make automation smoother, safer, and more predictable from start to finish.

Frequently Asked Questions

A. They keep output consistent, safe, and usable so one bad response doesn’t break the entire workflow.

A. Function guardrails check strict rules like length or keywords, while LLM guardrails handle tone, style, and subjective quality.

A. CrewAI can automatically regenerate the output up to your set limit until it meets the rules or exhausts retries.
