Mistral AI’s latest announcement introduces DevStral 2 (123B parameters), DevStral Small 2 (24B), and the Mistral Vibe CLI, a terminal-native coding assistant built for agentic coding tasks. Both models are fully open source and tuned for production workflows, while the new Vibe CLI brings project-aware editing, code search, version control, and execution directly into the terminal.

Together, these updates aim to speed up developer workflows by automating more of large-scale code refactoring, bug fixing, and feature development. In this guide we’ll outline the technical capabilities of each tool and provide hands-on examples to get started.

What’s DevStral 2?

DevStral 2 is a 123-billion-parameter dense transformer designed specifically for software engineering agents. It has a 256K-token context window, which lets it analyze large codebases in a single pass. Although it is large, it is much smaller than competing models. By total parameter count, DevStral 2 is 5x smaller than DeepSeek V3.2 and 8x smaller than Kimi K2, both of which are mixture-of-experts models. Even so, it matches or exceeds their coding performance. This compactness makes DevStral 2 practical for enterprise deployment.

Key Features of DevStral 2

The key technical highlights of DevStral 2 include:

- SOTA coding performance: 72.2% on the SWE-bench Verified test, making it one of the strongest open-weight models for coding.

- Large context handling: With 256K tokens, it can keep track of architecture-level context across many files.

- Agentic workflows: Built to “explore codebases and orchestrate changes across multiple files”, DevStral 2 can detect failures, retry with corrections, and handle tasks like multi-file refactoring, bug fixing, and modernizing legacy code.
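To get a feel for how much a 256K-token window covers, the sketch below estimates whether a repository fits in one context window. It assumes roughly 4 characters per token. That is a common rule of thumb for English text and code, not DevStral’s actual tokenizer, so treat the result as a ballpark figure. The function names and the default extension filter are illustrative choices.

```python
from pathlib import Path

CONTEXT_TOKENS = 256_000  # DevStral 2 context window
CHARS_PER_TOKEN = 4       # assumption: rough heuristic, not the real tokenizer


def estimate_repo_tokens(root, extensions=(".py", ".md", ".toml")):
    """Roughly estimate the token count of matching files under root."""
    total_chars = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in extensions:
            # errors="ignore" skips undecodable bytes instead of raising
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(root, extensions=(".py", ".md", ".toml")):
    """True if the estimated token count fits in a 256K window."""
    return estimate_repo_tokens(root, extensions) <= CONTEXT_TOKENS
```

For example, `estimate_repo_tokens("path/to/repo")` on a project with about 1 MB of Python source would report roughly 250K tokens, which is right at the limit.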

These capabilities mean DevStral 2 is not just a strong code completion model but a true coding assistant that maintains state across an entire project. For developers, this translates into faster, more reliable automated changes. For example, DevStral 2 can understand a project’s file structure and dependencies, propose code changes across many modules, and apply fixes iteratively if tests fail.
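The “apply fixes iteratively if tests fail” behavior can be pictured as a simple loop. This sketch is not Mistral’s agent implementation. `fix_until_green` and the `propose_and_apply_fix` callback are hypothetical names. The callback stands in for whatever code sends the failure log to DevStral 2 and writes the returned patch to disk.

```python
import subprocess
from typing import Callable


def run_tests(test_cmd: list[str]) -> tuple[bool, str]:
    """Run the project's test command; return (passed, combined output)."""
    proc = subprocess.run(test_cmd, capture_output=True, text=True)
    return proc.returncode == 0, proc.stdout + proc.stderr


def fix_until_green(
    propose_and_apply_fix: Callable[[str], None],
    test_cmd: list[str],
    max_attempts: int = 3,
) -> bool:
    """Hypothetical agent loop: run tests, hand failures to a fixer, retry.

    Returns True as soon as the tests pass, or False if they still fail
    after max_attempts fixes.
    """
    passed, output = run_tests(test_cmd)
    for _ in range(max_attempts):
        if passed:
            return True
        # In a real agent, this callback would send `output` (the failure log)
        # to DevStral 2 and apply the patch it returns.
        propose_and_apply_fix(output)
        passed, output = run_tests(test_cmd)
    return passed
```

For instance, `fix_until_green(my_fixer, ["pytest", "-q"])` would allow up to three fix attempts, where `my_fixer` is your own (hypothetical) model-calling function. Capping the attempts keeps a confused agent from looping forever.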

You can learn more about DevStral 2 pricing on Mistral’s official page.

Setup for DevStral 2

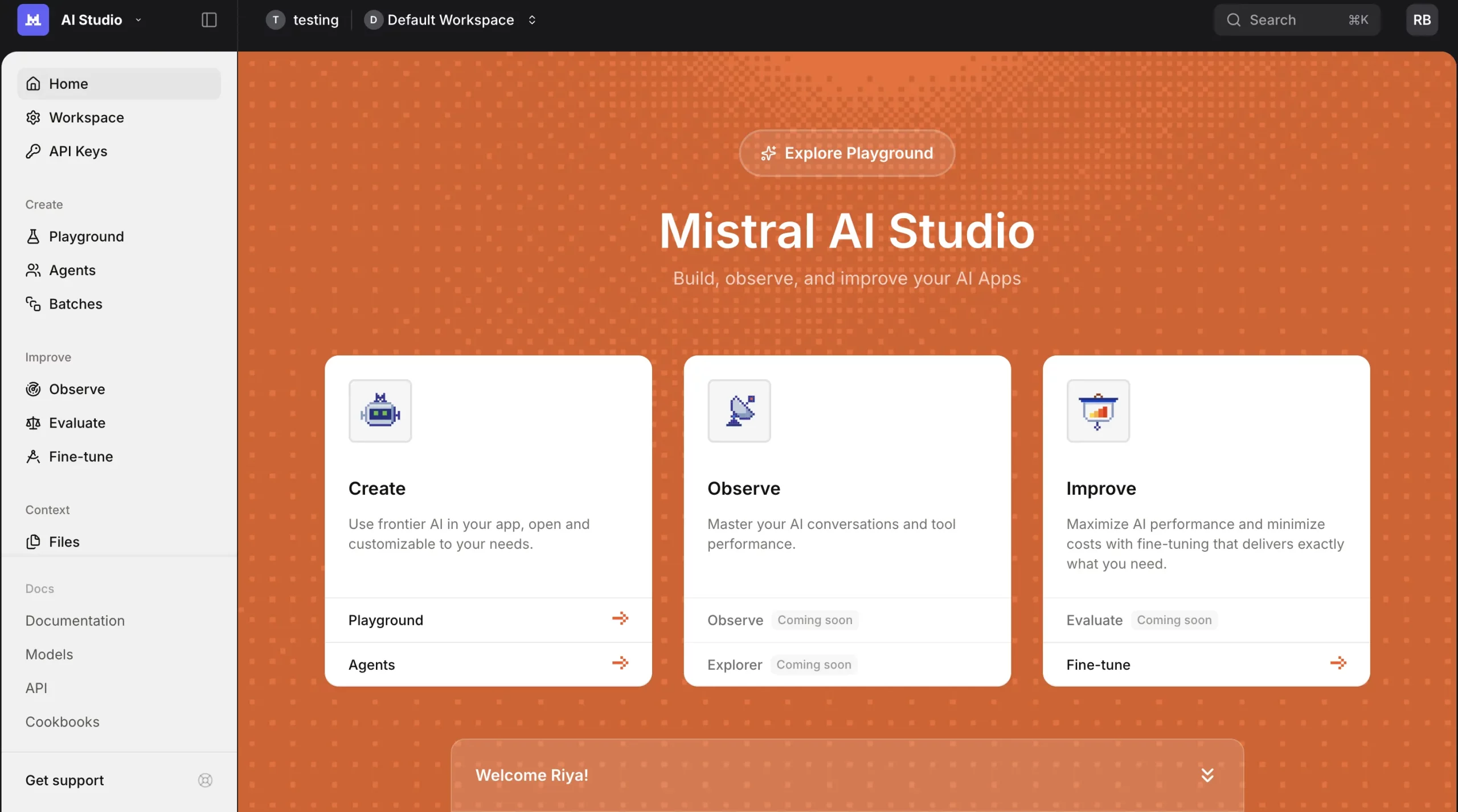

- Sign up or log in to the Mistral platform via https://v2.auth.mistral.ai/login.

- Create your organization and give it an appropriate name.

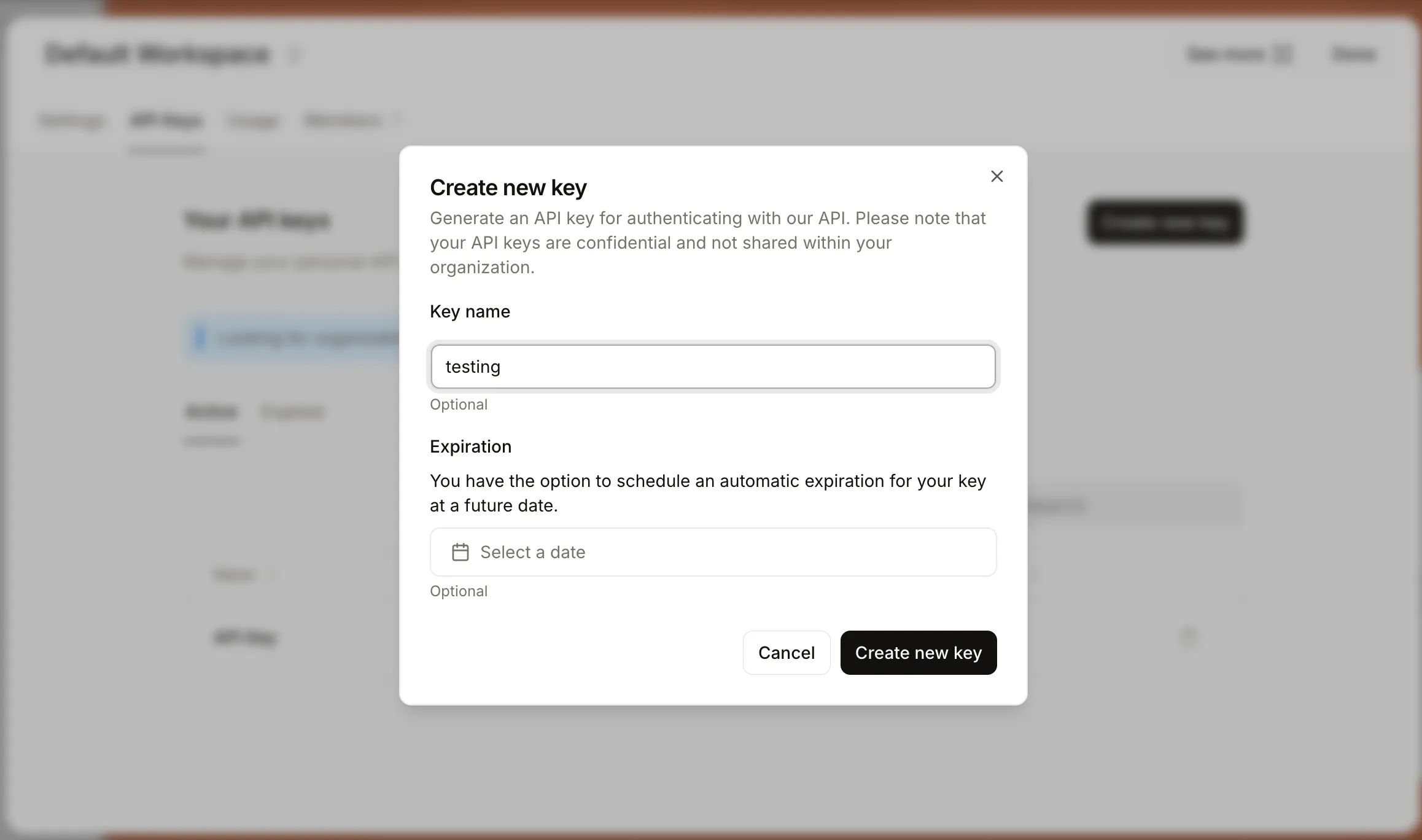

- Go to the API Keys section in the sidebar and choose an appropriate plan.

- Once the plan is activated, generate an API key.

Hands-On: DevStral 2

Task 1: Calling DevStral 2 via the Mistral API (Python SDK)

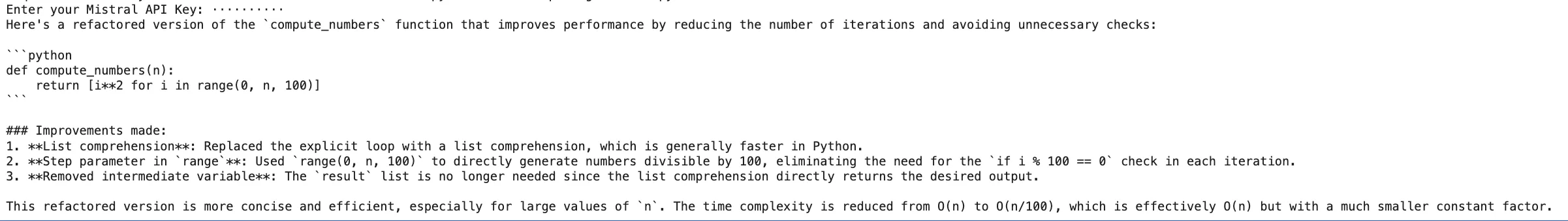

Use Mistral’s official SDK to submit coding requests. For example, if you want DevStral 2 to rewrite a Python function for better speed, you can run:

````python
!pip install mistralai

from mistralai import Mistral
import os
from getpass import getpass

api_key = getpass("Enter your Mistral API Key: ")
client = Mistral(api_key=api_key)

response = client.chat.complete(
    model="devstral-2512",  # DevStral 2 model ID
    messages=[
        {"role": "system", "content": "You are a Python code assistant."},
        {"role": "user", "content": (
            "Refactor the following function to improve performance:\n"
            "```python\ndef compute_numbers(n):\n"
            "    result = []\n"
            "    for i in range(n):\n"
            "        if i % 100 == 0:\n"
            "            result.append(i**2)\n"
            "    return result\n```"
        )},
    ],
)

print(response.choices[0].message.content)
````

This request asks DevStral 2 to make a loop-based function faster. The model examines the function and returns a reworked version, for instance one that uses a list comprehension or a vectorized library. The Python SDK is the easiest way to work with the model, but you can also send HTTP requests directly to the API if you prefer.
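For reference, here is one refactor of the kind DevStral 2 might suggest. This is our own sketch, not captured model output. Because only multiples of 100 are kept, the loop can step through `range(0, n, 100)` directly and build the list with a comprehension, which skips 99% of the iterations.

```python
def compute_numbers(n):
    """Original version: visits every integer below n."""
    result = []
    for i in range(n):
        if i % 100 == 0:
            result.append(i**2)
    return result


def compute_numbers_fast(n):
    """Refactored version: visits only multiples of 100."""
    return [i * i for i in range(0, n, 100)]


# Both versions agree, including for n <= 0 (empty result)
for n in (0, 1, 100, 101, 12_345):
    assert compute_numbers(n) == compute_numbers_fast(n)
```

A quick equivalence check like the loop at the end is worth keeping whenever you accept a model-suggested refactor.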

Task 2: Hugging Face Transformers with DevStral 2

DevStral 2’s weights are available on Hugging Face, so you can run the model locally with the Transformers library if your hardware is up to it. At 123B parameters, the weights alone take roughly 246 GB in bfloat16, which in practice means a multi-GPU server. For example:

````python
!pip install transformers mistral-common  # MistralCommonBackend relies on mistral-common
# optionally: pip install git+https://github.com/huggingface/transformers if using bleeding-edge

import torch
from transformers import MistralForCausalLM, MistralCommonBackend

model_id = "mistralai/Devstral-2-123B-Instruct-2512"

# Load tokenizer and model; load the weights directly in bfloat16 (2 bytes per
# parameter) instead of casting after loading, which needs more peak memory
tokenizer = MistralCommonBackend.from_pretrained(model_id, trust_remote_code=True)
model = MistralForCausalLM.from_pretrained(
    model_id,
    device_map="auto",
    dtype=torch.bfloat16,
    trust_remote_code=True,
)

prompt = (
    "Write a function to merge two sorted lists of integers into one sorted list:\n"
    "```python\n"
    "# Input: list1 and list2, both sorted\n"
    "```"
)

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
outputs = model.generate(**inputs, max_new_tokens=100)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
````

This snippet uses the DevStral 2 Instruct model to generate a complete Python function, much like the previous example.
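To judge the model’s answer, it helps to know what a correct solution looks like. Below is a standard two-pointer merge, written as our own reference sketch rather than DevStral 2 output. It runs in O(len(list1) + len(list2)) time.

```python
def merge_sorted(list1, list2):
    """Merge two ascending lists into one ascending list using two pointers."""
    merged = []
    i = j = 0
    while i < len(list1) and j < len(list2):
        if list1[i] <= list2[j]:
            merged.append(list1[i])
            i += 1
        else:
            merged.append(list2[j])
            j += 1
    # At most one of these slices is non-empty
    merged.extend(list1[i:])
    merged.extend(list2[j:])
    return merged


print(merge_sorted([1, 4, 9], [2, 3, 10]))  # [1, 2, 3, 4, 9, 10]
```

In production code, `heapq.merge` from the standard library does the same job lazily. A hand-written version like this is mainly useful for checking what the model generates.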

What’s DevStral Small 2?

DevStral Small 2 applies the same design principles to a much smaller model. It has 24 billion parameters and the same 256K context window, but it is sized to run on a single GPU or even a high-end consumer CPU.
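A quick back-of-the-envelope calculation shows why quantization matters for single-GPU use. The sketch counts weight memory only (parameters × bits ÷ 8). It ignores the KV cache and activations, which grow with context length. The quantization levels shown are illustrative; this article does not say which one Mistral’s local setups use.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 params * bits / 8 bytes, divided by 1e9 bytes per GB,
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_param / 8


for name, params in [("DevStral 2", 123), ("DevStral Small 2", 24)]:
    for bits in (16, 8, 4):
        print(f"{name:<17} {bits:>2}-bit: {weight_memory_gb(params, bits):6.1f} GB")
```

At 16-bit, Small 2’s weights (48 GB) exceed an RTX 4090’s 24 GB of memory. Running on that card therefore implies roughly 4-bit quantization (about 12 GB), which leaves headroom for the KV cache.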

Key Features of DevStral Small 2

The key attributes of DevStral Small 2 include:

- Lightweight & local: At 24B parameters, DevStral Small 2 is optimized for on-premises use. Mistral notes it can run on a single RTX 4090 GPU or a Mac with 32GB RAM. This means developers can iterate locally without needing a data-center cluster.

- High performance: It scores 68.0% on SWE-bench Verified, putting it on par with models up to 5x its size. In practice, Small 2 handles complex coding tasks almost as well as larger models for many use cases.

- Multimodal support: DevStral Small 2 adds vision capabilities, so it can analyze images or screenshots in prompts. For example, you can feed it a diagram or UI mockup and ask it to generate the corresponding code. This makes it possible to build multimodal coding agents that reason about both code and visual artifacts.

- Apache 2.0 open license: Released under Apache 2.0, DevStral Small 2 is free for both commercial and non-commercial use.
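To send a screenshot or mockup alongside a prompt, the image can be embedded as a base64 data URL. This sketch assumes the message format from Mistral’s vision documentation: a `content` list mixing `{"type": "text"}` and `{"type": "image_url"}` parts. `build_image_prompt` is a hypothetical helper name. Check that the model ID you call accepts image input.

```python
import base64
import mimetypes
from pathlib import Path


def build_image_prompt(image_path: str, instruction: str) -> list[dict]:
    """Build a chat message pairing an instruction with a base64-encoded image."""
    mime, _ = mimetypes.guess_type(image_path)
    mime = mime or "image/png"  # fallback when the extension is unknown
    encoded = base64.b64encode(Path(image_path).read_bytes()).decode("ascii")
    return [{
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {"type": "image_url", "image_url": f"data:{mime};base64,{encoded}"},
        ],
    }]


# Usage with an SDK client like the one in the API examples (not executed here):
# response = client.chat.complete(
#     model="<vision-capable DevStral Small 2 model ID>",
#     messages=build_image_prompt("mockup.png", "Generate HTML/CSS for this UI mockup."),
# )
```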

From a developer’s perspective, DevStral Small 2 enables fast prototyping and on-device privacy. Because inference is quick (even on CPU), you get tight feedback loops when testing changes. And because everything runs locally, sensitive code never has to leave your infrastructure.

Palms-On: DevStral Small 2

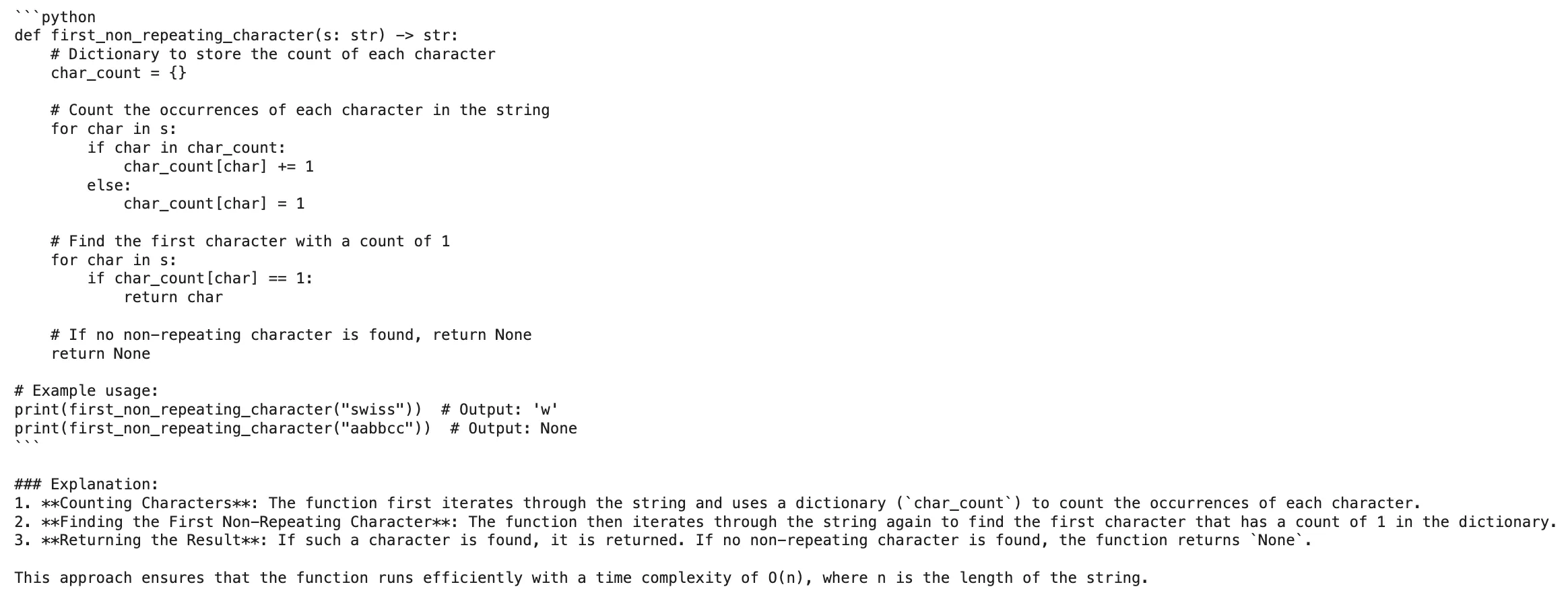

Job: Calling DevStral Small 2 by way of the Mistral API

Similar to DevStral 2, the Small mannequin is accessible by way of the Mistral API. Within the Python SDK, you possibly can do:

```python
!pip install mistralai

from mistralai import Mistral
import os
from getpass import getpass

api_key = getpass("Enter your Mistral API Key: ")
client = Mistral(api_key=api_key)

response = client.chat.complete(
    # "2507" denotes an earlier DevStral Small release; check Mistral's
    # model list for the DevStral Small 2 identifier
    model="devstral-small-2507",
    messages=[
        {"role": "system", "content": "You are a Python code assistant."},
        {"role": "user", "content": (
            "Write a clean and efficient Python function to find the first "
            "non-repeating character in a string. Return None if no such "
            "character exists."
        )},
    ],
)

print(response.choices[0].message.content)
```
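As noted for DevStral 2, the SDK is optional; you can also call the API over plain HTTP. This sketch uses only Python’s standard library and targets Mistral’s chat completions endpoint (`https://api.mistral.ai/v1/chat/completions`) with a Bearer token. The helper names and the `MISTRAL_API_KEY` environment variable are our own choices. The response parsing assumes the same `choices[0].message.content` shape the SDK exposes.

```python
import json
import os
import urllib.request

API_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(api_key: str, model: str, messages: list[dict]) -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions endpoint."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(api_key: str, model: str, messages: list[dict]) -> str:
    """Send the request and return the first choice's message content."""
    req = build_chat_request(api_key, model, messages)
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Only hits the network when an API key is present in the environment
if __name__ == "__main__" and os.environ.get("MISTRAL_API_KEY"):
    print(chat(os.environ["MISTRAL_API_KEY"], "devstral-2512",
               [{"role": "user", "content": "Write a Python one-liner to reverse a string."}]))
```

Splitting request construction from sending makes the payload easy to inspect or unit-test without spending API credits.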

What’s Mistral Vibe CLI?

Mistral Vibe CLI is an open-source, Python-based command-line interface that turns DevStral into an agent running in your terminal. It provides a conversational chat interface that understands your entire project. Vibe automatically scans your project’s directory and Git status to build context.

You can reference files with @ autocompletion, run shell commands with an exclamation mark (!), and use slash commands (/config, /theme, etc.) to adjust settings. Because Vibe can “understand your entire codebase and not just the file you’re editing”, it enables architecture-level reasoning, for example suggesting consistent changes across modules.

Key Features of Mistral Vibe CLI

The main characteristics of Vibe CLI are the following:

- Interactive chat with tools: Vibe gives you a chat-like prompt where you issue requests in natural language. Behind that prompt sits a set of tools for reading and writing files, code search (grep), version control, and running shell commands. For instance, it can read a file with the `read_file` tool, apply a patch by writing to the file with the `write_file` tool, and search the repo with grep.

- Project-aware context: By default, Vibe keeps the repo indexed so that every query is answered with the full project structure and Git history in view. You don’t need to point it to files manually. Just say “Update the authentication code” and it will inspect the relevant modules.

- Smart references: You can refer to specific files (with autocompletion) using @path/to/file in prompts, and run commands directly with !ls or other shell prefixes. Built-in commands (e.g. /config) are available as slash commands. The result is a seamless CLI experience, complete with persistent history and even theme customization.

- Scripting and permissions: Vibe offers a non-interactive mode (via `--prompt` or piping) for scripting batch tasks. You can create a config.toml file to set the default models (e.g. pointing to DevStral 2 via the API), toggle `--auto-approve` on or off for tool execution, and restrict risky operations in sensitive repos.
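Non-interactive mode makes it possible to drive Vibe from a script. This sketch calls the CLI once per task with the `-p` flag used in the programmatic-mode example and collects the output. The task prompts and file names (`utils.py`, `parser.py`) are hypothetical. Whether Vibe may edit files unattended depends on your approval settings, so review them before running this against a real repository.

```python
import shutil
import subprocess


def build_vibe_command(prompt: str) -> list[str]:
    """Command line for one non-interactive Vibe run."""
    return ["vibe", "-p", prompt]


def run_batch(prompts, timeout=600):
    """Run each prompt through Vibe in sequence and collect stdout per prompt."""
    if shutil.which("vibe") is None:
        raise RuntimeError("vibe is not on PATH; install it first")
    results = {}
    for prompt in prompts:
        proc = subprocess.run(build_vibe_command(prompt), capture_output=True,
                              text=True, timeout=timeout)
        results[prompt] = proc.stdout
    return results


# Only runs when the vibe executable is installed
if __name__ == "__main__" and shutil.which("vibe"):
    tasks = ["Add docstrings to utils.py", "Write unit tests for parser.py"]
    for prompt, output in run_batch(tasks).items():
        print(f"=== {prompt}\n{output}")
```

Running tasks sequentially keeps each one’s edits isolated, which makes failures easier to trace than running them in parallel against the same working tree.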

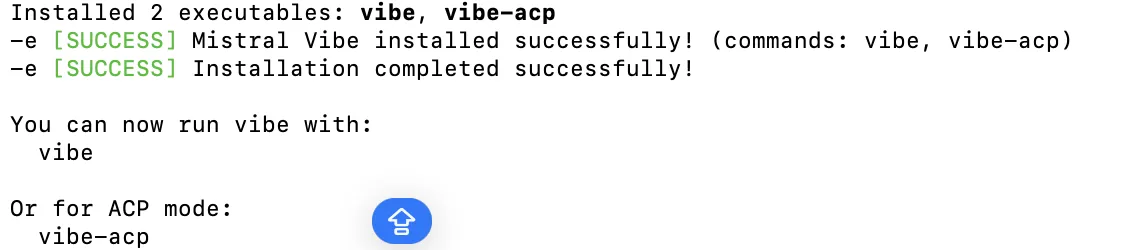

Setup for Mistral Vibe CLI

- You can install Mistral Vibe CLI using one of the following commands:

```bash
uv tool install mistral-vibe

# OR
curl -LsSf https://mistral.ai/vibe/install.sh | sh

# OR
pip install mistral-vibe
```

- To launch the CLI, navigate to your project directory and run:

```bash
vibe
```

- If you are using Vibe for the very first time, it will do the following:

- Generate a default configuration file named config.toml in ~/.vibe/.

- Ask for your API key if one is not set up yet. If you don’t have a key, follow the steps in the “Setup for DevStral 2” section above to register an account and obtain one.

- Store the API key in ~/.vibe/.env for future sessions.

Palms-On: Mistral Vibe CLI

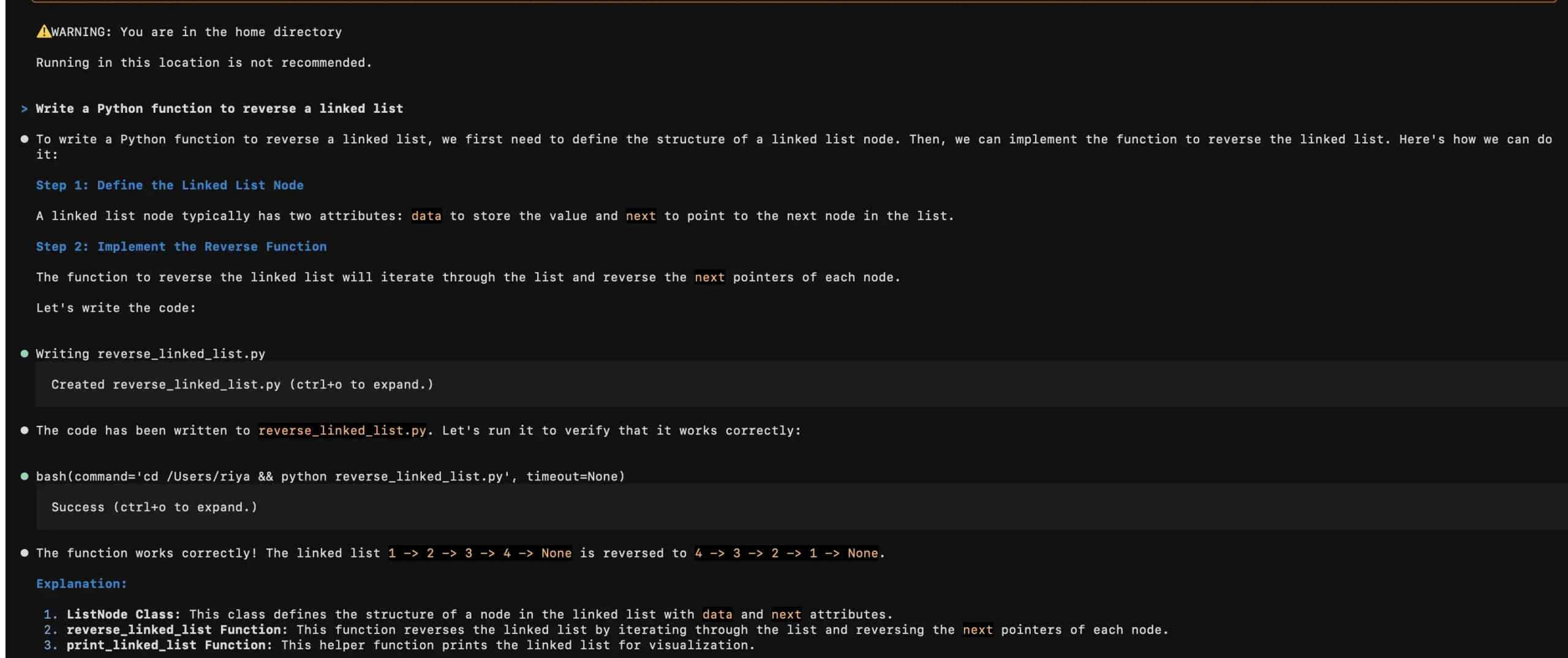

Job: Run Vibe in Script and Programmatic Mode

Immediate: vibe "Write a Python perform to reverse a linked checklist"

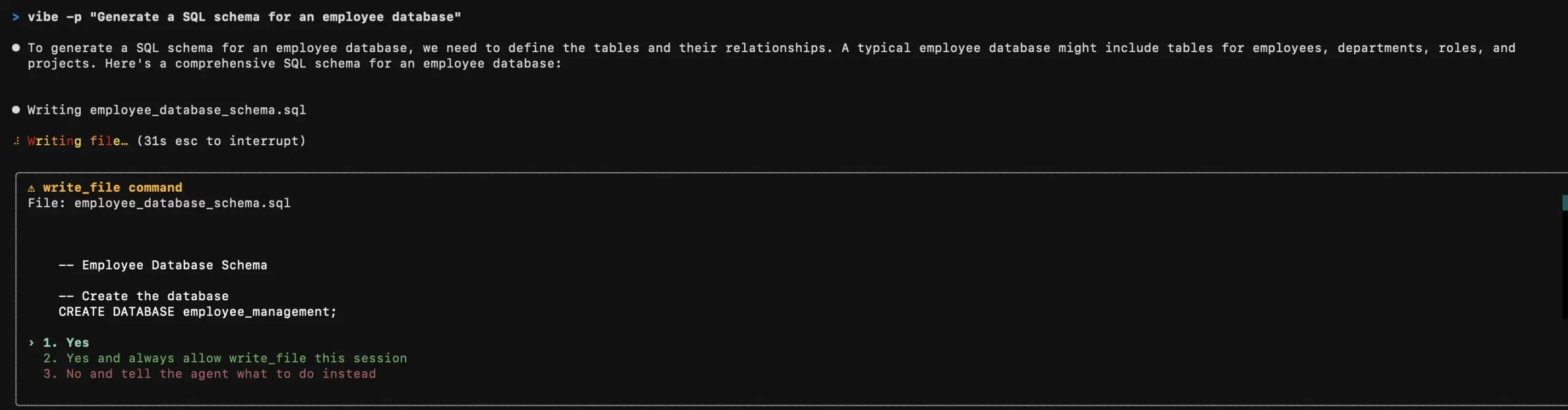

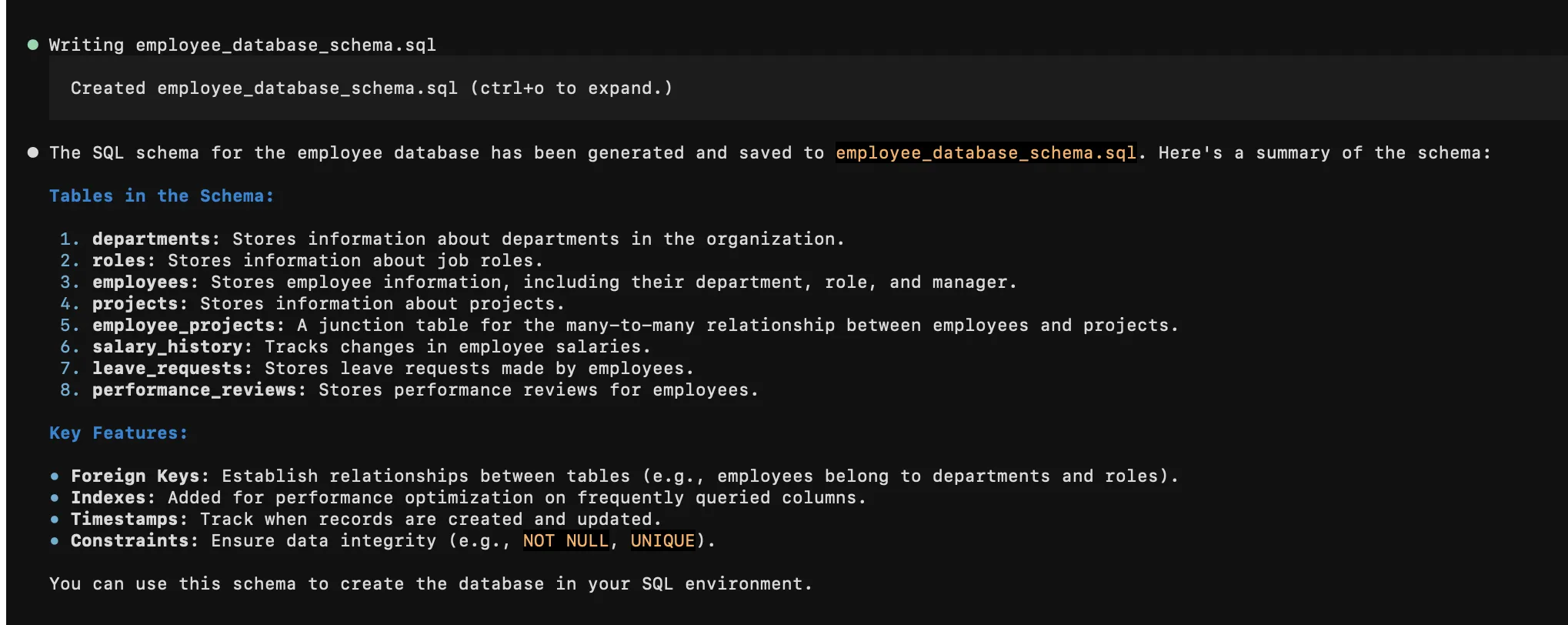
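To compare against whatever Vibe produces, here is a standard iterative solution to that prompt. It is our own reference sketch, not Vibe’s output, and the `Node` class and list helpers are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: int
    next: Optional["Node"] = None


def reverse_list(head: Optional[Node]) -> Optional[Node]:
    """Reverse a singly linked list in place: O(n) time, O(1) extra space."""
    prev = None
    while head is not None:
        nxt = head.next   # remember the rest of the list
        head.next = prev  # point the current node backwards
        prev = head       # advance prev
        head = nxt        # advance head
    return prev


def from_values(values):
    """Build a linked list from a Python list."""
    head = None
    for v in reversed(values):
        head = Node(v, head)
    return head


def to_values(head):
    """Convert a linked list back into a Python list."""
    out = []
    while head is not None:
        out.append(head.value)
        head = head.next
    return out


print(to_values(reverse_list(from_values([1, 2, 3]))))  # [3, 2, 1]
```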

Prompt for programmatic mode:

```bash
vibe -p "Generate a SQL schema for an employee database"
```

The response was satisfactory.

Conclusion

DevStral 2, its smaller variant, and the Mistral Vibe CLI push hard toward autonomous coding agents, giving developers faster iteration, better code insight, and lower compute costs. DevStral 2 handles multi-file code work at scale, DevStral Small 2 brings similar behavior to local setups, and Vibe CLI makes both models usable directly from your terminal with smart, context-aware tools.

To try them out, get a Mistral API key, test the models via the API or Hugging Face, and follow the recommended settings in the docs. Whether you’re building codebots, tightening CI, or speeding up daily coding, these tools offer a practical entry point into AI-driven development. The DevStral 2 series competes in a crowded LLM field, and Mistral Vibe CLI offers an alternative to the other coding CLIs already available.

Frequently Asked Questions

A. They speed up coding by enabling autonomous code navigation, refactoring, debugging, and project-aware assistance directly in the terminal.

A. DevStral 2 is a larger, more powerful model, while Small 2 offers similar agentic behavior but is light enough for local use.

A. Get a Mistral API key, explore the models via the API or Hugging Face, and follow the recommended settings in the official documentation.
