When it comes to open-source AI models, DeepSeek is one of the first names that comes to mind. Known as a community-first platform, the team has consistently taken user feedback seriously and turned it into actionable improvements. That’s why each new DeepSeek release feels less like an incremental upgrade and more like a reflection of what the community actually needs. Their latest release, DeepSeek-V3.1-Terminus, is no exception. Positioned as their most refined model yet, it pushes the boundaries of agentic AI while directly addressing key gaps users pointed out in earlier versions.

What Is DeepSeek-V3.1-Terminus?

DeepSeek-V3.1-Terminus is an updated iteration of the company’s hybrid reasoning model, DeepSeek-V3.1. The previous version was a big step forward, but Terminus aims to deliver a more stable, reliable, and consistent experience. The name “Terminus” signals that this release is the culmination of the “V3” series: a definitive, final version until a new architecture, V4, arrives. The model has 671 billion parameters in total (with 37 billion activated per token) and continues as a powerful, efficient hybrid Mixture of Experts (MoE) model.
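To make the “671 billion total, 37 billion active” distinction concrete, here is a toy sketch of top-k expert routing in plain Python. It is not DeepSeek’s router: their published V3 design uses far more experts, shared experts, and its own gating function. The 8 experts, k=2, and the scaled-identity “experts” below are illustrative assumptions. The point is only that each token is processed by a small subset of experts, so most weights sit idle for any given token.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, k):
    """Toy top-k routing: keep the k experts with the highest router scores
    and renormalize their gate weights so they sum to 1."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, router_logits, k=2):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    out = 0.0
    for idx, weight in route_token(router_logits, k):
        out += weight * experts[idx](x)
    return out

# Hypothetical setup: 8 tiny "experts", each just a scaled identity function.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]

print(route_token(logits, k=2))  # experts 4 and 1 are selected
print(moe_forward(1.0, experts, logits, k=2))

# Share of parameters active per token for the figures quoted above
print(f"{37 / 671:.1%} of parameters active per token")  # 5.5% of parameters active per token
```

Real MoE layers route every token independently at every layer, so different tokens use different experts even though each one only touches a few.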

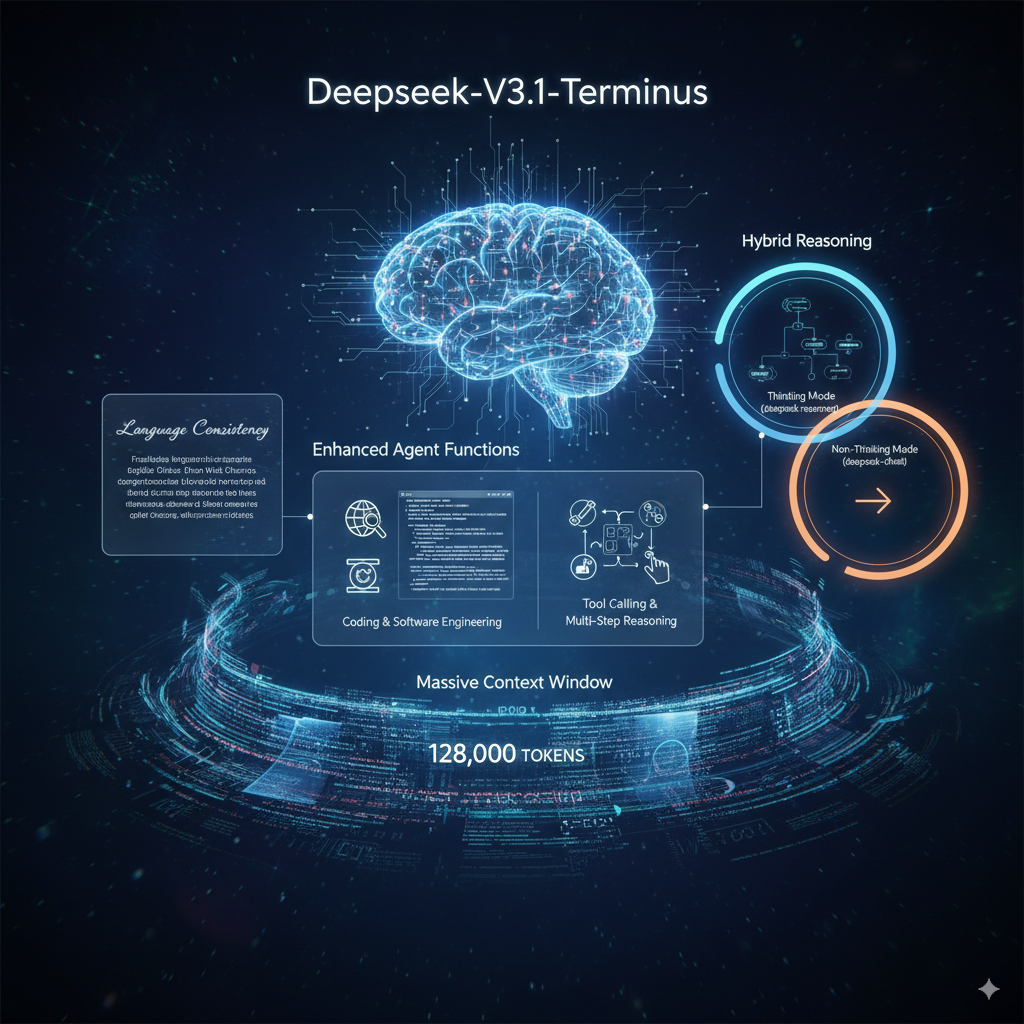

Key Features of DeepSeek-V3.1-Terminus

Terminus builds on V3.1’s key strengths and amplifies them, especially in areas that matter for real-world use. Below is a summary of its features:

- Better Language Consistency: One of the main pain points in the previous version was the occasional mixing of Chinese and English, along with odd characters in the output. Terminus aims to produce cleaner, more consistent language output, which is a big win for anyone building multilingual applications.

- Enhanced Agent Functionality: This is where Terminus takes the spotlight. The model’s Code Agent and Search Agent capabilities have been greatly improved. As a result, it is far more reliable at tasks such as:

  - Live web browsing and location-specific information retrieval.

  - Structured coding and software engineering.

  - Tool calling and multi-step reasoning when external tools are required.

- Hybrid Reasoning: Terminus keeps the dual-mode functionality of its predecessor.

  - Thinking Mode (deepseek-reasoner): For complex, multi-step problems, the model works through a chain-of-thought process before giving a final answer. Believe it or not, Thinking Mode also handles such tasks with little to no preprocessing of your prompt.

  - Non-Thinking Mode (deepseek-chat): For simple tasks, it quickly distills the answer and responds directly.

- Large Context Window: The model supports a massive 128,000-token context window, which lets it handle lengthy documents and large codebases in a single pass.
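Before pasting a long document or codebase into a request, a rough size check against the 128,000-token window can save a failed call. This sketch uses the common “about 4 characters per token” rule of thumb for English text, which is an assumption and not DeepSeek’s tokenizer; it also assumes the prompt and the requested output share the same window. Use the model’s real tokenizer when you need exact counts.

```python
import math

CONTEXT_WINDOW = 128_000  # tokens, as quoted for DeepSeek-V3.1-Terminus

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count from a characters-per-token heuristic.

    ~4 chars/token is a rule of thumb for English prose; it is NOT the
    model's tokenizer, and code or non-English text can differ a lot.
    """
    return math.ceil(len(text) / chars_per_token)

def fits_in_context(text: str, max_output_tokens: int, window: int = CONTEXT_WINDOW) -> bool:
    """Assumes prompt tokens plus requested output tokens must fit in one window."""
    return estimate_tokens(text) + max_output_tokens <= window

doc = "word " * 50_000  # a hypothetical ~250,000-character document
print(estimate_tokens(doc), fits_in_context(doc, max_output_tokens=8_000))  # 62500 True
```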

| Feature | DeepSeek-V3.1-Terminus (Non-Thinking Mode) | DeepSeek-V3.1-Terminus (Thinking Mode) |
|---|---|---|
| JSON Output | ✓ | ✓ |
| Function Calling | ✓ | ✗(1) |
| Chat Prefix Completion (Beta) | ✓ | ✓ |
| FIM Completion (Beta) | ✓ | ✗ |
| Max Output | Default: 4K, Maximum: 8K | Default: 32K, Maximum: 64K |
| Context Length | 128K | 128K |
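The capability table translates directly into a pre-flight check you can run before sending a request. This is a sketch built only from the table: the feature keys are names chosen for illustration, and reading “4K” as 4 × 1,024 tokens is an assumption, so confirm exact limits in DeepSeek’s API documentation if you are near the edge.

```python
K = 1024  # assumption: "4K", "8K", ... in the table mean multiples of 1,024 tokens

# Per-mode capabilities transcribed from the table above (key names are illustrative).
CAPABILITIES = {
    "deepseek-chat": {  # Non-Thinking mode
        "json_output": True, "function_calling": True,
        "chat_prefix_completion": True, "fim_completion": True,
        "default_output": 4 * K, "max_output": 8 * K,
    },
    "deepseek-reasoner": {  # Thinking mode
        "json_output": True, "function_calling": False,
        "chat_prefix_completion": True, "fim_completion": False,
        "default_output": 32 * K, "max_output": 64 * K,
    },
}

def check_request(model, max_tokens=None, features=()):
    """Return a list of problems with a planned request (an empty list means OK)."""
    caps = CAPABILITIES[model]
    problems = [f"{f} not supported by {model}" for f in features if not caps[f]]
    if max_tokens is not None and max_tokens > caps["max_output"]:
        problems.append(f"max_tokens {max_tokens} exceeds {caps['max_output']}")
    return problems

print(check_request("deepseek-reasoner", max_tokens=40_000, features=["function_calling"]))
# ['function_calling not supported by deepseek-reasoner']
```

A tool-using agent would therefore route its tool-calling turns to `deepseek-chat`, reserving `deepseek-reasoner` for long, reasoning-heavy steps.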

Getting Started with DeepSeek-V3.1-Terminus

DeepSeek has made the model available through several channels, reaching a wide range of users, from hobbyists to enterprise developers.

- Web and App: The easiest way to try Terminus is directly through DeepSeek’s official web platform or mobile app, which offers an intuitive interface for quick, no-setup use.

- API: For developers, the DeepSeek API is a powerful option. The API is OpenAI-compatible, so you can use the familiar OpenAI SDK or any third-party software that works with the OpenAI API. All you need to change is the base URL and your API key. Pricing is competitive, with output tokens much cheaper than many premium models.
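Because the API is OpenAI-compatible, a chat request is just an HTTPS POST, and the two reasoning modes are selected by model name. The sketch below builds such a request with Python’s standard library only, so it runs without the OpenAI SDK. Assumptions, based on DeepSeek’s public docs at the time of writing: the base URL `https://api.deepseek.com`, the `/chat/completions` path, and Thinking mode returning its chain of thought in a `reasoning_content` field. The `DEEPSEEK_API_KEY` variable name is our own convention. Nothing is sent until you pass the request to `urllib.request.urlopen`.

```python
import json
import os
import urllib.request

BASE_URL = "https://api.deepseek.com"  # assumption: DeepSeek's documented base URL
MODELS = {"non-thinking": "deepseek-chat", "thinking": "deepseek-reasoner"}

def build_chat_request(prompt, mode="non-thinking", api_key=None, max_tokens=None):
    """Build (but do not send) an OpenAI-style chat completion request."""
    api_key = api_key or os.environ.get("DEEPSEEK_API_KEY", "")  # hypothetical env var name
    body = {
        "model": MODELS[mode],
        "messages": [{"role": "user", "content": prompt}],
    }
    if max_tokens is not None:
        body["max_tokens"] = max_tokens
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

def split_reasoning(message):
    """Return (reasoning, answer) from a response message dict.

    Assumption: Thinking mode puts its chain of thought in "reasoning_content";
    Non-Thinking responses have no such field, so reasoning comes back empty.
    """
    return message.get("reasoning_content") or "", message.get("content") or ""

req = build_chat_request("Explain MoE routing in one sentence.", mode="thinking", api_key="sk-your-key")
# Sending it requires a real key and network access:
# with urllib.request.urlopen(req) as resp:
#     message = json.load(resp)["choices"][0]["message"]
#     reasoning, answer = split_reasoning(message)
```

With the OpenAI SDK, the equivalent switch is passing `base_url` when you create the client; the request body stays the same.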

| Price (USD) | DeepSeek-V3.1-Terminus (Non-Thinking Mode) | DeepSeek-V3.1-Terminus (Thinking Mode) |
|---|---|---|
| 1M input tokens (cache hit) | $0.07 | $0.07 |
| 1M input tokens (cache miss) | $0.56 | $0.56 |
| 1M output tokens | $1.68 | $1.68 |
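Since prices are quoted per million tokens, estimating a workload’s cost is simple arithmetic. Note that a cache hit costs one-eighth of a cache miss here ($0.07 vs $0.56), so reusing long, stable prompt prefixes noticeably lowers input cost. The prices below are copied from the table and may change; the token counts in the example are hypothetical.

```python
# USD per 1M tokens, copied from the pricing table above (identical for both modes).
PRICE_PER_MILLION = {"input_cache_hit": 0.07, "input_cache_miss": 0.56, "output": 1.68}

def estimate_cost_usd(cache_hit_tokens: int, cache_miss_tokens: int, output_tokens: int,
                      prices: dict = PRICE_PER_MILLION) -> float:
    """Estimated USD cost of a request or workload at per-million-token prices."""
    total = (cache_hit_tokens * prices["input_cache_hit"]
             + cache_miss_tokens * prices["input_cache_miss"]
             + output_tokens * prices["output"])
    return total / 1_000_000

# Hypothetical agent session: 150K cached input, 50K uncached input, 10K output tokens
print(f"${estimate_cost_usd(150_000, 50_000, 10_000):.4f}")  # $0.0553
```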

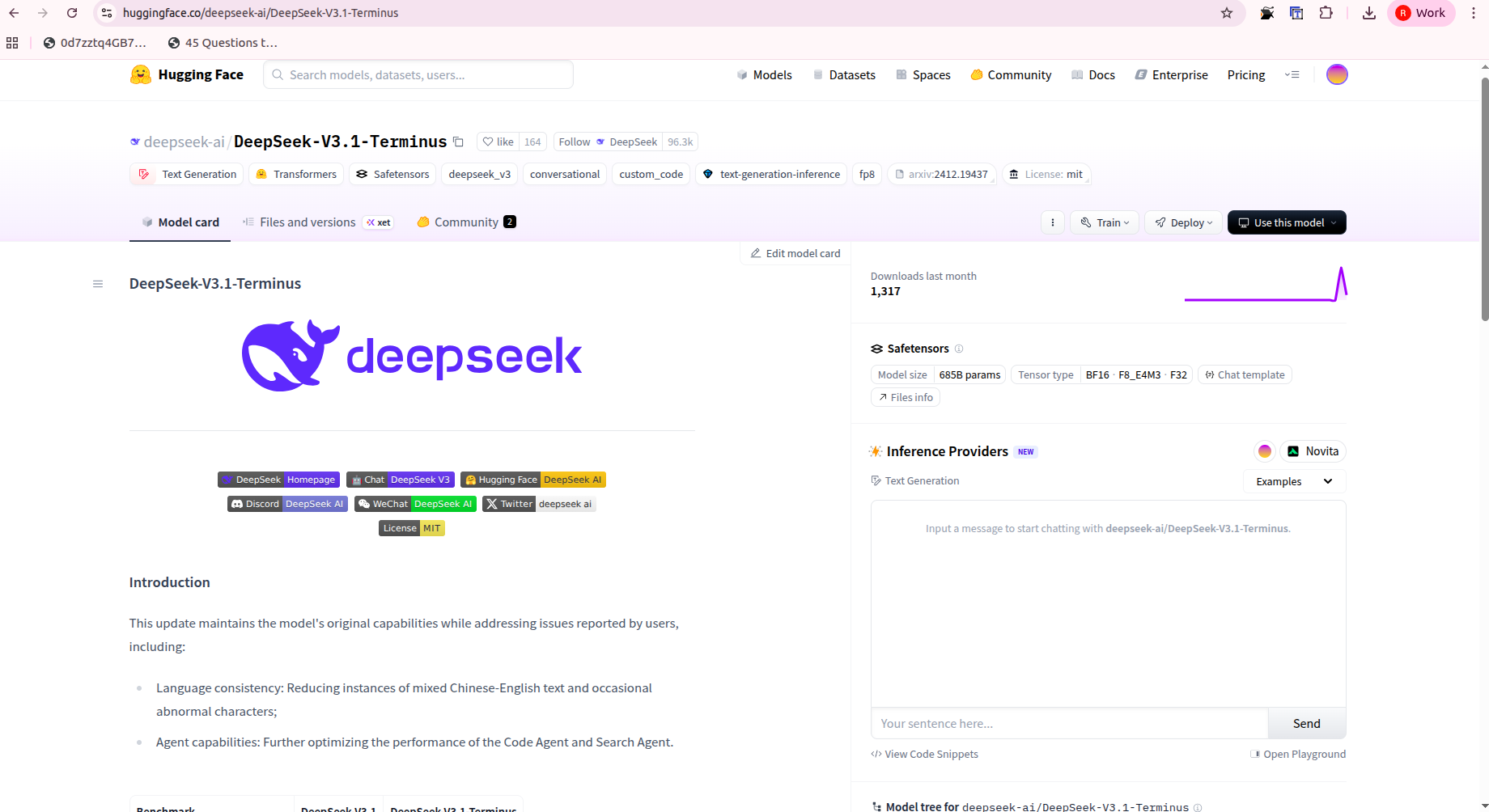

- Run Locally: If you want to self-host the model, the weights are available on Hugging Face under the permissive, open-source MIT license. Running the model on your own machine requires significant hardware; however, the community has published helpful resources and guides for optimizing the experience. For example, offloading the MoE layers to the CPU reduces VRAM usage.

Hands-On with the Web App

Using the web interface is as easy as it gets. Open DeepSeek and start a chat. You can switch between the “thinking” and “non-thinking” modes to compare the style and depth of responses. Give the “thinking” mode a complex coding task or ask it to browse the web for information, and you’ll immediately see the improved agentic ability as it builds its plan and executes the task.

Prompt for Search Agent:

“I need to plan a 7-day trip to Kyoto, Japan, for mid-November. The itinerary should focus on traditional culture, including temples, gardens, and tea ceremonies. Find the best time to see the autumn leaves, a list of three must-visit temples for ‘Momiji’ (autumn leaves), and a highly rated traditional tea house with English-friendly services. Also, find a well-reviewed ryokan (traditional Japanese inn) in the Gion district. Organize all the information into a clear, day-by-day itinerary.”

Response:

Prompt for Coding Agent:

“I need a Python script that scrapes a public list of the top 100 films of all time from a website (you can choose a reliable source like IMDb, Rotten Tomatoes, or a well-known magazine’s list). The script should then save the film titles, release years, and a brief description of each movie to a JSON file. Include error handling for network issues or changes in the website’s structure. Can you generate the full script and explain each step of the process?”

Response:

My Review of DeepSeek-V3.1-Terminus

DeepSeek-V3.1-Terminus marks significant progress for anyone working with AI agents. I’ve used the previous version for a while, and while it was exceptionally impressive, it had its moments of frustration, such as occasionally mixing languages or getting lost in multi-step coding tasks. Using Terminus felt like the development team had listened to me. Language consistency is now rock solid, and I was genuinely impressed by its ability to run a complex web search and synthesize the information without a hiccup. It’s not just a powerful chat model; it’s a reliable, capable partner for complex, real-world tasks.

Run DeepSeek-V3.1-Terminus Locally

If you have the technical know-how, you can run DeepSeek-V3.1-Terminus locally for more control and privacy.

- Download the Weights: Go to the official DeepSeek AI Hugging Face page and download the model weights. The full model has 671 billion parameters and needs a substantial amount of disk space. If space is a concern, consider downloading a quantized version, such as one of the GGUF models.

- Use a Framework: Use a popular framework such as llama.cpp or Ollama to load and run the model. These frameworks handle much of the complexity of running large models on consumer hardware.

- Optimize for Your Hardware: Because the model is a Mixture of Experts, you can offload some of its layers (typically the expert layers) to the CPU to save GPU VRAM. Finding the sweet spot between speed and memory usage for your setup may take some experimentation.
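A back-of-the-envelope estimate helps when choosing between full weights and a quantized build. The sketch multiplies the parameter counts quoted in this article by a uniform bit-width; the 8-, 4-, and 2-bit values are illustrative, not specific GGUF releases, and real files mix precisions per tensor and add overhead, so treat the results as rough lower bounds. The gap between the total and per-token figures is why expert offloading works: every weight must live somewhere (RAM or VRAM), but each token only reads a small slice of them.

```python
def weight_size_gb(num_params: float, bits_per_param: float) -> float:
    """Raw weight storage in GB (1 GB = 1e9 bytes) at a uniform bit-width.

    Ignores mixed-precision tensors, file-format overhead and the KV cache,
    so actual downloads and memory use will be somewhat larger.
    """
    return num_params * bits_per_param / 8 / 1e9

TOTAL_PARAMS = 671e9   # total parameters (from the article)
ACTIVE_PARAMS = 37e9   # parameters activated per token (from the article)

for bits in (8, 4, 2):  # illustrative bit-widths only
    total = weight_size_gb(TOTAL_PARAMS, bits)
    active = weight_size_gb(ACTIVE_PARAMS, bits)
    print(f"{bits}-bit: ~{total:,.0f} GB of weights, ~{active:,.1f} GB read per token")
```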

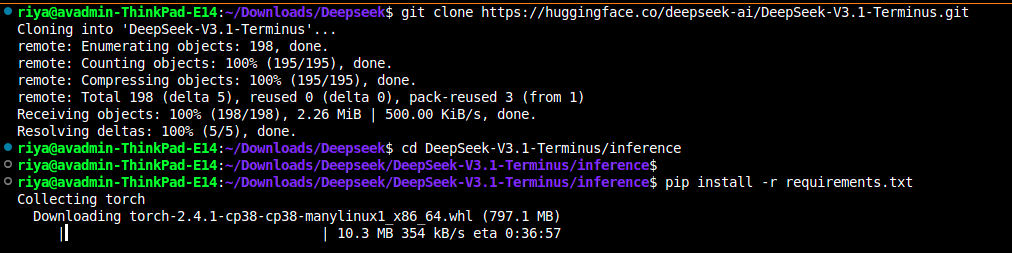

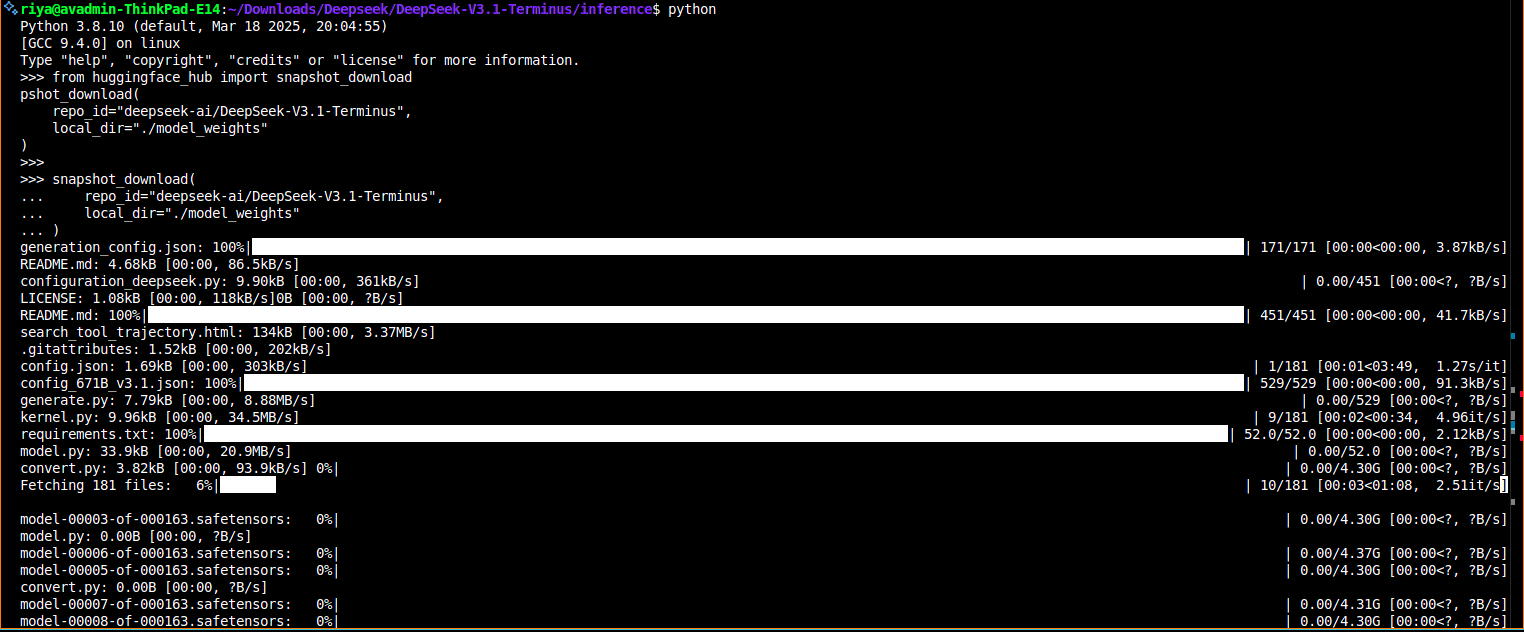

Setup Guide

Run the following commands to set up the DeepSeek model in your local environment.

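```bash
# Model weights in Hugging Face repositories are stored with Git LFS
git lfs install
git clone https://huggingface.co/deepseek-ai/DeepSeek-V3.1-Terminus
cd DeepSeek-V3.1-Terminus

# File paths and flags below follow this guide; verify them against the
# repository (e.g. its inference/ folder) before running.
pip install -r requirements.txt

python inference/demo.py \
  --input "Implement a minimal Redis clone in Go that supports SET, GET, DEL." \
  --reasoning true \
  --max_tokens 2048
```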

Benchmark Comparison

Although Terminus shows only modest gains on pure reasoning benchmarks, its highlight is task-based agent performance. The model posts notable improvements on the following agent benchmarks:

- BrowseComp: A big jump from 30.0 to 38.5, indicating an improved ability to perform multi-step web searches.

- SWE Verified: An increase from 66.0 to 68.4, particularly on software engineering tasks that rely on external tools.

- Terminal-bench: A significant improvement from 31.3 to 36.7, showing the Code Agent is better at handling command-line-style tasks.
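The gains are easier to compare when expressed as both absolute and relative changes. This snippet only recomputes those figures from the three scores listed above; it adds no new benchmark data.

```python
# (previous V3.1 score, Terminus score), as listed in this article
SCORES = {
    "BrowseComp": (30.0, 38.5),
    "SWE Verified": (66.0, 68.4),
    "Terminal-bench": (31.3, 36.7),
}

def gains(old: float, new: float) -> tuple:
    """Absolute gain in points and relative gain in percent, rounded to 0.1."""
    return round(new - old, 1), round((new - old) / old * 100, 1)

for name, (old, new) in SCORES.items():
    abs_gain, rel_gain = gains(old, new)
    print(f"{name:<15} +{abs_gain} pts ({rel_gain:+}%)")
```

Seen this way, BrowseComp improves by roughly 28% relative to V3.1, Terminal-bench by about 17%, and SWE Verified by under 4%.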

It’s worth noting a drop in performance on the Chinese-language BrowseComp benchmark, which may indicate that the multilingual consistency changes favored English performance. Still, for any developer using agentic workflows and external tools, Terminus clearly delivers notable upgrades.

Conclusion

DeepSeek-V3.1-Terminus isn’t designed to break records on every benchmark. Instead, it is a deliberate, focused release centered on what matters for practical, real-world use: better stability, reliability, and excellent agentic functionality. By fixing earlier inconsistencies and improving its ability to use tools, DeepSeek has delivered an excellent open-source model that has never felt so deployable and practical. So whether you’re a developer trying to build the next great AI assistant or a technology enthusiast curious about what’s next, Terminus is worth another look.


Frequently Asked Questions

Q. What is DeepSeek-V3.1-Terminus?

A. It’s the polished V3.1 release: a 671B-parameter MoE model (37B active per token) built for stability, reliability, and cleaner multilingual output.

Q. How do the Thinking and Non-Thinking modes differ?

A. Non-Thinking (deepseek-chat) gives fast, direct answers and supports function calling. Thinking (deepseek-reasoner) performs multi-step reasoning with larger outputs but does not support function calling.

Q. What are the context and output limits?

A. Both modes support a 128K context. Non-Thinking outputs: default 4K, max 8K. Thinking outputs: default 32K, max 64K.
