AI and ML builders typically work with native datasets whereas preprocessing knowledge. Engineering options, and constructing prototypes make this straightforward with out the overhead of a full server. The commonest comparability is between SQLite, a serverless database launched in 2000 and broadly used for light-weight transactions, and DuckDB, launched in 2019 because the SQLite of analytics, centered on quick in-process analytical queries. Whereas each are embedded, their targets differ. On this article, we’ll examine DuckDB and SQLite that will help you select the suitable device for every stage of your AI workflow.

What’s SQLite?

SQLite is a self-contained database engine that’s serverless. It creates a button straight out of a disk file. It’s zero-configured and has a low footprint. The database is all saved in a single file that’s.sqlite and the tables and indexes are all contained in that file. The engine itself is a C library that’s embedded in your software.

SQLite is an ACID-compliant database, though it’s easy. This makes it reliable within the transactions and knowledge integrity.

Key options embrace:

- Row-oriented storage: The info is saved row by row. This renders updating or retrieving a person row to be fairly environment friendly.

- Single-file database: The whole database is in a single file. This allows it to be copied or transferred simply.

- No server course of: Direct studying and writing to the database file are made to your software. No separate server is required.

- Broad SQL assist: It’s based mostly on most SQL-2 and helps things like joins, window features, and indexes.

SQLite is often chosen in cellular functions and Web of Issues, in addition to small net functions. It’s luminous the place you require an easy answer to retailer structured knowledge regionally, and when you’ll require quite a few quick learn and write operations.

What’s DuckDB?

DuckDB is an information analytics in-process database. It takes the energy of the SQL database to embedded functions. It would execute sophisticated analytical queries successfully and not using a server. This analytical focus is often the idea of comparability between DuckDB and SQLite.

The essential options of DuckDB are:

- Columnar storage format: DuckDB shops knowledge columns. On this format, it is ready to scan and merge large datasets at a a lot larger price. It reads solely the columns that it requires.

- Vectorized question execution: DuckDB is designed to carry out calculations in chunks, or vectors, slightly than in a single row. This methodology includes the applying of present CPU capabilities to compute at a larger price.

- Direct file querying: DuckDB can question Parquet, CSV and Arrow information straight. There is no such thing as a must put them into the database.

- Deep knowledge science integration: It’s appropriate with Pandas, NumPy and R. DataFrame could be requested questions like database tables.

DuckDB can be utilized to shortly course of interactive knowledge evaluation in Jupyter notebooks and velocity up Pandas workflows. It takes knowledge warehouse capabilities in a small and native bundle.

Key Variations

First, here’s a abstract desk evaluating SQLite and DuckDB on essential features.

| Facet | SQLite (since 2000) | DuckDB (since 2019) |

| Major Objective | Embedded OLTP database (transactions) | Embedded OLAP database (analytics) |

| Storage Mannequin | Row-based (shops complete rows collectively) | Columnar (shops columns collectively) |

| Question Execution | Iterative row-at-a-time processing | Vectorized batch processing |

| Efficiency | Glorious for small, frequent transactions | Glorious for analytical queries on giant knowledge |

| Information Dimension | Optimized for small-to-medium datasets | Handles giant and out-of-memory datasets |

| Concurrency | Multi-reader, single-writer (through locks) | Multi-reader, single-writer; parallel question execution |

| Reminiscence Use | Minimal reminiscence footprint by default | Leverages reminiscence for velocity; can use extra RAM |

| SQL Options | Strong fundamental SQL with some limits | Broad SQL assist for superior analytics |

| Indexes | B-tree indexes are sometimes wanted | Depends on column scans; indexing is much less frequent |

| Integration | Supported in almost each language | Native integration with Pandas, Arrow, NumPy |

| File Codecs | Proprietary file; can import/export CSVs | Can straight question Parquet, CSV, JSON, Arrow |

| Transactions | Absolutely ACID-compliant | ACID inside a single course of |

| Parallelism | Single-threaded question execution | Multi-threaded execution for a single question |

| Typical Use Instances | Cell apps, IoT units, native app storage | Information science notebooks, native ML experiments |

| License | Public area | MIT License (open supply) |

This desk reveals that SQLite focuses on reliability and operations of transactions. DuckDB is optimized to assist fast analytic queries on large knowledge. Now we’re going to talk about every certainly one of them.

Arms-On in Python: From Principle to Apply

We’ll see how one can make the most of each databases in Python. It’s an open-source AI improvement surroundings.

Utilizing SQLite

That is a simple illustration of SQLite Python. We will develop a desk, enter knowledge, and execute a question.

import sqlite3

# Hook up with a SQLite database file

conn = sqlite3.join("instance.db")

cur = conn.cursor()

# Create a desk

cur.execute(

"""

CREATE TABLE customers (

id INTEGER PRIMARY KEY,

title TEXT,

age INTEGER

);

"""

)

# Insert data into the desk

cur.execute(

"INSERT INTO customers (title, age) VALUES (?, ?);",

("Alice", 30)

)

cur.execute(

"INSERT INTO customers (title, age) VALUES (?, ?);",

("Bob", 35)

)

conn.commit()

# Question the desk

for row in cur.execute(

"SELECT title, age FROM customers WHERE age > 30;"

):

print(row)

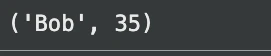

# Anticipated output: ('Bob', 35)

conn.shut()Output:

The database on this case is stored within the instance.db file. We now have made a desk, added two rows to it, and executed a easy question. SQLite makes you load knowledge into the tables after which question. In case you will have a CSV file, you should import the knowledge first.

Utilizing DuckDB

Nonetheless, it’s time to repeat this selection with DuckDB. We will additionally carry your consideration to its knowledge science conveniences.

import duckdb

import pandas as pd

# Hook up with an in-memory DuckDB database

conn = duckdb.join()

# Create a desk and insert knowledge

conn.execute(

"""

CREATE TABLE customers (

id INTEGER,

title VARCHAR,

age INTEGER

);

"""

)

conn.execute(

"INSERT INTO customers VALUES (1, 'Alice', 30), (2, 'Bob', 35);"

)

# Run a question on the desk

outcome = conn.execute(

"SELECT title, age FROM customers WHERE age > 30;"

).fetchall()

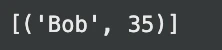

print(outcome) # Anticipated output: [('Bob', 35)]Output:

The easy use resembles the essential utilization. Nonetheless, exterior knowledge will also be queried by DuckDB.

Let’s generate a random dataset for querying:

import pandas as pd

import numpy as np

# Generate random gross sales knowledge

np.random.seed(42)

num_entries = 1000

knowledge = {

"class": np.random.selection(

["Electronics", "Clothing", "Home Goods", "Books"],

num_entries

),

"value": np.spherical(

np.random.uniform(10, 500, num_entries),

2

),

"area": np.random.selection(

["EUROPE", "AMERICA", "ASIA"],

num_entries

),

"sales_date": (

pd.to_datetime("2023-01-01")

+ pd.to_timedelta(

np.random.randint(0, 365, num_entries),

unit="D"

)

)

}

sales_df = pd.DataFrame(knowledge)

# Save to sales_data.csv

sales_df.to_csv("sales_data.csv", index=False)

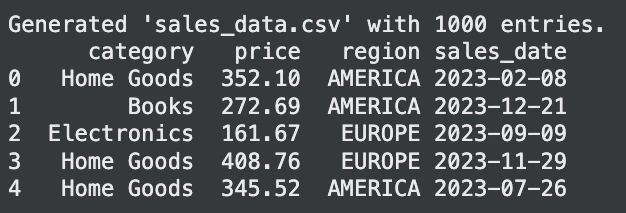

print("Generated 'sales_data.csv' with 1000 entries.")

print(sales_df.head())Output:

Now, let’s question this desk:

# Assume 'sales_data.csv' exists

# Instance 1: Querying a CSV file straight

avg_prices = conn.execute(

"""

SELECT

class,

AVG(value) AS avg_price

FROM 'sales_data.csv'

WHERE area = 'EUROPE'

GROUP BY class;

"""

).fetchdf() # Returns a Pandas DataFrame

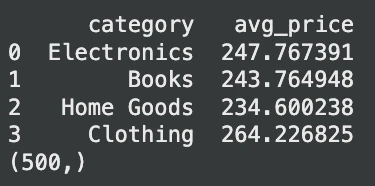

print(avg_prices.head())

# Instance 2: Querying a Pandas DataFrame straight

df = pd.DataFrame({

"id": vary(1000),

"worth": vary(1000)

})

outcome = conn.execute(

"SELECT COUNT(*) FROM df WHERE worth % 2 = 0;"

).fetchone()

print(outcome) # Anticipated output: (500,)Output:

On this case, DuckDB reads the CSV file on the fly. No essential step is required. It’s also capable of question a Pandas DataFrame. This flexibility removes a lot of the info loading code and simplifies AI pipelines.

Structure: Why They Carry out So In a different way

The variations within the efficiency of SQLite and DuckDB must do with their storage and question engines.

- Storage Mannequin: SQLite is row based mostly. It teams all knowledge of 1 row in it. This is superb for updating a single document. Nonetheless, it’s not quick with analytics. Assuming that you simply simply require a single column, then SQLite will nonetheless must learn all the info of every row. DuckDB is column oriented. It places all of the values of 1 column in a single column. That is supreme for analytics. A question equivalent to

SELECT AVG(age)solely reads the age column which is way quicker. - Question Execution: SQLite one question per row. That is reminiscence environment friendly in the case of small queries. DuckDB relies on a vectorized execution. It really works with knowledge on giant batches. This system makes use of present CPUs to do vital speedups on giant scans and joins. It’s also able to executing quite a few threads to execute a single question at a time.

- Reminiscence and On-Disk Conduct: SQLite is designed to make use of minimal reminiscence. It reads from disk as wanted. DuckDB makes use of reminiscence to boost velocity. It could actually execute knowledge greater than out there RAM in out-of-core execution. This suggests that DuckDB can eat extra RAM, however it’s a lot quicker on an analytical job. It has been demonstrated that in DuckDB, aggregation queries are 10-100 occasions quicker than in SQLite.

The Verdict: When to Use DuckDB vs. SQLite

This can be a good guideline to observe in your AI and machine studying initiatives.

| Facet | Use SQLite when | Use DuckDB when |

|---|---|---|

| Major function | You want a light-weight transactional database | You want quick native analytics |

| Information measurement | Low knowledge quantity, up to a couple hundred MBs | Medium to giant datasets |

| Workload kind | Inserts, updates, and easy lookups | Aggregations, joins, and huge desk scans |

| Transaction wants | Frequent small updates with transactional integrity | Learn-heavy analytical queries |

| File dealing with | Information saved contained in the database | Question CSV or Parquet information straight |

| Efficiency focus | Minimal footprint and ease | Excessive-speed analytical efficiency |

| Integration | Cell apps, embedded programs, IoT | Accelerating Pandas-based evaluation |

| Parallel execution | Not a precedence | Makes use of a number of CPU cores |

| Typical use case | Software state and light-weight storage | Native knowledge exploration and analytics |

Conclusion

Each SQLite and DuckDB are robust embedded databases. SQLite is an excellent light-weight knowledge storage and easy-going transaction device. Nevertheless, DuckDB can considerably speed up the processing of knowledge and prototyping of AI builders working with large knowledge. It’s because if you find yourself conscious of their variations, you’ll know the suitable device to make use of in numerous duties. In case of latest knowledge evaluation and machine studying processes, DuckDB can prevent a number of time with a substantial efficiency profit.

Often Requested Questions

A. No, they’re of different makes use of. DuckDB is used to entry quick analytics (OLAP), whereas SQLite is used to enter into dependable transactions. Choose in accordance with your workload.

A. SQLite is often extra suited to net functions which have a lot of small, speaking reads and writes as a result of it has a sound transactional mannequin and WAL mode.

A. Sure, with most large-scale jobs, equivalent to group-bys and joins, DuckDB is usually a lot quicker than Pandas as a result of its parallel, vectorized engine.

Login to proceed studying and revel in expert-curated content material.