Managing and maintaining deployments of complex software presents engineers with a multitude of challenges: security vulnerabilities, outdated dependencies, and unpredictable and asynchronous vendor release cadences, to name a few.

We describe here an approach to automating key activities in the software operations process, with a focus on the setup and testing of updates to third-party code. A key benefit is that engineers can deploy the latest versions of software more quickly and confidently. This allows a team to stay up to date on software releases more easily and safely, both to support customer needs and to stay current on security patches.

We illustrate this approach with a software engineering process platform managed by our team of researchers in the Applied Systems Group of the SEI’s CERT Division. This platform is designed to be compliant with the requirements of the Cybersecurity Maturity Model Certification (CMMC) and NIST SP 800-171. Each of the challenges above presents risks to the stability and security compliance of the platform, and addressing these issues demands time and effort.

When system deployment is done without automation, system administrators must spend time manually downloading, verifying, installing, and configuring each new release of any particular software tool. Additionally, this process must first be done in a test environment to ensure that the software and all its dependencies can be integrated successfully and that the upgraded system is fully functional. Then the process is done again in the production environment.

When an engineer’s time is freed up by automation, more effort can be allocated to delivering new capabilities to the warfighter, with more efficiency, higher quality, and less risk of security vulnerabilities. Continuous deployment of capability describes a set of principles and practices that provide faster delivery of secure software capabilities by improving the collaboration and communication that link software development teams with IT operations and security staff, as well as with acquirers, suppliers, and other system stakeholders.

While this approach benefits software development generally, we suggest that it is especially important in high-stakes software for national security missions.

In this post, we describe our approach to using DevSecOps tools for automating the delivery of third-party software to development teams using CI/CD pipelines. This approach is targeted to software systems that are container compatible.

Constructing an Automated Configuration Testing Pipeline

Not every team in a software-oriented organization is focused specifically on the engineering of the software product. Our team bears responsibility for two sometimes competing tasks:

- Delivering valuable technology, such as tools for automated testing, to software engineers that enables them to perform product development and

- Deploying security updates to the technology.

In other words, delivery of value in the continuous deployment of capability may often not be directly focused on the development of any specific product. Other dimensions of value include “the people, processes, and technology necessary to build, deploy, and operate the enterprise’s products. In general, this business concern includes the software factory and product operational environments; however, it does not consist of the products.”

To improve our ability to complete these tasks, we designed and implemented a custom pipeline that was a variation of the continuous integration/continuous deployment (CI/CD) pipeline found in many traditional DevSecOps workflows, as shown below.

Figure 1: The DevSecOps Infinity diagram, which represents the continuous integration/continuous deployment (CI/CD) pipeline found in many traditional DevSecOps workflows.

The main difference between our pipeline and a traditional CI/CD pipeline is that we are not developing the application that is being deployed; the software is typically provided by a third-party vendor. Our focus is on delivering it to our environment, deploying it onto our information systems, operating it, and monitoring it for proper functionality.

Automation can yield terrific benefits in productivity, efficiency, and security throughout an organization. Engineers can keep their systems more secure and address vulnerabilities more quickly and without human intervention, with the effect that systems are more readily kept compliant, stable, and secure. In other words, automation of the relevant pipeline processes can increase our team’s productivity, enforce security compliance, and improve the user experience for our software engineers.

There are, however, some potential negative outcomes when automation is done incorrectly. It is important to recognize that because automation allows many actions to be performed in quick succession, there is always the possibility that these actions lead to unwanted results. Unwanted results may be unintentionally introduced by buggy process-support code that does not perform the correct checks before taking an action, or by an unconsidered edge case in a complex system.

It is therefore important to take precautions when you are automating a process. Precautions put guardrails in place so that a failing automated process cannot affect production applications, services, or data. This can include, for example, writing tests that validate each stage of the automated process, including validity checks and safe, non-destructive halts when operations fail.
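As a minimal sketch of the guardrail idea above, the following Python runs a sequence of steps, applies a validity check after each one, and halts at the first failure without running any later step. The `Step` class, the `run_with_guardrails` function, and the example step names are hypothetical and are not taken from our pipeline.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    """One automated step: an action plus the validity check that follows it."""
    name: str
    action: Callable[[dict], None]   # performs the work and may update the context
    check: Callable[[dict], bool]    # returns True when the step's result is valid


def run_with_guardrails(steps: List[Step], context: dict) -> List[str]:
    """Run steps in order and halt at the first failure.

    Later steps are never started after a failure, so a failed run cannot
    reach a step that would touch production. Returns the names of the
    steps that completed and passed their check.
    """
    completed: List[str] = []
    for step in steps:
        try:
            step.action(context)
        except Exception as exc:
            # Non-destructive halt: record what happened and stop.
            context["halted_at"] = step.name
            context["reason"] = f"action raised {exc!r}"
            break
        if not step.check(context):
            context["halted_at"] = step.name
            context["reason"] = "validity check failed"
            break
        completed.append(step.name)
    return completed


if __name__ == "__main__":
    ctx: dict = {}
    steps = [
        Step("download", lambda c: c.update(image="example/app:1.2.3"), lambda c: "image" in c),
        Step("scan", lambda c: c.update(findings=1), lambda c: c["findings"] == 0),
        Step("publish", lambda c: c.update(published=True), lambda c: c.get("published", False)),
    ]
    print(run_with_guardrails(steps, ctx))       # ['download']
    print(ctx["halted_at"], "-", ctx["reason"])  # scan - validity check failed
```

In this example the hypothetical scan step reports a finding, so the run stops there and the publish step is never executed.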

Developing meaningful tests may be challenging, requiring careful and creative consideration of the many ways a process could fail, as well as how to return the system to a working state should failures occur.

Our approach to addressing this challenge revolves around integration, regression, and functional tests that can be run automatically in the pipeline. These tests are required to ensure that the functionality of the third-party application is not affected by changes in the configuration of the system, and also that new releases of the application still interact as expected with older versions’ configurations and setups.

Automating Containerized Deployments Utilizing a CI/CD Pipeline

A Case Study: Implementing a Custom Continuous Delivery Pipeline

Teams at the SEI have extensive experience building DevSecOps pipelines. One team in particular defined the concept of creating a minimum viable process to frame a pipeline’s structure before diving into development. This allows all of the groups working on the same pipeline to collaborate more efficiently.

In our pipeline, we started with the first half of the traditional structure of a CI/CD pipeline, which was already in place to support third-party software released by the vendor. This gave us an opportunity to dive deeper into the later stages of the pipeline: delivery, testing, deployment, and operation. The end result was a five-stage pipeline that automated testing and delivery for all of the software components in the tool suite in the event of configuration changes or new version releases.

To avoid the many complexities involved with delivering and deploying third-party software natively on hosts in our environment, we opted for a container-based approach. We developed the container build specifications, deployment specifications, and pipeline job specifications in our Git repository. This enabled us to vet any desired changes to the configurations using code reviews before they could be deployed in a production environment.

A Five-Stage Pipeline for Automating Testing and Delivery in the Tool Suite

Stage 1: Automated Version Detection

When the pipeline is run, it searches the vendor site for either the user-specified release or the latest release of the application in a container image. If a new release is found, the pipeline uses the communication channels that have been set up to notify engineers of the discovery. Then the pipeline automatically attempts to safely download the container image directly from the vendor. If the container image cannot be retrieved from the vendor, the pipeline fails and alerts engineers to the issue.
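The release-selection logic in this stage could be sketched roughly as follows. This is an illustration only: it assumes the vendor publishes image tags in a `MAJOR.MINOR.PATCH` form (optionally prefixed with `v`), and the function names and example tags are hypothetical. Querying the vendor site, notifying engineers, and downloading the image are left out.

```python
import re
from typing import Iterable, Optional, Tuple

# Assumption: release tags look like "1.4.2" or "v1.4.2".
_TAG = re.compile(r"^v?(\d+)\.(\d+)\.(\d+)$")


def parse_tag(tag: str) -> Optional[Tuple[int, ...]]:
    """Return (major, minor, patch) for a version-like tag, else None."""
    match = _TAG.match(tag)
    return tuple(int(part) for part in match.groups()) if match else None


def select_release(available: Iterable[str], requested: Optional[str] = None) -> str:
    """Pick the user-specified tag if one is given, otherwise the highest version."""
    tags = list(available)
    if requested is not None:
        if requested not in tags:
            raise LookupError(f"requested release {requested!r} is not published")
        return requested
    versioned = [(parse_tag(tag), tag) for tag in tags if parse_tag(tag) is not None]
    if not versioned:
        raise LookupError("no versioned release tags found")
    # Tuples compare numerically, so 1.10.2 sorts above 1.9.0.
    return max(versioned)[1]


def is_new_release(candidate: str, deployed: str) -> bool:
    """True when the candidate tag is strictly newer than the deployed tag."""
    new, old = parse_tag(candidate), parse_tag(deployed)
    if new is None or old is None:
        raise ValueError("both tags must look like MAJOR.MINOR.PATCH")
    return new > old
```

Comparing parsed integer tuples rather than raw strings avoids the common mistake of ranking `1.9.0` above `1.10.2`. Raising an exception when the requested release is missing gives the pipeline a clear failure to report to engineers.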

Stage 2: Automated Vulnerability Scanning

After downloading the container from the vendor site, it is best practice to run some sort of vulnerability scanner to make sure that no obvious issues that the vendor might have missed in its release end up in the production deployment. The pipeline implements this extra layer of security by using common container scanning tools. If vulnerabilities are found in the container image, the pipeline fails.
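A gate of this kind might look like the sketch below. It assumes the scanner's report has already been parsed into a list of dictionaries with `id` and `severity` keys; that shape, the severity names, and the `threshold` parameter are assumptions made for illustration and do not describe any particular scanning tool. With the default threshold, any finding fails the stage, which matches the behavior described above.

```python
from typing import Dict, List

# Assumed severity scale, lowest to highest.
SEVERITY_ORDER = ["LOW", "MEDIUM", "HIGH", "CRITICAL"]


def blocking_findings(findings: List[Dict[str, str]], threshold: str = "LOW") -> List[Dict[str, str]]:
    """Return the findings whose severity is at or above the threshold.

    A severity outside SEVERITY_ORDER raises ValueError, so an unexpected
    report format stops the stage instead of passing silently.
    """
    floor = SEVERITY_ORDER.index(threshold)
    return [f for f in findings if SEVERITY_ORDER.index(f["severity"]) >= floor]


def scan_gate(findings: List[Dict[str, str]], threshold: str = "LOW") -> None:
    """Raise RuntimeError, failing the pipeline stage, if any finding blocks."""
    blocking = blocking_findings(findings, threshold)
    if blocking:
        ids = ", ".join(f["id"] for f in blocking)
        raise RuntimeError(f"vulnerability scan failed: {ids}")
```

A team that chooses to tolerate low-severity findings could raise the threshold, but that is a policy decision outside the scope of this sketch.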

Stage 3: Automated Application Deployment

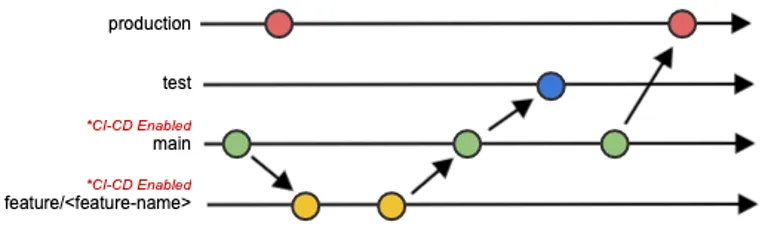

At this point in the pipeline, the new container image has been successfully downloaded and scanned. The next step is to set up the pipeline’s environment so that it resembles our production deployment’s environment as closely as possible. To achieve this, we created a testing system within a Docker in Docker (DIND) pipeline container that simulates the process of upgrading applications in a real deployment environment. The process keeps track of our configuration files for the software and loads test data into the application to ensure that everything works as expected. To differentiate between these environments, we used an environment-based DevSecOps workflow (Figure 2: Git Branch Diagram) that offers more fine-grained control over the configuration setups in each deployment environment. This workflow enables us to develop and test on feature branches, engage in code reviews when merging feature branches into the main branch, automate testing on the main branch, and account for environmental differences between the test and production code (e.g., different sets of credentials are required in each environment).

Figure 2: The Git Branch Diagram

Because we are using containers, it does not matter that the container runs in two completely different environments in the pipeline and production deployments. The outcome of the testing is expected to be the same in both environments.

Now, the application is up and running inside the pipeline. To better simulate a real deployment, we load test data into the application, which will serve as a basis for a later testing stage in the pipeline.
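One way to picture the environment differences handled in this stage is a shared base configuration with a small set of per-environment overrides, such as the name of the credential set each environment uses. The sketch below is an assumption about how that could be modeled in Python; the keys, values, and function names are hypothetical and are not our actual configuration files.

```python
from copy import deepcopy
from typing import Any, Dict


def merge_config(base: Dict[str, Any], override: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new config in which override values replace base values.

    Nested dictionaries are merged key by key; the inputs are not modified.
    """
    merged = deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = deepcopy(value)
    return merged


def config_for(environment: str, base: Dict[str, Any],
               overrides: Dict[str, Dict[str, Any]]) -> Dict[str, Any]:
    """Build the effective configuration for one deployment environment."""
    if environment not in overrides:
        raise KeyError(f"no overrides defined for environment {environment!r}")
    return merge_config(base, overrides[environment])


if __name__ == "__main__":
    # Hypothetical settings: only the names of credential sets appear here,
    # never the secrets themselves.
    base = {"app": {"port": 8080, "log_level": "info"},
            "credentials": {"secret_name": "unset"}}
    overrides = {
        "test": {"app": {"log_level": "debug"},
                 "credentials": {"secret_name": "app-test"}},
        "production": {"credentials": {"secret_name": "app-prod"}},
    }
    print(config_for("test", base, overrides))
```

Keeping the overrides small makes the differences between the test and production setups easy to see in a code review.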

Stage 4: Automated Testing

Automated tests in this stage of the pipeline fall into several categories. For this specific application, the most relevant testing strategies are regression tests, smoke tests, and functional tests.

After the application has been successfully deployed within the pipeline, we run a series of tests on the software to ensure that it is functioning and that there are no issues with the configuration files that we provided. One way to accomplish this is to use the application’s APIs to access the data that was loaded during Stage 3. It can be helpful to read through the third-party software’s documentation and look for API references or endpoints that can simplify this process. This ensures that you test not only the basic functionality of the application, but also that the system works in practice and that the API usage is sound.
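A functional check of this kind can be sketched as a comparison between the records loaded in Stage 3 and the records the application returns through its API. In the sketch below the API call is injected as a `fetch` function so the example runs on its own; the `/api/items` path, the record fields, and `fake_fetch` are hypothetical stand-ins for a real HTTP request to the application under test.

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, str]

# Hypothetical seed data, standing in for the test data loaded in Stage 3.
SEED: List[Record] = [
    {"id": "1", "name": "alpha"},
    {"id": "2", "name": "beta"},
]


def missing_records(fetch: Callable[[str], List[Record]], path: str,
                    expected: Iterable[Record]) -> List[Record]:
    """Return the expected records that the application's API did not return."""
    returned = fetch(path)
    return [record for record in expected if record not in returned]


def fake_fetch(path: str) -> List[Record]:
    """Stand-in for an HTTP GET; it simulates an upgrade that lost one record."""
    return SEED[:1] if path == "/api/items" else []


if __name__ == "__main__":
    lost = missing_records(fake_fetch, "/api/items", SEED)
    print(lost)  # [{'id': '2', 'name': 'beta'}]
```

In a pipeline job, a non-empty result would fail the stage and the missing records would go into the failure message for the engineers.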

Stage 5: Automated Delivery

Finally, after all of the previous stages are completed successfully, the pipeline makes the fully tested container image available for use in production deployments. Once the container has been thoroughly tested in the pipeline and becomes available, engineers can choose to use the container in whichever environment they want (e.g., test, quality assurance, staging, or production).

An important aspect of delivery is the communication channels that the pipeline uses to convey the information that has been collected. This SEI blog post explains the benefits of communicating directly with developers and DevSecOps engineers through channels that are already a part of their respective workflows.

It is important here to make the distinction between delivery and deployment. Delivery refers to the process of making software available to the systems where it will end up being installed. In contrast, deployment refers to the process of automatically pushing the software out to the system, making it available to the end users. In our pipeline, we focus on delivery instead of deployment because the services for which we are automating upgrades require a high degree of reliability and uptime. A future goal of this work is to eventually implement automated deployments.

Dealing with Pipeline Failures

With this model for a custom pipeline, failure modes are designed into the process. When the pipeline fails, diagnosis of the failure should identify remedial actions to be undertaken by the engineers. These problems could be issues with the configuration files, software versions, test data, file permissions, environment setup, or some other unforeseen error. By running an exhaustive series of tests, engineers can come into the situation equipped with a greater understanding of potential problems with the setup. This ensures that they can make the needed adjustments as effectively as possible and avoid running into incompatibility issues on a production deployment.
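To illustrate how a failure might be mapped to a remedial action, the sketch below sorts a log line into the problem categories listed above. The keyword table and the suggested actions are hypothetical examples chosen for illustration; a real pipeline would tune them to the messages its own tools produce.

```python
from typing import Dict, Tuple

# Hypothetical (keyword, category, suggested action) table, checked in order.
CATEGORIES: Tuple[Tuple[str, str, str], ...] = (
    ("permission denied", "file permissions",
     "check ownership and modes on mounted files"),
    ("manifest unknown", "software versions",
     "confirm that the requested release tag exists"),
    ("config", "configuration files",
     "review the most recent configuration change"),
    ("fixture", "test data",
     "reload or regenerate the test data"),
    ("environment variable", "environment setup",
     "compare the pipeline environment with the expected setup"),
)


def triage(log_line: str) -> Dict[str, str]:
    """Map a failure message to a category and a suggested remedial action."""
    lowered = log_line.lower()
    for keyword, category, action in CATEGORIES:
        if keyword in lowered:
            return {"category": category, "action": action}
    # Anything unrecognized is treated as an unforeseen error.
    return {"category": "unforeseen error",
            "action": "escalate to an engineer for manual diagnosis"}
```

Including the category in the alert sent to engineers gives them a starting point before they open the full job log.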

Implementation Challenges

We faced some particular challenges in our experimentation, and we share them here because they may be instructive.

The first challenge was deciding how the pipeline would be designed. Because the pipeline is still evolving, members of the team needed to be flexible to ensure there was a consistent picture of the status of the pipeline and its future goals. We also needed the team to stay committed to continuously improving the pipeline. We found it helpful to sync up regularly with progress updates so that everyone stayed on the same page throughout the pipeline design and development processes.

The next challenge appeared during the pipeline implementation process. While we were migrating our data to a container-based platform, we discovered that many of the containerized releases of different software needed in our pipeline lacked documentation. To ensure that all the knowledge we gained throughout the design, development, and implementation processes was shared by the entire team, we found it necessary to write a large amount of our own documentation to serve as a reference throughout the process.

A final challenge was to overcome a tendency to stick with a working process that is minimally feasible, but that fails to benefit from modern process approaches and tooling. It can be easy to settle into the mindset of “this works for us” and “we’ve always done it this way” and fail to make the implementation of proven principles and practices a priority. Complexity and the cost of initial setup can be a major barrier to change. Initially, we had to come to terms with the effort of creating our own custom container images that had the same functionality as an existing, working system. At that time, we questioned whether this extra effort was even necessary at all. However, it became clear that switching to containers significantly reduced the complexity of automatically deploying the software in our environment, and that reduction in complexity allowed the time and cognitive space for the addition of extensive automated testing of the upgrade process and the functionality of the upgraded system.

Now, instead of manually performing all the tests required to ensure the upgraded system functions correctly, the engineers are alerted only when an automated test fails and requires intervention. It is important to consider the various organizational barriers that teams might run into while implementing complex pipelines.

Managing Technical Debt and Other Decisions When Automating Your Software Delivery Workflow

When making the decision to automate a major part of your software delivery workflow, it is important to develop metrics that demonstrate benefits to the organization and justify the investment of upfront time and effort into crafting and implementing all the required tests, learning the new workflow, and configuring the pipeline. In our experimentation, we judged that it was a highly worthwhile investment to make the change.

Modern CI/CD tools and practices are some of the best ways to help combat technical debt. The automation pipelines that we implemented have saved countless hours for engineers, and we expect they will continue to do so over years of operation. By automating the setup and testing stage for updates, engineers can deploy the latest versions of software more quickly and with more confidence. This allows our team to stay up to date on software releases to better support our customers’ needs and help them stay current on security patches. Our team is able to use the newly freed-up time to work on other research and projects that improve the capabilities of the DoD warfighter.