We interact with LLMs every single day.

We write prompts, paste documents, continue long conversations, and expect the model to remember what we said earlier. When it does, we move on. When it doesn't, we repeat ourselves or assume something went wrong.

What most people rarely think about is that every response is constrained by something called the context window. It quietly decides how much of your prompt the model can see, how long a conversation stays coherent, and why older information suddenly drops out.

Every large language model has a context window, yet most users never learn what it is or why it matters. Still, it plays a critical role in whether a model can handle short chats, long documents, or complex multi-step tasks.

In this article, we'll explore how the context window works, how it differs across popular LLMs, and why understanding it changes the way you prompt, choose, and use language models.

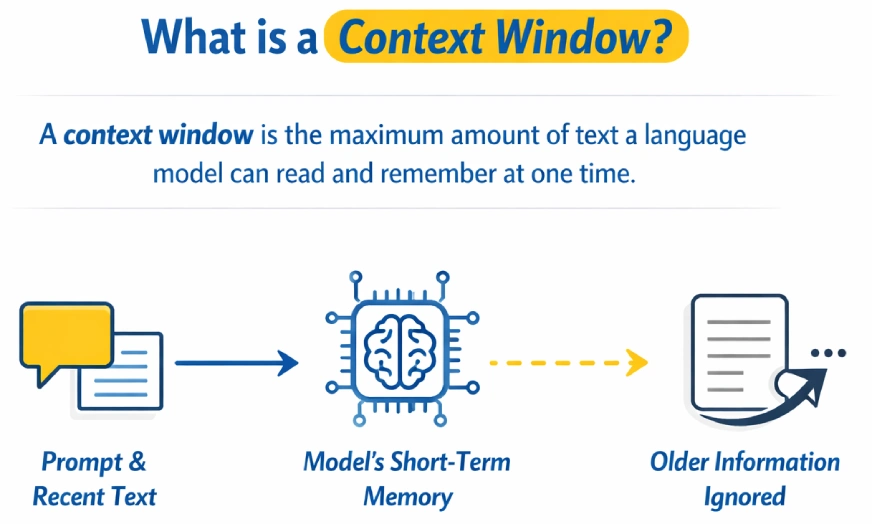

What Is a Context Window?

A context window is the maximum amount of text, measured in tokens, that a language model can read and keep in view at one time while generating a response. It acts as the model's short-term memory, covering the prompt and the recent conversation. Once the limit is exceeded, older information is cut off or ignored.
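To make "older information drops out" concrete, here is a minimal sketch of sliding-window truncation. It assumes whitespace-split words as a stand-in for tokens (real tokenizers split text differently), and `reserve_for_output` is an illustrative parameter reflecting that many APIs count the generated reply against the same window. Real applications may instead summarize old turns or reject over-long requests.

```python
def visible_tokens(tokens: list[str], window: int, reserve_for_output: int = 0) -> list[str]:
    """Return the tokens that still fit in a context window of `window` tokens.

    Sliding-window truncation: the most recent tokens are kept and the oldest
    fall out first. `reserve_for_output` (illustrative) holds back room for
    the model's reply, assuming input and output share one window.
    """
    budget = max(window - reserve_for_output, 0)
    if budget == 0:
        return []  # tokens[-0:] would return everything, so handle 0 explicitly
    return tokens[-budget:]


# Assumption: whitespace-split words stand in for real tokenizer output.
history = "the deadline is Friday please remind me later".split()
print(visible_tokens(history, window=6, reserve_for_output=2))
# prints ['please', 'remind', 'me', 'later']: "Friday" has fallen out of view
```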

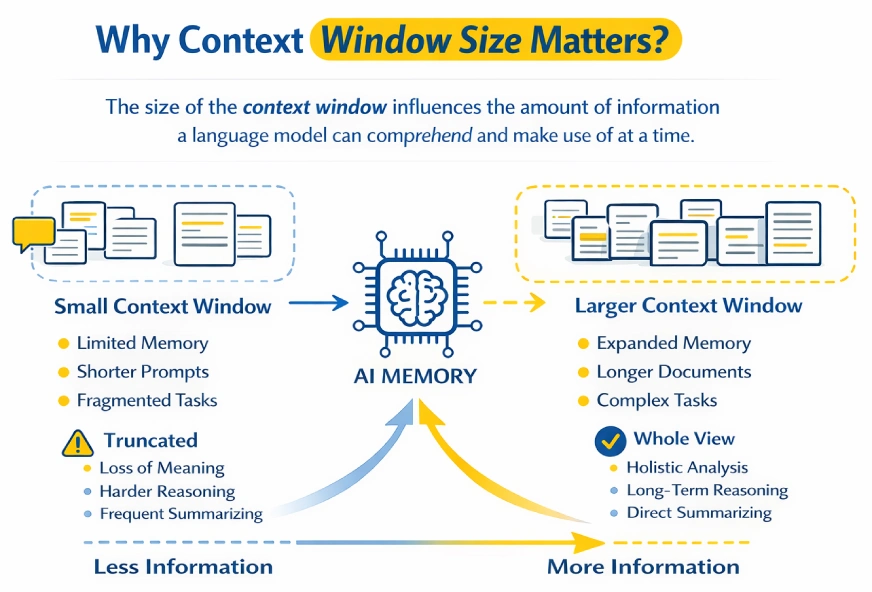

Why Does Context Window Size Matter?

The context window determines how much information a language model can process and reason over at one time.

Smaller context windows force prompts or documents to be truncated, often breaking continuity and losing important details. Larger context windows allow the model to hold more information at once, making it easier to reason over long conversations, documents, or codebases.

Think of the context window as the model's working memory. Anything outside the current prompt is effectively forgotten. Larger windows help preserve multi-step reasoning and long dialogues, while smaller windows cause earlier information to fade quickly.

Tasks like analyzing long reports or summarizing large documents in a single pass become possible with larger context windows. However, they come with trade-offs: higher computational cost, slower responses, and potential noise if irrelevant information is included.
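One reason long inputs are expensive: in standard dense self-attention, every token is compared with every other token, so the score matrix grows with the square of the sequence length. The sketch below is idealized arithmetic only; it ignores causal masking (which roughly halves the work), the rest of the network, and the optimized or sparse attention variants that production models may use, so it is not a latency prediction.

```python
def attention_score_entries(seq_len: int, num_heads: int = 1, num_layers: int = 1) -> int:
    """Number of query-key scores computed by standard dense self-attention."""
    # Each head in each layer scores every token against every token: n * n.
    return seq_len * seq_len * num_heads * num_layers


def relative_attention_cost(short_len: int, long_len: int) -> float:
    """How many times more attention scores the longer input needs."""
    return attention_score_entries(long_len) / attention_score_entries(short_len)


if __name__ == "__main__":
    # 4,096 -> 128,000 tokens is about 31x more input but ~977x more scores.
    ratio = relative_attention_cost(4_096, 128_000)
    print(f"{ratio:.0f}x more attention scores")  # prints: 977x more attention scores
```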

The secret is stability. Present sufficient context to floor the duty, however maintain inputs centered and related.


Context Window Sizes in Different LLMs

Context window sizes vary widely across large language models and have expanded rapidly with newer generations. Early models were limited to a few thousand tokens, restricting them to short prompts and small documents.

Modern models support much larger context windows, enabling long-form reasoning, document-level analysis, and extended multi-turn conversations within a single interaction. This increase has significantly improved models' ability to maintain coherence across complex tasks.

The table below summarizes the commonly reported context window sizes for popular model families from OpenAI, Anthropic, Google, Meta, and Moonshot AI.

Examples of Context Window Sizes

| Model | Organization | Context Window Size | Notes |
|---|---|---|---|
| GPT-3 | OpenAI | ~2,048 tokens | Early generation; very limited context |
| GPT-3.5 | OpenAI | ~4,096 tokens | Standard early ChatGPT limit |
| GPT-4 (baseline) | OpenAI | Up to ~32,768 tokens | Larger context than GPT-3.5 |
| GPT-4o | OpenAI | 128,000 tokens | Widely used long-context model |
| GPT-4.1 | OpenAI | ~1,000,000+ tokens | Massive extended-context support |
| GPT-5.1 | OpenAI | ~128K–196K tokens | Next-gen flagship with improved reasoning |
| Claude 3.5 / 3.7 Sonnet | Anthropic | 200,000 tokens | Strong balance of speed and long context |
| Claude 4.5 Sonnet | Anthropic | ~200,000 tokens | Improved reasoning with a large window |
| Claude 4.5 Opus | Anthropic | ~200,000 tokens | Highest-end Claude model |
| Gemini 3 / Gemini 3 Pro | Google DeepMind | ~1,000,000 tokens | Industry-leading context length |
| Kimi K2 | Moonshot AI | ~256,000 tokens | Large context, strong long-form reasoning |
| LLaMA 3.1 | Meta | ~128,000 tokens | Extended context for open-source models |
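A quick way to use a table like this is to check which models can hold a given job. The sketch below copies a few of the commonly reported values above (they change between model versions, so confirm them in provider documentation), assumes you already know the prompt's token count, and assumes the prompt and the reply share one window, which is common but not universal.

```python
# Approximate context windows copied from the table above. Reported values
# change between model versions, so check the provider's documentation.
CONTEXT_WINDOWS = {
    "GPT-3.5": 4_096,
    "GPT-4o": 128_000,
    "LLaMA 3.1": 128_000,
    "Claude 4.5 Sonnet": 200_000,
    "Gemini 3 Pro": 1_000_000,
}


def models_that_fit(prompt_tokens: int, max_output_tokens: int) -> list[str]:
    """List models whose window can hold the prompt plus the expected reply.

    Assumes input and output share one window (common, but not universal).
    """
    needed = prompt_tokens + max_output_tokens
    return sorted(name for name, window in CONTEXT_WINDOWS.items() if window >= needed)


# A ~150,000-token contract plus room for a 4,000-token answer:
print(models_that_fit(150_000, 4_000))  # prints ['Claude 4.5 Sonnet', 'Gemini 3 Pro']
```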

Benefits and Trade-offs of Larger Context Windows

Benefits

- Supports much longer inputs, whether full documents, long conversations, or large codebases.

- Improves continuity across multi-step reasoning and extended conversations.

- Makes it possible to perform demanding tasks such as analyzing long reports or entire books at once.

- Reduces the need for chunking, summarizing, or external retrieval systems.

- Helps ground responses in provided reference text, which can reduce hallucinations.

Trade-offs

- Increases cost, latency, and API usage.

- Is inefficient when a large context is used where it isn't needed.

- Delivers diminishing returns when the prompt contains irrelevant or noisy information.

- Can cause confusion or inconsistency when long inputs contain conflicting details.

- May suffer from attention problems in very long prompts, e.g. information in the middle being overlooked ("lost in the middle").
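One commonly suggested mitigation for the "lost in the middle" effect (models have been reported to use information at the start and end of a long prompt more reliably than information in the middle) is to place the most relevant material at both edges. This sketch assumes the chunks are already ranked from most to least relevant, which is not shown here; it is a heuristic, not a guaranteed fix.

```python
def reorder_for_long_context(chunks_by_relevance: list[str]) -> list[str]:
    """Put the most relevant chunks at the start and end, the least relevant in the middle.

    Assumes `chunks_by_relevance` is sorted from most to least relevant.
    """
    front: list[str] = []
    back: list[str] = []
    for i, chunk in enumerate(chunks_by_relevance):
        # Alternate: 1st -> front, 2nd -> back, 3rd -> front, ...
        if i % 2 == 0:
            front.append(chunk)
        else:
            back.append(chunk)
    # Reverse the back half so the 2nd most relevant chunk lands at the very end.
    return front + back[::-1]


# "A" is most relevant, "E" least relevant.
print(reorder_for_long_context(["A", "B", "C", "D", "E"]))  # prints ['A', 'C', 'E', 'D', 'B']
```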

In practice, larger context windows are powerful, but they are most effective when combined with focused, high-quality input rather than filled to the maximum by default.


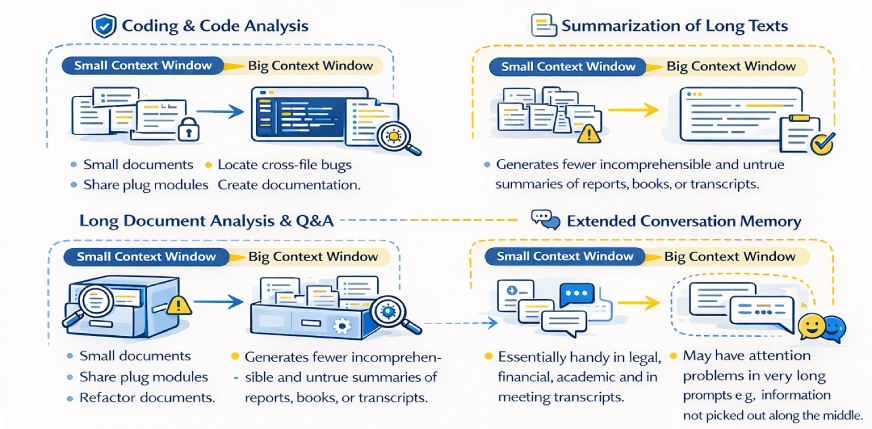

How Does the Context Window Affect Key Use Cases?

Because the context window limits how much information a model can see at a given time, it strongly influences what the model can accomplish without additional tools or workarounds.

Coding and Code Analysis

- Small context windows confine the model to a single file or a handful of functions at a time.

- The model is unaware of the wider codebase unless that code is provided.

- Large context windows allow multiple files, or even whole repositories, to be viewed at once.

- Enables whole-system tasks such as refactoring modules, locating cross-file bugs, and generating documentation.

- Removes the need for manual chunking or repeatedly swapping code snippets in and out.

- Very large projects still require selectivity to avoid noise and wasted context.
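For projects too large even for a big window, one way to stay selective is to rank files by relevance and greedily pack them into a token budget. This sketch assumes each file already has a relevance score (hypothetical here; it could come from keyword search or embeddings) and uses word counts as a crude token proxy. Greedy packing is simple but not optimal: a smaller, slightly less relevant file can be chosen over a larger one that would not fit.

```python
from dataclasses import dataclass


@dataclass
class SourceFile:
    path: str
    text: str
    relevance: float  # hypothetical score, e.g. from keyword search or embeddings


def count_tokens(text: str) -> int:
    # Crude proxy: whitespace-separated words. Real tokenizers count differently.
    return len(text.split())


def pack_files(files: list[SourceFile], budget: int) -> tuple[list[str], int]:
    """Greedily add the most relevant files that still fit in the token budget."""
    selected: list[str] = []
    used = 0
    for sf in sorted(files, key=lambda f: f.relevance, reverse=True):
        cost = count_tokens(sf.text)
        if used + cost <= budget:  # skip files that would overflow the window
            selected.append(sf.path)
            used += cost
    return selected, used
```

Calling `pack_files(files, budget)` returns the chosen paths and the tokens they use, which leaves the remaining budget for instructions and the model's answer.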

Summarization of Long Texts

- Small windows force documents to be split and summarized in fragments.

- Piecemeal summaries tend to lose global structure and important relationships.

- Large context windows enable a single-pass summary of entire documents.

- Produces more coherent and faithful summaries of reports, books, or transcripts.

- Especially useful for legal, financial, and academic documents, as well as meeting transcripts.

- The trade-offs are higher cost and slower processing for large inputs.
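When a document does not fit, the fragment-by-fragment approach above is often implemented as a "map-reduce" summary: split the text into overlapping chunks, summarize each, then summarize the summaries. In this sketch `summarize` is a placeholder for whatever model call you use, and the token list is assumed to come from your tokenizer.

```python
from typing import Callable


def chunk_tokens(tokens: list[str], chunk_size: int, overlap: int) -> list[list[str]]:
    """Split tokens into windows of `chunk_size` that share `overlap` tokens."""
    if chunk_size <= 0 or not (0 <= overlap < chunk_size):
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this chunk already reaches the end of the document
    return chunks


def map_reduce_summary(tokens: list[str], chunk_size: int, overlap: int,
                       summarize: Callable[[str], str]) -> str:
    # "Map": summarize each chunk independently; each call must fit the window.
    partials = [summarize(" ".join(chunk)) for chunk in chunk_tokens(tokens, chunk_size, overlap)]
    # "Reduce": summarize the concatenated partial summaries.
    return summarize("\n".join(partials))
```

The overlap keeps text that straddles a boundary visible in both neighboring chunks. If the joined partial summaries are themselves too long for the window, the reduce step can be applied recursively.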

Long Document Analysis and Q&A

- Small windows require retrieval systems or manual section selection.

- Large windows make it possible to query full documents, or several documents at once.

- Also allows cross-referencing information located in distant sections.

- Applicable to contracts, research papers, policies, and knowledge bases.

- Simplifies pipelines by avoiding search and chunking logic.

- Accuracy can improve, but it still suffers when instructions are ambiguous or the supplied input is irrelevant.
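For the small-window case, the retrieval step can be sketched as: score each chunk by how many words it shares with the question and pass only the top k to the model. Production systems typically use BM25 or embedding similarity instead; `top_k_chunks` and the simple tokenizer here are illustrative assumptions, not a particular library's API.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of letters/digits; a deliberately simple tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def top_k_chunks(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most words with the question.

    Ties are broken in favor of the chunk that appears earlier in the document.
    """
    query_words = set(tokenize(question))
    scored = []
    for index, chunk in enumerate(chunks):
        score = sum(1 for word in tokenize(chunk) if word in query_words)
        scored.append((score, -index, chunk))
    scored.sort(reverse=True)  # highest score first; earlier chunk wins ties
    return [chunk for _, _, chunk in scored[:k]]


contract = [
    "The termination clause requires 30 days notice.",
    "Payment is due monthly.",
    "Notice must be given in writing.",
]
print(top_k_chunks("What notice does termination require?", contract))
```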

Extended Conversation Memory

- Chatbots with small windows forget earlier parts of a long conversation.

- As the context fades, the user has to repeat or restate information.

- Large context windows retain a longer conversation history.

- Enables less robotic, more natural, and more personal interactions.

- Well suited to support chats, collaborative writing, and extended brainstorming.

- Better memory comes with greater token usage and cost in long chats.
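Chat applications with limited windows commonly keep the system prompt pinned and drop the oldest turns first, which is exactly why early details get forgotten. A minimal sketch, assuming plain-string turns and whitespace word counts as a stand-in for real token counts; `trim_history` is a hypothetical helper, not a specific framework's API.

```python
def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words approximate tokens.
    return len(text.split())


def trim_history(system_prompt: str, turns: list[str], budget: int) -> list[str]:
    """Keep the system prompt plus as many of the newest turns as fit in `budget`."""
    remaining = budget - count_tokens(system_prompt)
    if remaining < 0:
        raise ValueError("the system prompt alone exceeds the budget")
    kept: list[str] = []
    for turn in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(turn)
        if cost > remaining:
            break  # this turn and everything older is dropped
        kept.append(turn)
        remaining -= cost
    return [system_prompt] + kept[::-1]  # restore chronological order


history = ["my name is Priya", "nice to meet you", "what is a context window"]
print(trim_history("be brief", history, budget=11))
# prints ['be brief', 'nice to meet you', 'what is a context window']: the name is gone
```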


Conclusion

The context window defines how much information a language model can process at once and acts as its short-term memory. Models with larger context windows handle long conversations, large documents, and complex code more effectively, while smaller windows struggle as inputs grow.

However, larger context windows also increase cost and latency, and they don't help if the input contains unnecessary information. The right context size depends on the task: small windows work well for quick or simple tasks, while larger ones are better for deep analysis and extended reasoning.

Next time you write a prompt, include only the context the model actually needs. More context is powerful, but focused context is what works best.

Frequently Asked Questions

Q. What happens when the context window is exceeded?

A. When the context window is exceeded, the model starts ignoring older parts of the input. This can cause it to forget earlier instructions, lose track of the conversation, or produce inconsistent responses.

Q. Does a larger context window always produce better results?

A. No. While larger context windows allow the model to process more information, they don't automatically improve output quality. If the input contains irrelevant or noisy information, performance can actually degrade.

Q. How do I know whether I need a larger context window?

A. If your task involves long documents, extended conversations, multi-file codebases, or complex multi-step reasoning, a larger context window is helpful. For short questions, simple prompts, or quick tasks, smaller windows are usually sufficient.

Q. Do larger context windows increase cost?

A. Yes. Filling a larger context window increases token usage, which leads to higher costs and slower response times. This is why using the maximum available context by default is often inefficient.
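The cost side is simple arithmetic under per-token pricing, which many providers use with separate input and output rates. The prices below are hypothetical placeholders, not any provider's actual rates. Note also that with many chat APIs the full history is resent on every turn, so these input costs recur as a conversation grows.

```python
def request_cost(input_tokens: int, output_tokens: int,
                 usd_per_million_input: float, usd_per_million_output: float) -> float:
    """Cost in USD of one request under per-million-token pricing."""
    return (input_tokens * usd_per_million_input
            + output_tokens * usd_per_million_output) / 1_000_000


# Hypothetical prices: $2 per million input tokens, $8 per million output tokens.
focused = request_cost(5_000, 1_000, 2.0, 8.0)   # trimmed, relevant context
full = request_cost(150_000, 1_000, 2.0, 8.0)    # everything pasted in
print(f"focused: ${focused:.3f}, full: ${full:.3f}")  # prints: focused: $0.018, full: $0.308
```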
