Large AI models are scaling quickly, with bigger architectures and longer training runs becoming the norm. As models grow, however, a fundamental training stability issue has remained unresolved. DeepSeek mHC directly addresses this problem by rethinking how residual connections behave at scale. This article explains DeepSeek mHC (Manifold-Constrained Hyper-Connections) and shows how it improves large language model training stability and performance without adding unnecessary architectural complexity.

The Hidden Problem With Residual and Hyper-Connections

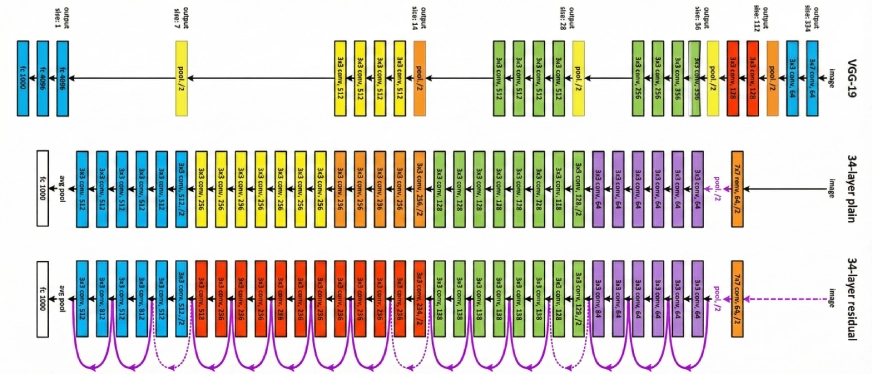

Residual connections have been a core building block of deep learning since the release of ResNet in 2016. They allow networks to create shortcut paths, enabling information to flow directly through layers instead of being relearned at every step. In simple terms, they act like express lanes on a highway, making deep networks easier to train.

This approach worked well for years. But as models scaled from millions to billions, and now hundreds of billions of parameters, its limitations became clear. To push performance further, researchers introduced Hyper-Connections (HC), effectively widening these information highways by adding more paths. Performance improved noticeably, but stability did not.

Training became highly unstable. Models would train normally and then suddenly collapse around a specific step, with sharp loss spikes and exploding gradients. For teams training large language models, this kind of failure can mean wasting huge amounts of compute, time, and resources.

What Is Manifold-Constrained Hyper-Connections (mHC)?

mHC is a general framework that projects the residual connection space of HC onto a specific manifold to restore the identity mapping property, while also incorporating rigorous infrastructure optimization to stay efficient.

Empirical tests show that mHC is effective for large-scale training, delivering not only clear performance gains but also excellent scalability. We expect mHC, as a flexible and practical extension of HC, to aid the understanding of topological architecture design and to suggest new directions for the development of foundation models.

What Makes mHC Different?

DeepSeek’s approach is not just clever, it’s brilliant, because it makes you think “Why has no one thought of this before?” They kept Hyper-Connections but constrained them with a precise mathematical method.

This is the technical part (don’t give up on me, it’ll be worth your while to understand): Standard residual connections preserve what is called “identity mapping”. Picture it as a law of conservation of energy: signals traveling through the network keep the same power level. When HC widened the residual stream and combined it with learnable connection patterns, it unintentionally broke this property.

DeepSeek’s researchers realized that HC’s composite mappings, essentially what you get when you keep stacking these connections layer upon layer, were amplifying signals by factors of 3000 or even more. Picture a conversation where, every time someone passes on your message, the whole room yells it 3000 times louder. That is nothing but chaos.

mHC solves the problem by projecting these connection matrices onto the Birkhoff polytope, the set of matrices with non-negative entries in which every row and every column sums to 1. It may seem theoretical, but in practice it makes the network treat signal propagation as a convex combination of features. No more explosions, no more signals disappearing entirely.

The Architecture: How mHC Actually Works

Let’s explore the details of how DeepSeek changed the connections inside the model. The design relies on three main mappings that determine how information flows:

The Three-Mapping System

In Hyper-Connections, three learnable matrices perform different tasks:

- H_pre: Takes the information from the expanded residual stream into the layer

- H_post: Sends the output of the layer back to the stream

- H_res: Mixes and updates the information within the stream itself

Think of it as a freeway system where H_pre is the entrance ramp, H_post is the exit ramp, and H_res is the traffic manager directing flow among the lanes.
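
To make the three roles concrete, here is a minimal NumPy sketch of one update on a widened residual stream. The update rule used (mix the lanes with H_res, then add the layer output scattered by H_post) is an assumed form for illustration, and the function name `hyper_connection_step`, the shapes, and the `layer_fn` argument are hypothetical rather than taken from DeepSeek's code.

```python
import numpy as np

def hyper_connection_step(x, h_pre, h_post, h_res, layer_fn):
    """One update on an n-lane residual stream (illustrative form only).

    x:      (n, C) widened residual stream with n lanes of width C
    h_pre:  (n,)   weights that read the lanes into the layer input
    h_post: (n,)   weights that write the layer output back to the lanes
    h_res:  (n, n) matrix that mixes the lanes with each other
    """
    layer_in = h_pre @ x            # (C,) weighted read across all lanes
    layer_out = layer_fn(layer_in)  # (C,) any shape-preserving sublayer
    # Mix the existing lanes, then add the layer output to each lane.
    return h_res @ x + np.outer(h_post, layer_out)

n, C = 4, 8
rng = np.random.default_rng(0)
x = rng.normal(size=(n, C))
out = hyper_connection_step(
    x, np.full(n, 1.0 / n), np.ones(n), np.eye(n), np.tanh
)

# Sanity check: with a single lane and all mappings equal to 1, the step
# reduces to the ordinary residual connection x + f(x).
x1 = rng.normal(size=(1, C))
plain = hyper_connection_step(x1, np.ones(1), np.ones(1), np.eye(1), np.tanh)
```

With one lane the sketch collapses to a standard residual block, which is one way to see Hyper-Connections as a generalization of the shortcut path.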

One of the findings of DeepSeek’s ablation studies is especially interesting: H_res (the mapping applied to the residuals) is the main contributor to the performance increase. They turned it off, keeping only the pre and post mappings, and performance dropped dramatically. This is logical: the heart of the method is letting features from different depths interact and exchange information.

The Manifold Constraint

This is the point where mHC starts to deviate from standard HC. Rather than allowing H_res to be chosen arbitrarily, they constrain it to be doubly stochastic, meaning its entries are non-negative and every row and every column sums to 1.

Why does this matter? There are three key reasons:

- Norms stay bounded: The spectral norm is at most 1, so gradients cannot explode.

- Closure under composition: Multiplying two doubly stochastic matrices yields another doubly stochastic matrix; hence, the network remains stable at any depth.

- A geometric interpretation: The matrices lie in the Birkhoff polytope, which is the convex hull of all permutation matrices. Put differently, the network learns weighted combinations of routing patterns, each of which moves information between lanes in a different way.
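
The properties listed above are easy to check numerically. The sketch below builds doubly stochastic matrices as convex combinations of random permutation matrices (which is exactly the Birkhoff polytope picture), multiplies two of them, and inspects the result. The helper names `random_doubly_stochastic` and `is_doubly_stochastic` are made up for this illustration.

```python
import numpy as np

def random_doubly_stochastic(n, k, rng):
    """Convex combination of k random n x n permutation matrices."""
    weights = rng.dirichlet(np.ones(k))  # non-negative, sums to 1
    perms = [np.eye(n)[rng.permutation(n)] for _ in range(k)]
    return sum(w * p for w, p in zip(weights, perms))

def is_doubly_stochastic(m, tol=1e-9):
    """True if entries are non-negative and all rows/columns sum to 1."""
    return bool(
        (m >= -tol).all()
        and np.allclose(m.sum(axis=0), 1.0, atol=tol)
        and np.allclose(m.sum(axis=1), 1.0, atol=tol)
    )

rng = np.random.default_rng(0)
A = random_doubly_stochastic(4, 6, rng)
B = random_doubly_stochastic(4, 6, rng)
P = A @ B  # composition of two constrained mappings

closed = is_doubly_stochastic(P)       # closure under composition
spectral_norm = np.linalg.norm(P, 2)   # largest singular value
print(closed, spectral_norm)
```

The spectral norm of a doubly stochastic matrix cannot exceed 1, so stacking any number of such mappings cannot amplify a signal in the way unconstrained matrices can.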

The Sinkhorn-Knopp algorithm is used to enforce this constraint. It is an iterative method that alternately normalizes rows and columns until the desired accuracy is reached. In the experiments, 20 iterations were found to give a good approximation without excessive computation.
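
As a rough illustration of the alternating normalization described above, here is a minimal NumPy version of Sinkhorn-Knopp. Exponentiating the raw scores to make them positive before normalizing is an assumption of this sketch, and the function name `sinkhorn_knopp` and its arguments are illustrative, not DeepSeek's kernel.

```python
import numpy as np

def sinkhorn_knopp(logits, n_iters=20):
    """Approximately project a square score matrix onto the doubly
    stochastic matrices by alternating row and column normalization."""
    # Exponentiate so every entry is strictly positive (sketch assumption);
    # subtracting the max only improves numerical stability.
    m = np.exp(logits - logits.max())
    for _ in range(n_iters):
        m = m / m.sum(axis=1, keepdims=True)  # make each row sum to 1
        m = m / m.sum(axis=0, keepdims=True)  # make each column sum to 1
    return m

rng = np.random.default_rng(0)
H = sinkhorn_knopp(rng.normal(size=(4, 4)))

row_err = np.abs(H.sum(axis=1) - 1.0).max()
col_err = np.abs(H.sum(axis=0) - 1.0).max()
print(row_err, col_err)
```

Because the loop ends on a column normalization, the columns sum to 1 up to floating-point error, while the rows are only approximately normalized. The remaining row error is what shrinks as the iteration count grows.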

Parameterization Details

The implementation is clever. Instead of working on single feature vectors, mHC flattens the whole n×C hidden matrix into one vector. This allows the full context information to be used when computing the dynamic mappings.

The final constrained mappings apply:

- Sigmoid activation for H_pre and H_post (thus guaranteeing non-negativity)

- Sinkhorn-Knopp projection for H_res (thereby enforcing double stochasticity)

- Small initialization values (α = 0.01) for gating factors, so training starts conservatively

This configuration prevents signal cancellation caused by interactions between positive and negative coefficients, and at the same time keeps the crucial identity mapping property.

Scaling Behavior: Does It Hold Up?
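
The pieces above can be put together in a small sketch. Everything beyond the three listed ingredients is an assumption made for illustration: the parameter names (`w_pre`, `b_res`, and so on), the linear maps applied to the flattened stream, the way α scales the dynamic term, and the diagonal bias that starts H_res near the identity are all hypothetical and not taken from DeepSeek's implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sinkhorn_knopp(logits, n_iters=20):
    """Alternating row/column normalization of exp(logits)."""
    m = np.exp(logits - logits.max())
    for _ in range(n_iters):
        m = m / m.sum(axis=1, keepdims=True)
        m = m / m.sum(axis=0, keepdims=True)
    return m

def constrained_mappings(x, p, alpha=0.01):
    """Compute (h_pre, h_post, h_res) from the whole (n, C) stream."""
    n, _ = x.shape
    flat = x.reshape(-1)  # flatten the n x C stream into one context vector
    # Hypothetical form: small gated dynamic term plus a static bias.
    pre_logits = alpha * (p["w_pre"] @ flat) + p["b_pre"]
    post_logits = alpha * (p["w_post"] @ flat) + p["b_post"]
    res_logits = alpha * (p["w_res"] @ flat).reshape(n, n) + p["b_res"]
    return (
        sigmoid(pre_logits),         # non-negative read weights
        sigmoid(post_logits),        # non-negative write weights
        sinkhorn_knopp(res_logits),  # approximately doubly stochastic mixer
    )

n, C = 4, 8
rng = np.random.default_rng(0)
params = {
    "w_pre": rng.normal(size=(n, n * C)),
    "w_post": rng.normal(size=(n, n * C)),
    "w_res": rng.normal(size=(n * n, n * C)),
    "b_pre": np.zeros(n),
    "b_post": np.zeros(n),
    "b_res": 4.0 * np.eye(n),  # assumed bias favoring the identity at init
}
h_pre, h_post, h_res = constrained_mappings(rng.normal(size=(n, C)), params)
```

With a small α the input-dependent term barely moves the mappings at initialization, so in this sketch the layer starts close to a plain residual connection and only gradually learns to route between lanes.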

One of the most impressive things is how the benefits of mHC scale. DeepSeek ran their experiments along three different dimensions:

- Compute Scaling: They trained models with 3B, 9B, and 27B parameters on proportional data. The performance advantage stayed the same or even increased slightly at higher compute budgets. This is remarkable because many architectural tricks that work at small scale stop working when scaled up.

- Token Scaling: They monitored performance throughout the training of their 3B model on 1 trillion tokens. The loss improvement held steady from very early training through convergence, indicating that mHC’s benefits are not limited to the early-training period.

- Propagation Analysis: Do you recall those 3000x signal amplification factors in vanilla HC? With mHC, the maximum gain magnitude was reduced to around 1.6, roughly three orders of magnitude lower. Even after composing 60+ layers, the forward and backward signal gains remained well controlled.
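
The propagation result can be imitated with a toy experiment. The sketch below composes 60 lane-mixing matrices twice, once unconstrained and once doubly stochastic, and reports a gain for each composite. The gain definition (largest absolute row or column sum) and the way the unconstrained matrices are sampled are assumptions of this toy setup, not DeepSeek's measurement protocol, so the numbers will not match the paper's.

```python
import numpy as np

def random_doubly_stochastic(n, k, rng):
    """Convex combination of k random n x n permutation matrices."""
    weights = rng.dirichlet(np.ones(k))
    perms = [np.eye(n)[rng.permutation(n)] for _ in range(k)]
    return sum(w * p for w, p in zip(weights, perms))

def max_gain(m):
    """Largest absolute row sum (forward) or column sum (backward)."""
    a = np.abs(m)
    return max(a.sum(axis=1).max(), a.sum(axis=0).max())

rng = np.random.default_rng(0)
n, depth = 4, 60

free = np.eye(n)         # composite of unconstrained mixing matrices
constrained = np.eye(n)  # composite of doubly stochastic mixing matrices
for _ in range(depth):
    # Unconstrained toy layer: identity plus small positive mixing weights,
    # so every row sums to slightly more than 1.
    free = (np.eye(n) + 0.07 * rng.random((n, n))) @ free
    constrained = random_doubly_stochastic(n, 6, rng) @ constrained

free_gain = max_gain(free)
constrained_gain = max_gain(constrained)
print(free_gain, constrained_gain)
```

Each unconstrained layer adds only a little excess gain, but the excess compounds multiplicatively with depth. The constrained composite stays doubly stochastic, so its gain remains at 1 in this idealized setting where the constraint holds exactly.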

Performance Benchmarks

DeepSeek evaluated mHC on models ranging from 3 billion to 27 billion parameters, and the stability gains were clearly visible:

- Training loss was smooth throughout the entire run, with no sudden spikes

- Gradient norms stayed within the same range, in contrast to HC, which showed erratic behaviour

- Most importantly, performance improved consistently across multiple benchmarks

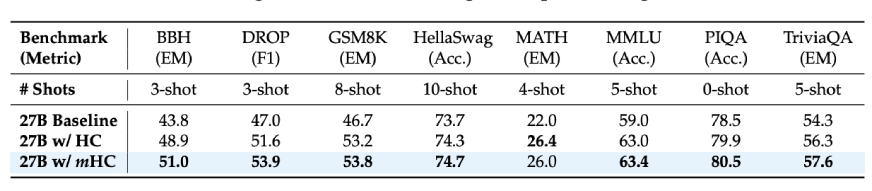

If we consider the downstream task results for the 27B model:

- BBH reasoning tasks: 51.0% (vs. 43.8% baseline)

- DROP reading comprehension: 53.9% (vs. 47.0% baseline)

- GSM8K math problems: 53.8% (vs. 46.7% baseline)

- MMLU knowledge: 63.4% (vs. 59.0% baseline)

These are not minor improvements: based on the numbers above, the gains are roughly 7 points on BBH, DROP, and GSM8K, and about 4 points on MMLU. Moreover, the improvements held not only for the larger models but also across longer training runs, in line with the scaling results described earlier.


Conclusion

If you are working on or training large language models, mHC is something you should definitely consider. It is one of those rare papers that identifies a real issue, presents a mathematically sound solution, and shows that it works at large scale.

The key takeaways are:

- Increasing residual stream width leads to better performance; however, naive methods cause instability

- Restricting interactions to doubly stochastic matrices retains the identity mapping property

- If done right, the overhead can be barely noticeable

- The benefits carry over to models with tens of billions of parameters

Moreover, mHC is a reminder that architectural design is still a crucial factor. The strategy of simply throwing more compute and data at the problem cannot last forever. There will be times when it is necessary to take a step back, understand why something fails at large scale, and fix it properly.

And to be honest, this is the kind of research I like most. Not small tweaks, but fundamental changes that make the whole field a little more robust.
