Courtesy: Synopsys

Even as we push ahead into new frontiers of technological innovation, researchers are revisiting some of the most fundamental ideas in the history of computing.

Alan Turing began theorizing about the potential capabilities of digital computers in the late 1930s, initially exploring computation and later the possibility of modeling natural processes. By the 1950s, he noted that simulating quantum phenomena, though theoretically possible, would demand resources far beyond practical limits, even with future advances.

These were the initial seeds of what we now call quantum computing. The challenge of simulating quantum systems with classical computers eventually led to new explorations of whether it would be possible to create computers based on quantum mechanics itself.

For decades, these investigations were confined to the realms of theoretical physics and abstract mathematics, an ambitious idea explored mostly on chalkboards and in scholarly journals. But today, quantum computing R&D is rapidly shifting to a new area of focus: engineering.

Physics research continues, of course, but the questions are evolving. Rather than debating whether quantum computing can outpace classical methods (it can, in principle), scientists and engineers are now focused on making it real: What does it take to build a viable quantum supercomputer?

Theoretical and applied physics alone cannot answer that question, and many practical aspects remain unsettled. What are the optimal materials and physical technologies? What architectures and fabrication methods are needed? And which algorithms and applications will unlock the most potential?

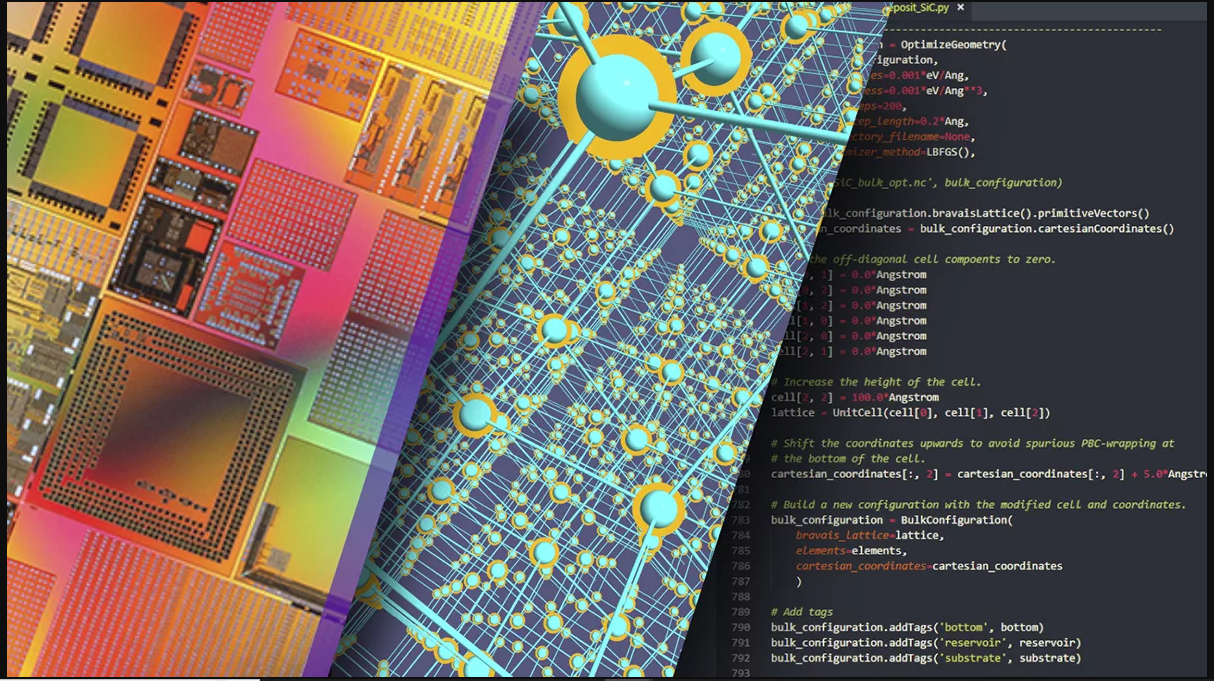

As researchers explore and validate ways to advance quantum computing from speculative science to practical breakthroughs, highly advanced simulation tools, such as those used for chip design, are playing a pivotal role in determining the answers.

Pursuing quantum utility

In many ways, the engineering behind quantum computing presents even more complex challenges than the underlying physics. Producing a limited number of “qubits” (the basic units of information in quantum computing) in a lab is one thing. Building a large-scale, commercially viable quantum supercomputer is quite another.

A comprehensive design must be established. Resource requirements must be determined. The most valuable and feasible applications must be identified. And, ultimately, the toughest question of all must be answered: Will the value generated by the computer outweigh the immense costs of development, maintenance, and operation?

The latest insights were detailed in a recent preprint, “How to Build a Quantum Supercomputer: Scaling from Hundreds to Millions of Qubits” by Mohseni et al. (2024), which I helped co-author alongside Synopsys principal engineer John Sorebo and an extended group of research collaborators.

Increasing quantum computing scale and quality

Today’s quantum computing research is driven by fundamental challenges: scaling up the number of qubits, ensuring their reliability, and improving the accuracy of the operations that link them together. The goal is to produce consistent and useful results across not just hundreds, but thousands and even millions of qubits.

The best “modalities” for achieving this are still up for debate. Superconducting circuits, silicon spins, trapped ions, and photonic systems are all being explored (and, in some cases, combined). Each modality brings its own unique hurdles for controlling and measuring qubits effectively.

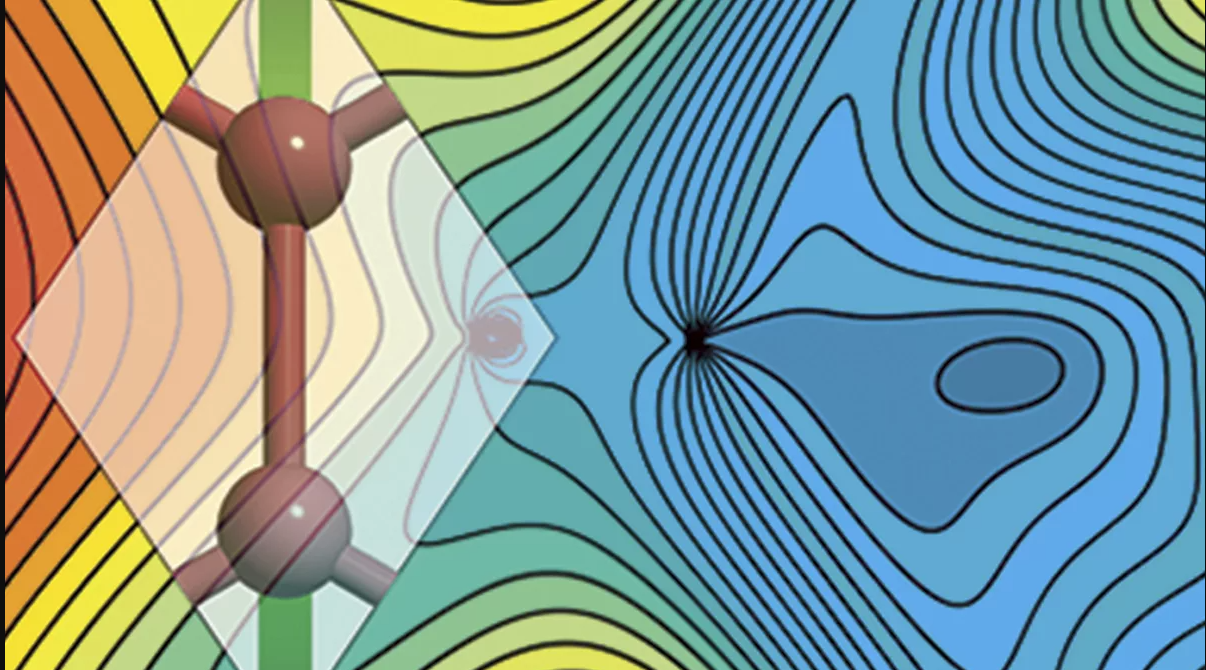

Numerical simulation tools are essential in these investigations, providing critical insights into how different modalities can withstand noise and scale to accommodate more qubits. These tools include:

- QuantumATK for atomic-scale modeling and materials simulations.

- 3D High Frequency Simulation Software (HFSS) for simulating the planar electromagnetic crosstalk between qubits at scale.

- RaptorQu for high-capacity electromagnetic simulation of quantum computing applications.

Advancing quantum computing R&D with numerical simulation

The design of qubit devices, including their controls and interconnects, blends advanced engineering with quantum physics. Researchers must model phenomena ranging from electron confinement and tunneling in nanoscale materials to electromagnetic coupling across complex multilayer structures.

Many issues that are critical for conventional integrated circuit design and atomic-scale fabrication (such as edge roughness, material inhomogeneity, and phonon effects) must also be confronted when working with quantum devices, where even subtle variations can affect system reliability. Numerical simulation plays a crucial role at every stage, helping teams:

- Explore gate geometries.

- Optimize Josephson junction layouts.

- Analyze crosstalk between qubits and losses in superconducting interconnects.

- Investigate material interfaces that impact performance.

By accurately capturing both quantum-mechanical behavior and classical electromagnetic effects, simulation tools allow researchers to evaluate design alternatives before fabrication, shorten iteration cycles, and gain deeper insight into how devices operate under realistic conditions.

Advanced numerical simulation tools such as QuantumATK, HFSS, and RaptorQu are transforming how research groups approach computational modeling. Instead of relying on a patchwork of academic codes, teams can now leverage unified environments, with common data models and consistent interfaces, that support a variety of computational methods. These industry-grade platforms:

- Combine reliable yet flexible software architectures with high-performance computational cores optimized for multi-GPU systems, accessible through Python interfaces that enable programmable extensions and custom workflows.

- Support sophisticated automated workflows in which simulations are run iteratively, and subsequent steps adapt dynamically based on intermediate results.

- Leverage machine learning techniques to accelerate repetitive operations and efficiently handle large sets of simulations, enabling scalable, data-driven research.
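
As a rough illustration of the adaptive workflows described above, the following Python sketch runs a coarse parameter sweep and then narrows the search range around the best result in each round. The function `toy_crosstalk`, the spacing parameter, and the cost formula are hypothetical placeholders, not the interface of any real solver; in practice the placeholder would be replaced by a call into an actual simulation tool.

```python
import math


def toy_crosstalk(spacing_um: float) -> float:
    """Hypothetical stand-in for an expensive simulation run.

    Returns a made-up cost with a single minimum near 42 um; it does not
    model any real device or call any real solver.
    """
    return (spacing_um - 42.0) ** 2 + 1.0


def adaptive_sweep(simulate, low, high, points=5, rounds=6):
    """Sweep a parameter range, then repeatedly zoom in around the best point.

    Each round evaluates `points` evenly spaced values between `low` and
    `high`, and the next round's range is chosen from the intermediate
    results (one grid step on either side of the best point so far).
    """
    best_x, best_y = None, math.inf
    for _ in range(rounds):
        step = (high - low) / (points - 1)
        for i in range(points):
            x = low + i * step
            y = simulate(x)
            if y < best_y:
                best_x, best_y = x, y
        # Adapt the next round to what this round found.
        low, high = best_x - step, best_x + step
    return best_x, best_y


best_spacing, best_cost = adaptive_sweep(toy_crosstalk, 10.0, 100.0)
print(f"best spacing: {best_spacing:.3f} um, cost: {best_cost:.3f}")
```

This zoom-in strategy assumes the cost has a single minimum inside the initial range. A production workflow would more likely hand the loop to an optimizer or a learned surrogate model, but the structure is the same: each step is planned from the results of the previous one.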

Simulation tools like QuantumATK, HFSS, and RaptorQu are not just advancing individual research projects; they’re accelerating the entire field, enabling researchers to test new ideas and scale quantum architectures more efficiently than ever before. With Ansys now part of Synopsys, we’re uniquely positioned to provide end-to-end solutions that address both the design and simulation needs of quantum computing R&D.

Empowering quantum researchers with industry-grade options

Despite the progress in quantum computing research, many teams still rely on disjointed, narrowly scoped open-source simulation software. These tools often require significant customization to support specific research needs and generally lack robust support for modern GPU clusters and machine learning-based simulation speedups. As a result, researchers and companies spend substantial effort adapting and maintaining fragmented workflows, which can limit the scale and impact of their numerical simulations.

In contrast, mature, fully supported commercial simulation software that integrates seamlessly with practical workflows and has been extensively validated in semiconductor manufacturing tasks offers a clear advantage. By leveraging such platforms, researchers are freed to focus on qubit device innovation rather than spending time on infrastructure challenges. This also enables the extension of numerical simulation to more complex and larger-scale problems, supporting rapid iteration and deeper insight.

To advance quantum computing from research to commercial reality, the quantum ecosystem needs reliable, comprehensive numerical simulation software, just as the semiconductor industry relies on established solutions from Synopsys today. Robust, scalable simulation platforms are essential not only for individual projects but for the growth and maturation of the entire quantum computing field.

“Successful repeatable tiles with superconducting qubits need to minimize crosstalk between wires, and candidate designs are easier to test by numerical simulation than in lab experiments,” said Qolab CTO John Martinis, who was recently recognized by the Royal Swedish Academy of Sciences for his groundbreaking work in quantum mechanics. “As part of our collaboration, Synopsys enhanced electromagnetic simulations to handle increasingly complex microwave circuit layouts operating near 0 K temperature. Simulating future layouts optimized for quantum error-correcting codes will require scaling up performance using advanced numerical methods, machine learning, and multi-GPU clusters.”