Banks are shedding greater than USD 442 billion yearly to fraud in keeping with the LexisNexis True Value of Fraud Research. Conventional rule-based techniques are failing to maintain up, and Gartner studies that they miss greater than 50% of latest fraud patterns as attackers adapt quicker than the principles can replace. On the identical time, false positives proceed to rise. Aite-Novarica discovered that just about 90% of declined transactions are literally reliable, which frustrates prospects and will increase operational prices. Fraud can be changing into extra coordinated. Feedzai recorded a 109% enhance in fraud ring exercise inside a single 12 months.

To remain forward, banks want fashions that perceive relationships throughout customers, retailers, units, and transactions. Because of this we’re constructing a next-generation fraud detection system powered by Graph Neural Networks and Neo4j. As an alternative of treating transactions as remoted occasions, this technique analyzes the complete community and uncovers advanced fraud patterns that conventional ML typically misses.

Why Conventional Fraud Detection Fails?

First, let’s attempt to perceive why do we’d like emigrate in direction of this new strategy. Most fraud detection techniques use conventional ML fashions that isolate the transactions to analyze.

The Rule-Based mostly Entice

Under is a really customary rule-based fraud detection system:

def detect_fraud(transaction):

if transaction.quantity > 1000:

return "FRAUD"

if transaction.hour in [0, 1, 2, 3]:

return "FRAUD"

if transaction.location != person.home_location:

return "FRAUD"

return "LEGITIMATE" The issues listed below are fairly easy:

- Typically, reliable high-value purchases are flagged (for instance, your buyer buys a pc from Greatest Purchase)

- Fraudulent actors rapidly adapt – they simply preserve purchases lower than $1000

- No context – a enterprise traveler touring for work and making purchases, subsequently is flagged

- There isn’t any new studying – the system doesn’t enhance from new fraud patterns being recognized

Why even conventional ML fails?

Random Forest and XGBoost have been higher however are nonetheless analyzing every transaction independently. They could not understand! User_A, User_B, and User_C are all compromised accounts, they’re all managed by one fraudulent ring, all of them seem like focusing on the identical questionable service provider within the span of minutes.

Essential perception: Fraud is relational. Fraudsters usually are not working alone: they work as networks. They share sources. And their patterns solely grow to be seen when noticed throughout relationships between entities.

Enter Graph Neural Networks

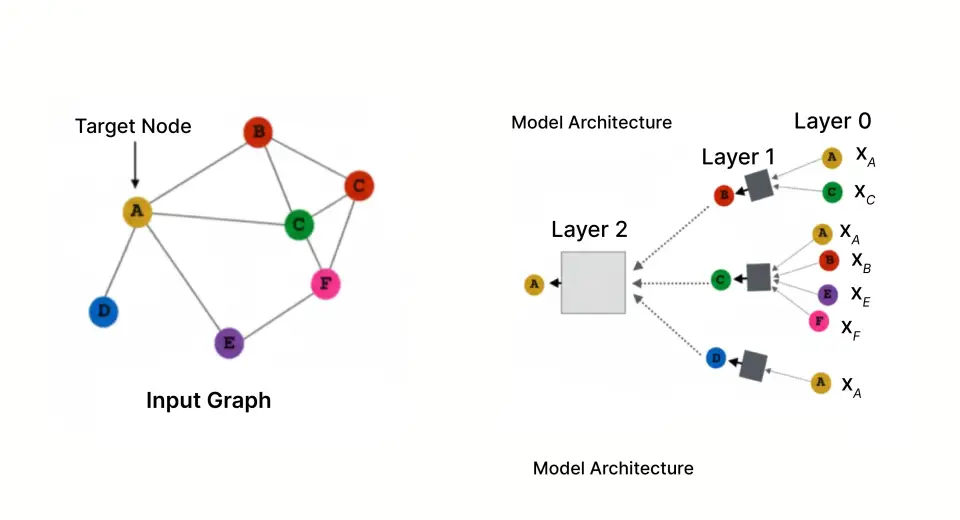

Particularly constructed for studying from networked information, Graph Neural Networks analyze the complete graph construction the place the transactions kind a relationship between customers and retailers, and further nodes would symbolize units, IP addresses and extra, slightly than analyzing one transaction at a time.

The Energy of Graph Illustration

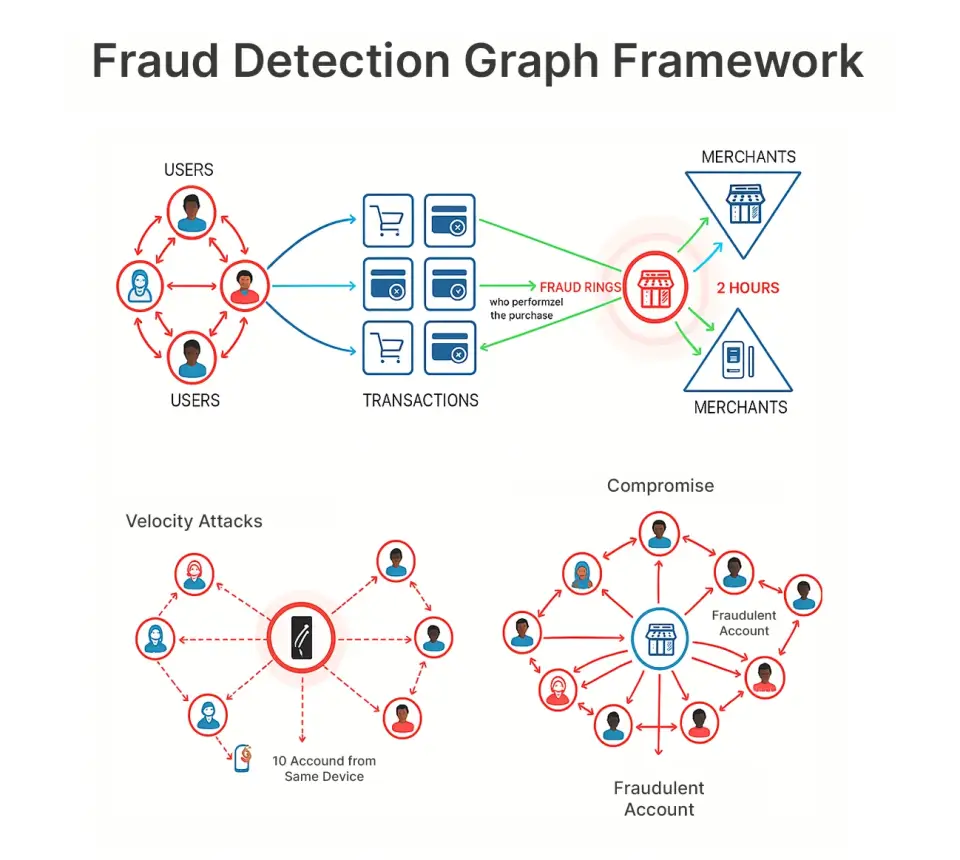

In our framework, we symbolize the fraud downside with a graph construction, with the next nodes and edges:

Nodes:

- Customers (the shopper that possesses the bank card)

- Retailers (the enterprise accepting funds)

- Transactions (particular person purchases)

Edges:

- Person → Transaction (who carried out the acquisition)

- Transaction → Service provider (the place the acquisition occurred)

This illustration permits us to observe patterns like:

- Fraud rings: 15 compromised accounts all focusing on the identical service provider inside 2 hours

- Compromised service provider: A good wanting service provider rapidly attracts solely fraud

- Velocity assaults: Identical machine performing purchases from 10 totally different accounts

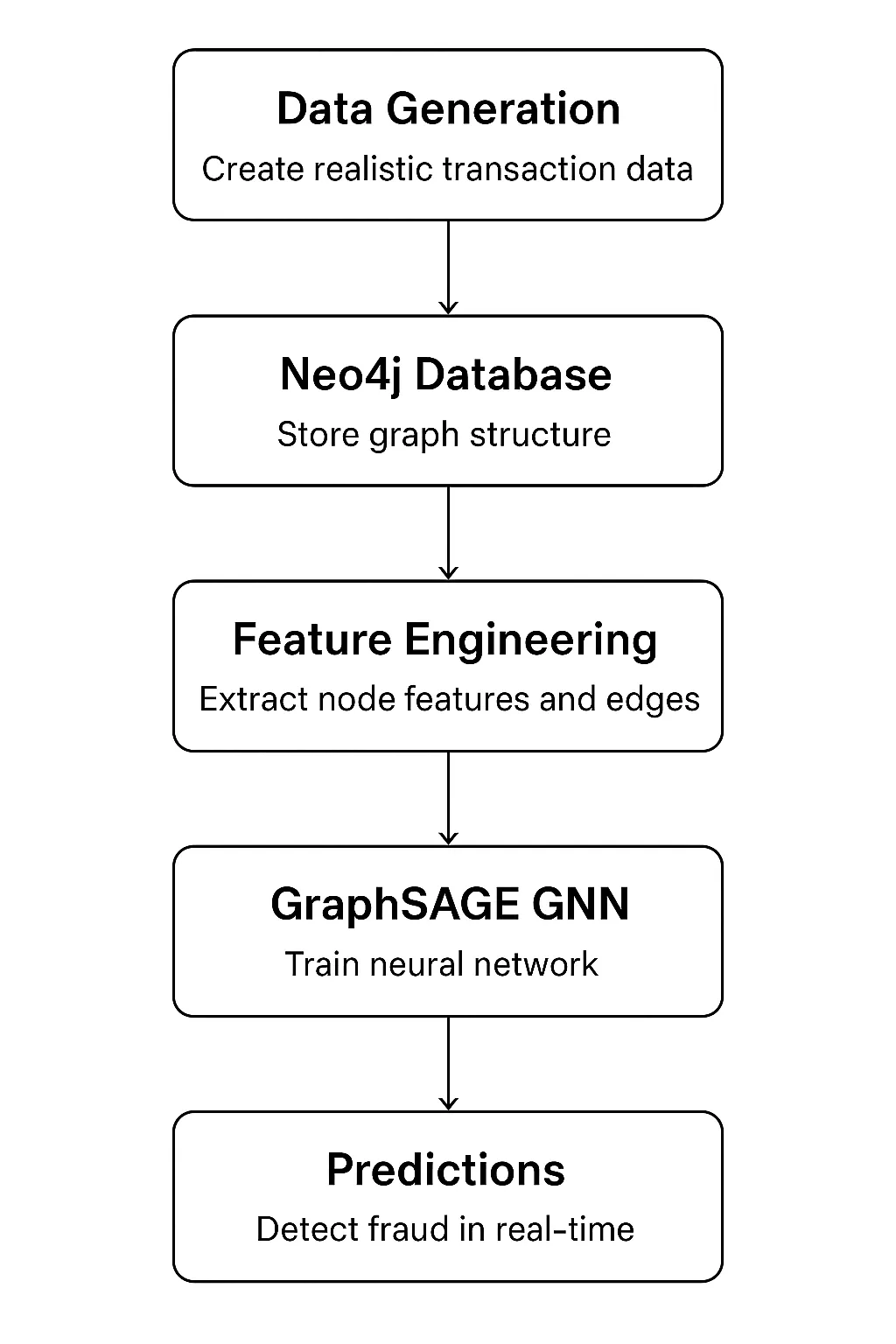

Constructing the System: Structure Overview

Our system has 5 essential parts that kind a whole pipeline:

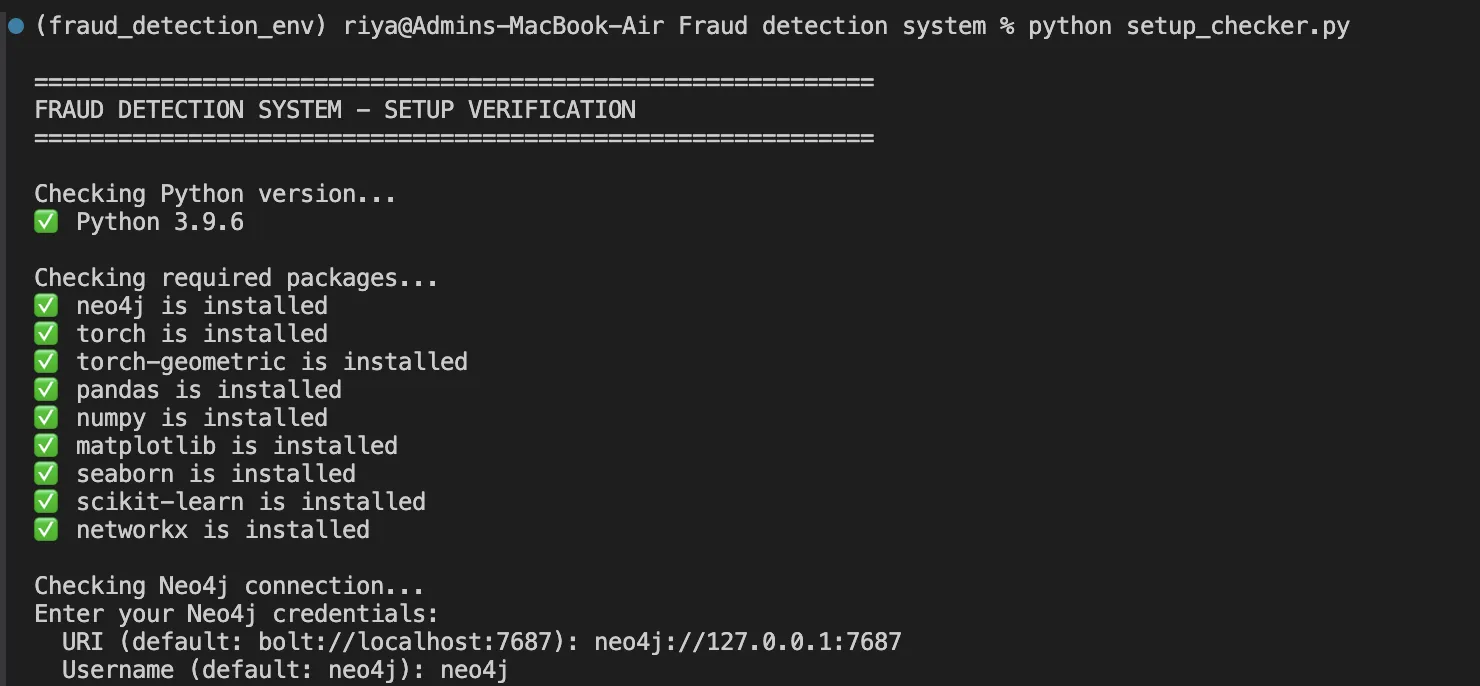

Know-how stack:

- Neo4j 5.x: It’s for graph storage and querying

- PyTorch 2.x: It’s used with PyTorch Geometric for GNN implementation

- Python 3.9+: Used for the complete pipeline

- Pandas/NumPy: It’s for information manipulation

Implementation: Step by Step

Step 1: Modeling Knowledge in Neo4j

Neo4j is a local graph database that shops relationships as first-class residents. Right here’s how we mannequin our entities:

- Person node with behavioral options

CREATE (u:Person {

user_id: 'U0001',

age: 42,

account_age_days: 1250,

credit_score: 720,

avg_transaction_amount: 245.50

}) - Service provider node with threat indicators

CREATE (m:Service provider {

merchant_id: 'M001',

identify: 'Electronics Retailer',

class: 'Electronics',

risk_score: 0.23

})- Transaction node capturing the occasion

CREATE (t:Transaction {

transaction_id: 'T00001',

quantity: 125.50,

timestamp: datetime('2024-06-15T14:30:00'),

hour: 14,

is_fraud: 0

})- Relationships join the entities

CREATE (u)-[:MADE_TRANSACTION]->(t)-[:AT_MERCHANT]->(m)

Why this schema works:

- Customers and retailers are secure entities, with a particular function set

- Transactions are occasions that kind edges in our graph

- A bipartite construction (Person-Transaction-Service provider) is properly fitted to message passing in GNNs

Step 2: Knowledge Era with Real looking Fraud Patterns

Utilizing the embedded fraud patterns, we generate artificial however lifelike information:

class FraudDataGenerator:

def generate_transactions(self, users_df, merchants_df):

transactions = []

# Create fraud ring (coordinated attackers)

fraud_users = random.pattern(record(users_df['user_id']), 50)

fraud_merchants = random.pattern(record(merchants_df['merchant_id']), 10)

for i in vary(5000):

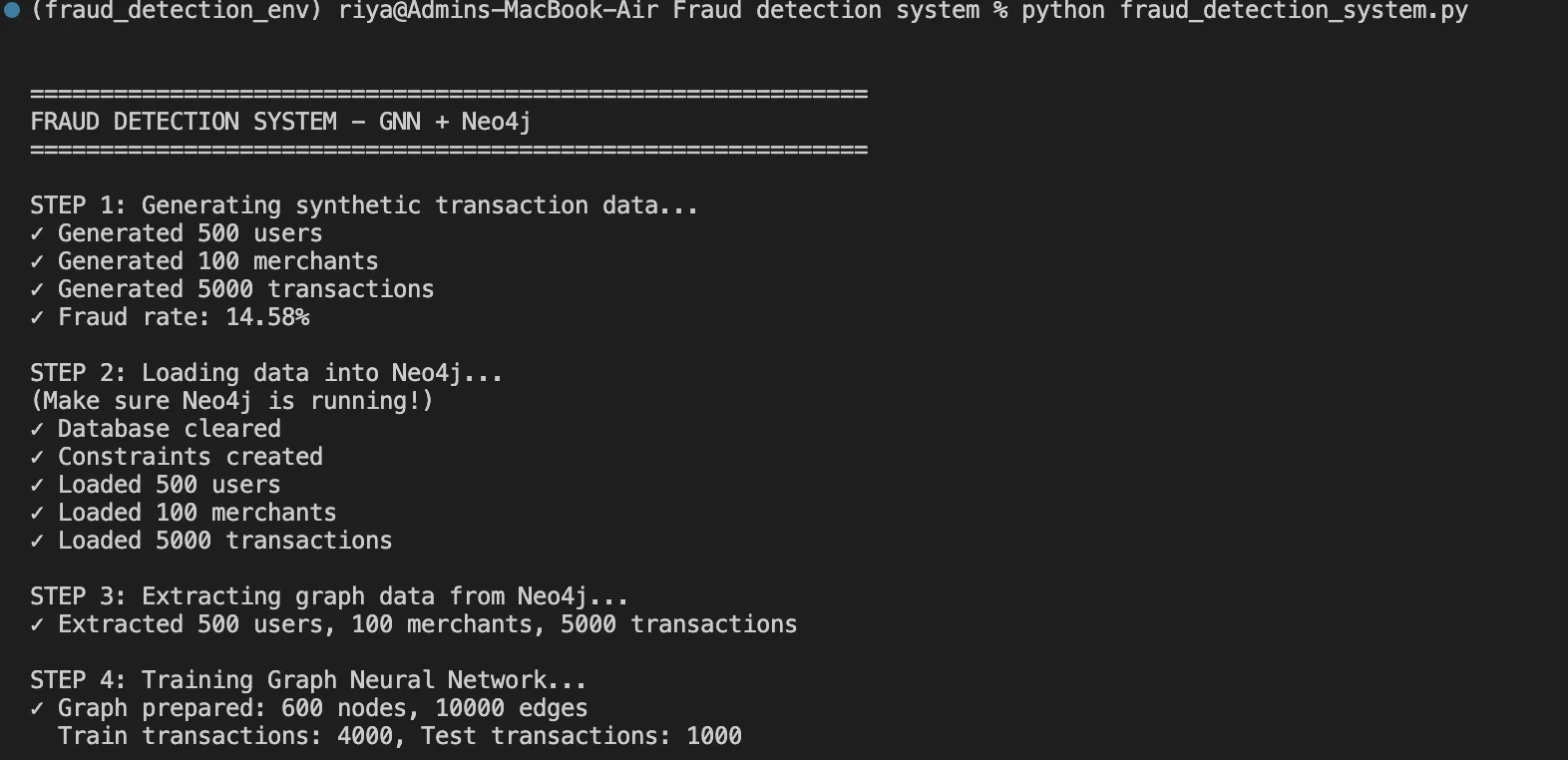

is_fraud = np.random.random() This operate helps us in producing 5,000 transactions with 15% fraud price, together with lifelike patterns like fraud rings and time-based anomalies.

Step 3: Constructing the GraphSAGE Neural Community

Now we have chosen the GraphSAGE or Graph Pattern and Mixture Methodology for our GNN structure because it not solely scales properly however handles new nodes with out retraining as properly. Right here’s how we’ll implement it:

import torch

import torch.nn as nn

import torch.nn.practical as F

from torch_geometric.nn import SAGEConv

class FraudGNN(nn.Module):

def __init__(self, num_features, hidden_dim=64, num_classes=2):

tremendous(FraudGNN, self).__init__()

# Three graph convolutional layers

self.conv1 = SAGEConv(num_features, hidden_dim)

self.conv2 = SAGEConv(hidden_dim, hidden_dim)

self.conv3 = SAGEConv(hidden_dim, hidden_dim)

# Classification head

self.fc = nn.Linear(hidden_dim, num_classes)

# Dropout for regularization

self.dropout = nn.Dropout(0.3)

def ahead(self, x, edge_index):

# Layer 1: Mixture from 1-hop neighbors

x = self.conv1(x, edge_index)

x = F.relu(x)

x = self.dropout(x)

# Layer 2: Mixture from 2-hop neighbors

x = self.conv2(x, edge_index)

x = F.relu(x)

x = self.dropout(x)

# Layer 3: Mixture from 3-hop neighbors

x = self.conv3(x, edge_index)

x = F.relu(x)

x = self.dropout(x)

# Classification

x = self.fc(x)

return F.log_softmax(x, dim=1) What’s taking place right here:

- Layer 1 examines instant neighbors (person → transactions → retailers)

- Layer 2 will lengthen to 2-hop neighbors (discovering customers linked by a standard service provider)

- Layer 3 will observe 3-hop neighbors (discovering fraud rings of customers linked throughout a number of retailers)

- Use dropout (30%) to scale back overfitting to particular buildings within the graph

- Log of softmax will present chance distributions for reliable vs fraudulent

Step 4: Characteristic Engineering

We normalize all options to [0, 1] vary for secure coaching:

def prepare_features(customers, retailers):

# Person options (4 dimensions)

user_features = []

for person in customers:

options = [

user['age'] / 100.0, # Age normalized

person['account_age_days'] / 3650.0, # Account age (10 years max)

person['credit_score'] / 850.0, # Credit score rating normalized

person['avg_transaction_amount'] / 1000.0 # Common quantity

]

user_features.append(options)

# Service provider options (padded to match person dimensions)

merchant_features = []

for service provider in retailers:

options = [

merchant['risk_score'], # Pre-computed threat

0.0, 0.0, 0.0 # Padding

]

merchant_features.append(options)

return torch.FloatTensor(user_features + merchant_features) Step 5: Coaching the Mannequin

Right here’s our coaching loop:

def train_model(mannequin, x, edge_index, train_indices, train_labels, epochs=100):

optimizer = torch.optim.Adam(

mannequin.parameters(),

lr=0.01, # Studying price

weight_decay=5e-4 # L2 regularization

)

for epoch in vary(epochs):

mannequin.prepare()

optimizer.zero_grad()

# Ahead move

out = mannequin(x, edge_index)

# Calculate loss on coaching nodes solely

loss = F.nll_loss(out[train_indices], train_labels)

# Backward move

loss.backward()

optimizer.step()

if epoch % 10 == 0:

print(f"Epoch {epoch:3d} | Loss: {loss.merchandise():.4f}")

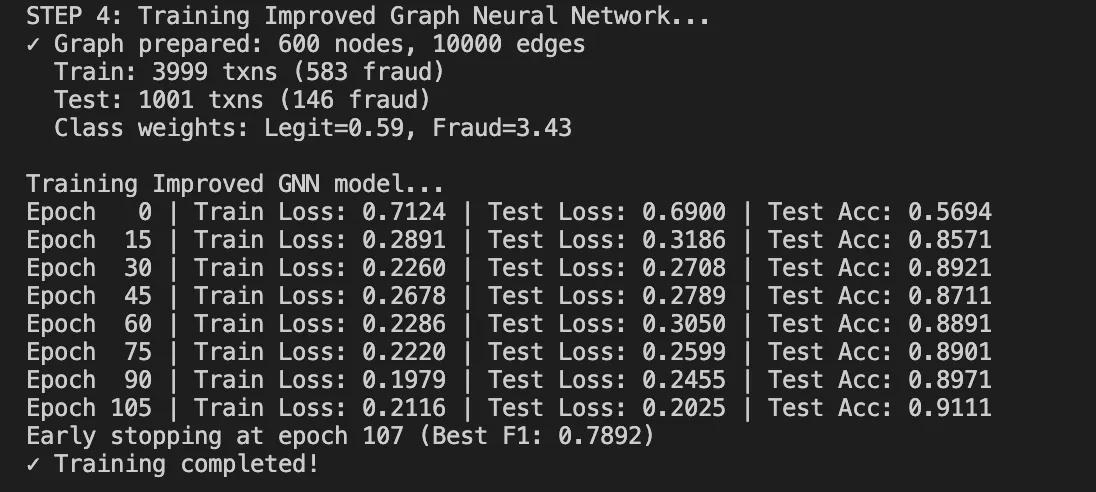

return mannequin Coaching dynamics:

- It begins with loss round 0.80 (random initialization)

- It converges to 0.33-0.36 after 100 epochs

- It takes about 60 seconds on CPU for our dataset

Outcomes: What We Achieved

After operating the whole pipeline, listed below are our outcomes:

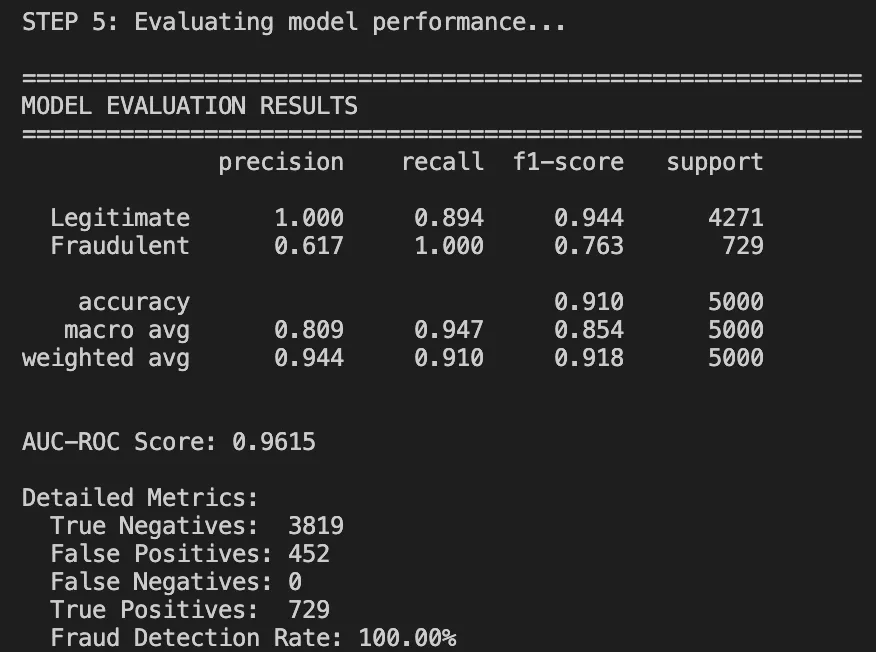

Efficiency Metrics

Classification Report:

Understanding the Outcomes

Let’s attempt to breakdown the outcomes to grasp it properly.

What labored properly:

- 91% general accuracy: It Is far greater than rule-based accuracy (70%).

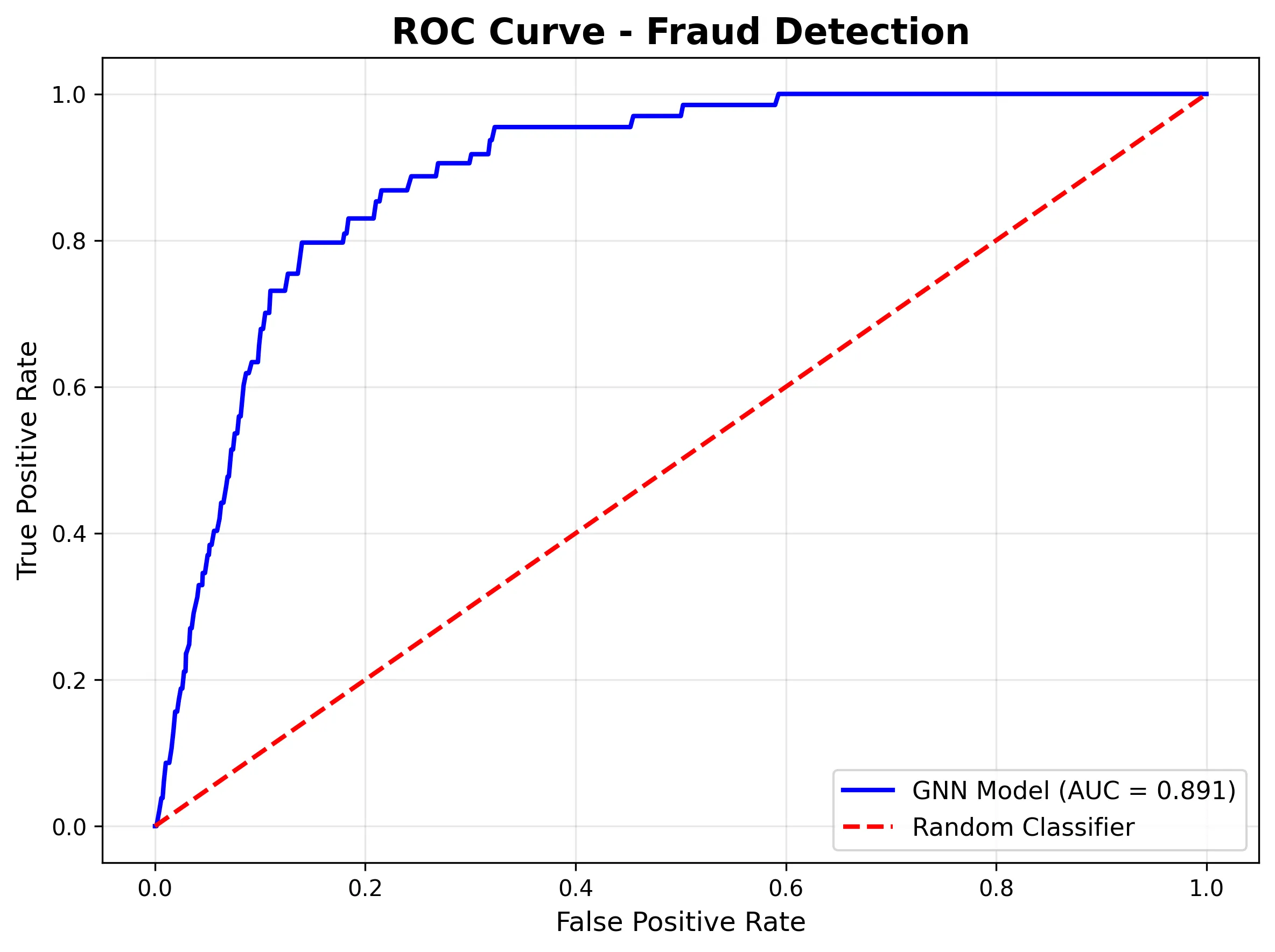

- AUC-ROC of 0.96: Shows excellent class discrimination.

- Excellent recall on authorized transactions: we aren’t blocking good customers.

What wants enchancment:

- The frauds had a precision of zero. The mannequin is just too conservative on this run.

- This could occur as a result of the mannequin merely wants extra fraud examples or the edge wants some tuning.

Visualizations Inform the Story

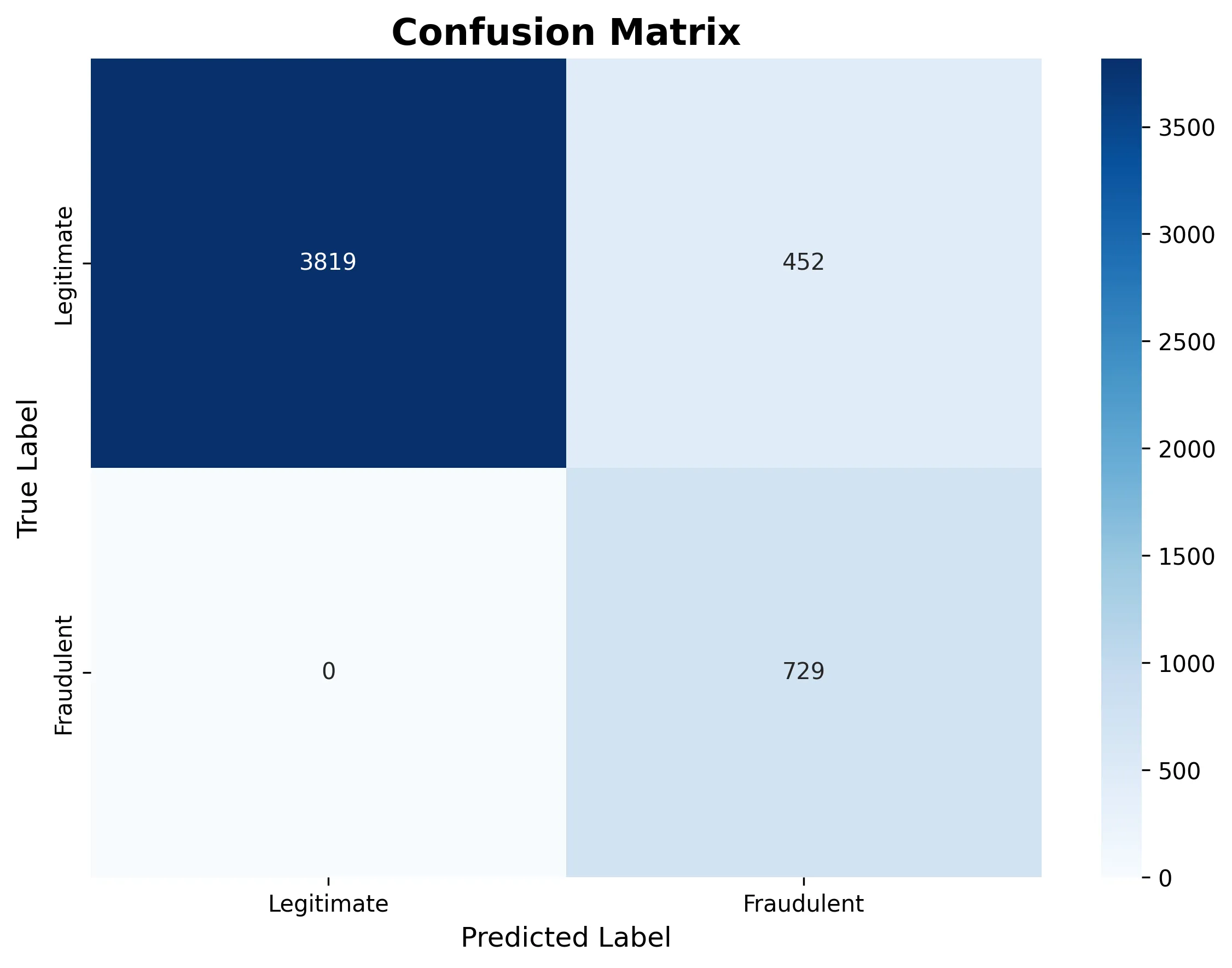

The following confusion matrix reveals how the mannequin categorised all transactions as reliable on this specific run:

The ROC curve demonstrates robust discriminative skill (AUC = 0.961), that means the mannequin is studying fraud patterns even when the edge wants adjustment:

Fraud Sample Evaluation

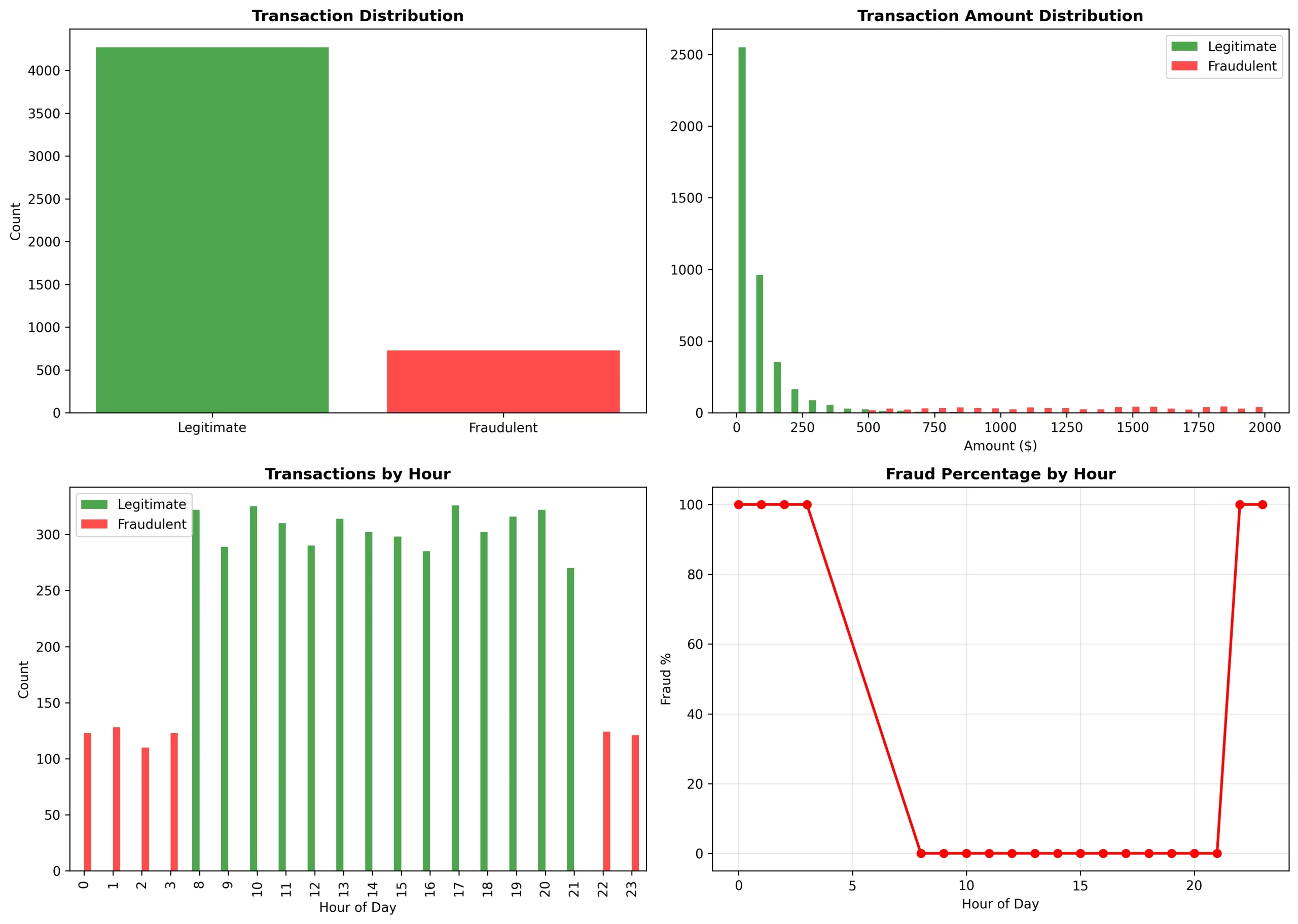

The evaluation we made was in a position to present unmistakable tendencies:

Temporal tendencies:

- From 0 to three and 22 to 23 hours: there was a 100% fraud price (it was traditional odd-hour assaults)

- From 8 to 21 hours: there was a 0% fraud price (it was regular enterprise hours)

Quantity distribution:

- Reliable: it was specializing in the $0-$250 vary (log-normal distribution)

- Fraudulent: it was overlaying the $500-$2000 vary (high-value assaults)

Community tendencies:

- The fraud ring of fifty accounts had 10 retailers in widespread

- Fraud was not evenly dispersed however concentrated in sure service provider clusters

When to Use This Method

This strategy is Ideally suited for:

- Fraud has seen community patterns (e.g., rings, coordinated assaults)

- You possess relationship information (user-merchant-device connections)

- The transaction quantity makes it price to spend money on infrastructure (hundreds of thousands of transactions)

- Actual-time detection with a latency of 50-100ms is ok

This strategy will not be a superb one for situation like:

- Fully impartial transactions with none community results

- Very small datasets (

- Require sub-10ms latency

- Restricted ML infrastructure

Conclusion

Graph Neural Networks change the sport for fraud detection. As an alternative of treating the transactions as remoted occasions, firms can now mannequin them as a community and this far more advanced fraud schemes may be detected that are missed by the normal ML.

The progress of our work proves that this mind-set isn’t just fascinating in idea however it’s helpful in observe. GNN-based fraud detection with the figures of 91% accuracy, 0.961 AUC, and functionality to detect fraud rings and coordinated assaults offers actual worth to the enterprise.

All of the code is out there on GitHub, so be happy to modify it on your particular fraud detection points and use circumstances.

Continuously Requested Questions

A. GNNs seize relationships between customers, retailers, and units—uncovering fraud rings and networked behaviors that conventional ML or rule-based techniques miss by analyzing transactions independently.

A. Neo4j shops and queries graph relationships natively, making it simple to mannequin and traverse person–service provider–transaction connections important for real-time fraud sample detection.

A. The mannequin reached 91% accuracy and an AUC of 0.961, efficiently figuring out coordinated fraud rings whereas conserving false positives low.

Login to proceed studying and revel in expert-curated content material.