As we wrap up 2025, I thought it would be good to look back at the AI models that have left a lasting impact throughout the year. This year brought new AI models into the limelight, while some of the older models also surged in popularity. From Natural Language Processing to Computer Vision, these models have influenced a multitude of AI domains. This article showcases the models that had the most impact in 2025.

Model Selection Criteria

The AI models listed in this article were chosen from the Hugging Face leaderboards based on the following criteria:

- The number of downloads

- Having either an Apache 2.0 or MIT open-source license

The list is a mix of models released this year and models from the previous year that experienced a surge in popularity. You can view the complete list on the Hugging Face leaderboard here: https://huggingface.co/models?license=license:apache-2.0&sort=downloads
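The two criteria above amount to a filter followed by a sort. Here is a minimal sketch of that logic, assuming a catalog of plain dictionaries; the model ids, the `select_models` helper, and the download counts are all hypothetical placeholders, not real Hugging Face data.

```python
# Licenses accepted by the selection criteria.
ALLOWED_LICENSES = {"apache-2.0", "mit"}


def select_models(catalog, allowed=ALLOWED_LICENSES, top_k=10):
    """Keep permissively licensed models, most-downloaded first."""
    eligible = [m for m in catalog if m["license"] in allowed]
    eligible.sort(key=lambda m: m["downloads"], reverse=True)
    return eligible[:top_k]


# Hypothetical entries: ids and download counts are made up for illustration.
catalog = [
    {"id": "example/model-a", "license": "apache-2.0", "downloads": 500},
    {"id": "example/model-b", "license": "cc-by-nc-4.0", "downloads": 900},
    {"id": "example/model-c", "license": "mit", "downloads": 700},
]

selected = select_models(catalog)
print([m["id"] for m in selected])  # ['example/model-c', 'example/model-a']
```

Note that `example/model-b` is dropped despite having the most downloads, because its license is not on the allowed list.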

1. Sentence Transformer MiniLM

Category: Natural Language Processing

A compact English sentence embedding model optimized for semantic similarity, clustering, and retrieval. It builds on a 6-layer MiniLM transformer (384-dimensional embeddings) fine-tuned on more than 1 billion sentence pairs. Despite its size, it delivers strong performance across semantic search and topic modelling tasks, rivalling larger models.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2
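Semantic search with sentence embeddings usually comes down to ranking documents by cosine similarity to a query. The sketch below shows that ranking step on toy 3-dimensional vectors standing in for the 384-dimensional embeddings. The helpers `cosine_similarity`, `rank_documents`, and `embed_with_minilm` are illustrative names; the last one assumes the `sentence-transformers` package is installed and is not called here, since it downloads the model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return document indices sorted from most to least similar."""
    scores = [cosine_similarity(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


def embed_with_minilm(sentences):
    """Encode sentences with the real model (requires sentence-transformers)."""
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")
    return model.encode(sentences)


# Toy vectors in place of real embeddings.
query = [1.0, 0.0, 0.0]
docs = [[0.0, 1.0, 0.0], [0.9, 0.1, 0.0], [0.5, 0.5, 0.0]]
print(rank_documents(query, docs))  # [1, 2, 0]
```

With real text, the output of `embed_with_minilm` would replace the toy vectors; the ranking logic stays the same.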

2. Google Electra Base Discriminator

Category: Natural Language Processing

ELECTRA rethinks masked language modeling by training the model to detect replaced tokens instead of predicting masked ones. The base version (110M parameters) achieves performance comparable to BERT-base while requiring much less computation. It’s widely used for feature extraction and fine-tuning in classification and QA pipelines.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/google/electra-base-discriminator
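To make the replaced-token objective concrete, here is a minimal sketch of the per-token targets a discriminator of this kind learns to predict: 1 where a token was swapped, 0 where it was left alone. The function name `replaced_token_labels` is hypothetical, and the corrupted sentence is written by hand here, whereas in ELECTRA's training the replacements come from a small generator network.

```python
def replaced_token_labels(original_tokens, corrupted_tokens):
    """Label each position 1 if the token was replaced, else 0."""
    if len(original_tokens) != len(corrupted_tokens):
        raise ValueError("sequences must have the same length")
    return [int(o != c) for o, c in zip(original_tokens, corrupted_tokens)]


original = ["the", "chef", "cooked", "the", "meal"]
corrupted = ["the", "chef", "ate", "the", "meal"]  # hand-written replacement

labels = replaced_token_labels(original, corrupted)
print(labels)  # [0, 0, 1, 0, 0]
```

Because every position gets a label, the model receives a training signal from all tokens rather than only the masked subset, which is one intuition for the lower compute cost mentioned above.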

3. FalconsAI NSFW Image Detection

Category: Computer Vision

An image classifier designed to detect NSFW or unsafe content in images. People using sites like Reddit will be familiar with the infamous “NSFW Blocker”. According to its model card, it is a fine-tuned Vision Transformer (ViT) rather than a CNN, and it outputs probabilities for “normal” versus “nsfw” categories, making it a key moderation component for AI-generated or user-uploaded visuals.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/Falconsai/nsfw_image_detection

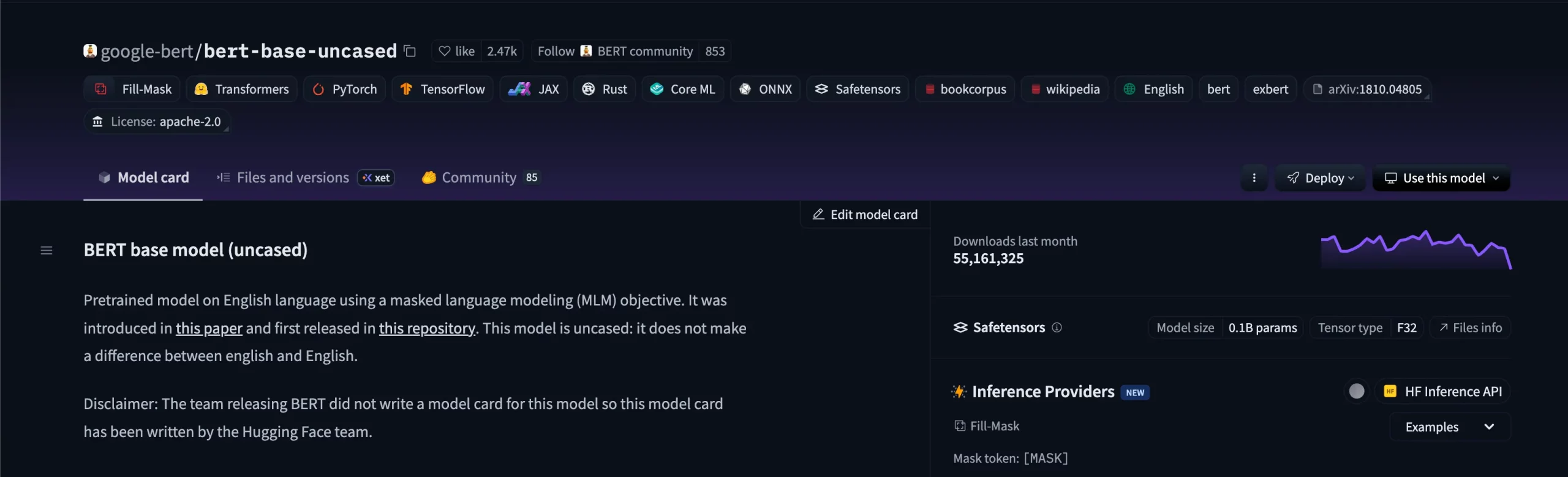
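In a moderation pipeline, the classifier's probabilities still have to be turned into a block or allow decision. The sketch below shows one way to do that, assuming predictions arrive in the list-of-dicts shape that the `transformers` image-classification pipeline returns. The label name `"nsfw"`, the 0.5 threshold, and the example scores are assumptions for illustration; check the model card for the actual labels. `classify_image` is not called here because it downloads the model.

```python
def flag_unsafe(predictions, unsafe_label="nsfw", threshold=0.5):
    """Return True if the unsafe label's score meets the threshold.

    predictions: list of {"label": str, "score": float} dicts.
    """
    scores = {p["label"]: p["score"] for p in predictions}
    return scores.get(unsafe_label, 0.0) >= threshold


def classify_image(image_path):
    """Run the real model (requires transformers, torch, and Pillow)."""
    from transformers import pipeline

    classifier = pipeline(
        "image-classification", model="Falconsai/nsfw_image_detection"
    )
    return classifier(image_path)


# Illustrative scores, not real model output.
example = [{"label": "normal", "score": 0.92}, {"label": "nsfw", "score": 0.08}]
print(flag_unsafe(example))  # False
```

A stricter platform could lower `threshold` to flag borderline images for human review instead of blocking them outright.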

4. Google Uncased BERT

Category: Natural Language Processing

The original BERT-base model from Google Research, trained on BooksCorpus and English Wikipedia. With 12 layers and 110M parameters, it laid the groundwork for modern transformer architectures and remains a strong baseline for classification, NER, and question answering.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/google-bert/bert-base-uncased

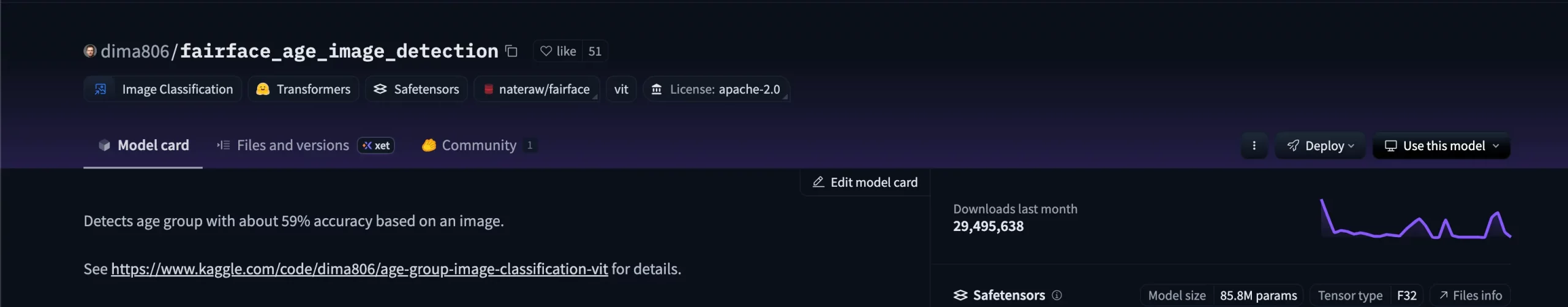
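The "12 layers and 110M parameters" figure can be reproduced with back-of-the-envelope arithmetic. The sketch below counts the weights of a BERT-style encoder from its dimensions. The default values (vocabulary of 30,522, hidden size 768, feed-forward size 3,072, 512 positions, 2 segment types) are assumptions taken from the published `bert-base-uncased` configuration, not from this article, and the count covers only the encoder and pooler, without any task head.

```python
def bert_parameter_count(vocab_size=30522, hidden=768, layers=12,
                         intermediate=3072, max_positions=512, type_vocab=2):
    """Count weights and biases in a BERT-style encoder plus pooler."""
    layer_norm = 2 * hidden  # scale and bias vectors

    # Token, position, and segment embeddings, followed by one LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm

    # Query, key, value, and output projections, followed by one LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm

    # Two dense layers (expand, then project back), followed by one LayerNorm.
    feed_forward = (
        (hidden * intermediate + intermediate)
        + (intermediate * hidden + hidden)
        + layer_norm
    )

    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + feed_forward) + pooler


total = bert_parameter_count()
print(f"{total:,}")  # 109,482,240
```

The result, roughly 109.5 million, is what is usually rounded to 110M. About 85 million of those parameters sit in the 12 transformer layers and most of the rest in the token embeddings.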

5. FairFace Image Age Detection

Category: Computer Vision

A facial age prediction model trained on the FairFace dataset, emphasizing balanced representation across ethnicity and gender. It prioritizes fairness and demographic consistency, making it suitable for analytics and research pipelines involving facial attributes.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/dima806/fairface_age_image_detection

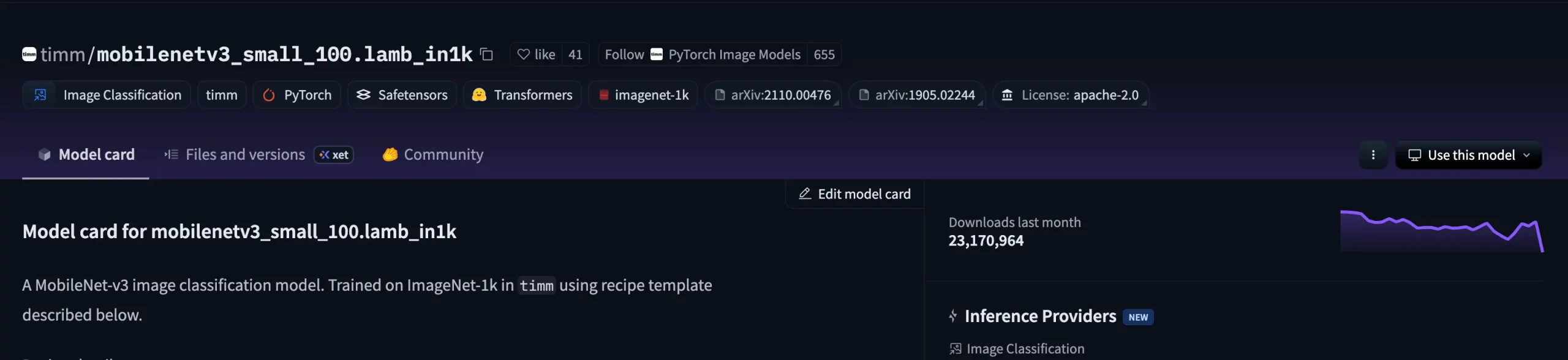

6. MobileNet Image Classification Model

Category: Computer Vision

A lightweight convolutional image classifier from the timm library, designed for efficient deployment on resource-limited devices. MobileNetV3 Small, trained on ImageNet-1k using the LAMB optimizer, achieves solid accuracy with low latency, making it ideal for edge and mobile inference.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/timm/mobilenetv3_small_100.lamb_in1k

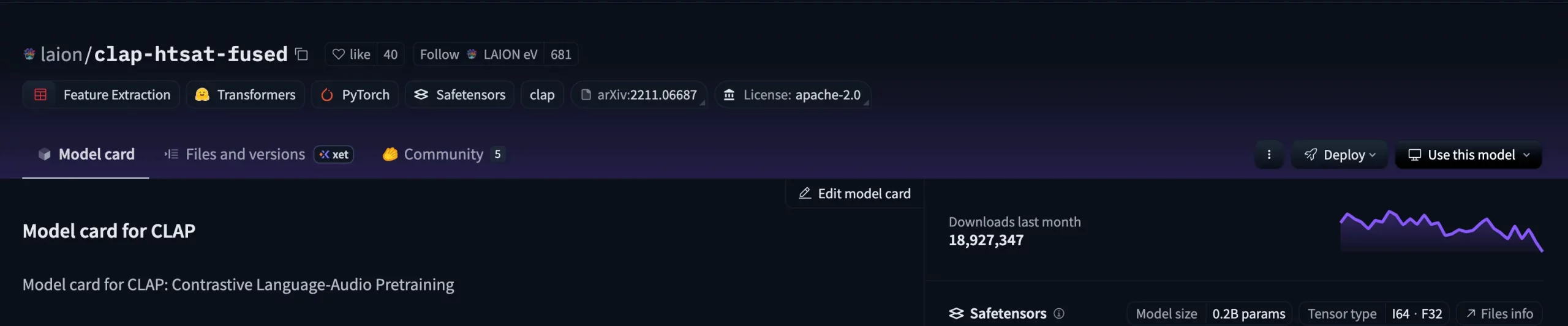

7. Laion CLAP

Category: Multimodal (Audio to Language)

A fusion of CLAP (Contrastive Language–Audio Pretraining) and HTS-AT (Hierarchical Token-Semantic Audio Transformer) that maps audio and text into a shared embedding space. It supports zero-shot audio retrieval, tagging, and captioning, bridging sound understanding and natural language.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/laion/clap-htsat-fused

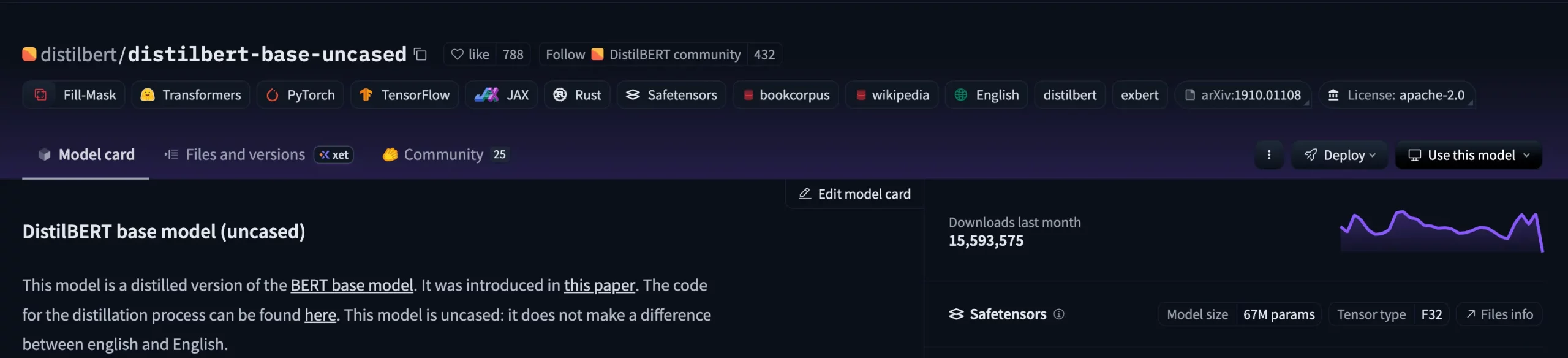
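Once audio and text live in the same embedding space, zero-shot tagging reduces to comparing one audio vector against the vectors of candidate text labels. The sketch below shows that comparison as a softmax over cosine similarities. The 2-dimensional vectors, the label strings, and the `zero_shot_scores` helper are hypothetical stand-ins; real embeddings would come from the model's audio and text encoders.

```python
import math


def _normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def zero_shot_scores(audio_vec, label_vecs, temperature=1.0):
    """Softmax over cosine similarities between audio and label embeddings."""
    audio = _normalize(audio_vec)
    sims = [
        sum(a * t for a, t in zip(audio, _normalize(label))) / temperature
        for label in label_vecs
    ]
    peak = max(sims)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in sims]
    total = sum(exps)
    return [e / total for e in exps]


# Toy embeddings, invented for illustration.
labels = ["dog barking", "rain", "piano"]
label_vecs = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
audio_vec = [0.9, 0.1]

probs = zero_shot_scores(audio_vec, label_vecs)
best = labels[probs.index(max(probs))]
print(best)  # dog barking
```

No label-specific training is involved: adding a new tag only requires embedding its text description.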

8. DistilBERT

Category: Natural Language Processing

A distilled version of BERT-base developed by Hugging Face to balance performance and efficiency. Retaining about 97% of BERT’s accuracy while being 40% smaller and 60% faster, it’s ideal for lightweight NLP tasks like classification, embeddings, and semantic search.

License: Apache 2.0

Hugging Face Link: https://huggingface.co/distilbert/distilbert-base-uncased

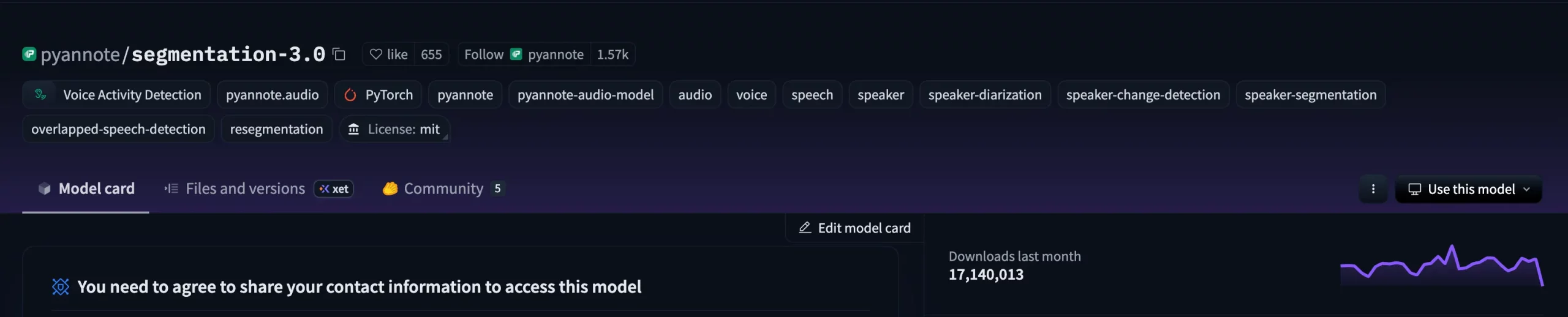
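Distillation trains a small student model to match a larger teacher's output distribution. Here is a minimal sketch of the soft-target piece of that idea: a KL divergence between temperature-softened teacher and student distributions. The logits and the temperature of 2.0 are made-up values, and this is only one ingredient; DistilBERT's actual training recipe reportedly combines it with other loss terms.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperature flattens the output."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.0, 0.5]  # illustrative logits
print(distillation_loss(teacher, [4.0, 1.0, 0.5]))      # 0.0
print(distillation_loss(teacher, [0.5, 1.0, 4.0]) > 0)  # True
```

The loss is zero when the student reproduces the teacher exactly and grows as their predictions diverge, which is the signal that pulls the smaller model toward the larger one's behavior.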

9. Pyannote Segmentation 3

Category: Speech Processing

A core component of the Pyannote Audio pipeline for detecting and segmenting speech activity. It identifies regions of silence, single-speaker speech, and overlapping speech, performing reliably even in noisy environments. It is commonly used as the foundation for speaker diarization systems.

License: MIT

Hugging Face Link: https://huggingface.co/pyannote/segmentation-3.0

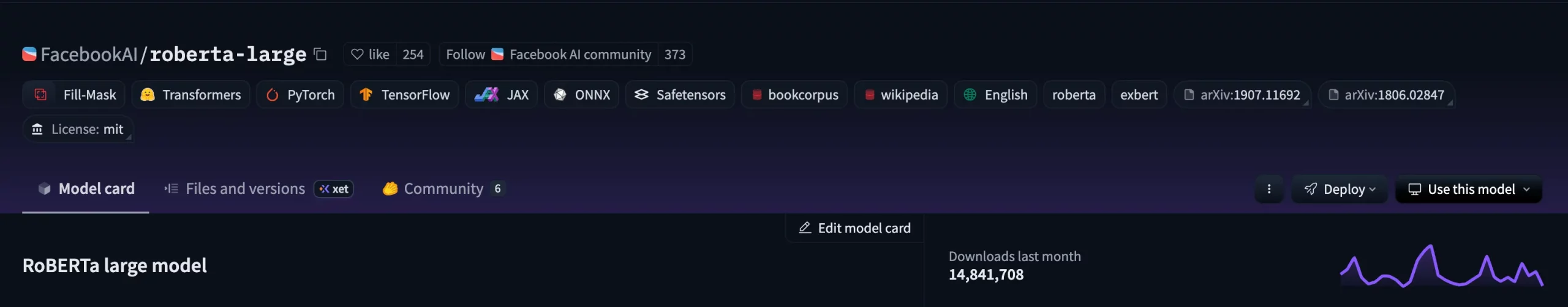
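The distinction between silence, single-speaker, and overlapping speech can be illustrated with a small post-processing step: given the number of active speakers in each frame, merge consecutive frames of the same kind into timed segments. This is a sketch of the concept only, not pyannote's API. The `frames_to_segments` helper, the per-frame counts, and the 0.5-second frame length are all hypothetical (real frames are much shorter).

```python
from itertools import groupby


def frame_state(active_speakers):
    """Map a per-frame speaker count to a region type."""
    if active_speakers == 0:
        return "silence"
    return "single" if active_speakers == 1 else "overlap"


def frames_to_segments(speaker_counts, frame_duration=0.5):
    """Merge runs of identical states into (start, end, state) tuples."""
    segments = []
    index = 0
    for state, run in groupby(frame_state(n) for n in speaker_counts):
        length = len(list(run))
        start = index * frame_duration
        end = (index + length) * frame_duration
        segments.append((start, end, state))
        index += length
    return segments


# Hypothetical number of active speakers in each frame.
counts = [0, 0, 1, 1, 1, 2, 1, 0]
for segment in frames_to_segments(counts):
    print(segment)
```

A downstream diarization step would then assign speaker identities to the "single" and "overlap" regions.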

10. FacebookAI RoBERTa Large

Category: Natural Language Processing

A robustly optimized BERT variant trained on 160 GB of English text with dynamic masking and no next-sentence prediction. With 24 layers and 355M parameters, RoBERTa-large consistently outperforms BERT-base across GLUE and other benchmarks, powering high-accuracy NLP applications.

License: MIT

Hugging Face Link: https://huggingface.co/FacebookAI/roberta-large
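Dynamic masking means the masked positions are re-sampled each time a sentence is seen, instead of being fixed once during preprocessing. The sketch below contrasts the two under simplifying assumptions: each token is masked independently with probability 0.15, and the refinements of real masked-language-model training (such as occasionally keeping or randomly replacing a selected token) are ignored. The function names and the `<mask>` string are illustrative.

```python
import random

MASK = "<mask>"


def mask_tokens(tokens, rng, mask_prob=0.15):
    """Replace each token with MASK independently with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else tok for tok in tokens]


def static_masking(tokens, epochs, seed=0):
    """Sample the mask once and reuse the same masked copy every epoch."""
    masked = mask_tokens(tokens, random.Random(seed))
    return [masked for _ in range(epochs)]


def dynamic_masking(tokens, epochs, seed=0):
    """Draw a fresh mask for every epoch from one random stream."""
    rng = random.Random(seed)
    return [mask_tokens(tokens, rng) for _ in range(epochs)]


tokens = "the quick brown fox jumps over the lazy dog".split()
for epoch, view in enumerate(dynamic_masking(tokens, epochs=3)):
    print(epoch, " ".join(view))
```

Over many epochs the dynamic variant exposes the model to many different masked versions of the same sentence, whereas the static variant repeats a single one.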

Conclusion

This listicle isn’t exhaustive, and there are several models out there that have had tremendous impact but didn’t make it onto the list. Some were just as impactful but lacked an open-source license, and others simply didn’t have the numbers. But what all of them did was contribute towards solving part of a bigger problem. The models shared in this list might not have the buzz that follows models like Gemini, ChatGPT, and Claude, but what they offer is an open letter to data science enthusiasts who want to create things from scratch, without housing a data center.

Frequently Asked Questions

A. The models were chosen based on two key factors: total downloads on Hugging Face and having a permissive open-source license (Apache 2.0 or MIT), ensuring they’re both popular and freely usable.

A. Most are, but some (like Falconsai/nsfw_image_detection or FairFace-based models) may have usage restrictions. Always check the model card and license before deploying in production.

A. Open-source models give researchers and developers freedom to experiment, fine-tune, and deploy without vendor lock-in or heavy infrastructure needs, making innovation more accessible.

A. Most of them are released under permissive licenses like Apache 2.0 or MIT, meaning you can use them for both research and commercial projects.

A. Yes. Many production systems chain models from different domains: for example, using a speech segmentation model like Pyannote to isolate dialogue, then a language model like RoBERTa to analyze sentiment or intent, and finally a vision model to moderate accompanying images.
