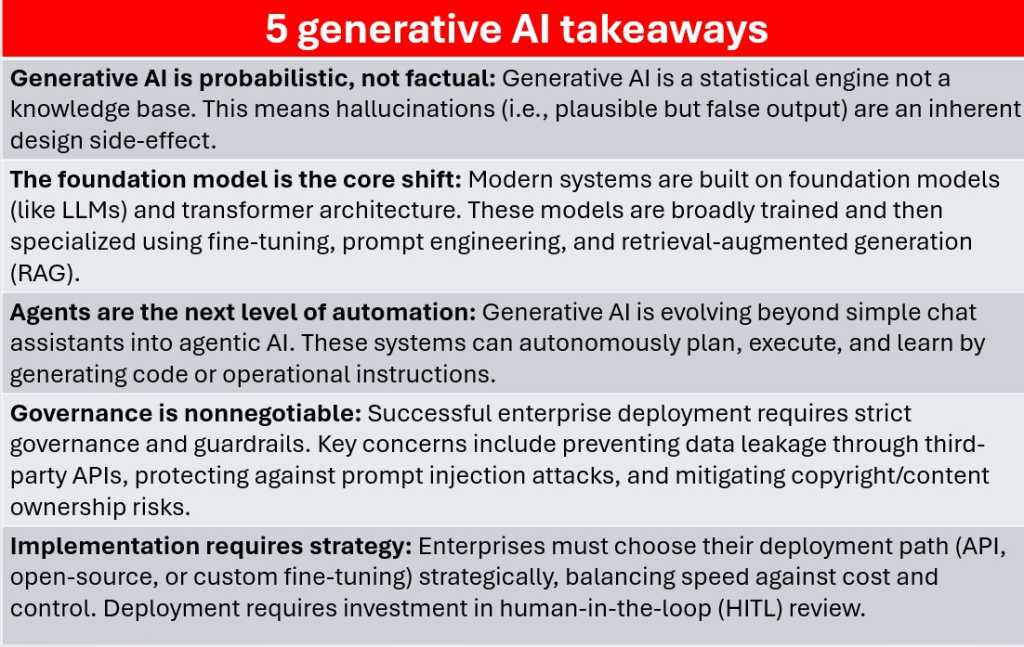

Every generative AI system, no matter how advanced, is built around prediction. Remember, a model doesn't actually know facts. It looks at a sequence of tokens, then calculates, based on analysis of its underlying training data, which token is most likely to come next. That is what makes the output fluent and human-like, but when its prediction is wrong, the result is perceived as a hallucination.
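To make the idea of "most likely next token" concrete, here is a deliberately tiny sketch. It assumes a bigram model (next-token counts over whitespace-split words) trained on a hypothetical three-sentence corpus; real LLMs use neural networks over subword tokens, so treat this only as an illustration of the statistical principle. Because the corpus repeats a misconception more often than the fact, the "most likely" continuation is fluent but false.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data": a common misconception appears
# more often than the correct fact.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]

def train_bigrams(sentences):
    """Count, for each token, how often every other token follows it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts

def next_token_probs(counts, token):
    """Turn raw follow-counts into a probability distribution."""
    followers = counts.get(token, Counter())
    total = sum(followers.values())
    return {tok: n / total for tok, n in followers.items()}

def predict_next(counts, token):
    """Greedy decoding: return the single most probable next token, or None."""
    probs = next_token_probs(counts, token)
    if not probs:
        return None
    return max(probs, key=probs.get)

model = train_bigrams(corpus)
print(next_token_probs(model, "is"))
print(predict_next(model, "is"))  # sydney
```

The model has no notion of truth here; it simply reproduces the most frequent pattern in its data, which is exactly why a fluent answer can still be wrong.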

Foundry

Because the model doesn't distinguish between something that is known to be true and something likely to follow on from the input text it has been given, hallucinations are a direct side effect of the statistical process that powers generative AI. And don't forget that we're often pushing AI models to come up with answers to questions that we, who also have access to that data, can't answer ourselves.

In text models, hallucinations might mean inventing quotes, fabricating references, or misrepresenting a technical process. In code or data analysis, they can produce results that are syntactically correct but logically wrong. Even RAG pipelines, which provide models with real data as context, only reduce hallucination; they don't eliminate it. Enterprises using generative AI need review layers, validation pipelines, and human oversight to prevent these failures from spreading into production systems.
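As one illustration of a validation layer, the sketch below checks model-generated code in two stages: a syntax check with `ast.parse`, then behavioral checks against trusted test cases, rejecting anything that fails so it can be routed to human review. The generated snippet, the `validate` helper, and the test cases are all hypothetical, and running untrusted code with bare `exec()` is only acceptable in a toy example; a real pipeline would assume a sandbox.

```python
import ast

# Hypothetical model output: syntactically valid Python, but logically wrong
# (it divides by len(xs) - 1 instead of len(xs)).
generated_code = """
def average(xs):
    return sum(xs) / (len(xs) - 1)
"""

# Illustrative test cases a reviewer trusts (not from any real suite).
test_cases = [([2, 4, 6], 4.0), ([10], 10.0)]

def validate(code, func_name, cases):
    """Return (accepted, reason); rejected output should go to human review."""
    try:
        ast.parse(code)  # Stage 1: reject code that doesn't even parse.
    except SyntaxError as exc:
        return False, f"syntax error: {exc}"
    namespace = {}
    # Assumption: a production pipeline would run this in a sandbox, not bare exec().
    exec(code, namespace)
    func = namespace.get(func_name)
    if func is None:
        return False, f"{func_name} not defined"
    for args, expected in cases:  # Stage 2: check behavior, not just syntax.
        try:
            result = func(args)
        except Exception as exc:
            return False, f"{func_name}({args!r}) raised {exc!r}"
        if result != expected:
            return False, f"{func_name}({args!r}) returned {result!r}, expected {expected!r}"
    return True, "all checks passed"

accepted, reason = validate(generated_code, "average", test_cases)
print(accepted, reason)  # False average([2, 4, 6]) returned 6.0, expected 4.0
```

The syntax check alone would have accepted this snippet; only the behavioral stage catches the logical error, which is why review layers need to test what generated output does, not just whether it looks well-formed.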