Guardrails are the constructing blocks of LLM purposes, serving to flip experimental LLM apps into dependable, enterprise-grade options. How? Whereas LLM-powered AI purposes could look easy in Proof of Idea (POC), scaling them reliably is a tough process. Whereas LLMs excel at open-ended reasoning, they wrestle with management and consistency when tailored for particular, mission-critical use instances.

This results in frequent manufacturing points, inconsistent habits, hallucinations, and unpredictable outputs, all of which influence person belief, compliance, and enterprise threat. Since LLMs are inherently probabilistic and delicate to adjustments in prompts, information, and context, conventional software program engineering alone doesn’t lower it.

That’s why sturdy guardrails, purpose-built frameworks, and steady monitoring are essential to make LLM programs reliable at scale. Right here, we discover simply how essential guardrails are for LLM

What are Guardrails?

Guardrails in LLM are mainly the foundations, filters, and checks that preserve an AI mannequin’s habits secure, moral, and constant when it’s producing responses.

Consider them as a security layer wrapped across the mannequin, validating what goes in (inputs) and what comes out (outputs) so the system stays dependable, safe, and aligned with the supposed function.

How are Guardrails Carried out?

There are a number of approaches to implementing guardrails in an LLM.

| Method | Methods / Use Circumstances |

|---|---|

| Guidelines or Heuristic Programs |

|

| Small Finetuned ML Fashions |

|

| Secondary LLM Name |

|

What are the kinds of Guardrails?

There are broadly two kinds of guardrails, enter guardrails and output guardrails.

Enter guardrails act as the primary line of protection for any LLM. They verify and validate every little thing earlier than it reaches the mannequin, issues like filtering out delicate data, blocking malicious or off-topic queries, and making certain the enter stays inside the app’s function.

Output guardrails, however, kick in after the mannequin generates a response. They be certain that the output is secure, related, and aligned with enterprise or compliance guidelines, catching points like hallucinations, coverage violations, or undesirable mentions earlier than the response reaches the person.

Collectively, these two layers preserve LLM programs constant, safe, and reliable in manufacturing.

Dangers with LLMs

On this article, we’ll take a look at 4 key issues most LLM purposes face:

- Mannequin limitations: Can the mannequin truly deal with the query? Does it hallucinate or go off monitor?

Observe: Hallucination is a relative time period. Typically, it refers to AI outputs that seem genuine however are factually incorrect. In our case, we outline hallucination as any response that isn’t grounded in or derived from our supposed information or context. - Unintended use: Customers can simply break directions or push the system past its function. For instance, a studying chatbot may be misused for unrelated conversations if not correctly restricted.

- Info leakage: Delicate information (PII – Private identifiable data) like names or telephone numbers should keep inside the group. We’d like filters to stop such particulars from being despatched to third-party LLM suppliers.

- Reputational threat: A chatbot mentioning opponents or violating firm insurance policies can hurt the model. Guardrails must be in place to stop that, and bolstered in the event that they fail.

How will we deal with Hallucinations?

In our case, any response that isn’t grounded in our personal data base is taken into account a hallucination. We wish the LLM to generate solutions strictly based mostly on our inner information, not guess or fill in gaps. In brief, hallucination = lack of groundedness.

Pure Language Inference (NLI)

NLI helps us verify how trustworthy the mannequin’s response is to the precise context. It really works with two parts — Premise and Speculation. The premise is what we all know to be true (the retrieved chunks from our vector DB), and the speculation is the mannequin’s response.

Pure Language Inference then evaluates how nicely the speculation aligns with the premise, mainly checking if the LLM’s reply stays grounded within the information it was speculated to depend on.

Arms-on creating Guardrail utilizing NLI

You possibly can try the complete code from – https://github.com/Badribn0612/Guardrails/blob/predominant/Lesson_5.ipynb

We will likely be utilizing guardrails-ai to create guardrail. Checkout https://www.guardrailsai.com/docs/getting_started/quickstart

https://www.guardrailsai.com/docs/getting_started/guardrails_server

To arrange the setting.

We will likely be utilizing a finetuned mannequin – GuardrailsAI/finetuned_nli_provenance – https://huggingface.co/GuardrailsAI/finetuned_nli_provenance

Beneath is the code which will likely be used as our Guardrail – in guardrail-ai, they name it a validator.

@register_validator(identify="hallucination_detector", data_type="string")

class HallucinationValidation(Validator):

def __init__(

self,

embedding_model: Non-compulsory[str] = None,

entailment_model: Non-compulsory[str] = None,

sources: Non-compulsory[List[str]] = None,

**kwargs

):

if embedding_model is None:

embedding_model="all-MiniLM-L6-v2"

self.embedding_model = SentenceTransformer(embedding_model)

self.sources = sources

if entailment_model is None:

entailment_model="GuardrailsAI/finetuned_nli_provenance"

self.nli_pipeline = pipeline("text-classification", mannequin=entailment_model)

tremendous().__init__(**kwargs)

def validate(

self, worth: str, metadata: Non-compulsory[Dict[str, str]] = None

) -> ValidationResult:

# Cut up the textual content into sentences

sentences = self.split_sentences(worth)

# Discover the related sources for every sentence

relevant_sources = self.find_relevant_sources(sentences, self.sources)

entailed_sentences = []

hallucinated_sentences = []

for sentence in sentences:

# Verify if the sentence is entailed by the sources

is_entailed = self.check_entailment(sentence, relevant_sources)

if not is_entailed:

hallucinated_sentences.append(sentence)

else:

entailed_sentences.append(sentence)

if len(hallucinated_sentences) > 0:

return FailResult(

error_message=f"The next sentences are hallucinated: {hallucinated_sentences}",

)

return PassResult()

def split_sentences(self, textual content: str) -> Listing[str]:

if nltk is None:

increase ImportError(

"This validator requires the `nltk` bundle. "

"Set up it with `pip set up nltk`, and take a look at once more."

)

return nltk.sent_tokenize(textual content)

def find_relevant_sources(self, sentences: str, sources: Listing[str]) -> Listing[str]:

source_embeds = self.embedding_model.encode(sources)

sentence_embeds = self.embedding_model.encode(sentences)

relevant_sources = []

for sentence_idx in vary(len(sentences)):

# Discover the cosine similarity between the sentence and the sources

sentence_embed = sentence_embeds[sentence_idx, :].reshape(1, -1)

cos_similarities = np.sum(np.multiply(source_embeds, sentence_embed), axis=1)

# Discover the highest 5 sources which are most related to the sentence which have a cosine similarity larger than 0.8

top_sources = np.argsort(cos_similarities)[::-1][:5]

top_sources = [i for i in top_sources if cos_similarities[i] > 0.8]

# Return the sources which are most related to the sentence

relevant_sources.prolong([sources[i] for i in top_sources])

return relevant_sources

def check_entailment(self, sentence: str, sources: Listing[str]) -> bool:

for supply in sources:

output = self.nli_pipeline({'textual content': supply, 'text_pair': sentence})

if output['label'] == 'entailment':

return True

return FalseInside the category, we initialize two key fashions:

- An embedding mannequin (all-MiniLM-L6-v2) to measure similarity between the LLM’s response and the supply paperwork.

- An entailment mannequin (GuardrailsAI/finetuned_nli_provenance) that performs Pure Language Inference (NLI) to verify if the response is definitely supported by the retrieved content material.

Validation circulate

- Cut up the output:

The LLM response (worth) is cut up into sentences. - Discover related sources:

For every sentence, we discover probably the most comparable chunks from our offered sources (like docs or vector DB outcomes) utilizing embeddings and cosine similarity. - Verify entailment:

For every sentence, we run NLI — checking if the sentence is “entailed” (supported) by the related sources. - Classify outcomes:

If a sentence is supported → it’s entailed.

If not → it’s flagged as hallucinated.

If any hallucinated sentences are discovered, the validator fails and returns the checklist of problematic traces. In any other case, it passes efficiently.

In brief, this validator acts as a fact filter. It ensures the LLM’s response is grounded within the precise supply information and doesn’t make issues up.

guard = Guard().use(

HallucinationValidation(

embedding_model="all-MiniLM-L6-v2",

entailment_model="GuardrailsAI/finetuned_nli_provenance",

sources=['The sun rises in the east and sets in the west.', 'The sun is hot.'],

on_fail=OnFailAction.EXCEPTION

)

)Now we create a guard, this is sort of a wrapper across the validators(guardrails), which is able to execute a number of validators in parallel in the event that they exist.

guard.validate(

'The solar rises within the east.',

)

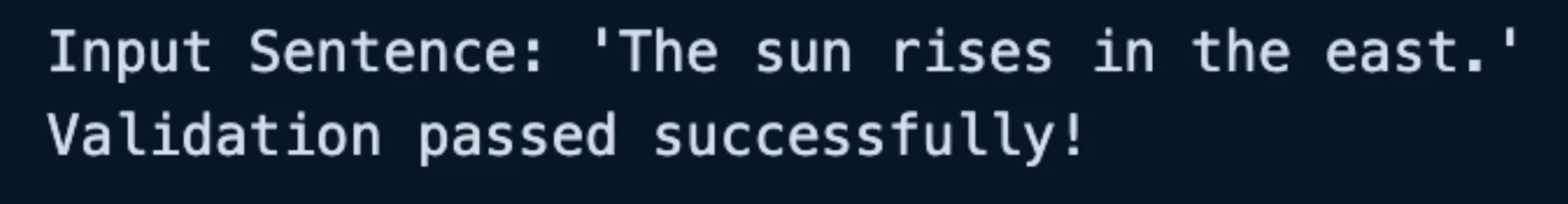

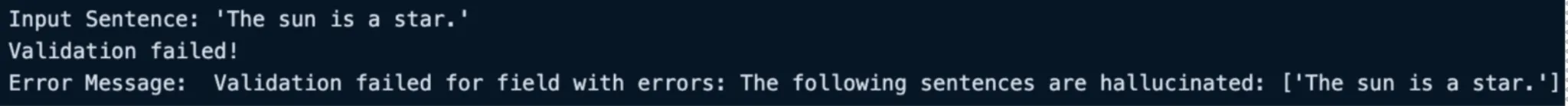

print("Enter Sentence: 'The solar rises within the east.'")

print("Validation handed efficiently!nn")

We will see that the speculation is legitimate, based mostly on the retrieved premise. You possibly can play with the edge to search out the appropriate level for validation. Beneath is an instance the place the validation fails.

strive:

guard.validate(

'The solar is a star.',

)

besides Exception as e:

print("Enter Sentence: 'The solar is a star.'")

print("Validation failed!")

print("Error Message: ", e)

The rationale why this failed isn’t as a result of the sentence is wrong however the sentence isn’t from our sources.

How to ensure our chatbot stays on matter?

We wish our chatbot to stay to its function, not drift into random conversations. For instance, a recruiting chatbot ought to solely speak about hiring, purposes, or job-related queries. An academic chatbot ought to give attention to serving to customers be taught, not chat about films or play trivia.

The concept is straightforward: preserve the chatbot aligned with its core intent. If it’s constructed for information science studying, it shouldn’t out of the blue begin discussing Sport of Thrones.

To do that, we will add area guardrails that filter inputs and outputs based mostly on the subject. Enter guardrails catch off-topic queries earlier than they attain the mannequin, and output guardrails be certain that the mannequin’s responses keep related and centered.

This helps preserve consistency, prevents misuse, and retains the person expertise aligned with what the chatbot is definitely meant to do.

Arms On – Guardrail for Matter Classification

You possibly can try the complete implementation right here: https://github.com/Badribn0612/Guardrails/blob/predominant/Lesson_6.ipynb

So, with a view to filter incoming queries to the agent or chatbot, we will likely be utilizing a subject classifier. Right here, Guardrails AI is utilizing a zero-shot classification mannequin, Fb/bart-large-mnli, and prompts it with the subjects that you really want your LLMs to remain inside.

Take a look at the Hugging Face web page for a similar – https://huggingface.co/fb/bart-large-mnli

Beneath is a pattern code to impose this guardrail.

from transformers import pipeline

CLASSIFIER = pipeline(

"zero-shot-classification",

mannequin="fb/bart-large-mnli",

hypothesis_template="This sentence above incorporates discussions of the folllowing subjects: {}.",

multi_label=True,

)

CLASSIFIER(

"Chick-Fil-A is closed on Sundays.",

["food", "business", "politics"]

)Whereas this strategy may be helpful for basic area restrictions, will probably be tough to make use of zeroshot classification for area of interest subjects, so in these instances we must use an LLM to categorise the subjects, one down of this strategy is that LLM based mostly guardrails are liable to Immediate Injection, therefore utilizing a easy classifier for immediate injection and LLM based mostly guardrails for matter classification in parallel can be one of the best ways to do it.

class Matters(BaseModel):

detected_topics: checklist[str]

t = time.time()

for i in vary(10):

completion = unguarded_client.beta.chat.completions.parse(

mannequin="gpt-4o-mini",

messages=[

{"role": "system", "content": "Given the sentence below, generate which set of topics out of ['food', 'business', 'politics'] is current within the sentence."},

{"function": "person", "content material": "Chick-Fil-A is closed on Sundays."},

],

response_format=Matters,

)

topics_detected = ', '.be a part of(completion.selections[0].message.parsed.detected_topics)

print(f'Iteration {i}, Matters detected: {topics_detected}')

print(f'nTotal time: {time.time() - t}')Above is the implementation of LLM LLM-based matter classifier. That is how we will make our AI programs keep inside the subjects. Now let’s bounce into the subsequent use case.

Find out how to keep away from PII (Private Identifiable Info) leakage

So, what’s PII? Private Identifiable Info contains identifiers and information as talked about beneath.

| Information Kind | Examples |

|---|---|

| Direct Identifiers | |

| Oblique Identifiers | |

| Delicate Information |

|

LLM Information Privateness Dangers:

- Third-party processing publicity

- Potential information retention by suppliers

- Danger of coaching information contamination

- Restricted management over information dealing with

When constructing LLM-powered apps, one of many largest dangers is unintentionally exposing person information like names, emails, or monetary data. To stop that, we have to have PII filtering at two key levels:

- Earlier than sending information to the LLM supplier: Any delicate or private data within the person question must be masked or eliminated earlier than it’s handed to the mannequin. This ensures we’re not leaking personal information to third-party APIs.

- Earlier than exhibiting the response to the person: Even the mannequin’s output can typically echo or regenerate delicate data. We’d like a post-processing layer to scan and filter such information earlier than displaying it again to the person.

By combining enter and output filtering, we be certain that person information stays protected inside our system, preserving privateness, compliance, and belief intact.

We’ll be utilizing Presidio Analyzer, an open-source challenge from Microsoft, to detect and deal with PII information.

If any PII exists inside our vector database, we’ll additionally must filter that out earlier than sending the ultimate response to the person, ensuring no delicate data slips by means of at any stage.

Arms On – Guardrails for PII filtering

Take a look at the complete implementation right here: https://github.com/Badribn0612/Guardrails/blob/predominant/Lesson_7.ipynb

# Presidio imports

from presidio_analyzer import AnalyzerEngine

from presidio_anonymizer import AnonymizerEngine

presidio_analyzer = AnalyzerEngine()

presidio_anonymizer= AnonymizerEngine()

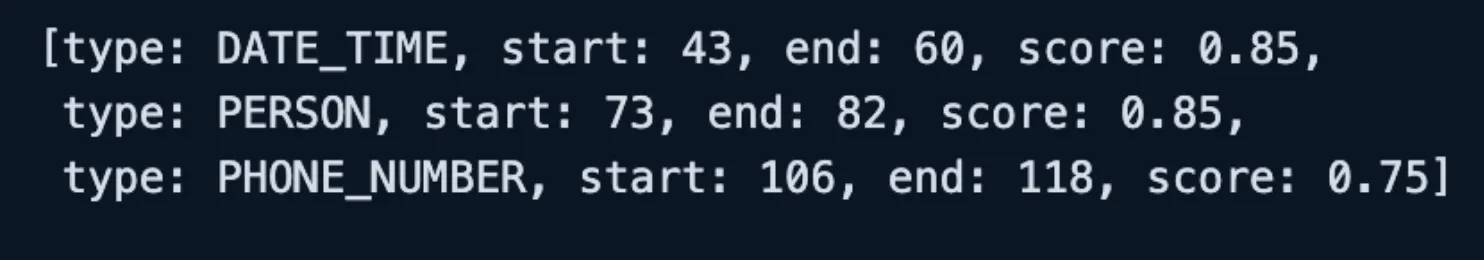

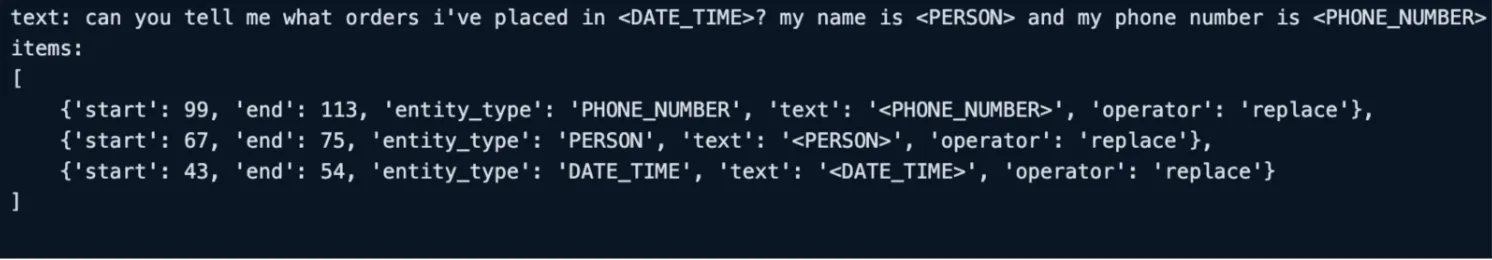

# First, let's analyze the textual content

textual content = "are you able to inform me what orders i've positioned within the final 3 months? my identify is Hank Tate and my telephone quantity is 555-123-4567"

evaluation = presidio_analyzer.analyze(textual content, language="en")

print(presidio_anonymizer.anonymize(textual content=textual content, analyzer_results=evaluation))

Implement a perform to detect PII

def detect_pii(

textual content: str

) -> checklist[str]:

outcome = presidio_analyzer.analyze(

textual content,

language="en",

entities=["PERSON", "PHONE_NUMBER"]

)

return [entity.entity_type for entity in result] Create a Guardrail that filters out PII

@register_validator(identify="pii_detector", data_type="string")

class PIIDetector(Validator):

def _validate(

self,

worth: Any,

metadata: Dict[str, Any] = {}

) -> ValidationResult:

detected_pii = detect_pii(worth)

if detected_pii:

return FailResult(

error_message=f"PII detected: {', '.be a part of(detected_pii)}",

metadata={"detected_pii": detected_pii},

)

return PassResult(message="No PII detected") Create a Guard that ensures no PII is leaked

guard = Guard(identify="pii_guard").use(

PIIDetector(

on_fail=OnFailAction.EXCEPTION

),

)

strive:

guard.validate("are you able to inform me what orders i've positioned within the final 3 months? my identify is Hank Tate and my telephone quantity is 555-123-4567")

besides Exception as e:

print(e)That is how one can implement PII filtering to not expose confidential information to LLM suppliers. Now let’s transfer on to our ultimate use case.

Stopping Competitor Mentions

This is a vital guardrail to make sure our system by no means references competitor names, merchandise, or sources. Even an off-the-cuff point out can hurt the corporate’s fame or violate model tips.

By organising filters or prompt-level restrictions, we will be certain that the chatbot stays impartial and centered on our personal ecosystem, avoiding any content material that would not directly promote or evaluate in opposition to opponents.

For instance, in case you’ve constructed a chatbot for Bain & Firm, it shouldn’t be speaking about or selling opponents like EY or PwC. Its responses ought to strictly mirror Bain’s companies, experience, and model positioning, not draw comparisons or reference exterior corporations.

Above is an structure that you could implement to keep away from mentioning opponents.

Arms On – Guardrails for Competitor Identify Filtering

Take a look at the complete implementation right here: https://github.com/Badribn0612/Guardrails/blob/predominant/Lesson_8.ipynb

Competitor Verify Validator

You’ll construct a validator to verify for opponents talked about within the response out of your LLM. This validator will use a specialised Named Entity Recognition mannequin to verify in opposition to a listing of opponents.

from typing import Non-compulsory, Listing

from transformers import AutoTokenizer, AutoModelForTokenClassification, pipeline

from sentence_transformers import SentenceTransformer

from sklearn.metrics.pairwise import cosine_similarity

import numpy as np

import reArrange the NER mannequin in Hugging Face to make use of within the validator:

# Initialize NER pipeline

tokenizer = AutoTokenizer.from_pretrained("dslim/bert-base-NER")

mannequin = AutoModelForTokenClassification.from_pretrained("dslim/bert-base-NER")

NER = pipeline("ner", mannequin=mannequin, tokenizer=tokenizer)

Organising the validator (Guardrail)

@register_validator(identify="check_competitor_mentions", data_type="string")

class CheckCompetitorMentions(Validator):

def __init__(

self,

opponents: Listing[str],

**kwargs

):

self.opponents = opponents

self.competitors_lower = [comp.lower() for comp in competitors]

self.ner = NER

# Initialize sentence transformer for vector embeddings

self.sentence_model = SentenceTransformer('all-MiniLM-L6-v2')

# Pre-compute competitor embeddings

self.competitor_embeddings = self.sentence_model.encode(self.opponents)

# Set the similarity threshold

self.similarity_threshold = 0.6

tremendous().__init__(**kwargs)

def exact_match(self, textual content: str) -> Listing[str]:

text_lower = textual content.decrease()

matches = []

for comp, comp_lower in zip(self.opponents, self.competitors_lower):

if comp_lower in text_lower:

# Use regex to search out complete phrase matches

if re.search(r'b' + re.escape(comp_lower) + r'b', text_lower):

matches.append(comp)

return matches

def extract_entities(self, textual content: str) -> Listing[str]:

ner_results = self.ner(textual content)

entities = []

current_entity = ""

for merchandise in ner_results:

if merchandise['entity'].startswith('B-'):

if current_entity:

entities.append(current_entity.strip())

current_entity = merchandise['word']

elif merchandise['entity'].startswith('I-'):

current_entity += " " + merchandise['word']

if current_entity:

entities.append(current_entity.strip())

return entities

def vector_similarity_match(self, entities: Listing[str]) -> Listing[str]:

if not entities:

return []

entity_embeddings = self.sentence_model.encode(entities)

similarities = cosine_similarity(entity_embeddings, self.competitor_embeddings)

matches = []

for i, entity in enumerate(entities):

max_similarity = np.max(similarities[i])

if max_similarity >= self.similarity_threshold:

most_similar_competitor = self.opponents[np.argmax(similarities[i])]

matches.append(most_similar_competitor)

return matches

def validate(

self,

worth: str,

metadata: Non-compulsory[dict[str, str]] = None

):

# Step 1: Carry out precise matching on the complete textual content

exact_matches = self.exact_match(worth)

if exact_matches:

return FailResult(

error_message=f"Your response immediately mentions opponents: {', '.be a part of(exact_matches)}"

)

# Step 2: Extract named entities

entities = self.extract_entities(worth)

# Step 3: Carry out vector similarity matching

similarity_matches = self.vector_similarity_match(entities)

# Step 4: Mix matches and verify if any had been discovered

all_matches = checklist(set(exact_matches + similarity_matches))

if all_matches:

return FailResult(

error_message=f"Your response mentions opponents: {', '.be a part of(all_matches)}"

)

return PassResult()This validator mainly helps me be certain that my chatbot by no means mentions or promotes opponents, immediately or not directly.

Right here’s the way it works in easy phrases

- I go in a listing of competitor names when initializing the validator. The code then shops these names (in each regular and lowercase) and prepares embeddings for them utilizing a SentenceTransformer mannequin.

- It makes use of two checks — one for precise mentions and one other for semantic similarity, so even when the mannequin tries to rephrase a competitor’s identify barely, we will nonetheless catch it.

What truly occurs

- Precise match: It first seems by means of the chatbot’s response to see if any competitor names are immediately talked about.

- Entity extraction: Then it runs NER to search out any group names within the response — this helps detect model mentions even when the chatbot doesn’t use the precise identify.

- Vector similarity verify: For every extracted entity, it checks how semantically shut it’s to any competitor utilizing embeddings. If the similarity is above the set threshold (0.6), that entity is flagged as a competitor.

- Last verify: If any competitor names present up (both precisely or semantically), the validation fails with an error message itemizing them. In any other case, it passes.

So, briefly, this validator is my means of making certain that the chatbot stays fully aligned with our model voice and doesn’t slip up by mentioning or selling opponents like EY or PwC in a Bain chatbot state of affairs.

Extra issues to discover

It’s also possible to try the Guardrails Hub – it’s an awesome place to discover open-source and community-built guardrails, and even create your individual: https://hub.guardrailsai.com/

Most guardrails are designed for particular use instances, however in relation to extra complicated eventualities, we regularly want to make use of LLMs as guardrails themselves. Whereas this strategy can introduce immediate injection dangers, we will mitigate that by including an ML classifier layer on high for additional security.

It’s also possible to discover NVIDIA NeMo Guardrails, one other highly effective framework for constructing secure and managed AI apps:

Conclusion

Constructing production-ready LLM purposes wants extra than simply flashy demos; it wants sturdy, systematic safeguards. Guardrails play a key function in tackling 4 main challenges confronted by any LLM: detecting hallucinations with NLI validation, preserving conversations on-topic by means of classifiers, defending PII utilizing instruments like Presidio Analyzer, and making certain model security with NER and semantic checks.

The perfect programs mix a number of layers, easy rule-based filters, small ML fashions, and LLM-based validators to construct dependable defenses. However this goes past only one app. Unchecked AI content material provides to the rising “AI slop” on-line, the place hallucinated information feeds again into future fashions.

Organizations ought to deal with validation pipelines not solely as a compliance want however as a accountability to keep up content material high quality and belief. Use frameworks like Guardrails AI and NVIDIA NeMo Guardrails, take a look at constantly, and bear in mind, guardrails aren’t limits. They’re what flip LLM experiments into secure, enterprise-grade programs that ship actual worth safely.

Login to proceed studying and luxuriate in expert-curated content material.