If you’re following AI Agents, you may have seen that LangChain has created a nice ecosystem with LangChain, LangGraph, LangSmith, and LangServe. Leveraging these, we can build, deploy, evaluate, and monitor agentic AI systems. While building an AI agent, I thought to myself, “Why not show a simple demo of how LangGraph and LangSmith work together?” This is helpful because AI agents often need multiple LLM calls and therefore have higher costs associated with them. This combo helps track the expenses and also evaluate the system using custom datasets. Without any further ado, let’s dive in.

LangGraph for AI Agents

Simply put, AI agents are LLMs with the capability to think/reason, and they may access tools to address their shortcomings or gain access to real-time information. LangGraph is an agentic AI framework based on LangChain for building these agents. LangGraph helps build graph-based agents; the creation of agentic workflows is also simplified by the many built-in functions already present in the LangGraph/LangChain libraries.
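To make the “LLM plus tools” idea concrete, here is a minimal sketch of a tool-calling loop in plain Python. It is not how LangGraph is implemented; fake_model, TOOLS, and run_agent are hypothetical names, and the “model” is a hard-coded stand-in that asks for the calculator once and then answers.

```python
from typing import Callable, Dict, List


def calculator(expression: str) -> str:
    """Toy tool: evaluate a basic arithmetic expression."""
    return str(eval(expression, {"__builtins__": {}}))


# Registry of tools the agent is allowed to call (hypothetical structure)
TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def fake_model(messages: List[dict]) -> dict:
    """Stand-in for an LLM: request the tool once, then give a final answer."""
    last = messages[-1]
    if last["role"] == "user":
        return {"role": "assistant", "tool": "calculator", "tool_input": last["content"]}
    return {"role": "assistant", "content": f"The answer is {last['content']}."}


def run_agent(question: str, max_steps: int = 5) -> str:
    """Alternate between the model and its tools until a final answer appears."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = fake_model(messages)
        messages.append(reply)
        if "tool" not in reply:
            return reply["content"]  # no tool requested: this is the final answer
        observation = TOOLS[reply["tool"]](reply["tool_input"])
        messages.append({"role": "tool", "content": observation})
    return "Stopped: step limit reached."


print(run_agent("(12 + 8) * 3"))
```

A real agent replaces fake_model with an LLM call, which is also why each question can trigger several model calls.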


What is LangSmith?

LangSmith is a monitoring and evaluation platform by LangChain. It’s framework-agnostic, designed to work with any agentic framework, such as LangGraph, or even with agents built completely from scratch. LangSmith can be easily configured to trace the runs and also track the expenses of the agentic system. It also supports running experiments on the system, like changing the prompt and models, and comparing the results. It has predefined evaluators like helpfulness, correctness, and hallucinations. You can also choose to define your own evaluators. Let’s have a look at the LangSmith platform to get a better idea of it.
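As a rough idea of what a custom evaluator can look like, here is a sketch of a plain Python function that compares the last number in the agent’s answer with the last number in the reference answer. It assumes the (inputs, outputs, reference_outputs) signature and the "answer" key used later in this tutorial; the names numeric_match and last_number are hypothetical, and returning a dict with "key" and "score" is an assumption about the feedback format.

```python
import math
import re
from typing import Optional

# Matches numbers such as 19, 14.0, or 426,763,565
_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def last_number(text: str) -> Optional[float]:
    """Return the last number found in the text, ignoring thousands separators."""
    matches = _NUMBER.findall(text)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))


def numeric_match(inputs: dict, outputs: dict, reference_outputs: dict) -> dict:
    """Score True when both answers end in (approximately) the same number."""
    predicted = last_number(outputs.get("answer", ""))
    expected = last_number(reference_outputs.get("answer", ""))
    score = (
        predicted is not None
        and expected is not None
        and math.isclose(predicted, expected, rel_tol=1e-6)
    )
    return {"key": "numeric_match", "score": score}


print(numeric_match(
    {"question": "98765 * 4321"},
    {"answer": "The result is 426763565"},
    {"answer": "The answer is 426,763,565."},
))
```

Unlike an LLM judge, a check like this costs nothing per example, but it only covers answers that end in a number.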


The LangSmith Platform

Let’s first sign up or sign in to check out the platform: https://www.langchain.com/langsmith

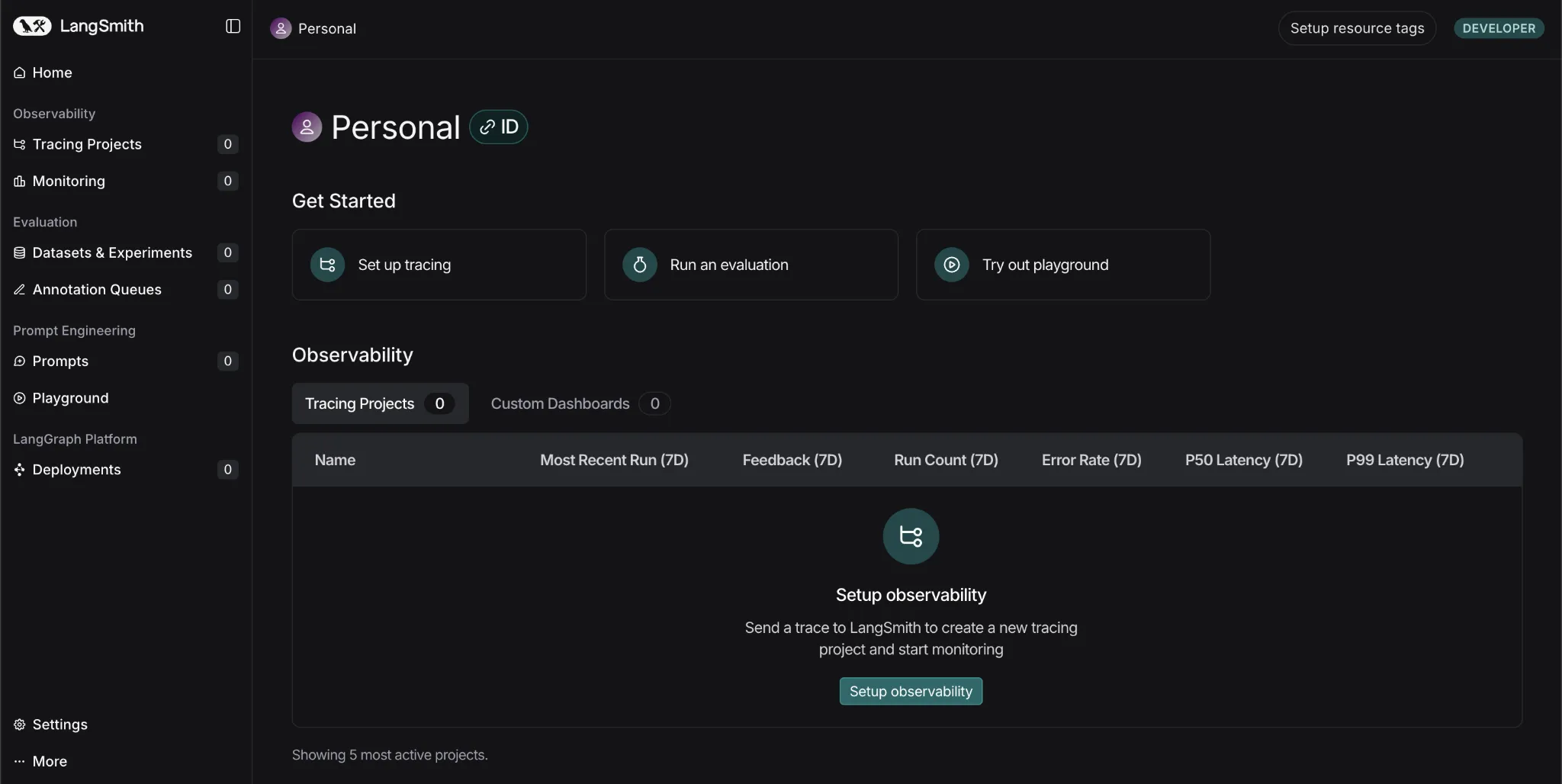

This is how the platform looks, with multiple tabs:

- Tracing Projects: Keeps track of multiple projects along with their traces or sets of runs. Here, the costs, errors, latency, and many other things are tracked.

- Monitoring: Here you can set alerts to warn you, for instance, if the system fails or the latency is above the set threshold.

- Datasets & Experiments: Here, you can run experiments using human-crafted datasets or use the platform to create AI-generated datasets for testing your system. You can also change your model to see how the performance varies.

- Prompts: Here you can store a few prompts and later change the wording or sequence of instructions to see how your results change.

LangSmith in Action

Note: We’ll only build simple agents for this tutorial to focus on the LangSmith side of things.

Let’s build a simple arithmetic expression-solving agent that uses a simple tool, and then enable traceability. After that, we’ll check the LangSmith dashboard to see what can be tracked using the platform.

Getting the API keys:

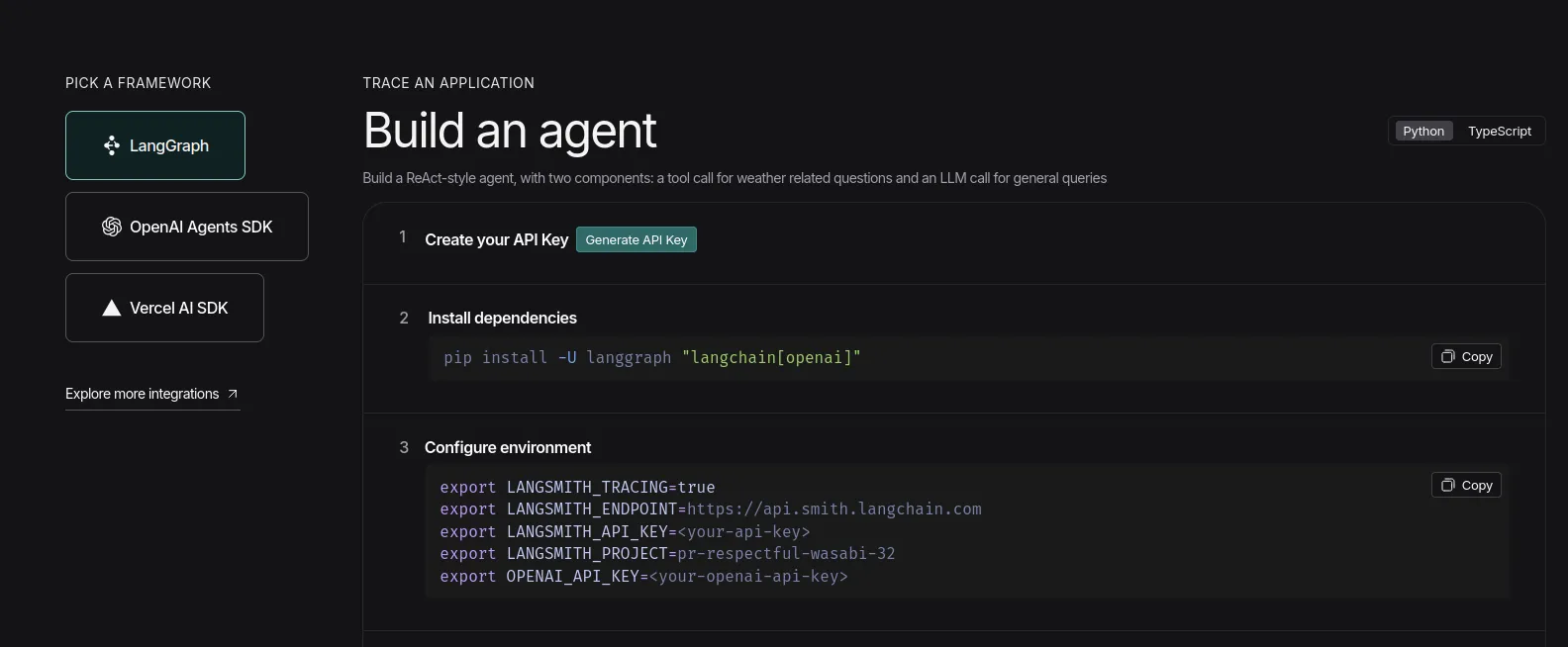

- Go to the LangSmith dashboard and click on the ‘Setup Observability’ button. Then you’ll see this screen. https://www.langchain.com/langsmith

Now, click on the ‘Generate API Key’ option and keep the LangSmith key handy.

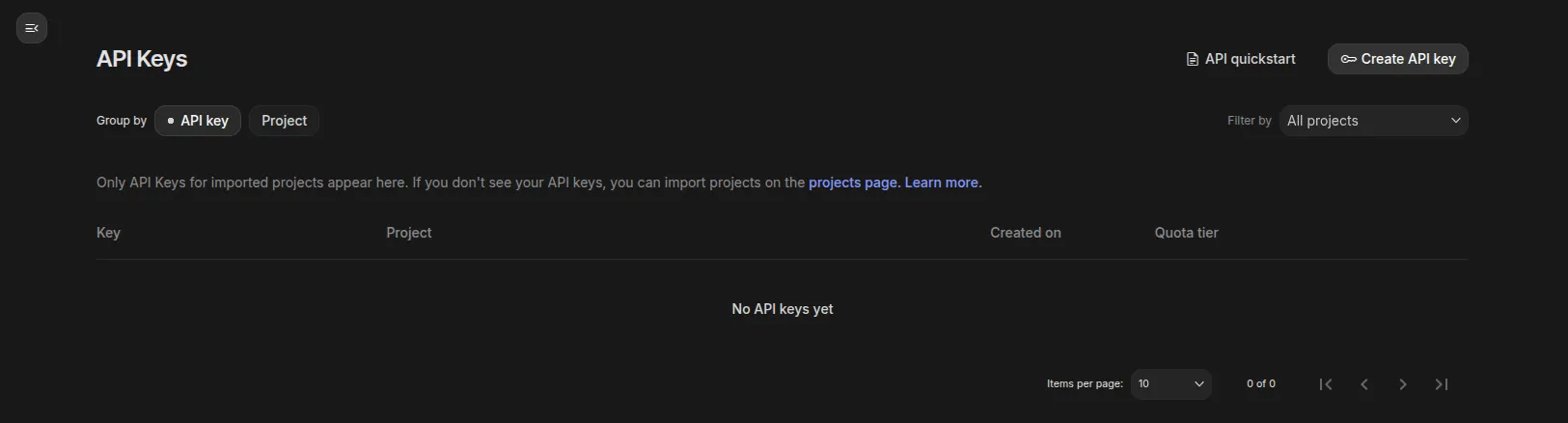

- Now go to Google AI Studio to get your hands on the Gemini API key: https://aistudio.google.com/api-keys

Click on ‘Create API key’ at the top right, create a project if one doesn’t already exist, and keep the key handy.

Python Code

Note: I’ll be using Google Colab for running the code.

Installations

!pip install -q langgraph langsmith langchain

!pip install -q langchain-google-genai

Note: Make sure to restart the session before continuing from here.

Setting up the environment

Pass the API keys when prompted.

from getpass import getpass
import os

# Read the keys interactively so they are not stored in the notebook
LANGCHAIN_API_KEY = getpass('Enter LangSmith API Key: ')
GOOGLE_API_KEY = getpass('Enter Gemini API Key: ')

# Enable LangSmith tracing and choose the project that collects the runs
os.environ['LANGCHAIN_TRACING_V2'] = 'true'
os.environ['LANGCHAIN_API_KEY'] = LANGCHAIN_API_KEY
os.environ['LANGCHAIN_PROJECT'] = 'Testing'

Note: It’s advisable to track different projects with different project names; here, I’m naming it ‘Testing’.
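Tracing silently does nothing if one of these variables is missing, so a quick check before running the agent can save some confusion. The sketch below is only a convenience; REQUIRED_VARS and missing_tracing_vars are hypothetical names, and the variable names are the ones set above.

```python
import os
from typing import List, Mapping

# The three variables configured in this tutorial
REQUIRED_VARS = ["LANGCHAIN_TRACING_V2", "LANGCHAIN_API_KEY", "LANGCHAIN_PROJECT"]


def missing_tracing_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example with an explicit mapping instead of the real environment
example_env = {"LANGCHAIN_TRACING_V2": "true", "LANGCHAIN_PROJECT": "Testing"}
print(missing_tracing_vars(example_env))
```

Calling missing_tracing_vars() with no argument checks the actual environment of the session.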

Setting up and running the agent

- Here, we’re using a simple tool that the agent can use to solve math expressions.

- We’re using the built-in create_react_agent from LangGraph, where we have to define the model, give access to tools, and we’re good to go.

from langgraph.prebuilt import create_react_agent
from langchain_google_genai import ChatGoogleGenerativeAI

def solve_math_problem(expression: str) -> str:
    """Solve a math problem."""
    try:
        # Evaluate the mathematical expression with built-ins disabled
        result = eval(expression, {"__builtins__": {}})
        return f"The answer is {result}."
    except Exception:
        return "I couldn't solve that expression."

# Initialize the Gemini model with the API key
model = ChatGoogleGenerativeAI(
    model="gemini-2.5-flash",
    google_api_key=GOOGLE_API_KEY
)

# Create the agent
agent = create_react_agent(
    model=model,
    tools=[solve_math_problem],
    prompt=(
        "You are a Math Tutor AI. "
        "When a user asks a math question, reason through the steps clearly "
        "and use the tool `solve_math_problem` for numeric calculations. "
        "Always explain your reasoning before giving the final answer."
    ),
)

# Run the agent

response = agent.invoke(

{"messages": [{"role": "user", "content": "What is (12 + 8) * 3?"}]}

)

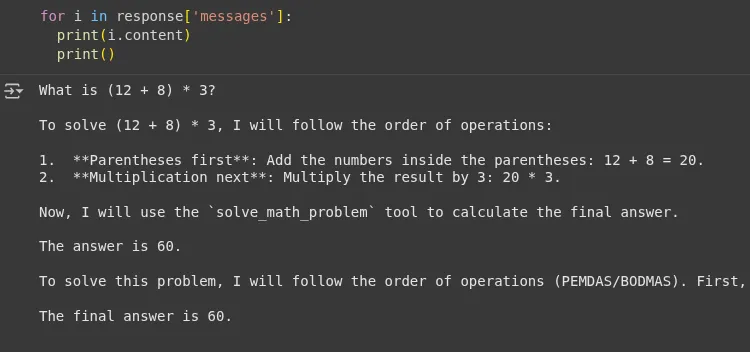

print(response)Output:

We can see that the agent used the tool’s response ‘The answer is 60’ and didn’t hallucinate while answering the question. Now let’s check the LangSmith dashboard.
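Before moving on, a side note on the tool: calling eval with empty __builtins__ keeps the demo short, but it is generally not considered a real sandbox, because the expression comes from the model. As a hedged alternative, here is a sketch that parses the expression with Python’s ast module and only allows numbers and basic arithmetic operators. The names safe_eval and solve_math_problem_safe are hypothetical, and this is not part of the original agent.

```python
import ast
import operator

# Only these operators are allowed; anything else is rejected
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_eval(expression: str):
    """Evaluate an arithmetic expression by walking its syntax tree."""

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        # Names, calls, attribute access, etc. all end up here
        raise ValueError("Unsupported expression")

    return _eval(ast.parse(expression, mode="eval"))


def solve_math_problem_safe(expression: str) -> str:
    """Solve a math problem without calling eval."""
    try:
        return f"The answer is {safe_eval(expression)}."
    except (ValueError, SyntaxError, ZeroDivisionError, OverflowError):
        return "I couldn't solve that expression."


print(solve_math_problem_safe("(12 + 8) * 3"))
print(solve_math_problem_safe("__import__('os')"))
```

This sketch does not bound exponents, so a very large power can still be slow; a production tool would probably add a limit there.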

LangSmith Dashboard

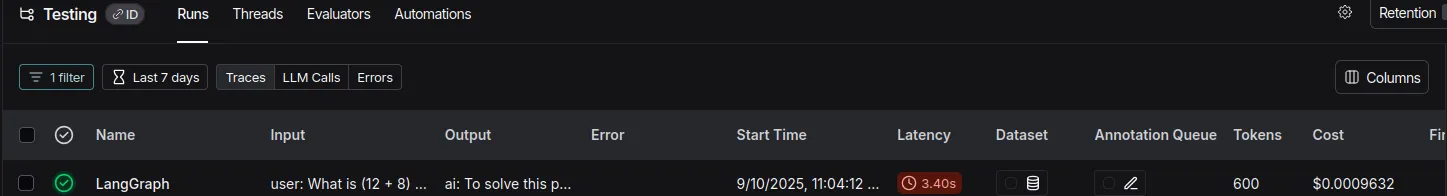

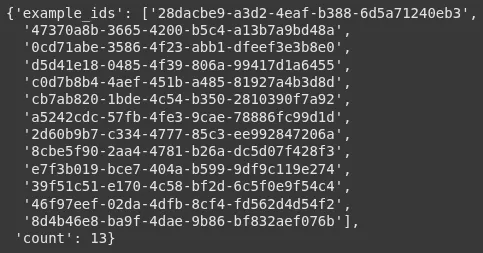

Tracing Projects tab

We can see that the project has been created with the name ‘Testing’; you can click on it to see detailed logs.

For each run, it shows:

- Total Tokens

- Total Cost

- Latency

- Input

- Output

- Time when the code was executed

Note: I’m using the free tier of Gemini here, so I can use the key free of cost within the daily limits.
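To get a feel for how per-run numbers like these roll up into totals, here is a small sketch that estimates cost from token counts. The prices are made-up placeholders rather than real provider rates, and RunRecord, run_cost, and summarize are hypothetical names; LangSmith computes these figures for you.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical prices in USD per one million tokens (not real provider rates)
PRICE_PER_M_INPUT = 0.50
PRICE_PER_M_OUTPUT = 1.50


@dataclass
class RunRecord:
    """A simplified stand-in for one traced run."""
    input_tokens: int
    output_tokens: int
    latency_s: float


def run_cost(run: RunRecord) -> float:
    """Estimate the cost of one run from its token counts."""
    return (
        run.input_tokens * PRICE_PER_M_INPUT
        + run.output_tokens * PRICE_PER_M_OUTPUT
    ) / 1_000_000


def summarize(runs: List[RunRecord]) -> Dict[str, float]:
    """Aggregate tokens, cost, and average latency across runs."""
    return {
        "total_tokens": sum(r.input_tokens + r.output_tokens for r in runs),
        "total_cost_usd": round(sum(run_cost(r) for r in runs), 6),
        "avg_latency_s": sum(r.latency_s for r in runs) / len(runs),
    }


runs = [RunRecord(1000, 200, 1.0), RunRecord(3000, 400, 2.0)]
print(summarize(runs))
```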

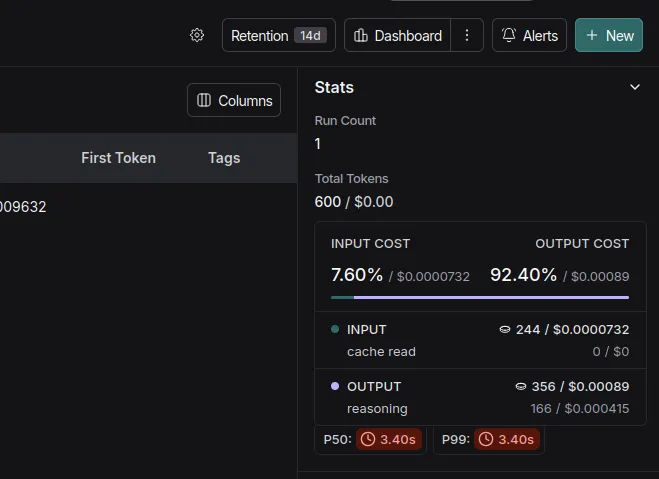

Monitoring tab

- Here you can see a dashboard with the projects, runs, and total costs.

LLM as a Judge

LangSmith allows the creation of a dataset using a simple dictionary with input and output keys. This dataset, with its expected outputs, can be used to evaluate an AI system’s generated outputs on metrics like helpfulness, correctness, and hallucinations.

We’ll use a similar math agent, create the dataset, and evaluate our agentic system.

Note: I’ll be using the OpenAI API (gpt-4o-mini) for the demo here; this is to avoid API limit issues with the free-tier Gemini API.

Installations

!pip install -q openevals langchain-openai

Environment Setup

import os
from google.colab import userdata

# Read the OpenAI key from Colab's secrets
os.environ['OPENAI_API_KEY'] = userdata.get('OPENAI_API_KEY')

Defining the Agent

from langsmith import Client, wrappers
from openevals.llm import create_llm_as_judge
from openevals.prompts import CORRECTNESS_PROMPT
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent
from langchain_core.tools import tool
from typing import Dict, List
import requests

# STEP 1: Define tools for the agent
@tool
def solve_math_problem(expression: str) -> str:
    """Solve a math problem."""
    try:
        # Evaluate the mathematical expression with built-ins disabled
        result = eval(expression, {"__builtins__": {}})
        return f"The answer is {result}."
    except Exception:
        return "I couldn't solve that expression."

# STEP 2: Create the LangGraph ReAct agent
def create_math_agent():
    """Create a ReAct agent with tools."""
    # Initialize the LLM
    model = ChatOpenAI(model="gpt-4o-mini", temperature=0)

    # Define the tools
    tools = [solve_math_problem]

    # Create the ReAct agent using LangGraph's prebuilt function
    agent = create_react_agent(
        model=model,
        tools=tools,
        prompt=(
            "You are a Math Tutor AI. "
            "When a user asks a math question, reason through the steps clearly "
            "and use the tool `solve_math_problem` for numeric calculations. "
            "Always explain your reasoning before giving the final answer."
        ),
    )
    return agent

Creating the dataset

- Let’s create a dataset with simple and hard math expressions that we can later use to run experiments.

client = Client()

dataset = client.create_dataset(
    dataset_name="Math Dataset",
    description="Hard numeric + mixed arithmetic expressions to evaluate the solver agent."
)

examples = [
    # Simple checks
    {
        "inputs": {"question": "12 + 7"},
        "outputs": {"answer": "The answer is 19."},
    },
    {
        "inputs": {"question": "100 - 37"},
        "outputs": {"answer": "The answer is 63."},
    },
    # Mixed operators and parentheses
    {
        "inputs": {"question": "(3 + 5) * 2 - 4 / 2"},
        "outputs": {"answer": "The answer is 14.0."},
    },
    {
        "inputs": {"question": "2 * (3 + (4 - 1)*5) / 3"},
        "outputs": {"answer": "The answer is 12.0."},
    },
    # Large numbers & multiplication
    {
        "inputs": {"question": "98765 * 4321"},
        "outputs": {"answer": "The answer is 426,763,565."},
    },
    {
        "inputs": {"question": "123456789 * 987654321"},
        "outputs": {"answer": "The answer is 121,932,631,112,635,269."},
    },
    # Division, decimals, rounding
    {
        "inputs": {"question": "22 / 7"},
        "outputs": {"answer": "The answer is approximately 3.142857142857143."},
    },
    {
        "inputs": {"question": "5 / 3"},
        "outputs": {"answer": "The answer is 1.6666666666666667."},
    },
    # Exponents, roots
    {
        "inputs": {"question": "2 ** 10 + 3 ** 5"},
        "outputs": {"answer": "The answer is 1267."},
    },
    {
        "inputs": {"question": "sqrt(2) * sqrt(8)"},
        "outputs": {"answer": "The answer is 4.0."},
    },
    # Edge / error / "unanswerable" cases
    {
        "inputs": {"question": "5 / 0"},
        "outputs": {"answer": "I couldn't solve that expression."},
    },
    {
        "inputs": {"question": "abc + 5"},
        "outputs": {"answer": "I couldn't solve that expression."},
    },
    {
        "inputs": {"question": ""},
        "outputs": {"answer": "I couldn't solve that expression."},
    },
]

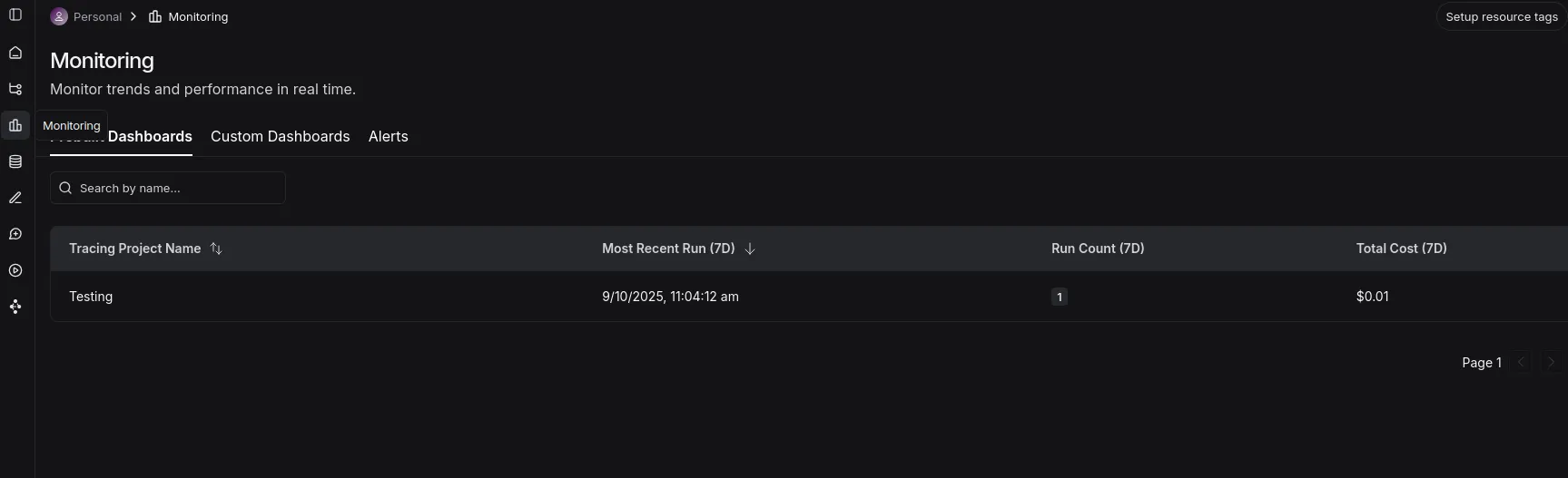

consumer.create_examples(

dataset_id=dataset.id,

examples=examples)Nice! We created a dataset with 13 information:
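Because the judge compares the agent’s answers against the reference outputs, a wrong reference shows up as a false failure. One way to guard against that is to recompute the expected values before uploading. The sketch below does this for a handful of expressions in the same style as the dataset; check_references and reference_values are hypothetical names, and eval is used here only on expressions we wrote ourselves.

```python
import math
from typing import Dict, List, Tuple

# Expression -> expected numeric value, in the style of the dataset above
reference_values: Dict[str, float] = {
    "12 + 7": 19,
    "100 - 37": 63,
    "(3 + 5) * 2 - 4 / 2": 14.0,
    "98765 * 4321": 426763565,
    "2 ** 10 + 3 ** 5": 1267,
    "sqrt(2) * sqrt(8)": 4.0,
}


def check_references(pairs: Dict[str, float]) -> List[Tuple[str, float, float]]:
    """Recompute each expression and return (expression, expected, actual) mismatches."""
    allowed = {"__builtins__": {}, "sqrt": math.sqrt}
    mismatches = []
    for expression, expected in pairs.items():
        actual = eval(expression, allowed)  # trusted, hand-written expressions only
        if not math.isclose(actual, expected, rel_tol=1e-9):
            mismatches.append((expression, expected, actual))
    return mismatches


print(check_references(reference_values))  # an empty list means all references agree
print(check_references({"2 + 2": 5}))      # a deliberately wrong reference is reported
```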

Defining the target function

- This function invokes the agent and returns the response.

def target(inputs: Dict) -> Dict:
    agent = create_math_agent()
    agent_input = {
        "messages": [{"role": "user", "content": inputs["question"]}]
    }
    result = agent.invoke(agent_input)
    # The last message in the conversation holds the agent's final answer
    final_message = result["messages"][-1]
    answer = final_message.content if hasattr(final_message, 'content') else str(final_message)
    return {"answer": answer}

Defining the Evaluator

- We use the pre-built create_llm_as_judge function and also import the prompt from the openevals library.

- We’re using 4o-mini for now to keep the costs low, but a reasoning model would be better suited for this task.

def correctness_evaluator(inputs: Dict, outputs: Dict, reference_outputs: Dict) -> Dict:
    # Build an LLM judge that scores the answer against the reference output
    evaluator = create_llm_as_judge(
        prompt=CORRECTNESS_PROMPT,
        model="openai:gpt-4o-mini",
        feedback_key="correctness",
    )
    eval_result = evaluator(
        inputs=inputs,
        outputs=outputs,
        reference_outputs=reference_outputs
    )
    return eval_result

Running the evaluation

experiment_results = client.evaluate(
    target,
    data="Math Dataset",
    evaluators=[correctness_evaluator],
    experiment_prefix="langgraph-math-agent",
    max_concurrency=2,
)

Output:

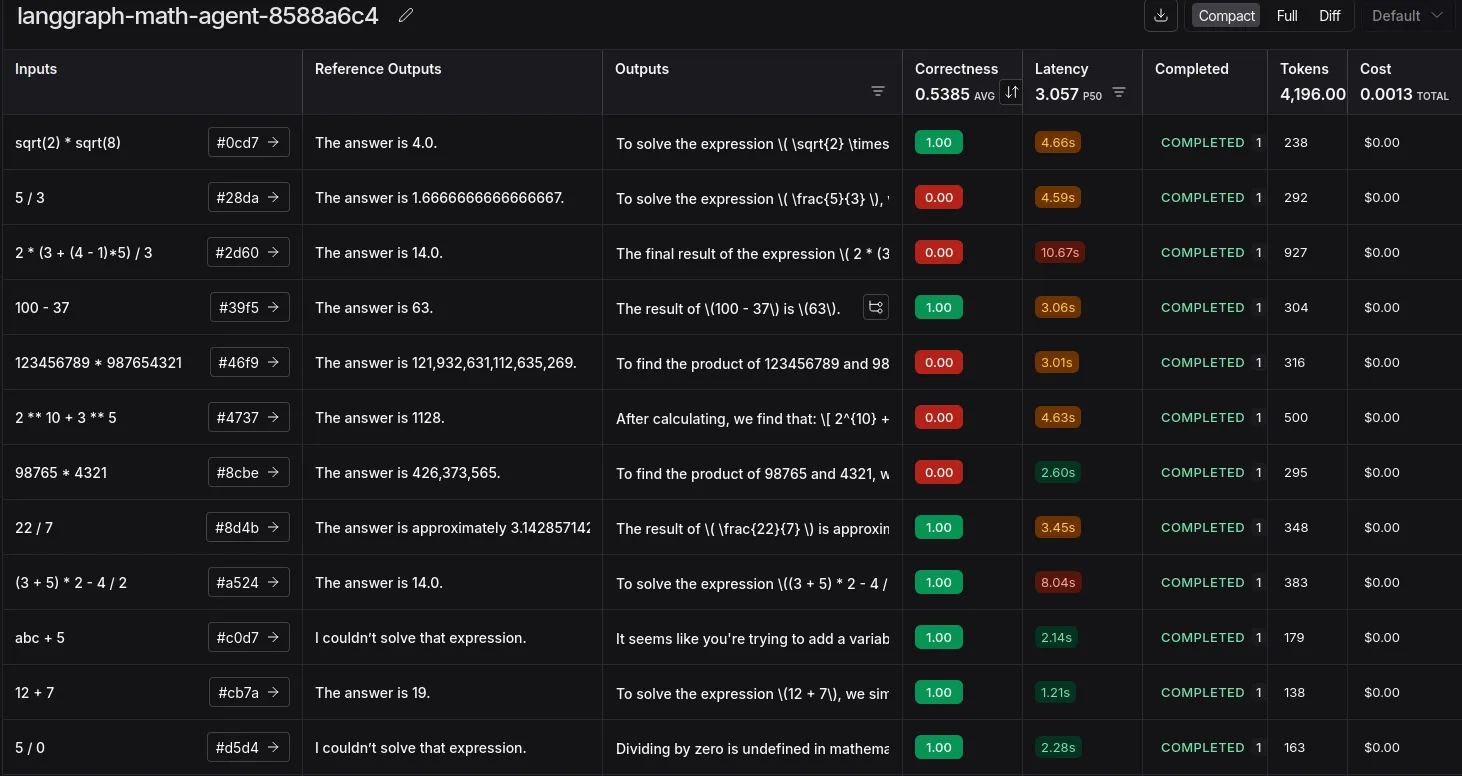

A link will be generated after the run. When you click it, you’ll be redirected to LangSmith’s ‘Datasets & Experiments’ tab, where you can see the results of the experiment.

We have successfully experimented with using an LLM as a judge. This is insightful in terms of finding edge cases, costs, and token usage.

The errors here are mostly mismatches caused by the use of commas or the presence of long decimals. This can be solved by changing the evaluation prompt or trying a reasoning model, or simply by adding commas and ensuring consistent decimal formatting at the tool level itself.
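As a sketch of the tool-level fix, the helper below formats a numeric result with thousands separators and a bounded number of decimals before it is placed in the tool’s reply. The name format_number and the default of four decimals are assumptions; the reference answers in the dataset would need to follow the same convention for this to help.

```python
def format_number(value, max_decimals: int = 4) -> str:
    """Format a number with thousands separators and at most max_decimals decimals."""
    if isinstance(value, float):
        value = round(value, max_decimals)
    return f"{value:,}"


def format_tool_reply(value) -> str:
    """Build the tool's reply string from a numeric result."""
    return f"The answer is {format_number(value)}."


print(format_tool_reply(98765 * 4321))
print(format_tool_reply(22 / 7))
```

With this in place, the tool could return format_tool_reply(result) instead of interpolating the raw value.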

Conclusion

And there you have it! We’ve successfully shown how LangGraph and LangSmith work together: LangGraph for building our agent and LangSmith for tracing and evaluating it. This combo is incredibly powerful for tracking expenses and ensuring your agent performs as expected with custom datasets. While we focused on tracing and experiments, LangSmith’s capabilities don’t stop there. You can also explore powerful features like A/B testing different prompts in production, adding human-in-the-loop feedback directly to traces, and creating automations to streamline your debugging workflow.

Frequently Asked Questions

A. The -q (or --quiet) flag tells pip to be “quiet” during installation. It reduces the log output, making your notebook cleaner by only showing important warnings or errors.

A. LangChain is best for creating sequential chains of actions. LangGraph extends this by letting you define complex, cyclical flows with conditional logic, which is essential for building sophisticated agents.

A. No, LangSmith is framework-agnostic. You can integrate it into any LLM application to get tracing and evaluation, even if it’s built from scratch using libraries like OpenAI’s directly.
