Which of your browser workflows would you delegate immediately if an agent could plan and execute predefined UI actions? Google AI introduces Gemini 2.5 Computer Use, a specialised variant of Gemini 2.5 that plans and executes real UI actions in a live browser through a constrained action API. It is available in public preview through Google AI Studio and Vertex AI. The model targets web automation and UI testing, with documented, human-judged gains on standard web and mobile control benchmarks, and a safety layer that can require human confirmation for risky steps.

What does the model actually ship?

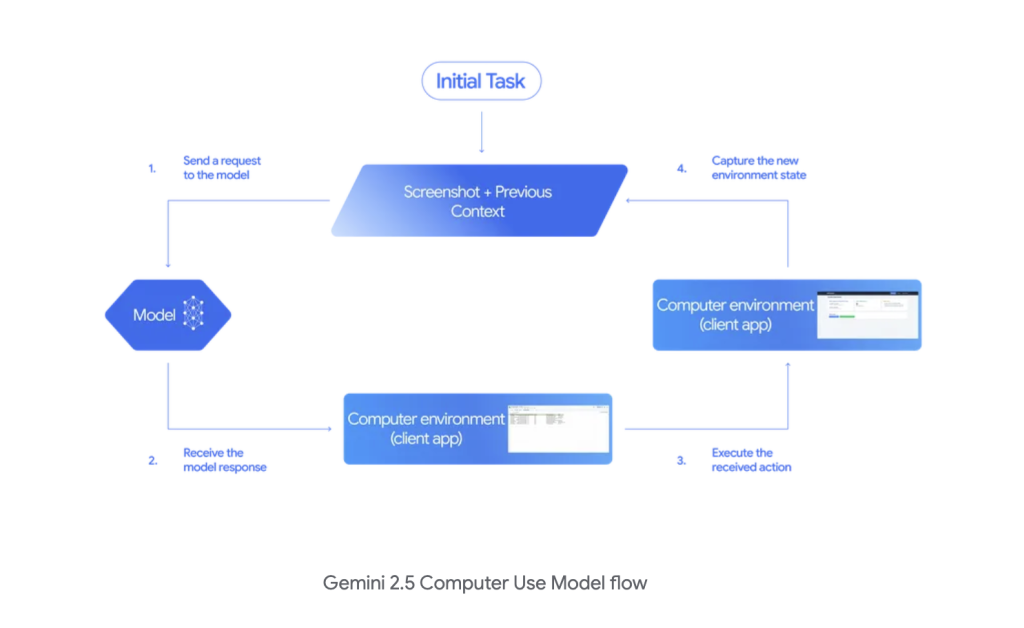

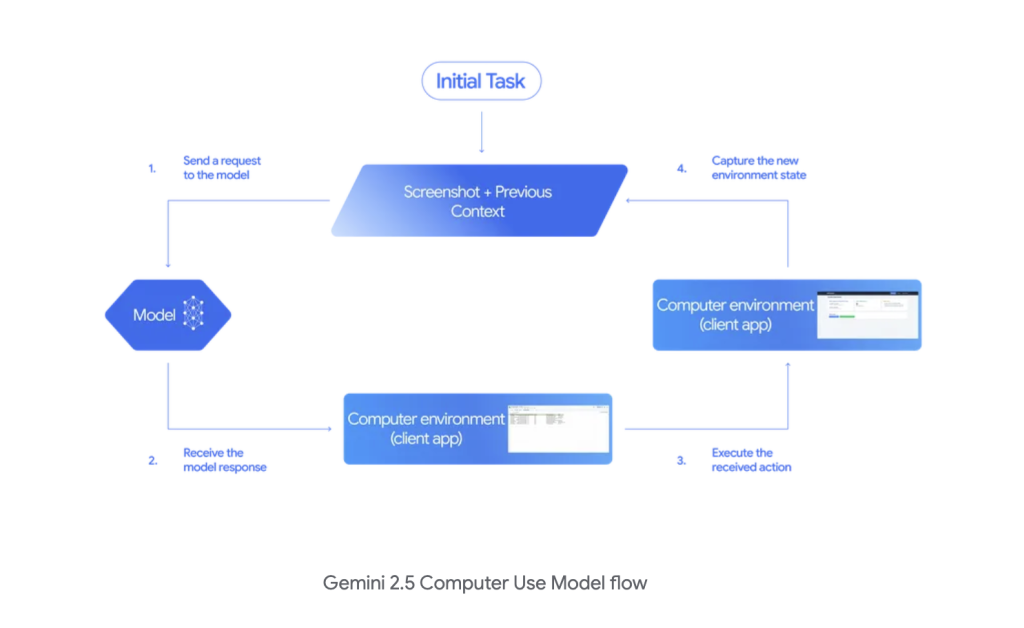

Developers use a new computer_use tool through which the model returns function calls such as click_at, type_text_at, or drag_and_drop. Client code executes each action (e.g., with Playwright or Browserbase), captures a fresh screenshot and the current URL, and loops until the task ends or a safety rule blocks it. The supported action space consists of 13 predefined UI actions (open_web_browser, wait_5_seconds, go_back, go_forward, search, navigate, click_at, hover_at, type_text_at, key_combination, scroll_document, scroll_at, drag_and_drop) and can be extended with custom functions (e.g., open_app, long_press_at, go_home) for non-browser surfaces.
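The propose, execute, observe loop described above can be sketched in plain Python. This is a minimal, hypothetical sketch, not the Gemini SDK: `FunctionCall`, `Observation`, `run_agent_loop`, the `scripted_model` stand-in for the model, and the `fake_executor` stand-in for a Playwright or Browserbase driver are names invented for illustration, and the argument names passed to `navigate` and `click_at` are assumptions. Only the 13 action names come from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, FrozenSet, List, Optional

# The 13 predefined UI actions named in the article.
PREDEFINED_ACTIONS: FrozenSet[str] = frozenset({
    "open_web_browser", "wait_5_seconds", "go_back", "go_forward",
    "search", "navigate", "click_at", "hover_at", "type_text_at",
    "key_combination", "scroll_document", "scroll_at", "drag_and_drop",
})


@dataclass
class FunctionCall:
    """Hypothetical stand-in for one function call returned by the model."""
    name: str
    args: dict = field(default_factory=dict)


@dataclass
class Observation:
    """Hypothetical state the client feeds back after each step."""
    screenshot: bytes
    url: str


def run_agent_loop(
    propose: Callable[[Observation], Optional[FunctionCall]],
    execute: Callable[[FunctionCall], Observation],
    first_obs: Observation,
    allowed: FrozenSet[str] = PREDEFINED_ACTIONS,
    max_steps: int = 20,
) -> List[str]:
    """Propose, execute, observe; stop when done, rejected, or out of steps.

    Returns the names of the executed actions, in order.
    """
    executed: List[str] = []
    obs = first_obs
    for _ in range(max_steps):
        call = propose(obs)  # in practice: a model request with the latest screenshot/URL
        if call is None:  # no further action proposed: treat the task as finished
            break
        if call.name not in allowed:  # reject anything outside the declared action space
            raise ValueError(f"action not in allowed set: {call.name}")
        obs = execute(call)  # e.g. a Playwright or Browserbase executor (hypothetical here)
        executed.append(call.name)
    return executed


# Usage with a scripted "model" and a fake executor (both hypothetical).
_script = iter([
    FunctionCall("navigate", {"url": "https://example.com"}),
    FunctionCall("click_at", {"x": 100, "y": 200}),
    None,
])


def scripted_model(obs: Observation) -> Optional[FunctionCall]:
    return next(_script)


def fake_executor(call: FunctionCall) -> Observation:
    return Observation(screenshot=b"", url="https://example.com")


print(run_agent_loop(scripted_model, fake_executor, Observation(b"", "about:blank")))
# ['navigate', 'click_at']
```

In this sketch, extending the action space for a non-browser surface just means passing a larger allowlist, e.g. `allowed=PREDEFINED_ACTIONS | {"open_app", "long_press_at", "go_home"}`. How custom functions are actually declared to the model is defined by Google's API, not by this code.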

What are the scope and constraints?

The model is optimized for web browsers. Google states it is not yet optimized for desktop OS-level control; mobile scenarios work by swapping in custom actions under the same loop. A built-in safety monitor can block prohibited actions or require user confirmation before "high-stakes" operations (payments, sending messages, accessing sensitive information).
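On the client side, honoring a per-step safety decision might look like the gate below. This is a hedged sketch: `SafetyDecision`, `gate_action`, and `ask_on_console` are hypothetical names, and we assume each proposed action arrives already tagged by the built-in safety monitor with one of three outcomes (allow, require confirmation, block). The real API's field names and values may differ.

```python
from enum import Enum
from typing import Callable


class SafetyDecision(Enum):
    """Hypothetical outcomes attached to a proposed action by the safety monitor."""
    ALLOW = "allow"
    REQUIRE_CONFIRMATION = "require_confirmation"
    BLOCK = "block"


def gate_action(
    action_name: str,
    decision: SafetyDecision,
    confirm: Callable[[str], bool],
) -> bool:
    """Return True if the client may execute the action.

    BLOCK is never executed; REQUIRE_CONFIRMATION runs only if a human
    approves through the confirm callback; ALLOW runs directly.
    """
    if decision is SafetyDecision.BLOCK:
        return False
    if decision is SafetyDecision.REQUIRE_CONFIRMATION:
        return confirm(action_name)
    return True


def ask_on_console(action_name: str) -> bool:
    """One possible confirm callback: a yes/no prompt on the terminal."""
    answer = input(f"Allow high-stakes action '{action_name}'? [y/N] ")
    return answer.strip().lower() == "y"
```

Keeping the human check in a callback lets the same gate back a terminal prompt during development and a UI dialog in production; a blocked action never reaches the executor regardless of what the callback would return.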

Measured performance

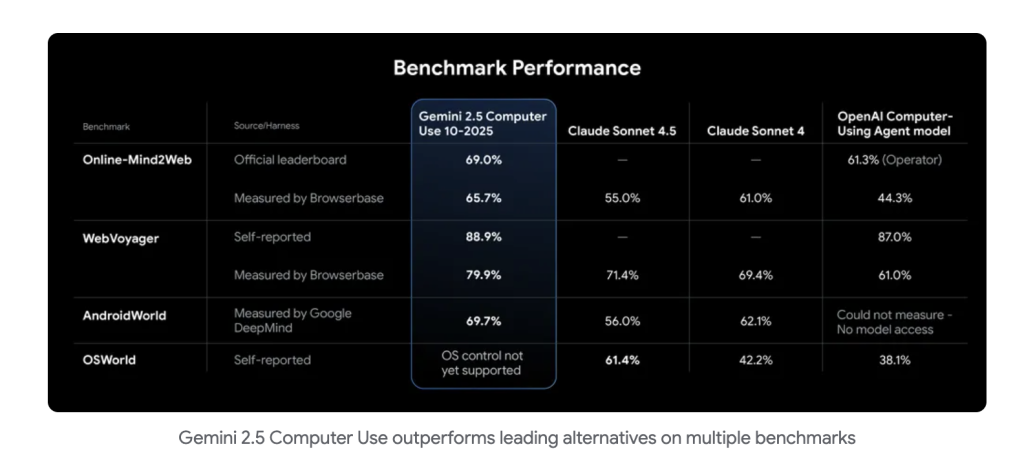

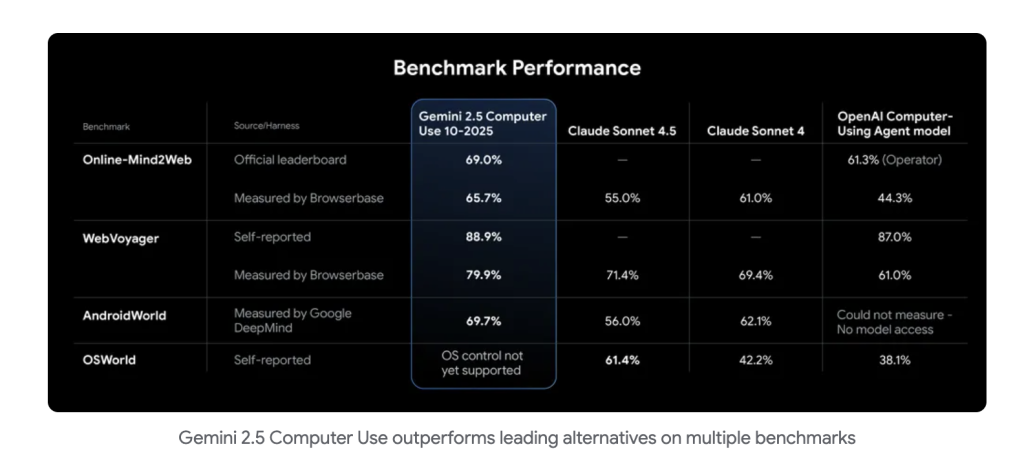

- Online-Mind2Web (official): 69.0% pass@1 (majority-vote human judgments), validated by the benchmark organizers.

- Browserbase matched harness: leads competing computer-use APIs on both accuracy and latency across Online-Mind2Web and WebVoyager under identical time, step, and environment constraints. Google's model card lists 65.7% (OM2W) and 79.9% (WebVoyager) in Browserbase runs.

- Latency/quality trade-off (Google figure): ~70%+ accuracy at ~225 s median latency on the Browserbase OM2W harness. Treat this as Google-reported, based on human evaluation.

- AndroidWorld (mobile generalization): 69.7%, measured by Google; achieved through the same API loop with custom mobile actions and the browser actions excluded.
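To make the headline metric concrete, here is one way pass@1 under majority-vote human judgment can be computed: each task gets a single attempt, several judges mark it success or failure, and the task counts as solved only if a strict majority says success. This is an illustrative sketch under stated assumptions; the number of judges, the tie handling (a tie counts as failure here), and the toy data are invented, not Online-Mind2Web's actual protocol or results.

```python
from typing import List


def majority_success(votes: List[bool]) -> bool:
    """A task counts as solved only if more than half of the judges mark it successful.

    Assumption: ties (possible with an even number of judges) count as failure.
    """
    return sum(votes) * 2 > len(votes)


def pass_at_1(judgments: List[List[bool]]) -> float:
    """Fraction of tasks whose single attempt is judged successful by majority vote."""
    if not judgments:
        raise ValueError("no tasks to score")
    solved = sum(majority_success(votes) for votes in judgments)
    return solved / len(judgments)


# Toy data, invented for illustration: 4 tasks, 3 judges each.
toy = [
    [True, True, False],   # 2 of 3 say success -> solved
    [False, False, True],  # 1 of 3 -> not solved
    [True, True, True],    # 3 of 3 -> solved
    [False, True, False],  # 1 of 3 -> not solved
]
print(pass_at_1(toy))  # 0.5
```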

Early manufacturing indicators

- Automated UI test repair: Google's payments platform team reports that the model repairs >60% of previously failing automated UI test executions. This figure is attributed to public reporting rather than the core blog post.

- Operational speed: Poke.com (an early external tester) reports workflows often ~50% faster than with its next-best alternative.

Gemini 2.5 Computer Use is in public preview through Google AI Studio and Vertex AI; it exposes a constrained API with 13 documented UI actions and requires a client-side executor. Google's materials and the model card report state-of-the-art results on web and mobile control benchmarks, and Browserbase's matched harness shows ~65.7% pass@1 on Online-Mind2Web with leading latency under identical constraints. The scope is browser-first, with per-step safety and confirmation. These data points justify a measured evaluation in UI testing and web operations.

Check out the GitHub Page and Technical details.