Organizations, including the U.S. military, are increasingly adopting cloud deployments for their flexibility and cost savings. One aspect of such deployments is the shared security model promulgated by NSA, which describes many of the security services that cloud service providers (CSPs) support and provides for cooperation on security issues. This model also leaves security responsibilities with the organizations contracting for service. These responsibilities include ensuring the hosted application is accomplishing its intended purpose for the authorized set of users.

Cloud flow logs, as identified by network defenders, are a valuable source of data to support this security responsibility. If expected events (indicated by transfer of data to and from the cloud) happen, these logs help identify which external endpoints receive service, the extent of the service, and whether there are users who overuse cloud resources.

The SEI has a long history of support for flow log analysis, including its early 2025 releases (for Azure or AWS) of open-source scripts to facilitate cloud flow log analysis. This blog post summarizes these efforts and explores challenges associated with correlating events across multiple CSPs.

Collecting Cloud Flow Logs

A cloud flow log is a collection of records that contain summaries of network traffic to and from endpoints in the cloud. Hosts in the cloud are specifically configured to produce and consume packets of data across the internet. This is unlike on-premises flow generation, which is done for all hosts on a given network based on sensors. Hosts (virtual private clouds or network security groups) or subnets (VNets) in the cloud may generate these flow records. While not necessarily intended for long-term retention for assessing security, these logs cover a history of cloud activity without respect to malware or alert signatures or any specific network events. This history provides context for detected events and profiles of expected, anomalous, or malicious activity. This context supports more reliable interpretation of alerts and network reports, which ultimately makes organizations more secure.

Ongoing collection also allows for identification of three types of traffic observations:

- Events: isolated behaviors with security implications, including benign (assuring that something is happening that should happen) and malicious (identifying that something is happening that compromises security)

- Patterns: collections of events that may constitute evidence of a defensive measure or an aggressive action. Generally, patterns are collections of more than one event and provide context for evaluating actions.

- Trends: sequences of events that cumulatively identify shifts in network behavior (again, cumulatively benign or cumulatively malicious)

Approaches to Analyzing Cloud Flow Logs

Cloud service providers offer a variety of collection options and record contents. For examples, see Table 1, which is discussed below. The collection options include the interval over which the records aggregate network traffic (e.g., 1-minute or 5-minute intervals) and the sampling employed in the aggregation (e.g., all packets or a sample of one packet from every ten). These differences can complicate comparison or integration across CSPs. Assumptions made by CSPs, such as assumed traffic direction, may also complicate analysis of the network traffic. If the analysis process does not address these differences, fusion of data from different clouds becomes difficult and the uncertainty of the results increases. While analysis of cloud flow logs shares all the challenges of analyzing other network logs, the handling of these differences presents additional challenges.
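To make the effect of differing aggregation intervals and sampling concrete, the following is a minimal sketch of how sampled byte counts might be scaled to a comparable per-second estimate. The `FlowSummary` type and its field names are hypothetical and do not correspond to any CSP's record schema; the scaling assumes uniform sampling, which is itself an approximation.

```python
from dataclasses import dataclass


@dataclass
class FlowSummary:
    """One aggregated flow record (hypothetical, simplified fields)."""
    bytes_seen: int        # bytes counted from the sampled packets
    interval_seconds: int  # length of the aggregation interval
    sample_one_in: int     # 1 means every packet, 10 means one packet in ten


def estimated_bytes_per_second(rec: FlowSummary) -> float:
    """Scale sampled volume up to an estimated total, then express it per second."""
    if rec.sample_one_in < 1:
        raise ValueError("sample_one_in must be at least 1")
    if rec.interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    estimated_total = rec.bytes_seen * rec.sample_one_in
    return estimated_total / rec.interval_seconds


# Two records that look very different but describe the same estimated rate.
c1 = FlowSummary(bytes_seen=6000, interval_seconds=60, sample_one_in=1)
c2 = FlowSummary(bytes_seen=3000, interval_seconds=300, sample_one_in=10)
print(estimated_bytes_per_second(c1), estimated_bytes_per_second(c2))
```

The estimate for the sampled record carries more uncertainty than the unsampled one, so a comparison built this way should be reported with that caveat.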

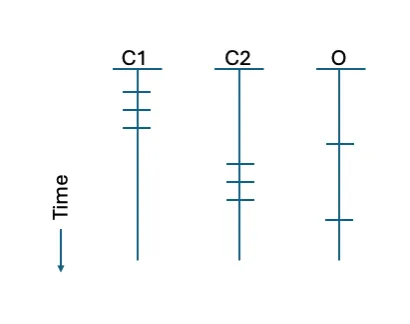

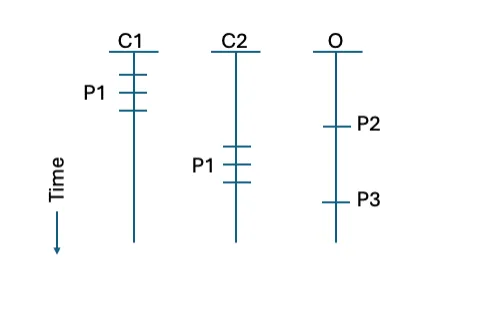

Figure 1: Example set of timelines for an infrastructure implemented across two CSPs (C1 and C2) and an on-premises host (O).

For example, consider Figure 1 above, which shows timelines for events across an infrastructure that is implemented across two CSPs and an on-premises hosting provider. An analyst wants to evaluate the interactions, all of which are contacts from the same external host, as shown in Figure 1 by the small horizontal lines. Looking at each event or timeline individually, the contact appears non-threatening. By evaluating the interactions in aggregate, the analyst obtains a broader view of the activity.

There are several possible ways of addressing differences between CSPs: present the results separately, use separate analyses and caveat the results, or interpolate the differences to restructure the data for a common analysis. Given the range of choices available, organizations seeking to improve their access to and use of cloud flow logs may architect an analytic infrastructure to suit their needs. In any of these approaches, the overall goal will be to improve awareness of cloud activity and to apply that awareness to improve the security of the organization’s information.

The paragraphs below consider several approaches.

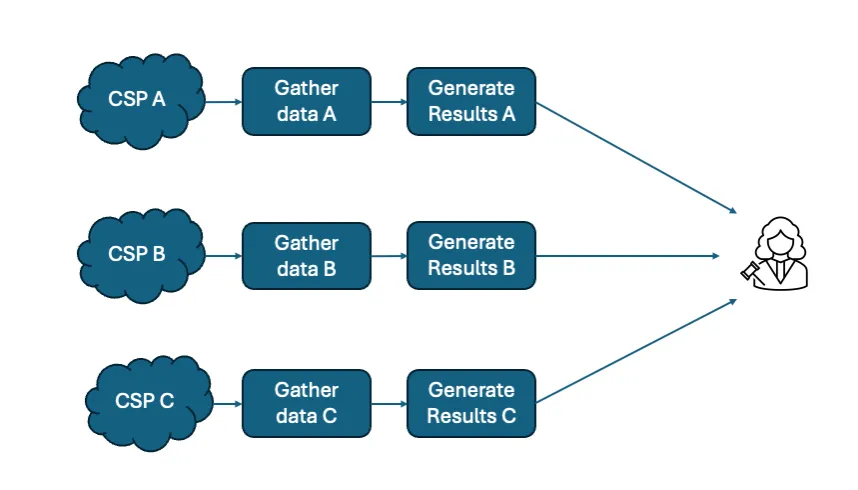

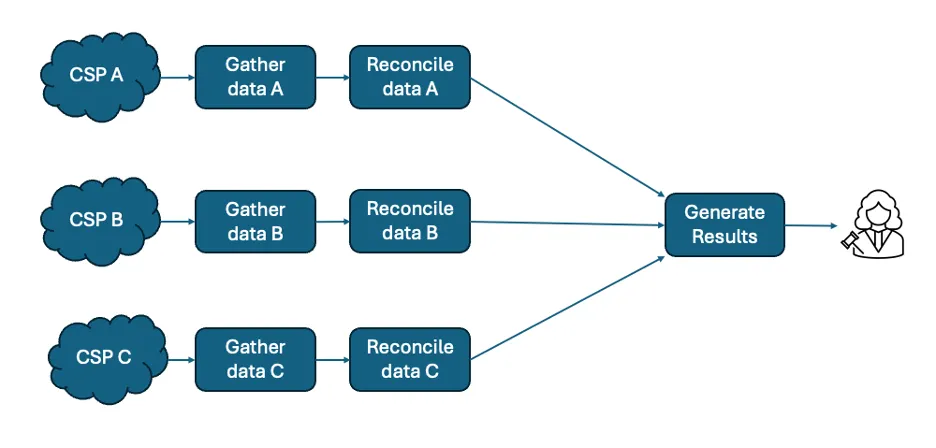

Figure 2: A separate results analysis approach

The separate results approach shown in Figure 2 above uses each cloud’s data to generate a set of results using data structures and analysis methods appropriate to that cloud. Since separate providers produce the logs, the context of each provider’s logs will differ.

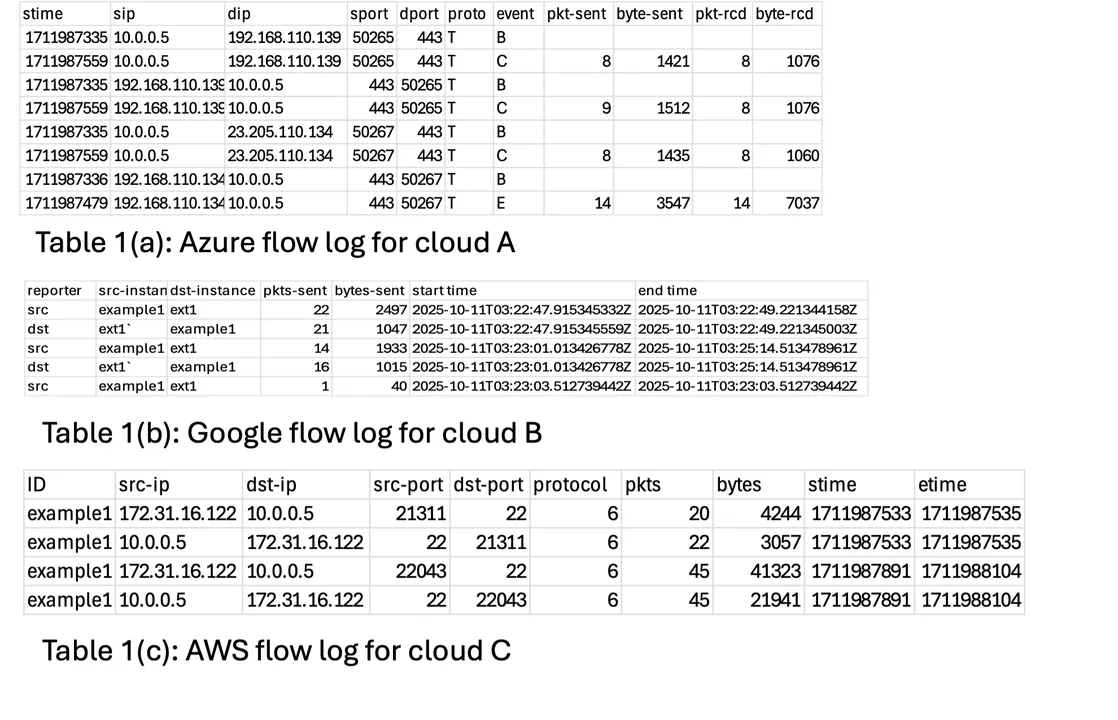

Table 1 below shows artificially generated entries with the content of logs from three cloud providers, simplified into tables and with selected record fields for clarity of display. Azure and Google logs are typically in JSON format, with Azure using a deeply nested structure and Google a relatively flat structure. AWS logs are typically in formatted text. The logs differ in that AWS (Table 1c) and Google (Table 1b) depict activity as samples over time, while Azure (Table 1a) describes activity with begin, continue, and end events at identified times.

In the example data in Table 1, the Azure and AWS logs use IP addresses to refer to instances, but the Google log uses instance identifier strings. The separate results approach would leave these differences in place and not try to reconcile them.

It is apparent that the fields of the flow records differ between providers, and the formats of the individual fields also differ, such as for time values. There is no clock synchronization across separate providers.

The separate results approach allows for the most accommodation to differences between clouds, without considering the comparability of results from other clouds. The separate results approach aligns with the specific CSP environments, but at the potential cost of obscuring common actors or techniques that affect multi-cloud hosting employed by an organization.

Table 1: Example cloud flow logs

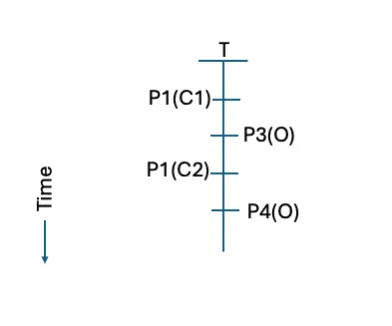

Figure 3: An example of the separate results analysis with four events (P1-P4)

In Figure 3, the analyst examines each CSP and the on-premises data separately. This produces a series of four events (one in each of the cloud-hosted functionalities and two in the on-premises hosted functionality). These events can be ordered, but the differing nature of the cloud data collection prevents both precise time relationships and use of the details recorded in the flow record.

This approach does allow a broader view than examining each event in isolation, but not the level of detail often desired by the analyst. However, for analysts primarily focused on a single cloud implementation, the separate results approach may be preferred for simplicity.

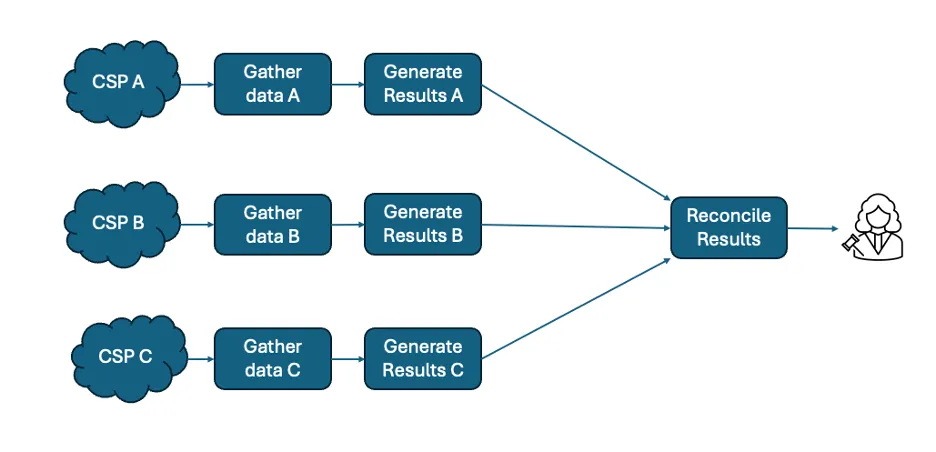

Figure 4: A separate analysis approach that includes result reconciliation

An alternate strategy is the separate analysis approach, which applies methods targeted to each CSP’s unique features but presents results with format and content that allow a reconciliation process to produce a common set of results, as shown in Figure 4. For example, each line of results may normalize IP addresses to a common format by using enrichment information, such as registration or DNS resolution. Each process may reconcile timestamps by offsetting for clock skew and using a shared format. This approach allows for a common awareness across multi-cloud hosting, but potential costs include sacrifice of the additional information that a single CSP may provide and loss of precision in timing and volume information to accommodate differences in collection processes between clouds. The SEI has released an open-source set of scripts implementing this approach for AWS and for Azure.
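As an illustration of the timestamp reconciliation step only, here is a minimal sketch that converts two common timestamp representations to a shared ISO 8601 UTC string after subtracting an estimated clock skew. The function names and the idea of a single per-source skew estimate are assumptions made for this example; they are not taken from the SEI scripts.

```python
from datetime import datetime, timedelta, timezone


def epoch_to_utc_iso(epoch_seconds: float, skew_seconds: float = 0.0) -> str:
    """Convert an epoch timestamp to ISO 8601 UTC, removing an estimated skew."""
    moment = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return (moment - timedelta(seconds=skew_seconds)).isoformat()


def iso_to_utc_iso(text: str, skew_seconds: float = 0.0) -> str:
    """Normalize an ISO 8601 string with an explicit offset to UTC, removing skew."""
    parsed = datetime.fromisoformat(text)
    if parsed.tzinfo is None:
        # Refuse to guess the zone of a naive timestamp.
        raise ValueError("timestamp needs an explicit UTC offset")
    corrected = parsed.astimezone(timezone.utc) - timedelta(seconds=skew_seconds)
    return corrected.isoformat()


# Hypothetical skew estimate of 2 seconds for the first source.
print(epoch_to_utc_iso(60, skew_seconds=2))           # 1970-01-01T00:00:58+00:00
print(iso_to_utc_iso("1970-01-01T01:01:00+01:00"))    # 1970-01-01T00:01:00+00:00
```

Estimating the skew itself is the hard part and is outside this sketch; a fixed offset per source only removes a constant bias and leaves the residual uncertainty the text describes.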

Figure 5: An example of the separate analysis approach that leads to pattern identification

In Figure 5 above, we see that applying the separate analysis approach allows identification that the two events on the CSPs are both instances of the same pattern. Looking at the data in Table 1, the query-response structure of the interactions involves analyzing port and protocol pairing in Table 1a but source and destination matching in Table 1b. This requires separate analysis logic to reach a common understanding. The similar behavior, together with similar packet and byte sizes in each of the two clouds, supports identification of the activity with a common pattern. This identification allows application of the features of the pattern in the analysis, although clocks in the separate clouds are not synchronized, which means the event ordering may be inferred but not the time interval between events. However, for relatively low-velocity collection across multiple clouds, the separate analysis approach may be preferred for the level of detail it supports.
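One way the query-response matching might look, once records from a cloud have been reduced to endpoint tuples, is sketched below. It builds a direction-independent key so that a request and its reply fall into the same group. The tuple layout is a hypothetical simplification; logs that identify instances by name rather than by address and port would need their own matching logic before reaching this step.

```python
from collections import defaultdict


def conversation_key(src, src_port, dst, dst_port, protocol):
    """Return a key that is identical for both directions of a conversation."""
    ends = sorted([(src, src_port), (dst, dst_port)])
    return (protocol, ends[0], ends[1])


def two_way_conversations(flows):
    """Return keys of conversations that were observed in both directions.

    flows: iterable of (src, src_port, dst, dst_port, protocol) tuples.
    """
    initiators = defaultdict(set)
    for src, src_port, dst, dst_port, protocol in flows:
        key = conversation_key(src, src_port, dst, dst_port, protocol)
        initiators[key].add((src, src_port))
    # Two distinct sending endpoints means traffic flowed both ways.
    return {key for key, seen in initiators.items() if len(seen) == 2}


flows = [
    ("10.0.0.5", 49152, "203.0.113.7", 53, 17),    # query
    ("203.0.113.7", 53, "10.0.0.5", 49152, 17),    # response
    ("10.0.0.9", 40000, "198.51.100.2", 443, 6),   # one direction only
]
print(two_way_conversations(flows))
```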

Figure 6: An example of the common analysis approach

A third strategy is the common analysis approach, as shown in Figure 6 above. This works by translating each set of cloud logs into a format and content that is achievable from each CSP’s flow logs. This approach allows more code-efficient analytical work processes, since only a single analysis script is needed to examine all of the logs in the common format, plus the transformation scripts from each CSP’s format to the common format. There is a potential for loss of certain fields from each CSP’s format, especially those that have no common format equivalent. In addition, collection into a single location from multiple clouds will likely involve data-transfer costs to the organization. Organizations will need to define and apply appropriate access restrictions for the logs in common format, based on their information security policies.
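The translation step can be sketched as one small converter per source feeding a single shared record type. In the sketch below, `CommonFlow` and both converters are hypothetical. The text converter assumes a 14-field, space-separated layout modeled on the default AWS VPC flow log record; the dictionary keys in the second converter are invented for illustration and are not any provider's schema. Both should be checked against the actual log configuration in use.

```python
from dataclasses import dataclass


@dataclass
class CommonFlow:
    """Hypothetical common record holding only fields every source can supply."""
    source: str
    src: str
    dst: str
    src_port: int
    dst_port: int
    protocol: int
    packets: int
    byte_count: int
    start_epoch: int
    end_epoch: int


def from_text_line(line: str) -> CommonFlow:
    """Convert one space-separated text record (assumed 14-field layout)."""
    parts = line.split()
    if len(parts) != 14:
        raise ValueError("expected 14 space-separated fields")
    # Fields without a common equivalent (action, status, ...) are dropped here.
    # Records with placeholder values such as "-" would need separate handling.
    (_version, _account, _interface, src, dst, src_port, dst_port,
     protocol, packets, byte_count, start, end, _action, _status) = parts
    return CommonFlow("text", src, dst, int(src_port), int(dst_port),
                      int(protocol), int(packets), int(byte_count),
                      int(start), int(end))


def from_flat_record(entry: dict) -> CommonFlow:
    """Convert one flat dictionary record (hypothetical key names)."""
    return CommonFlow("json", entry["src"], entry["dst"],
                      int(entry["src_port"]), int(entry["dst_port"]),
                      int(entry["protocol"]), int(entry["packets"]),
                      int(entry["bytes"]), int(entry["start"]), int(entry["end"]))


line = ("2 123456789010 eni-0abc 10.0.0.5 203.0.113.7 49152 443 6 "
        "10 840 1700000000 1700000060 ACCEPT OK")
print(from_text_line(line))
```

The dropped `_action` and `_status` values show the field loss the text mentions: anything without a common-format equivalent disappears unless the common record is widened to carry it.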

Figure 7: A common timeline from a common analysis

Figure 7 continues the example by applying the common analysis approach to resolve differences in flow aggregation and interpolate activity into a common timeline. One possible interpolation would be to average the volume information into a common time unit, then align time units between sources (assuming the sources have reasonably aligned clocks, even if not fully synchronized). Converting the features of the flow records into a common format (e.g., JSON, CSV, etc.), standardizing the order of features, and resolving any data structure issues will also facilitate the common analysis. Once the records are aligned and converted, the analyst may either bring them into a common repository or apply the analysis separately in source-specific repositories and then aggregate the results into a common timeline.
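The averaging interpolation described above might be sketched as follows: each record's bytes are spread evenly over its own interval, and the pieces are summed into fixed-width bins shared by all sources. The record layout `(start_epoch, end_epoch, byte_count)` and the 60-second default bin are assumptions for illustration, and the even spread is itself an approximation that hides bursts within an interval.

```python
from collections import defaultdict


def spread_into_bins(records, bin_seconds=60):
    """Spread each record's bytes evenly over its interval and sum per bin.

    records: iterable of (start_epoch, end_epoch, byte_count), end exclusive.
    Returns {bin_start_epoch: estimated_bytes}.
    """
    bins = defaultdict(float)
    for start, end, byte_count in records:
        duration = end - start
        if duration <= 0:
            raise ValueError("record must have positive duration")
        rate = byte_count / duration  # average bytes per second in this record
        t = start
        while t < end:
            bin_start = (t // bin_seconds) * bin_seconds
            segment_end = min(end, bin_start + bin_seconds)
            bins[bin_start] += rate * (segment_end - t)
            t = segment_end
    return dict(bins)


# One source reports 1-minute records, the other a single 5-minute record.
cloud_one = [(0, 60, 600), (60, 120, 1200)]
cloud_two = [(0, 300, 3000)]
timeline = spread_into_bins(cloud_one + cloud_two)
print(sorted(timeline.items()))
```

Applying the function per source and merging afterwards would give the same totals, which corresponds to the choice between a common repository and source-specific repositories.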

This aggregate view offers the opportunity for a comprehensive view across data sources, but at the cost of additional processing and imprecision due to the alignment process. For a more abstract view across multiple clouds, and to ensure a common view of the results, the common analysis approach may be preferred.

Future Work in Cloud Flow Analysis at the SEI

The work reported in this blog post is exploratory and at the proof-of-concept stage. Future efforts will apply these methods in production and at a practical scale. As such, further issues with infrastructure and with the work reported here will arise and be addressed.

This post has outlined three approaches for analysis of cloud flow log entries. Over time, further approaches may emerge and be applied in this analysis, including approaches more suited to streaming analysis rather than retrospective analysis.

Cloud flow logs are not the only operations-focused cloud data sources. CSP-specific sources, such as CloudTrail and S3 logs, may have entries that correlate with cloud flow logs. Since these logs may provide more details on the applications producing the traffic, they may provide additional context to improve security. To facilitate this correlation, identifying the baseline of activity in these logs and comparing it with the baseline in cloud flow logs will address issues of scale.

Security researchers have described malicious activity in terms of Tactics, Techniques, and Procedures (TTPs). Several catalogs of such TTPs exist, and analysts could map activity in cloud flow logs (and other data sources) to identify consistencies with TTPs. This may lead to improved security detection.

SEI researchers are working to develop the appropriate structure for a multi-cloud repository of flow log data. Given the cost model common among CSPs, such a repository will likely need to be a distributed structure, and that will involve complications in the query and response infrastructure.

Cloud data derived from multiple sources can be expensive to store due to the velocity of the data. Policies need to balance cost against the value of the data. This can be complex, since some analyses may require longer data retention periods. There have been network attacks (such as the Sunburst attack on SolarWinds) that have exploited log retention times to conceal their activity. Some cloud data sources appear to have value in reporting transient conditions of relevance to security. For example, some service logs report inputs that fail to follow expected formatting. This may be due to misconfigurations, transmission errors, or a form of vulnerability probing. Such log entries are unlikely to be of lasting value in assessing security, since they report detected (and likely blocked) inputs. Other cloud data sources are likely to be of more lasting value. An example would be entries mapping to TTPs as described earlier. A process is needed to evaluate which cloud data sources warrant long-term retention and which should only feed streaming anomaly detection, without long-term storage of entries.