The hunt is on to reduce AI’s overdependence on power-hungry and costly GPUs, making AI more affordable, accessible, and sustainable.

To the novice, it may seem as if artificial intelligence (AI) is a magical genie that gives you everything you ask for with the snap of a finger, but techies know that a lot of muscle power (read graphics processing units, or GPUs) goes into making it all work.

The tech infrastructure required for training and deploying AI systems is so vast that it may not even be sustainable in the future.

Environmentalists are already very concerned about the widespread use of GPUs for AI, as it guzzles power, increases carbon dioxide emissions, and uses alarming amounts of water to cool data centres.

Also, today’s volatile geopolitical landscape is putting GPUs out of reach of many countries, thereby causing an imbalance in the concentration of tech power.

Today, when we refer to GPUs in the AI context, we invariably mean Nvidia’s GPUs, such as the A100, H100, and GB200. Tensor core accelerators that speed up matrix operations, and an effective parallel computing platform and programming model called CUDA (Compute Unified Device Architecture), have made Nvidia GPUs the prime choice for training large-scale AI models.

The latest from its stable is the GB200 series of superchips, which bring together Blackwell GPUs and a Grace CPU on a single superchip, enabling massive generative AI (GenAI) workloads like training and real-time inference of trillion-parameter large language models (LLMs). Market research estimates that Nvidia holds more than 90% of the discrete GPU market today.

Over-dependence on Nvidia GPUs

Despite Nvidia’s GPUs being technically superior, industry and academia are working hard today to reduce the dependence of AI models on these powerful GPUs. One obvious reason is the export restrictions imposed by the US government. There is limited or no supply of the H100, A100, and GB200 to many countries, and most others must make do with less capable cousins like the H20, or the GTX and RTX series GPUs.

There is also the cost aspect. These are expensive GPUs, and not everyone can afford them! From OpenAI and Google to xAI and Anthropic, all the AI majors are using thousands of Nvidia GPUs and waiting in line for more, so why would the price ever come down? Then, there is the question of supply vis-à-vis the burgeoning demand.

Finally, there is the big issue of sustainability. Large data centres populated with powerful GPUs are a major environmental concern: the power consumed, the emissions, the e-waste generated, and of course the resources used in their manufacture.

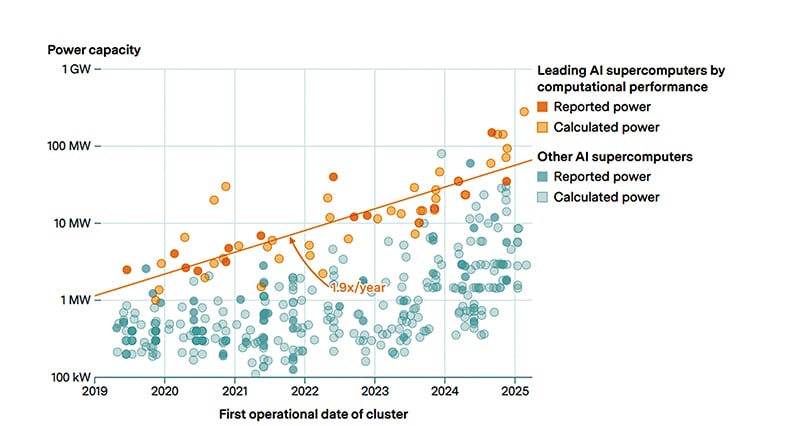

Picture this. According to an X post by Elon Musk in July this year, xAI’s flagship supercomputer Colossus 1 is now live, training the company’s Grok AI model. It runs on 230,000 GPUs, including 30,000 GB200s. Coming very soon is Colossus 2, with 550,000 GPUs, mainly GB200s and GB300s. And the roadmap goes up to 1 million GPUs, which implies an investment running into tens of billions of dollars, if not more! According to a report by Epoch AI, Colossus consumes around 280 megawatts of power, which some sustainability reports claim is enough to power more than a quarter million households! Epoch AI’s chart also shows that the power requirements of leading AI supercomputers are doubling every 13 months.
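To put these power figures in perspective, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the 13-month doubling holds steadily and starts from the roughly 280 MW reported for Colossus; the constant and function names are hypothetical, and the projection is simple arithmetic, not a forecast.

```python
"""Back-of-the-envelope arithmetic on the Colossus power figures.

Illustrative only: assumes a steady 13-month doubling period, the ~280 MW
Colossus figure and the 'quarter million households' comparison as reported.
"""

DOUBLING_MONTHS = 13   # Epoch AI's reported doubling period for power needs
COLOSSUS_MW = 280.0    # reported Colossus power draw, in megawatts
HOUSEHOLDS = 250_000   # "more than a quarter million households"


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """Multiplier after `months` if power doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


def projected_mw(start_mw: float, months: float) -> float:
    """Power draw after `months`, assuming the doubling trend simply continues."""
    return start_mw * growth_factor(months)


def kw_per_household(total_mw: float, households: int) -> float:
    """Average power per household implied by the comparison, in kilowatts."""
    return total_mw * 1_000 / households


if __name__ == "__main__":
    print(f"Growth per year: {growth_factor(12):.2f}x")
    print(f"280 MW after three years: {projected_mw(COLOSSUS_MW, 36):,.0f} MW")
    print(f"Implied average draw per household: "
          f"{kw_per_household(COLOSSUS_MW, HOUSEHOLDS):.2f} kW")
```

A 13-month doubling works out to roughly a 1.9x increase per year. If the trend simply continued, a 280 MW system would grow to about 1.9 GW within three years, and the household comparison implies an average draw of about 1.12 kW per home.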

Let us take a quick look at OpenAI’s ChatGPT lest it feel left out. According to reports on the internet, training GPT-3 took approximately 1,287 megawatt-hours of electricity. The daily operation of ChatGPT is estimated to consume around 39.98 million kilowatt-hours. Research indicates that an average interaction with GPT-3, such as a short conversation with the user or drafting a 100-word email, may use around 500 ml of water to cool the data centre.
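The two ChatGPT energy figures are easier to compare in the same unit. The Python sketch below simply takes the reported estimates at face value; the constant and function names are hypothetical, and the results are only as reliable as the estimates behind them.

```python
"""Compare the reported ChatGPT energy figures.

Illustrative only: takes the article's estimates (1,287 MWh to train GPT-3,
about 39.98 million kWh per day to run ChatGPT) at face value.
"""

GPT3_TRAINING_MWH = 1_287.0   # reported energy to train GPT-3, in MWh
CHATGPT_DAILY_KWH = 39.98e6   # reported daily ChatGPT consumption, in kWh


def kwh_to_mwh(kwh: float) -> float:
    """Convert kilowatt-hours to megawatt-hours."""
    return kwh / 1_000


def average_power_mw(daily_kwh: float) -> float:
    """Average continuous draw (MW) implied by a daily energy figure."""
    return kwh_to_mwh(daily_kwh) / 24  # MWh per day divided by 24 h gives MW


def daily_to_training_ratio(daily_kwh: float, training_mwh: float) -> float:
    """How many training runs' worth of energy one day of operation uses."""
    return kwh_to_mwh(daily_kwh) / training_mwh


def minutes_to_match_training(daily_kwh: float, training_mwh: float) -> float:
    """Minutes of operation that consume as much energy as one training run."""
    return training_mwh / kwh_to_mwh(daily_kwh) * 24 * 60


if __name__ == "__main__":
    print(f"Average draw: {average_power_mw(CHATGPT_DAILY_KWH):,.0f} MW")
    ratio = daily_to_training_ratio(CHATGPT_DAILY_KWH, GPT3_TRAINING_MWH)
    print(f"One day of operation = {ratio:.1f}x GPT-3 training energy")
    minutes = minutes_to_match_training(CHATGPT_DAILY_KWH, GPT3_TRAINING_MWH)
    print(f"Minutes of operation to match GPT-3 training: {minutes:.0f}")
```

Taken at face value, these estimates imply that ChatGPT uses about 31 times GPT-3’s training energy every day, matching it in roughly 46 minutes, at an average draw of about 1.7 GW. By these figures, running the service quickly outweighs the one-off cost of training.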

We are, arguably, heading towards an apocalypse, thanks to AI technology!

Taking the bull by its two horns

Now, there is a two-pronged problem that the AI industry worldwide is trying to solve. The first challenge is to reduce the dependence on Nvidia. Let us face reality: many do not have access to its GPUs, some cannot afford them, and others do not want to wait in line! In response, some companies are working on developing customised GPUs for their specific needs. And some countries, including India, are encouraging the development of indigenous GPUs.

The second challenge is to find ways to reduce the amount of computing power required by AI models, either by working without GPUs, like Kompact AI, or by reducing the number of GPUs used, like DeepSeek. This will have a positive economic and environmental impact.

Now, let us take a top-level look at a few ongoing efforts from around the world that are trying to tackle one or both of the above challenges.

Tech majors developing in-house AI chips
