When it comes to building better AI, the standard approach is to make models bigger. But this approach has a major problem: it becomes incredibly expensive.

However, DeepSeek-V3.2-Exp took a different path…

Instead of just adding more power, they focused on working smarter. The result is a new kind of model that delivers top-tier performance for a fraction of the cost. By introducing their “sparse attention” mechanism, DeepSeek isn’t just tweaking the engine; it’s redesigning the fuel injection system for unprecedented efficiency.

Let’s break down exactly how they did it.

Highlights of the Update

- Adding Sparse Attention: The only architectural difference between the new V3.2 model and its predecessor (V3.1) is the introduction of DeepSeek Sparse Attention (DSA). This shows they focused all their effort on solving the efficiency problem.

- A “Lightning Indexer”: DSA works by using a fast, lightweight component called a lightning indexer. This indexer quickly scans the text and picks out only the most important words for the model to focus on, ignoring the rest.

- A Huge Complexity Reduction: DSA changes the core computational problem from a quadratic one, O(L²), to a much simpler, linear one, O(Lk), where k is the fixed number of tokens selected per query. This is the mathematical secret behind the large speed and cost improvements.

- Built for Real Hardware: The success of DSA depends on highly optimized software designed to run well on modern AI chips (like H800 GPUs). This tight integration between the smart algorithm and the hardware is what delivers the final, dramatic gains.

Read about the previous update here: Deepseek-V3.1-Terminus!

DeepSeek Sparse Attention (DSA)

At the heart of every LLM is the “attention” mechanism: the system that determines how important each word in a sentence is to every other word.

The problem?

Traditional “dense” attention is wildly inefficient. Its computational cost scales quadratically (O(L²)), meaning that doubling the text length quadruples the computation and cost.
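To make the quadratic scaling concrete, here is a minimal sketch that counts the query-key pairs scored by causal dense attention. The helper name `dense_pair_count` is illustrative and not taken from DeepSeek's code; it assumes each token attends to itself and to every preceding token.

```python
def dense_pair_count(seq_len: int) -> int:
    """Number of (query, key) pairs scored by causal dense attention.

    Assumption: token i attends to tokens 0..i, so the total is
    1 + 2 + ... + seq_len = seq_len * (seq_len + 1) / 2, which grows as O(L^2).
    """
    return seq_len * (seq_len + 1) // 2


if __name__ == "__main__":
    for length in (1_000, 2_000, 4_000):
        print(length, dense_pair_count(length))
    # Doubling the length roughly quadruples the number of pairs.
    print(dense_pair_count(2_000) / dense_pair_count(1_000))
```

The ratio printed on the last line approaches 4 as the sequence grows, which is the "doubling quadruples" behavior described above.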

DeepSeek Sparse Attention (DSA) is the solution to this bloat. It doesn’t look at everything; it smartly selects what to focus on. The system consists of two key components:

- The Lightning Indexer: This is a lightweight, high-speed scanner. For any given word (a “query token”), it rapidly scores all the preceding words to determine their relevance. Crucially, this indexer is designed for speed: it uses a small number of heads and can run in FP8 precision, making its computational footprint remarkably small.

- Fine-Grained Token Selection: Once the indexer has scored everything, DSA doesn’t just grab blocks of text. It performs a precise, “fine-grained” selection, plucking only the top-k most relevant tokens from across the entire document. The main attention mechanism then only processes this carefully chosen, sparse set.

The Result: DSA reduces the core attention complexity from O(L²) to O(Lk), where k is a fixed number of selected tokens. This is the mathematical foundation for the massive efficiency gains. While the lightning indexer itself still has O(L²) complexity, it’s so lightweight that the net effect is still a dramatic reduction in total computation.
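The two components above can be sketched in a few lines of NumPy. This is a toy, single-head illustration under stated assumptions, not DeepSeek's implementation: the indexer here is a plain dot product between small hypothetical projections (`index_q`, `index_k`), whereas the real indexer uses several heads and FP8, and the Python loop is written for clarity rather than speed.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = x - x.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def sparse_attention(q, k, v, index_q, index_k, top_k):
    """Toy causal attention over the top_k keys picked by an indexer.

    q, k, v:           (L, d) main attention projections.
    index_q, index_k:  (L, d_idx) small indexer projections (hypothetical).
    top_k:             number of tokens each query is allowed to attend to.
    """
    seq_len, dim = q.shape
    out = np.zeros_like(v)
    for t in range(seq_len):
        # 1) Indexer: cheap relevance score for every token up to position t.
        scores = index_k[: t + 1] @ index_q[t]
        # 2) Fine-grained selection: keep the top_k highest-scoring positions.
        n_keep = min(top_k, t + 1)
        selected = np.argpartition(scores, -n_keep)[-n_keep:]
        # 3) Main attention runs only on the selected keys and values.
        logits = (k[selected] @ q[t]) / np.sqrt(dim)
        out[t] = softmax(logits) @ v[selected]
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    L, d, d_idx = 16, 8, 4
    q, k, v = rng.normal(size=(3, L, d))
    index_q, index_k = rng.normal(size=(2, L, d_idx))
    sparse = sparse_attention(q, k, v, index_q, index_k, top_k=4)
    full = sparse_attention(q, k, v, index_q, index_k, top_k=L)
    print(sparse.shape, np.abs(sparse - full).max())
```

Step 3 touches at most `top_k` keys per query, which is where the O(Lk) term comes from; step 1 still scans every preceding token, matching the note that the indexer itself remains O(L²) but cheap. Setting `top_k` to the full sequence length recovers ordinary dense causal attention.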

The Training Pipeline: A Two-Stage Tune-Up

You can’t just slap a new attention mechanism onto a billion-parameter model and hope it works. DeepSeek employed a meticulous, two-stage training process to integrate DSA seamlessly.

- Stage 1: Continued Pre-Training (The Warm-Up)

  - Dense Warm-up (2.1B tokens): Starting from the V3.1-Terminus checkpoint, DeepSeek first “warmed up” the new lightning indexer. They kept the main model frozen and ran a short training stage where the indexer learned to predict the output of the full, dense attention mechanism. This aligned the new indexer with the model’s existing knowledge.

  - Sparse Training (943.7B tokens): This is where the real magic happened. After the warm-up, DeepSeek switched on the full sparse attention, selecting the top 2048 key-value tokens for each query. For the first time, the entire model was trained to operate with this new, selective vision, learning to rely on the sparse selections rather than the dense whole.

- Stage 2: Post-Training (The Finishing School)

  To ensure a fair comparison, DeepSeek used the exact same post-training pipeline as V3.1-Terminus. Holding the pipeline fixed means any performance differences can be attributed to DSA rather than to changes in training data.

  - Specialist Distillation: They created five powerhouse specialist models (for Math, Coding, Reasoning, Agentic Coding, and Agentic Search) using heavy-duty Reinforcement Learning. The knowledge from these specialists was then distilled into the final V3.2 model.

  - Mixed RL with GRPO: Instead of a multi-stage process, they used Group Relative Policy Optimization (GRPO) in a single, mixed stage. The reward function was carefully engineered to balance key trade-offs:

    - Length vs. Accuracy: Penalizing unnecessarily long answers.

    - Language Consistency vs. Accuracy: Ensuring responses remained coherent and human-like.

    - Rule-Based & Rubric-Based Rewards: Using automated checks for reasoning/agent tasks and tailored rubrics for general tasks.
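The core idea behind GRPO is that each sampled response is scored relative to the other responses drawn for the same prompt: its reward is normalized by the group's mean and standard deviation. The sketch below illustrates that step together with a length-versus-accuracy reward. The reward shape, the `length_weight` and `max_tokens` parameters, and both function names are hypothetical choices for illustration; the article does not specify DeepSeek's actual reward function.

```python
from statistics import mean, pstdev


def shaped_reward(correct: bool, num_tokens: int, max_tokens: int = 1000,
                  length_weight: float = 0.2) -> float:
    """Hypothetical reward: 1 for a correct answer, minus a small length penalty."""
    accuracy = 1.0 if correct else 0.0
    length_penalty = length_weight * min(num_tokens / max_tokens, 1.0)
    return accuracy - length_penalty


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Normalize rewards within one group of responses to the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled responses to one prompt: (is_correct, length_in_tokens).
    group = [(True, 200), (True, 900), (False, 300), (False, 1000)]
    rewards = [shaped_reward(ok, n) for ok, n in group]
    for (ok, n), adv in zip(group, group_relative_advantages(rewards)):
        print(f"correct={ok!s:5} tokens={n:4d} advantage={adv:+.2f}")
```

With this shaping, a short correct answer ends up with a higher advantage than a long correct one, which is the kind of pressure the length-versus-accuracy trade-off is meant to create.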

The Hardware Secret Sauce: Optimized Kernels

A brilliant algorithm is useless if it runs slowly on actual hardware. DeepSeek’s commitment to efficiency shines here with deeply optimized, open-source code.

The model leverages specialized kernels like FlashMLA, which are custom-built to run the complex MLA and DSA operations with extreme efficiency on modern Hopper GPUs (like the H800). These optimizations are publicly available in pull requests to repositories like DeepGEMM, FlashMLA, and tilelang, allowing the model to achieve near-theoretical peak memory bandwidth (up to 3000 GB/s) and compute performance. This hardware-aware design is what transforms the theoretical efficiency of DSA into tangible, real-world speed.

Performance & Cost – A New Balance

So, what’s the final outcome of this engineering marvel? The data reveals a clear and compelling story.

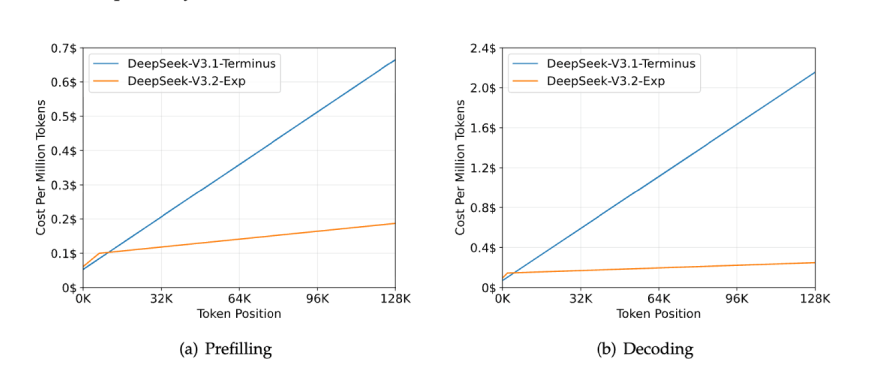

Cost Reduction

The most immediate impact is on the bottom line. DeepSeek announced a >50% reduction in API pricing. The technical benchmarks are even more striking:

- Inference Speed: 2–3x faster on long contexts.

- Memory Usage: 30–40% lower.

- Training Efficiency: 50% faster.

The real-world inference cost for decoding a 128K context window plummets to an estimated $0.25, compared to $2.20 for dense attention, making it nearly 9x cheaper.
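As a quick sanity check on these estimates, the ratio of the two figures quoted above works out to roughly 8.8x. The variable names below are illustrative; the dollar amounts are the ones given in the text.

```python
dense_cost_usd = 2.20   # estimated decoding cost at 128K context, dense attention
sparse_cost_usd = 0.25  # estimated decoding cost at 128K context, DSA

ratio = dense_cost_usd / sparse_cost_usd          # how many times cheaper
savings = 1 - sparse_cost_usd / dense_cost_usd    # fraction of cost removed

print(f"{ratio:.1f}x cheaper, {savings:.0%} lower cost")
```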

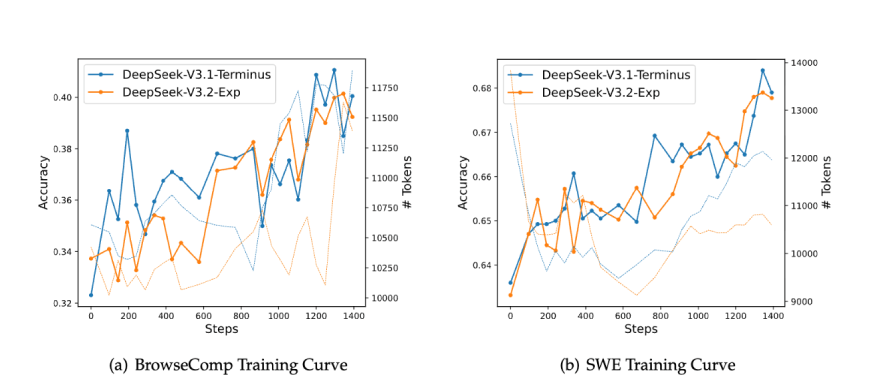

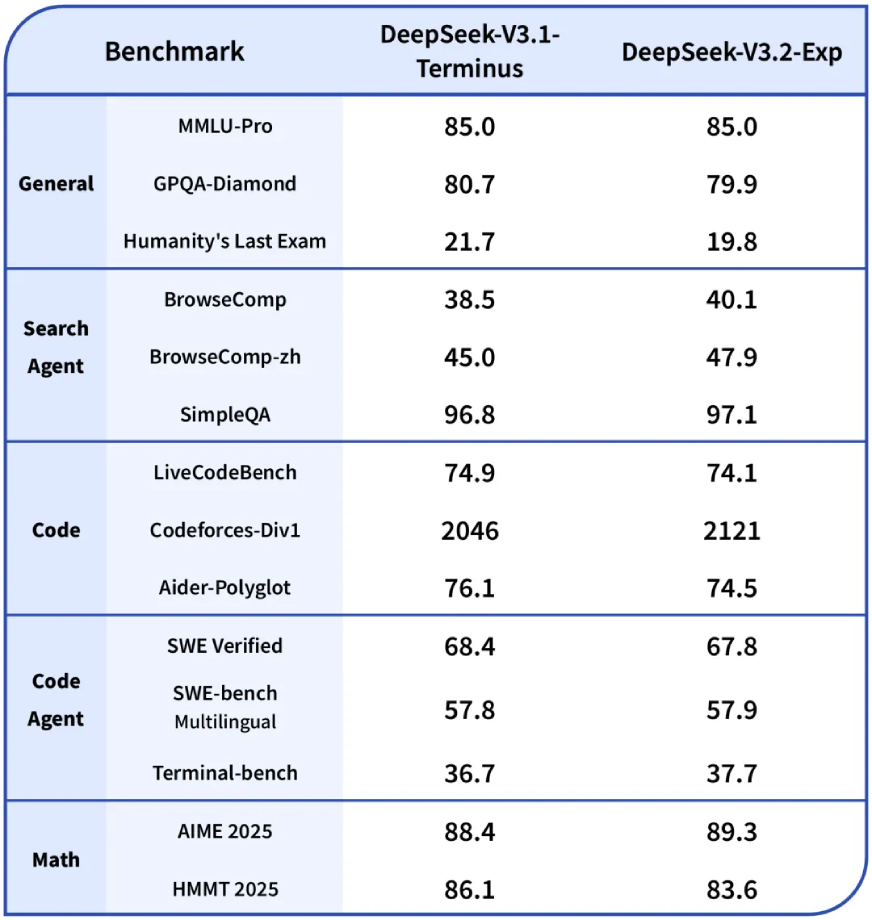

Better Performance

On aggregate, V3.2-Exp maintains performance parity with its predecessor. However, a closer look reveals a logical trade-off:

- The Wins: The model shows significant gains in coding (Codeforces) and agentic tasks (BrowseComp). This makes perfect sense: code and tool-use often contain redundant information, and DSA’s ability to filter noise is a direct advantage.

- The Trade-Offs: There are minor regressions in a few ultra-complex, abstract reasoning benchmarks (like GPQA Diamond and HMMT). The hypothesis is that these tasks rely on connecting very subtle, long-range dependencies that the current DSA mask might occasionally miss.

Deepseek-V3.1-Terminus vs DeepSeek-V3.2-Exp

Let’s Try the New DeepSeek-V3.2-Exp

The tasks here are the same ones we used in one of our previous articles on Deepseek-V3.1-Terminus. This will help in identifying how the new update is better.

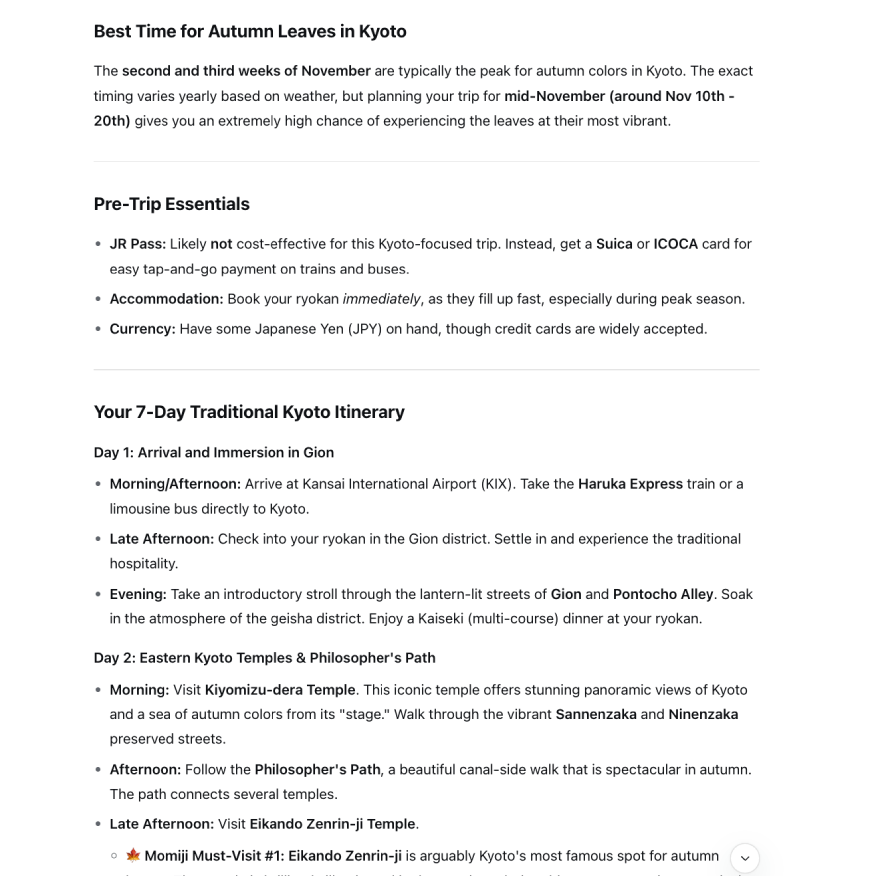

Task 1: Travel Plan

I need to plan a 7-day trip to Kyoto, Japan, for mid-November. The itinerary should focus on traditional culture, including temples, gardens, and tea ceremonies. Find the best time to see the autumn leaves, a list of three must-visit temples for ‘Momiji’ (autumn leaves), and a highly-rated traditional tea house with English-friendly services. Also, find a well-reviewed ryokan (traditional Japanese inn) in the Gion district. Organize all the information into a clear, day-by-day itinerary.

Output:

You can view the full output here.

Deepseek-V3.1-Terminus vs DeepSeek-V3.2-Exp Output Comparison

| Feature | DeepSeek-V3.1-Terminus | DeepSeek-V3.2-Exp | Winner |
|---|---|---|---|
| Structure & Organization | Single continuous paragraph | Well-structured with clear sections and bullet points | V3.2-Exp |
| Command Presentation | Commands embedded in running text | Dedicated code blocks with proper formatting | V3.2-Exp |
| Completeness & Depth | Basic explanation only | Includes practical examples and use cases | V3.2-Exp |
| Beginner Friendliness | Assumes prior knowledge | Explains concepts clearly for newcomers | V3.2-Exp |

Task 2: Coding Agent

I need a Python script that scrapes a public list of the top 100 films of all time from a website (you can choose a reliable source like IMDb, Rotten Tomatoes, or a well-known magazine’s list). The script should then save the film titles, release years, and a brief description for each movie into a JSON file. Include error handling for network issues or changes in the website’s structure. Can you generate the full script and explain each step of the process?

Output:

    from typing import Dict, List, Optional

    import requests
    from bs4 import BeautifulSoup


    class IMDbTop100Scraper:
        def __init__(self):
            self.base_url = "https://www.imdb.com/chart/top"
            self.headers = {
                'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'
            }
            self.movies = []

        def fetch_page(self, url: str) -> Optional[BeautifulSoup]:
            """
            Fetch the webpage and return a BeautifulSoup object.
            Returns None on a network error or a non-HTML response.
            """
            try:
                response = requests.get(url, headers=self.headers, timeout=10)
                response.raise_for_status()  # Raises an HTTPError for bad responses
                # Check that we got a valid HTML response
                if 'text/html' not in response.headers.get('content-type', ''):
                    raise ValueError("Received non-HTML response")
                return BeautifulSoup(response.content, 'html.parser')
            except requests.exceptions.RequestException as e:
                print(f"Network error occurred: {e}")
                return None
            except Exception as e:
                print(f"Unexpected error while fetching page: {e}")
                return None

        def parse_movie_list(self, soup: BeautifulSoup) -> List[Dict]:
            """
            Parse the main movie list page to extract titles and years.
            """
            movies = []
            try:
                # IMDb's top chart structure - this selector might need updating
                movie_elements = soup.select('li.ipc-metadata-list-summary-item')
                if not movie_elements:
                    # Alternative selector if the first one fails
                    movie_elements = soup.select('.cli-children')
                if not movie_elements:
                    raise ValueError("Could not find movie elements on the page")
                for element in movie_elements[:100]:  # Limit to top 100
                    # extract_movie_data is defined in the full script (not shown here)
                    movie_data = self.extract_movie_data(element)
                    if movie_data:
                        movies.append(movie_data)
            except Exception as e:
                print(f"Error parsing movie list: {e}")
            return movies

Find the full code here.
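The excerpt stops before the saving step that the prompt asked for. As a rough idea of what that step could look like, here is a minimal sketch that writes a list of movie dictionaries to a JSON file with basic error handling. The function name `save_movies_to_json`, the default file name, and the `title`/`year`/`description` keys are hypothetical and are not taken from the model's output.

```python
import json
from pathlib import Path


def save_movies_to_json(movies: list[dict], path: str = "top_100_movies.json") -> bool:
    """Write the scraped movie records to a JSON file; return True on success."""
    try:
        Path(path).write_text(
            json.dumps(movies, indent=2, ensure_ascii=False), encoding="utf-8"
        )
        return True
    except (OSError, TypeError) as e:
        # OSError: file could not be written; TypeError: a record is not JSON-serializable.
        print(f"Could not save movies to {path}: {e}")
        return False


if __name__ == "__main__":
    sample = [{"title": "Example Film", "year": 1999, "description": "Placeholder entry."}]
    print(save_movies_to_json(sample, "sample_movies.json"))
```

Returning a boolean rather than raising keeps this consistent with the excerpt's style, where failures are printed and the caller receives a fallback value.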

Deepseek-V3.1-Terminus vs DeepSeek-V3.2-Exp Output Comparison

| Feature | DeepSeek-V3.1-Terminus | DeepSeek-V3.2-Exp | Winner |
|---|---|---|---|
| Structure & Presentation | Single dense paragraph | Clear headings, bullet points, summary table | V3.2-Exp |
| Safety & User Guidance | No safety warnings | Bold warning about losing unstaged changes | V3.2-Exp |
| Completeness & Context | Basic two methods only | Adds legacy `git checkout` method and summary table | V3.2-Exp |
| Actionability | Commands embedded in text | Dedicated command blocks with explicit flag explanations | V3.2-Exp |

Also Read: Evolution of DeepSeek: How it Became a Global AI Game-Changer!

Conclusion

DeepSeek-V3.2-Exp is more than a model; it’s a statement. It proves that the next great leap in AI won’t necessarily be a leap in raw power, but a leap in efficiency. By surgically attacking the computational waste in traditional transformers, DeepSeek has made long-context, high-volume AI applications financially viable for a much broader market.

The “Experimental” tag is a candid admission that this is a work in progress, particularly in balancing performance across all tasks. But for the vast majority of enterprise use cases, where processing entire codebases, legal documents, and datasets is the goal, DeepSeek hasn’t just released a new model; it has started a new race.

To know more about the model, check out this link.
