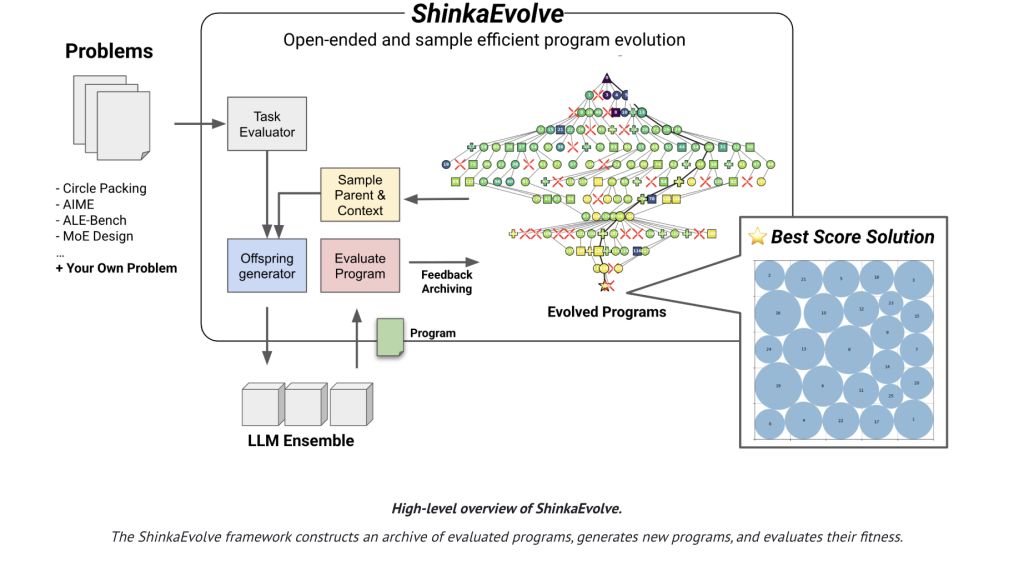

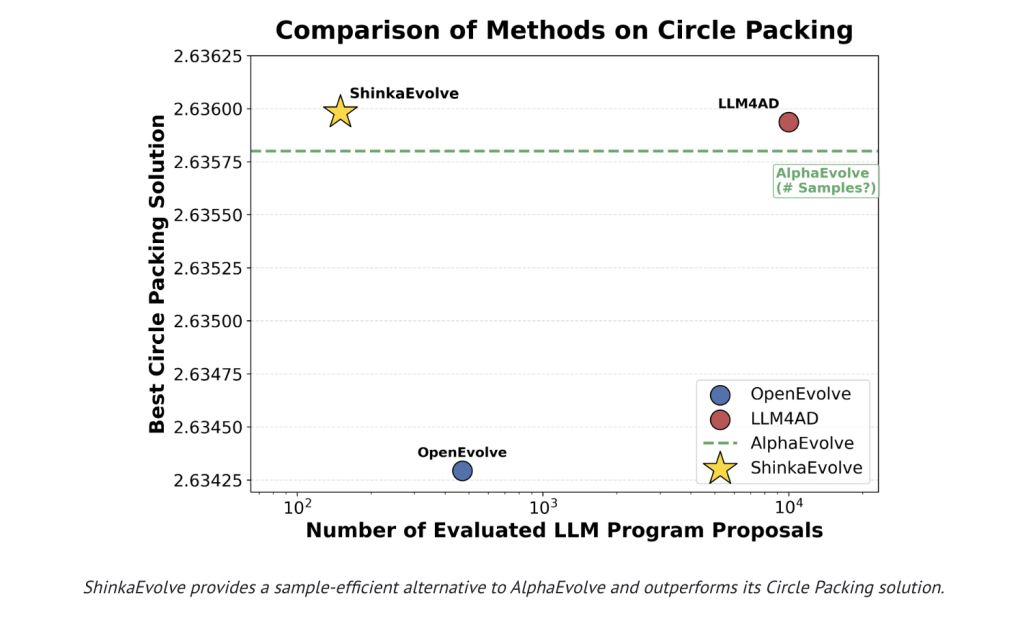

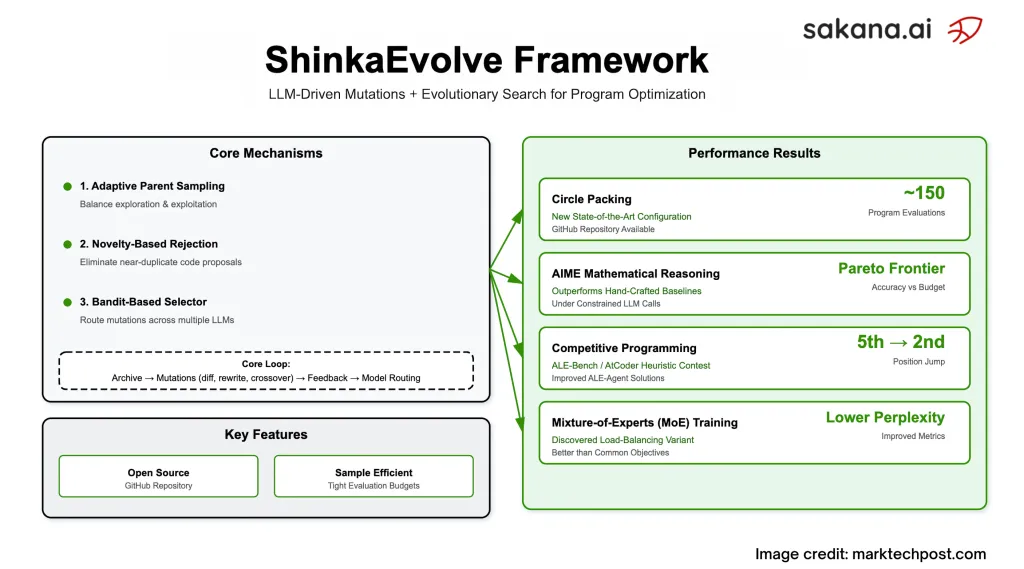

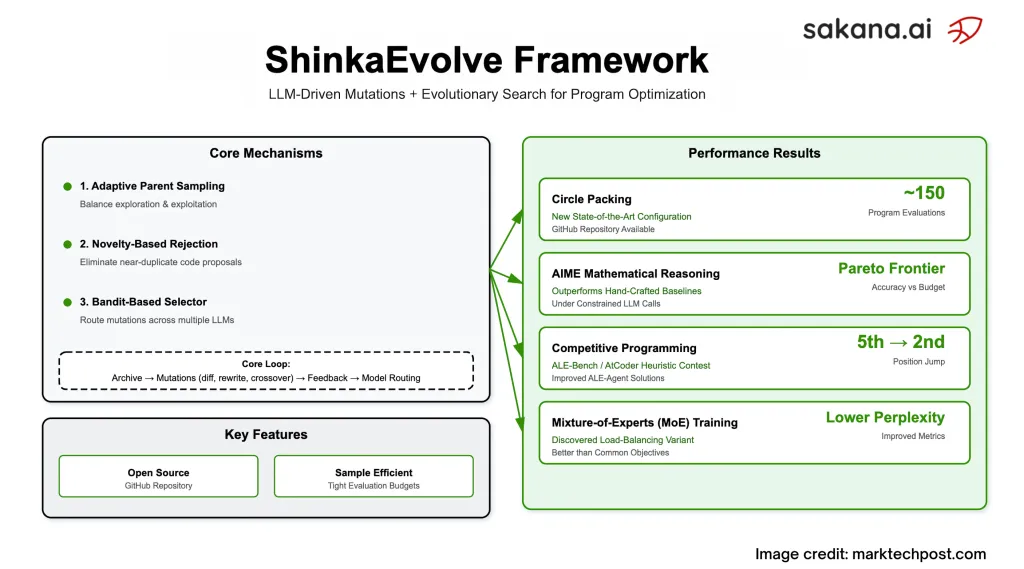

Sakana AI has released ShinkaEvolve, an open-source framework that uses large language models (LLMs) as mutation operators in an evolutionary loop to evolve programs for scientific and engineering problems, while drastically cutting the number of evaluations needed to reach strong solutions. On the canonical circle-packing benchmark (n=26 in a unit square), ShinkaEvolve reports a new SOTA configuration using ~150 program evaluations, where prior systems typically burned through thousands. The project ships under Apache-2.0, with a research report and public code.

What problem is it actually solving?

Most “agentic” code-evolution systems explore by brute force: they mutate code, run it, score it, and repeat, consuming enormous sampling budgets. ShinkaEvolve explicitly targets that waste with three interacting components:

- Adaptive parent sampling to balance exploration and exploitation. Parents are drawn from “islands” via fitness- and novelty-aware policies (power-law, or weighted by performance and offspring counts) rather than always climbing the current best.

- Novelty-based rejection filtering to avoid re-evaluating near-duplicates. Mutable code segments are embedded; if the cosine similarity to an existing program exceeds a threshold, a secondary LLM acts as a “novelty judge” before execution.

- Bandit-based LLM ensembling, so the system learns which model (e.g., GPT/Gemini/Claude/DeepSeek families) yields the largest relative fitness jumps and routes future mutations accordingly (a UCB1-style update on improvement over the parent/baseline).
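The first component above, adaptive parent sampling, can be sketched as rank-based power-law selection inside one island: programs are sorted by fitness and the program at rank r is picked with probability proportional to r^(-alpha). This is a minimal sketch under assumptions; the `Program` record, the exponent `alpha`, and the function names are hypothetical, and ShinkaEvolve's actual policies (including its performance- and offspring-weighted variant) may differ in detail.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Program:
    """Hypothetical archive entry: source code, evaluated fitness, island id."""
    code: str
    fitness: float
    island: int
    num_children: int = 0


def power_law_weights(n: int, alpha: float) -> List[float]:
    """Normalized weights where rank r (1 = best) gets weight proportional to r ** -alpha."""
    raw = [(rank + 1) ** -alpha for rank in range(n)]
    total = sum(raw)
    return [w / total for w in raw]


def sample_parent(archive: List[Program], island: int, alpha: float = 1.0,
                  rng: Optional[random.Random] = None) -> Program:
    """Sample one parent from an island, favouring (but not always picking) the best."""
    rng = rng or random.Random()
    members = [p for p in archive if p.island == island]
    if not members:
        raise ValueError(f"island {island} is empty")
    ranked = sorted(members, key=lambda p: p.fitness, reverse=True)
    parent = rng.choices(ranked, weights=power_law_weights(len(ranked), alpha), k=1)[0]
    # Track offspring counts so an offspring-aware weighting could penalize overused parents.
    parent.num_children += 1
    return parent
```

With `alpha = 0` every island member is equally likely (pure exploration); as `alpha` grows, selection approaches greedy hill-climbing on the current best.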
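The second component above, novelty-based rejection, amounts to a cheap similarity gate in front of an expensive check. In the sketch below the embeddings are plain float vectors (in practice they would come from an embedding model), the 0.95 threshold is illustrative, and `ask_novelty_judge` is a hypothetical stand-in for the secondary LLM call; none of these names come from ShinkaEvolve itself.

```python
import math
from typing import Callable, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def should_evaluate(candidate_emb: Sequence[float],
                    archive_embs: List[Sequence[float]],
                    ask_novelty_judge: Callable[[int], bool],
                    threshold: float = 0.95) -> bool:
    """Return True if the candidate looks novel enough to be worth executing.

    Cheap path: if no archived program is more similar than `threshold`, accept.
    Expensive path: otherwise ask the (hypothetical) LLM judge, passing the
    index of the most similar archived program for comparison.
    """
    if not archive_embs:
        return True
    sims = [cosine_similarity(candidate_emb, e) for e in archive_embs]
    closest = max(range(len(sims)), key=sims.__getitem__)
    if sims[closest] <= threshold:
        return True
    return ask_novelty_judge(closest)
```

The design choice is that the LLM judge only runs for the small fraction of candidates that the embedding check flags, so most rejections and acceptances cost a vector comparison rather than a model call or a full program evaluation.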
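The third component above routes mutations with a multi-armed bandit. The sketch is textbook UCB1 over model names: play each arm once, then pick the arm maximizing mean reward + c·sqrt(ln N / n_i). The reward definition (relative fitness improvement over the parent, clipped to [0, 1]) and the model names are assumptions for illustration; the article only describes ShinkaEvolve's update as "UCB1-style", so its reward shaping may differ.

```python
import math
from typing import Iterable


class UCB1ModelSelector:
    """Textbook UCB1 over a fixed set of LLM 'arms'."""

    def __init__(self, models: Iterable[str], c: float = math.sqrt(2)):
        self.models = list(models)
        self.c = c
        self.counts = {m: 0 for m in self.models}
        self.value_sums = {m: 0.0 for m in self.models}
        self.total = 0

    def select(self) -> str:
        # Play every arm once before applying the UCB formula.
        for m in self.models:
            if self.counts[m] == 0:
                return m

        def ucb(m: str) -> float:
            mean = self.value_sums[m] / self.counts[m]
            bonus = self.c * math.sqrt(math.log(self.total) / self.counts[m])
            return mean + bonus

        return max(self.models, key=ucb)

    def update(self, model: str, parent_fitness: float, child_fitness: float) -> None:
        # Hypothetical reward: relative improvement over the parent, clipped to [0, 1]
        # so it stays on the scale that textbook UCB1 assumes.
        denom = abs(parent_fitness) or 1.0
        reward = min(1.0, max(0.0, (child_fitness - parent_fitness) / denom))
        self.counts[model] += 1
        self.value_sums[model] += reward
        self.total += 1
```

Usage: call `select()` to pick the model for the next mutation, evaluate the child, then call `update(model, parent_fitness, child_fitness)`; models that keep producing larger jumps get chosen more often, while the bonus term keeps occasionally retrying the others.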

Does the sample-efficiency claim hold beyond toy problems?

The research team evaluates four distinct domains and shows consistent gains with small budgets:

- Circle packing (n=26): reaches an improved configuration in roughly 150 evaluations; the research team also validates it with stricter exact-constraint checking.

- AIME math reasoning (2024 set): evolves agentic scaffolds that trace out a Pareto frontier of accuracy vs. LLM-call budget, outperforming hand-built baselines under limited query budgets and transferring to other AIME years and LLMs.

- Competitive programming (ALE-Bench LITE): starting from ALE-Agent solutions, ShinkaEvolve delivers a ~2.3% mean improvement across 10 tasks and pushes one task's solution from 5th → 2nd in an AtCoder leaderboard counterfactual.

- LLM training (Mixture-of-Experts): evolves a new load-balancing loss that improves perplexity and downstream accuracy at multiple regularization strengths vs. the widely used global-batch LBL.
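The AIME result is framed as a Pareto frontier of accuracy against LLM-call budget. A small helper that keeps only the non-dominated scaffolds from (calls, accuracy) pairs makes the idea concrete; the scaffold names and numbers in the usage lines are made up for illustration and are not results from the paper.

```python
from typing import List, NamedTuple


class ScaffoldResult(NamedTuple):
    name: str
    calls: int        # LLM calls per problem (lower is better)
    accuracy: float   # fraction of problems solved (higher is better)


def dominates(a: ScaffoldResult, b: ScaffoldResult) -> bool:
    """a dominates b if it is no worse on both axes and strictly better on at least one."""
    no_worse = a.calls <= b.calls and a.accuracy >= b.accuracy
    strictly_better = a.calls < b.calls or a.accuracy > b.accuracy
    return no_worse and strictly_better


def pareto_front(results: List[ScaffoldResult]) -> List[ScaffoldResult]:
    """Non-dominated results, sorted by call budget."""
    front = [r for r in results if not any(dominates(o, r) for o in results)]
    return sorted(front, key=lambda r: r.calls)


# Illustrative, made-up numbers.
toy = [
    ScaffoldResult("single-shot", 1, 0.20),
    ScaffoldResult("majority-vote", 10, 0.30),
    ScaffoldResult("ensemble", 7, 0.35),
]
print([r.name for r in pareto_front(toy)])  # ['single-shot', 'ensemble']
```

Here "majority-vote" drops out because "ensemble" uses fewer calls and is more accurate; a scaffold "outperforms under a budget" when it sits on or above this frontier at that call count.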
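For circle packing, exact-constraint checking means verifying that every circle lies inside the unit square and that no two circles overlap, with zero (or a very strict) tolerance. A minimal checker follows. The scoring rule (sum of radii for a valid packing, the usual objective for the n=26 benchmark) and the `tol` parameter are assumptions here, not ShinkaEvolve's actual evaluator.

```python
import itertools
import math
from typing import List, Sequence, Tuple

Circle = Tuple[float, float, float]  # (x, y, r)


def packing_violations(circles: Sequence[Circle], tol: float = 0.0) -> List[str]:
    """List every constraint violation for circles that must fit in [0, 1]^2."""
    problems = []
    for i, (x, y, r) in enumerate(circles):
        if r < 0:
            problems.append(f"circle {i}: negative radius")
        if x - r < -tol or x + r > 1 + tol or y - r < -tol or y + r > 1 + tol:
            problems.append(f"circle {i}: outside unit square")
    for (i, (x1, y1, r1)), (j, (x2, y2, r2)) in itertools.combinations(enumerate(circles), 2):
        if math.hypot(x1 - x2, y1 - y2) < r1 + r2 - tol:
            problems.append(f"circles {i} and {j}: overlap")
    return problems


def score(circles: Sequence[Circle], tol: float = 0.0) -> float:
    """Assumed scoring rule: sum of radii if the packing is valid, else 0.0."""
    return 0.0 if packing_violations(circles, tol) else sum(r for _, _, r in circles)
```

Setting `tol = 0.0` is the strict variant: a packing that only "almost" satisfies the constraints, for example by exploiting a permissive tolerance, scores zero.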

What does the evolutionary loop look like in practice?

ShinkaEvolve maintains an archive of evaluated programs with fitness, public metrics, and textual feedback. For each generation, it samples an island and parent(s); builds a mutation context from the top-K programs plus random “inspiration” programs; then proposes edits via three operators (diff edits, full rewrites, and LLM-guided crossovers) while protecting immutable code regions with explicit markers. Executed candidates update both the archive and the bandit statistics that steer subsequent LLM/model selection. The system periodically produces a meta-scratchpad that summarizes recently successful strategies; these summaries are fed back into prompts to accelerate later generations.
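The marker-based protection of immutable code can be sketched as follows: the LLM's proposal is spliced in only between a start and an end marker, and everything outside them survives byte-for-byte. The marker strings `EVOLVE-BLOCK-START` / `EVOLVE-BLOCK-END` and the function names are assumptions for illustration; check the ShinkaEvolve repository for the markers it actually uses.

```python
from typing import Tuple

# Hypothetical marker strings delimiting the region the LLM may rewrite.
START = "# EVOLVE-BLOCK-START"
END = "# EVOLVE-BLOCK-END"


def split_program(source: str) -> Tuple[str, str, str]:
    """Split source into (immutable prefix, mutable body, immutable suffix)."""
    start = source.find(START)
    end = source.find(END)
    if start == -1 or end == -1 or end < start:
        raise ValueError("program needs a START marker followed by an END marker")
    body_start = start + len(START)
    return source[:body_start], source[body_start:end], source[end:]


def apply_rewrite(source: str, new_body: str) -> str:
    """Replace only the mutable region; the prefix and suffix are kept verbatim."""
    if START in new_body or END in new_body:
        raise ValueError("proposed body must not contain markers")
    prefix, _old_body, suffix = split_program(source)
    return prefix + "\n" + new_body.strip("\n") + "\n" + suffix
```

Because the evaluation harness (imports, I/O, scoring calls) lives outside the markers, a mutation can change the algorithm but cannot quietly change how it is measured.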

What are the concrete results?

- Circle packing: a combination of structured initialization (e.g., golden-angle patterns), hybrid global–local search (simulated annealing + SLSQP), and escape mechanisms (temperature reheating, ring rotations), all discovered by the system rather than hand-coded a priori.

- AIME scaffolds: a three-stage expert ensemble (generation → critical peer review → synthesis) that hits the accuracy/cost sweet spot at ~7 calls while remaining robust when swapped to different LLM backends.

- ALE-Bench: targeted engineering wins (e.g., caching kd-tree subtree stats; “targeted edge moves” toward misclassified items) that push scores without wholesale rewrites.

- MoE loss: adds an entropy-modulated under-use penalty to the global-batch objective; empirically, it reduces mis-routing and improves perplexity/benchmarks as layer routing concentrates.
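Golden-angle initialization, one of the circle-packing ingredients listed above, is easy to illustrate: place n points on a Vogel (sunflower) spiral, which spreads them nearly uniformly over a disk, centered in the unit square. This is a generic sketch of the pattern, not the evolved program; the `margin` parameter and the simple radius rule are assumptions, and a real solver would follow this with local optimization (e.g., SLSQP) of centers and radii.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def golden_angle_centers(n: int, margin: float = 0.05) -> List[Point]:
    """n points on a Vogel spiral, scaled to lie inside [margin, 1 - margin]^2."""
    golden_angle = math.pi * (3 - math.sqrt(5))  # about 137.5 degrees
    max_rho = 0.5 - margin
    centers = []
    for k in range(n):
        # sqrt spacing gives each point roughly equal area on the disk.
        rho = max_rho * math.sqrt((k + 0.5) / n)
        theta = k * golden_angle
        centers.append((0.5 + rho * math.cos(theta), 0.5 + rho * math.sin(theta)))
    return centers


def feasible_radii(centers: List[Point]) -> List[float]:
    """Simple feasible radii: half the nearest-neighbour distance, capped by the walls.

    Any two radii then sum to at most their centers' distance, so nothing overlaps.
    """
    radii = []
    for i, (x, y) in enumerate(centers):
        wall = min(x, 1 - x, y, 1 - y)
        nearest = min((math.dist((x, y), c) for j, c in enumerate(centers) if j != i),
                      default=float("inf"))
        radii.append(min(wall, nearest / 2))
    return radii
```

The result is a valid but loose starting packing for n=26; the reported gains come from the search that refines it (annealing, SLSQP, reheating), which this sketch does not attempt.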
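The shape of the AIME scaffold listed above (generation → critical peer review → synthesis) can be sketched with a stubbed model call and a hard call budget. How the ~7 calls are split across the three stages is not stated here; the 3 + 3 + 1 split, the prompt wording, and the `LLM` callable type are hypothetical.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


class CallBudget:
    """Wraps an LLM function and refuses calls beyond a fixed budget."""

    def __init__(self, llm: LLM, max_calls: int):
        self.llm, self.max_calls, self.used = llm, max_calls, 0

    def __call__(self, prompt: str) -> str:
        if self.used >= self.max_calls:
            raise RuntimeError("LLM call budget exhausted")
        self.used += 1
        return self.llm(prompt)


def expert_ensemble(problem: str, llm: LLM, n_experts: int = 3, max_calls: int = 7) -> str:
    call = CallBudget(llm, max_calls)
    # Stage 1: independent candidate solutions from n_experts "experts".
    drafts = [call(f"Expert {i}: solve step by step.\n{problem}") for i in range(n_experts)]
    # Stage 2: one critical review call per draft.
    reviews = [call(f"Critically review this solution.\n{problem}\n{d}") for d in drafts]
    # Stage 3: a single synthesis call sees every draft and its review.
    bundle = "\n\n".join(f"Draft:\n{d}\nReview:\n{r}" for d, r in zip(drafts, reviews))
    return call(f"Synthesize a final integer answer.\n{problem}\n{bundle}")
```

Wrapping the model in a budget object makes the query cap an enforced property of the scaffold rather than a convention, which is what makes accuracy-per-call comparisons meaningful.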
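For reference, the MoE result builds on a load-balancing loss (LBL). The widely used Switch-Transformer-style auxiliary loss is N · Σ_i f_i · P_i, where N is the number of experts, f_i is the fraction of routing assignments sent to expert i, and P_i is the mean router probability for expert i; the global-batch variant computes these statistics over the whole batch instead of per micro-batch. The sketch below implements only this baseline in plain Python. The evolved entropy-modulated under-use penalty is not specified here in enough detail to reproduce, so it is deliberately omitted.

```python
from typing import List, Sequence


def load_balancing_loss(router_probs: Sequence[Sequence[float]], top_k: int = 1) -> float:
    """Switch-style auxiliary loss N * sum_i f_i * P_i over one batch of tokens.

    Pass every token of the global batch to get the global-batch variant.
    router_probs: per-token probability vectors over experts (each sums to 1).
    f_i: fraction of the top-k routing assignments that go to expert i.
    P_i: mean router probability given to expert i.
    """
    if not router_probs:
        raise ValueError("need at least one token")
    n_tokens = len(router_probs)
    n_experts = len(router_probs[0])
    counts: List[int] = [0] * n_experts
    prob_sums: List[float] = [0.0] * n_experts
    for probs in router_probs:
        chosen = sorted(range(n_experts), key=lambda e: probs[e], reverse=True)[:top_k]
        for e in chosen:
            counts[e] += 1
        for e, p in enumerate(probs):
            prob_sums[e] += p
    f = [c / (n_tokens * top_k) for c in counts]
    P = [s / n_tokens for s in prob_sums]
    return n_experts * sum(fi * pi for fi, pi in zip(f, P))
```

Perfectly uniform routing gives a loss of 1.0; routing that collapses onto a few experts pushes it higher, which is the pressure the auxiliary term adds during training.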

How does this compare to AlphaEvolve and related systems?

AlphaEvolve demonstrated strong closed-source results, but at higher evaluation counts. ShinkaEvolve reproduces and surpasses the circle-packing result with orders of magnitude fewer samples and releases all components as open source. The research team also contrasts variants (single model vs. fixed ensemble vs. bandit ensemble) and ablates parent selection and novelty filtering, showing that each contributes to the observed efficiency.

Summary

ShinkaEvolve is an Apache-2.0 framework for LLM-driven program evolution that cuts evaluations from thousands to hundreds by combining fitness/novelty-aware parent sampling, embedding-plus-LLM novelty rejection, and a UCB1-style adaptive LLM ensemble. It sets a new SOTA on circle packing (~150 evals), finds stronger AIME scaffolds under strict query budgets, improves ALE-Bench solutions (~2.3% mean gain, 5th → 2nd on one task), and discovers a new MoE load-balancing loss that improves perplexity and downstream accuracy. Code and report are public.

FAQs: ShinkaEvolve

1) What is ShinkaEvolve?

An open-source framework that couples LLM-driven program mutations with evolutionary search to automate algorithm discovery and optimization. Code and report are public.

2) How does it achieve higher sample efficiency than prior evolutionary systems?

Three mechanisms: adaptive parent sampling (explore/exploit balance), novelty-based rejection to avoid duplicate evaluations, and a bandit-based selector that routes mutations to the most promising LLMs.

3) What evidence supports the results?

It reaches state-of-the-art circle packing with ~150 evaluations; on AIME-2024 it evolves scaffolds under a 10-query cap per problem; and it improves ALE-Bench solutions over strong baselines.

4) Where can I run it, and what is the license?

The GitHub repo provides a WebUI and examples; ShinkaEvolve is released under Apache-2.0.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.