A new approach encodes data across multiple frequencies in a single analog device, enabling energy-efficient, high-accuracy AI hardware without adding more physical components.

Researchers have unveiled an approach to scaling analog computing systems using a synthetic frequency domain, a technique that could enable compact, energy-efficient AI hardware without adding more physical components. The method was developed by teams at Virginia Tech, Oak Ridge National Laboratory, and the University of Texas at Dallas.

Analog computers encode information as continuous quantities, such as voltage, vibrations, or frequency, rather than as digital 0s and 1s. Although inherently more energy-efficient than digital systems, analog platforms face challenges when scaled up: components can behave inconsistently across larger arrays, leading to errors. The new synthetic-domain method sidesteps this by encoding data across different frequencies within a single device, minimizing device-to-device variation.
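
As a rough illustration of what encoding data across different frequencies means, the NumPy sketch below places each value of a vector on its own tone inside one waveform and reads the values back with an FFT. The function names, frequency bins, sample count, and the noiseless, perfectly linear channel are illustrative assumptions, not parameters of the researchers' device.

```python
import numpy as np

def encode(values, bins, n_samples):
    """Superpose one cosine per value; values[i] sets the amplitude of the tone at bins[i]."""
    t = np.arange(n_samples)
    signal = np.zeros(n_samples)
    for amplitude, k in zip(values, bins):
        signal += amplitude * np.cos(2 * np.pi * k * t / n_samples)
    return signal

def decode(signal, bins):
    """Recover each tone's amplitude from the real FFT of the combined waveform."""
    spectrum = np.fft.rfft(signal)
    # A cosine of amplitude A on integer bin k (0 < k < n/2) gives rfft[k] = A * n / 2.
    return 2.0 * spectrum[list(bins)].real / len(signal)

values = np.array([0.5, -1.2, 0.8, 0.3])
bins = [10, 20, 30, 40]  # hypothetical frequency channels (FFT bin indices)
waveform = encode(values, bins, n_samples=256)
print(np.allclose(decode(waveform, bins), values))  # True
```

Each value comes back up to floating-point error because, in this idealized model, the tones sit on exact FFT bins and do not overlap.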

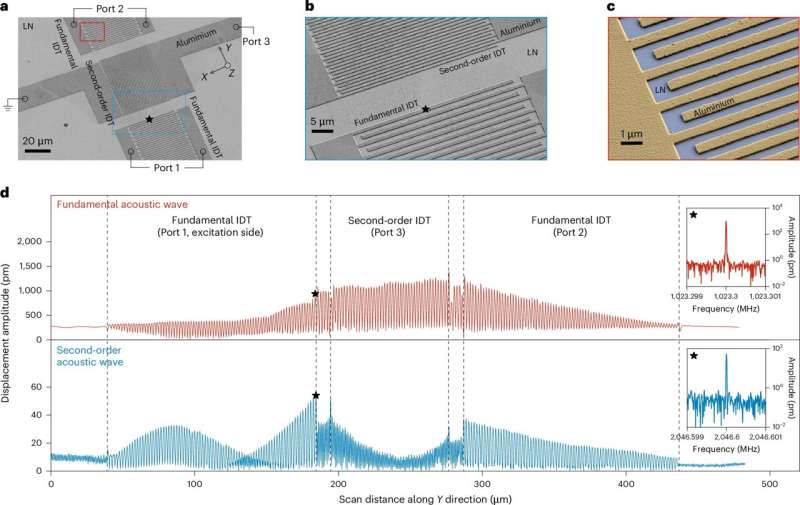

At the core of the approach is a lithium niobate integrated nonlinear phononic device, which exploits acoustic-wave physics to perform operations such as matrix multiplication. By representing a 16×16 data matrix on a single device, the system avoids the multiple components typically required when scaling up conventional analog computing.
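
The article does not describe how the device lays out its frequencies, so the following is only a hypothetical, idealized model of how one nonlinear element can compute a matrix-vector product through frequency mixing. Each input value x_j rides on its own tone, each matrix entry W_ij on another tone, and an ideal mixer (assumed here to be a perfect multiplier) produces sum and difference frequencies. The plan is chosen so that every product for row i lands on a shared output frequency h_i, where the terms add up to one element of W @ x. The function names, bin layout, and sample count are assumptions for illustration, not the researchers' design.

```python
import numpy as np

def frequency_plan(n):
    """Hypothetical integer-bin frequency plan for an n x n matrix-vector product.

    Input x_j sits on bin f[j]; weight W[i, j] sits on bin g[i, j] = h[i] - f[j],
    so the sum-frequency product of x_j and W[i, j] lands on the output bin h[i].
    Output bins are spaced 2n + 1 apart, which keeps every other mixing product
    (mismatched sums and all difference frequencies) off the output bins.
    """
    f = np.arange(1, n + 1)                # input bins 1..n
    spacing = 2 * n + 1
    h = spacing * np.arange(1, n + 1)      # one output bin per matrix row
    g = h[:, None] - f[None, :]            # weight bins, shape (n, n)
    return f, g, h

def tones(amplitudes, bins, n_samples):
    """Superpose cosines with the given amplitudes on the matching integer bins."""
    t = np.arange(n_samples)
    a = np.ravel(amplitudes)[:, None]
    k = np.ravel(bins)[:, None]
    return np.sum(a * np.cos(2 * np.pi * k * t / n_samples), axis=0)

def mixer_matvec(W, x, n_samples=2048):
    """Compute W @ x by multiplying two multi-tone signals in an ideal mixer."""
    n = len(x)
    assert W.shape == (n, n), "this sketch handles square matrices only"
    f, g, h = frequency_plan(n)
    assert h[-1] + n < n_samples // 2, "all mixing products must stay below Nyquist"
    product = tones(x, f, n_samples) * tones(W, g, n_samples)
    spectrum = np.fft.rfft(product)
    # cos(a)cos(b) = [cos(a + b) + cos(a - b)] / 2, and a cosine of amplitude A on
    # bin k gives rfft[k] = A * n_samples / 2, so bin h[i] holds (n_samples / 4) * (W @ x)[i].
    return 4.0 * spectrum[h].real / n_samples

rng = np.random.default_rng(0)
W = rng.uniform(-1, 1, size=(16, 16))   # a 16 x 16 matrix, matching the size in the article
x = rng.uniform(-1, 1, size=16)
print(np.allclose(mixer_matvec(W, x), W @ x))  # True
```

Spacing the output bins more than 2n apart is what keeps unwanted mixing products off the readout frequencies. A real device would also have to contend with noise, finite bandwidth, and higher-order nonlinear terms, all of which this sketch ignores.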

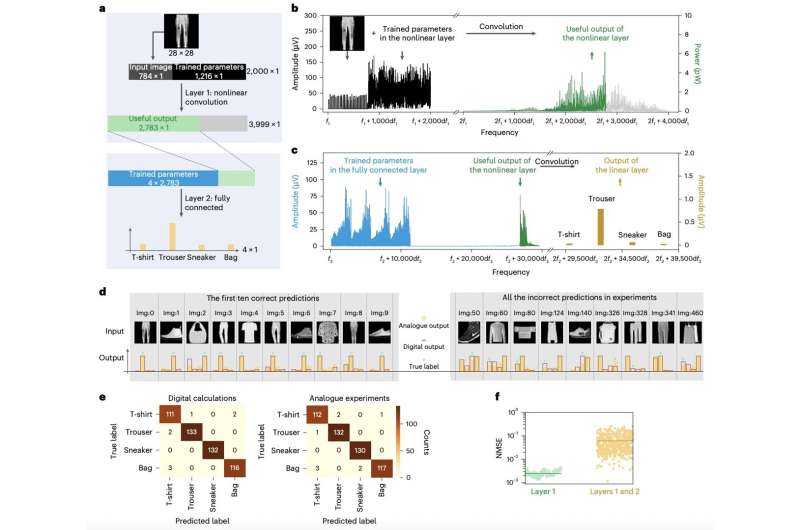

Initial demonstrations showed the platform successfully classifying data into four categories using a single device or a minimal number of devices, highlighting its potential for early-stage R&D applications where hardware availability is limited. The method could extend to emerging devices across different computing modalities, offering a pathway to reliable analog AI accelerators.

Beyond classification tasks, the researchers are exploring scaling the platform to handle larger neural networks and more complex computations. The combination of energy efficiency, compact form factor, and high accuracy positions synthetic-domain analog computing as a promising direction for next-generation AI hardware, particularly in applications where conventional digital systems face energy or size constraints.

With this approach, analog computing could finally bridge the gap between lab-scale prototypes and scalable, practical systems, opening doors for AI-specific processors that leverage physics-based computation rather than purely digital logic. “The synthetic domain approach allows us to implement physical neural networks (PNNs) using only a few acoustic-wave devices. Co-designing the neural network with the hardware significantly boosts accuracy, in our case achieving 98.2% on a classification task,” said Linbo Shao, the study’s senior author.
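
One common way to co-design a network with its hardware is hardware-aware training, in which the forward pass during training runs through a model of the physical device so that the learned weights compensate for its imperfections. The article does not say this is the team's method. The NumPy sketch below is a toy under stated assumptions: synthetic 4-class, 16-feature data, and a hypothetical fixed per-weight gain error (`MISMATCH`) standing in for device non-idealities. It trains one classifier as if the hardware were ideal and another through the device model, then evaluates both on the modelled device.

```python
import numpy as np

rng = np.random.default_rng(0)
N_FEATURES, N_CLASSES = 16, 4

def make_data(n_per_class):
    """Synthetic 4-class data: class c is centred at 4 * e_c with unit Gaussian noise."""
    X = rng.normal(size=(n_per_class * N_CLASSES, N_FEATURES))
    y = np.repeat(np.arange(N_CLASSES), n_per_class)
    X[np.arange(len(y)), y] += 4.0
    return X, y

# Hypothetical device model: every programmed weight is realised with a fixed gain error.
MISMATCH = rng.uniform(0.5, 1.5, size=(N_CLASSES, N_FEATURES))

def forward(W, b, X, mismatch):
    """Class scores as the modelled hardware would compute them."""
    return X @ (W * mismatch).T + b

def accuracy(W, b, X, y, mismatch):
    return float(np.mean(forward(W, b, X, mismatch).argmax(axis=1) == y))

def train(X, y, mismatch, lr=0.1, steps=1000):
    """Softmax regression by gradient descent through the given forward model."""
    W = np.zeros((N_CLASSES, N_FEATURES))
    b = np.zeros(N_CLASSES)
    Y = np.eye(N_CLASSES)[y]
    for _ in range(steps):
        Z = forward(W, b, X, mismatch)
        P = np.exp(Z - Z.max(axis=1, keepdims=True))  # numerically stable softmax
        P /= P.sum(axis=1, keepdims=True)
        dZ = (P - Y) / len(y)                   # gradient of mean cross-entropy w.r.t. scores
        W -= lr * (dZ.T @ X) * mismatch         # chain rule through the elementwise mismatch
        b -= lr * dZ.sum(axis=0)
    return W, b

X_train, y_train = make_data(200)
X_test, y_test = make_data(200)

ideal = np.ones_like(MISMATCH)
W_naive, b_naive = train(X_train, y_train, ideal)      # trained as if the hardware were perfect
W_aware, b_aware = train(X_train, y_train, MISMATCH)   # trained through the device model

print("naive on device:", accuracy(W_naive, b_naive, X_test, y_test, MISMATCH))
print("aware on device:", accuracy(W_aware, b_aware, X_test, y_test, MISMATCH))
```

The exact accuracies depend on the random seed and the assumed mismatch. The point is the structure of the training loop: the gradient is taken through the same forward model the hardware will apply, so the optimizer sees and corrects for the device's errors.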