Each day, billions of information factors are tied to locations on the map. Supply routes, retailer visits, street networks, cell towers, and crop fields, all carry essential context for making enterprise selections. The issue is that analyzing this knowledge at scale has been laborious. Legacy spatial programs are sluggish, require handbook indexing, and infrequently lock info into proprietary codecs.

Right this moment, we introduce Spatial SQL in Databricks. Spatial SQL brings geospatial evaluation on to the Databricks Platform. Now you can work with native GEOMETRY and GEOGRAPHY knowledge varieties, use greater than 80 SQL features, and run spatial joins at excessive velocity and efficiency, all whereas holding your knowledge open and prepared for scale.

Location knowledge performs a task in almost each trade, and Spatial SQL makes it simpler to make use of that info.

Listed here are some examples:

- Retail operations can perceive the place their clients come from by analyzing areas and foot visitors

- Transportation analysts can enhance security and buyer expertise by analyzing car incidents and mobile community connectivity

- Vitality corporations can optimize crew deployment throughout outages and discover ideally suited areas for finding wind and solar energy farms

- Agricultural operators can apply precision agriculture strategies to decrease prices and enhance yield effectivity

- Insurance coverage analysts can perceive threat by analyzing policyholder addresses throughout flood, hearth, and hurricane zones

- Healthcare organizations can evaluate and predict well being outcomes by analyzing environmental components throughout geographies

- And way more!

Spatial SQL is already serving to clients velocity up efficiency and drive decrease prices:

“Databricks Spatial SQL has redefined how we run large-scale spatial joins. By integrating Spatial SQL features into our processing pipelines, we’ve seen over 20X quicker efficiency and greater than 50% decrease prices on the identical workloads. This breakthrough makes it doable to combine and ship wealthy street community knowledge at a scale and velocity that merely wasn’t possible earlier than.”

– Laxmi Duddu, Sr. Supervisor, Autonomy Knowledge Platform & Analytics, Rivian Automotive

Prospects have beforehand struggled to handle and scale spatial workloads with legacy programs, third get together libraries, or resorting to handbook indexing methods. With Spatial SQL, clients get out-of-the-box simplicity and scalability.

“Spatial SQL lets us scale geospatial ETL like by no means earlier than. As an alternative of overloading PostGIS servers with heavy queries, we shift the load to Databricks and reap the benefits of distributed processing, quick spatial joins, and environment friendly dealing with of vector knowledge. It’s a extra environment friendly, resilient, and scalable method for dealing with massive and complicated geospatial datasets.”

— Pierre Chenaux, Tech Chief of Geospatial division, TotalEnergies

A serious driver of efficiency is assist for first-class geospatial knowledge varieties. As an alternative of storing geo knowledge in string, binary, or decimal columns, now you can use native GEOMETRY and GEOGRAPHY knowledge varieties. These varieties embrace bounding field statistics that Databricks makes use of throughout question execution to skip irrelevant knowledge and velocity up joins. Spatial SQL additionally supplies import features for normal codecs corresponding to Nicely Identified Textual content, Nicely Identified Binary, GeoJSON, and easy latitude or longitude values.

These knowledge varieties are fully open in Parquet, Iceberg, and Delta. The Databricks crew has helped shape the proposed specs, guaranteeing there is no such thing as a lock-in with proprietary warehouses. With the permitted Apache Spark™ SPIP, GEOMETRY and GEOGRAPHY will quickly be first-class knowledge varieties within the open supply engine as effectively.

What are you able to do with Spatial SQL?

Spatial SQL is greater than a set of recent features. It offers you the constructing blocks to handle the total journey of spatial knowledge, from storage and import to evaluation and transformation. By working with native knowledge varieties and environment friendly operations, you’ll be able to deliver location into on a regular basis queries with out including complexity.

Listed here are a number of the core issues you are able to do:

- Retailer spatial knowledge natively with GEOMETRY and GEOGRAPHY

- Import and export in codecs corresponding to WKT, WKB, GeoJSON, and GeoHash

- Construct new objects with constructors like ST_Point or ST_MakeLine

- Calculate measurements utilizing features like ST_Distance and ST_AREA

- Carry out spatial joins utilizing relationships corresponding to ST_Contains and ST_Intersects

- Remodel between coordinate programs with ST_Transform

- Edit, validate, and mix spatial objects utilizing ST_ISVALID or ST_UNION_AGG

- And way more!

These options offer you an entire toolkit for spatial evaluation instantly in SQL, additionally obtainable with Python and Scala APIs. Whenever you put them collectively, they unlock actual workflows that matter in follow, which we are going to stroll by means of within the subsequent part.

Spatial SQL Examples in follow

Geospatial knowledge is all over the place and rising. GPS traces with latitudes and longitudes are emitted from an rising variety of gadgets, sensors, and automobiles each second of the day. The world is being cataloged and up to date continuously, with locations, roads, networks, and bounds modeled as factors, traces, and polygons. Throughout each trade – retail, transportation and logistics, power, local weather and pure sciences, agriculture, public sector, monetary companies, actual property, insurance coverage, telecommunications – location issues to each decision-maker who wants to know the “the place” of their knowledge.

Now we have crafted 4 brief examples to get began with working with the brand new spatial knowledge varieties and expressions with the next targets.

- Put together knowledge for environment friendly processing through the use of the brand new GEOMETRY sort

- Carry out knowledge enrichment by combining two spatial datasets utilizing a spatial be a part of

- Remodel knowledge into an applicable spatial reference system to enhance the accuracy of distance measurements

- Measure the space between two cities

In these examples, we are going to use addresses, buildings, and divisions datasets from OvertureMaps.org. These datasets are supplied for obtain in varied methods, corresponding to GeoParquet.

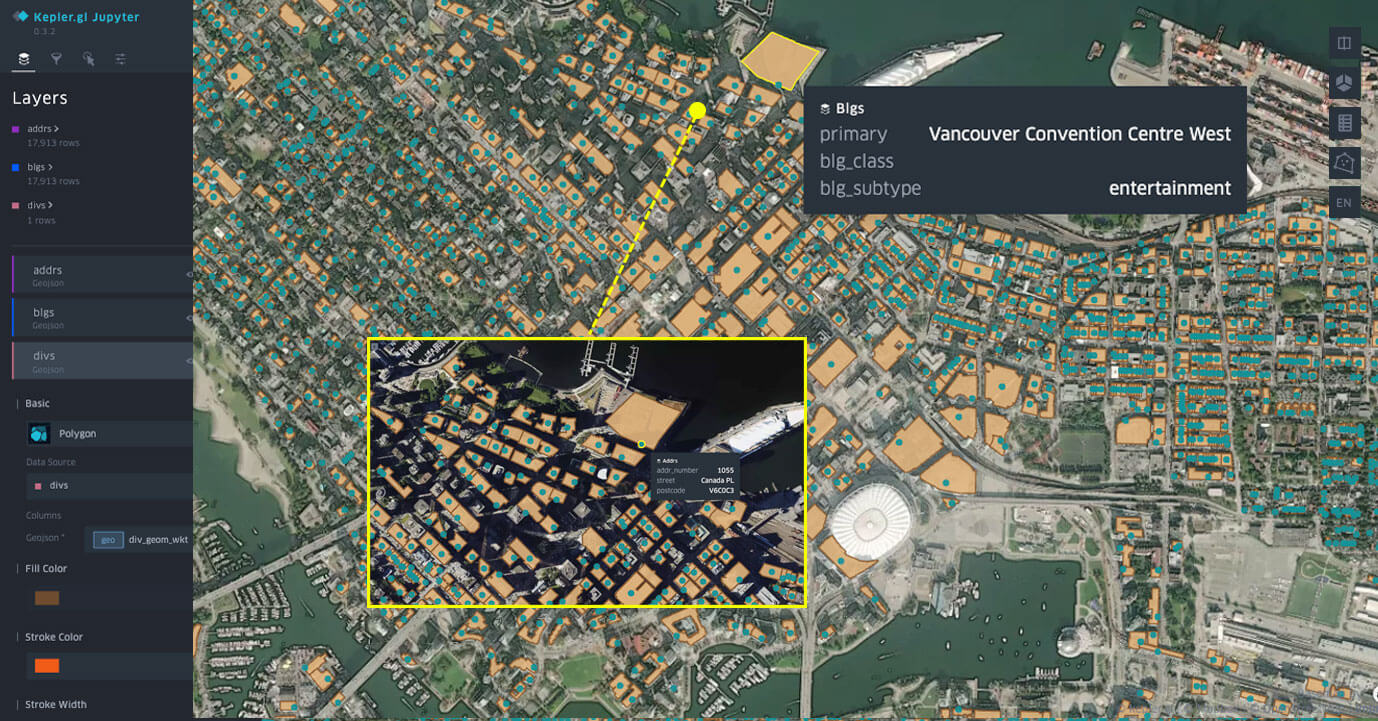

Overture Maps datasets visualized in Databricks Pocket book with kepler.gl.

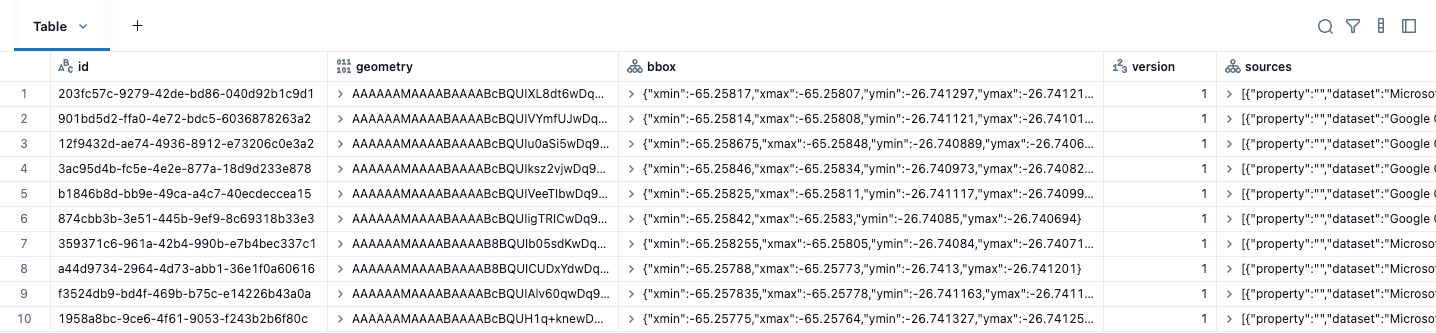

1. Making a GEOMETRY column

Step one earlier than performing any spatial evaluation is to transform your knowledge to make use of GEOMETRY OR GEOGRAPHY knowledge varieties. After downloading the Overture Maps knowledge, we merely must create a local GEOMETRY column from the supplied WKB geometry column and drop different pointless columns like bbox. A bounding field is the smallest rectangle that comprises a geometry. In spatial queries, bounding bins velocity queries up by rapidly discarding knowledge that may’t presumably overlap. If two bounding bins don’t intersect, the geometries inside them positively don’t, so the database can skip the costly intersection verify and cut back the quantity of information being processed. We don’t want the bbox area as a result of this info is now managed within the column statistics. For these datasets, addresses are POINTS, whereas buildings and divisions are POLYGONS / MULTI-POLYGONS. Right here is the initially downloaded buildings knowledge, displaying the primary 5 columns.

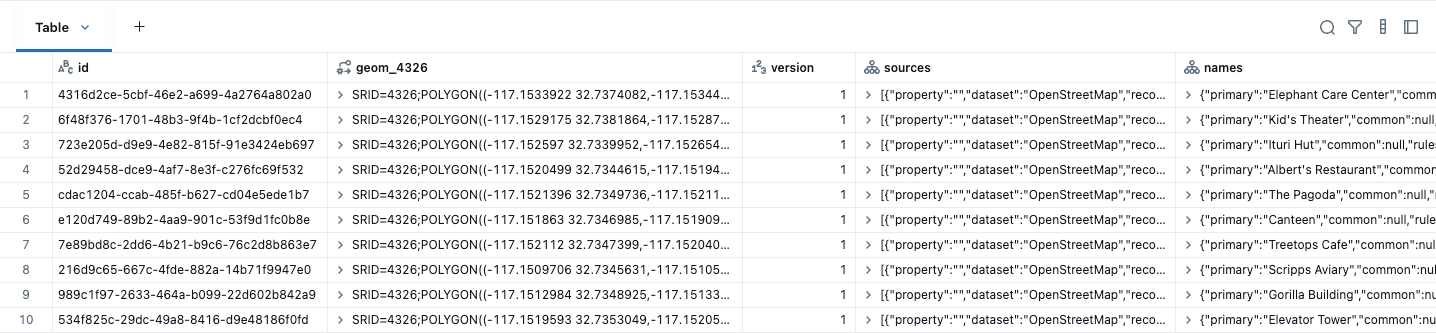

This knowledge will be simply transformed right into a Lakehouse Desk with native GEOMETRY utilizing ST_GeomFromWKB, proven within the instance under for buildings. We all know our knowledge is in WGS84 (EPSG:4326), so we specify that within the creation of the spatial sort. An SRID identifies the coordinate system of your spatial knowledge, which defines the models (like levels or meters) utilized in calculations corresponding to distance and space. You will need to set a legitimate SRID when making a geometry column, or the question will return an error. Additionally, be aware that our native varieties show in a human-friendly format (EWKT).

Along with WKB, spatial knowledge can be instantly imported into our native varieties from the most typical interchange codecs:

- Latitude and longitude coordinates utilizing ST_POINT

- WKT utilizing ST_GeomFromWKT or ST_GeomFromText

- WKB utilizing ST_GeomFromWKB or ST_GeomFromBinary

- GeoJSON utilizing ST_GeomFromGeoJSON

- GeoHash utilizing ST_PointFromGeoHash

Equally, spatial knowledge will be exported as a variety of codecs:

- WKT utilizing ST_AsWKT or ST_AsText

- WKB utilizing ST_AsWKB or ST_AsBinary

- GeoJSON utilizing ST_AsGeoJSON

- Prolonged WKT utilizing ST_AsEWKT

- Prolonged WKB utilizing ST_AsEWKB

- GeoHash utilizing ST_GeoHash

Be aware: We even have import and export expressions for GEOGRAPHY varieties.

2. Spatially becoming a member of a number of datasets collectively

Spatial joins are among the many most essential and broadly used operations in geospatial knowledge processing. They can help you mix attributes from completely different datasets and carry out aggregations or knowledge enrichment primarily based on their spatial relationships, like containment, intersection, and proximity. This makes spatial joins important for answering real-world questions like figuring out which buildings fall inside a flood zone, assigning census demographics to buyer addresses, and analyzing related automobiles inside cell protection areas. As a result of a lot geospatial evaluation relies on integrating a number of datasets, spatial joins are sometimes a primary step in exploratory spatial evaluation, spatial modeling, and location-based decision-making.

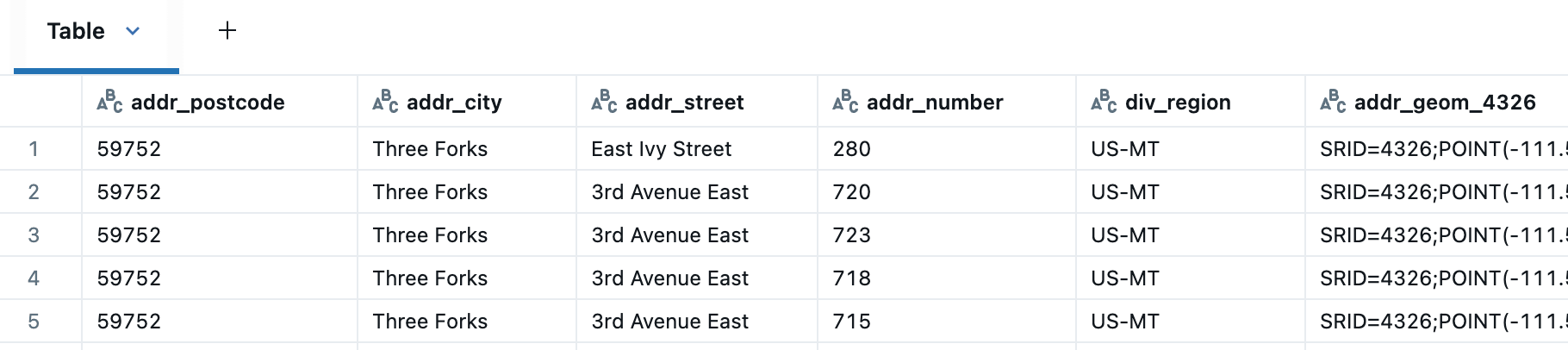

Subsequent, we are going to be a part of the deal with and division tables utilizing a spatial be a part of. Anybody who has labored with deal with knowledge sources is aware of that addresses will be messy knowledge (one widespread trigger is that completely different nations use completely different addressing programs). Additional, the deal with desk doesn’t embrace a full administrative hierarchy (i.e., no county info for US addresses). So we are going to use the division desk to validate the town info and enrich it by including county-equivalent info.

This knowledge validation and enrichment course of can be completely different to resolve with no spatial be a part of. To do that, we have to discover the deal with contained in the division. We’ll use ST_Contains to carry out a point-in-polygon spatial be a part of, letting Databricks deal with the internals of the operation, no do-it-yourself spatial indexing required.

Now we are able to extra readily standardize to the right metropolis, state, county, and nation, e.g. change lacking cities in addresses utilizing these supplied within the divisions desk.

After validating the addresses, we adopted an analogous method to hitch the addresses on buildings utilizing ST_Intersects to complement the Buildings desk with deal with info. For the US, this spatial be a part of matched 44M addresses to buildings, with 55M buildings remaining unmatched. Within the subsequent instance, let’s see how we are able to use proximity to probably determine buildings that didn’t match to an deal with.

3. Reworking knowledge to particular spatial reference programs

Geospatial datasets are sometimes created in several coordinate reference programs (CRS), corresponding to latitude–longitude (WGS84) or projected programs like UTM, relying on their supply and goal. Whereas every CRS defines how the earth’s curved floor is represented on a flat map, utilizing datasets with mismatched projections may cause options to misalign, distort distances, or produce incorrect spatial joins and measurements. A retailer positioned in a flood zone won’t match in a spatial be a part of if utilizing completely different coordinate programs. For correct evaluation—whether or not calculating areas, becoming a member of layers, or visualizing spatial relationships—it’s important to make sure all datasets are reworked into the identical projection in order that they share a constant spatial reference.

To determine addresses inside proximity of the remaining 55M unmatched buildings within the US, let’s undertaking our WGS84 GEOMETRY knowledge to Conus Albers (EPSG:5070) for North America, which supplies us models in meters. That is completed with the ST_Transform perform.

Let’s apply ST_DWithin between our unmatched U.S. buildings and addresses, utilizing a distance inside worth of simply 2 meters.

The gap inside worth will be elevated as wanted to assemble a set of potential deal with matches; additionally, a Recursive CTE will be helpful to iterate over a number of distances. For this instance, a filter polygon permits us to simply isolate our search to the neighborhood of Saint Petersburg, Florida. The polygon is initially ready from WKT utilizing ST_GeomFromWKT, then reworked into SRID 5070 to match the deal with and constructing knowledge.

To arrange datasets for the recursive CTE, we apply a spatial filter over our knowledge by intersecting buildings and addresses with the search polygon, displaying buildings under (addresses are dealt with equally).

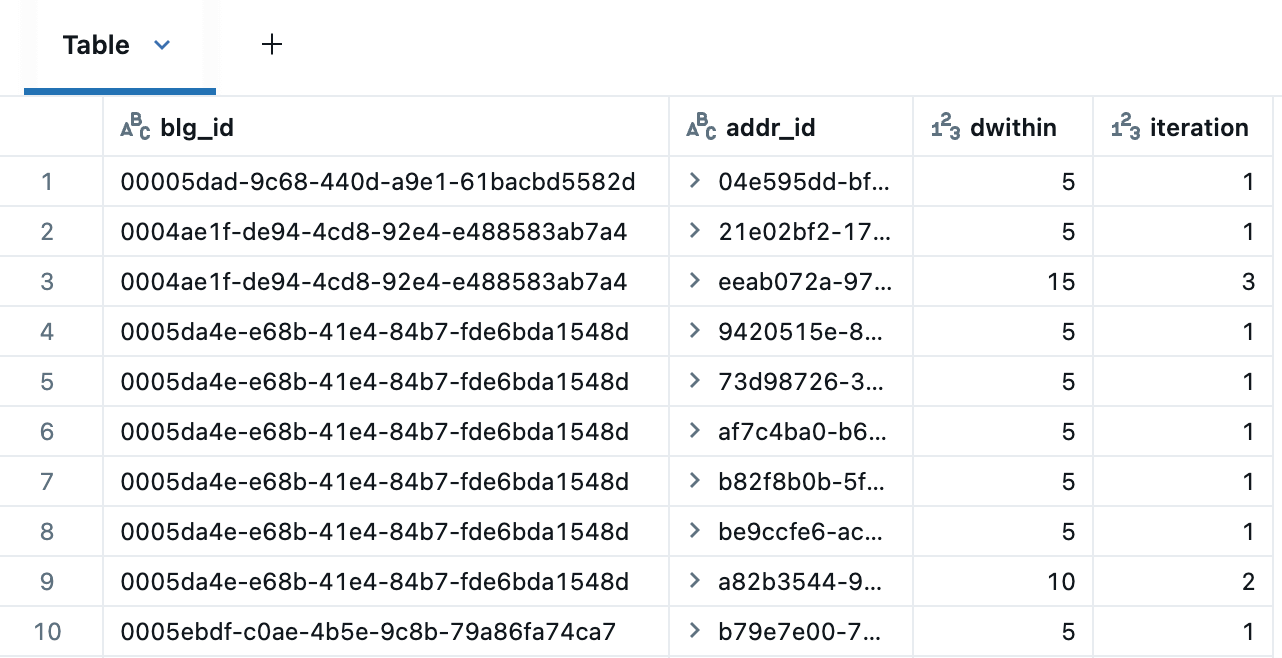

The recursive CTE under iterates over buildings to determine deal with candidates inside 5, 10, and 15 meters. The end result desk removes duplicate addresses over successive distances utilizing the next window expression: QUALIFY RANK() OVER (PARTITION BY blg_id,addr_id ORDER BY dwithin) = 1.

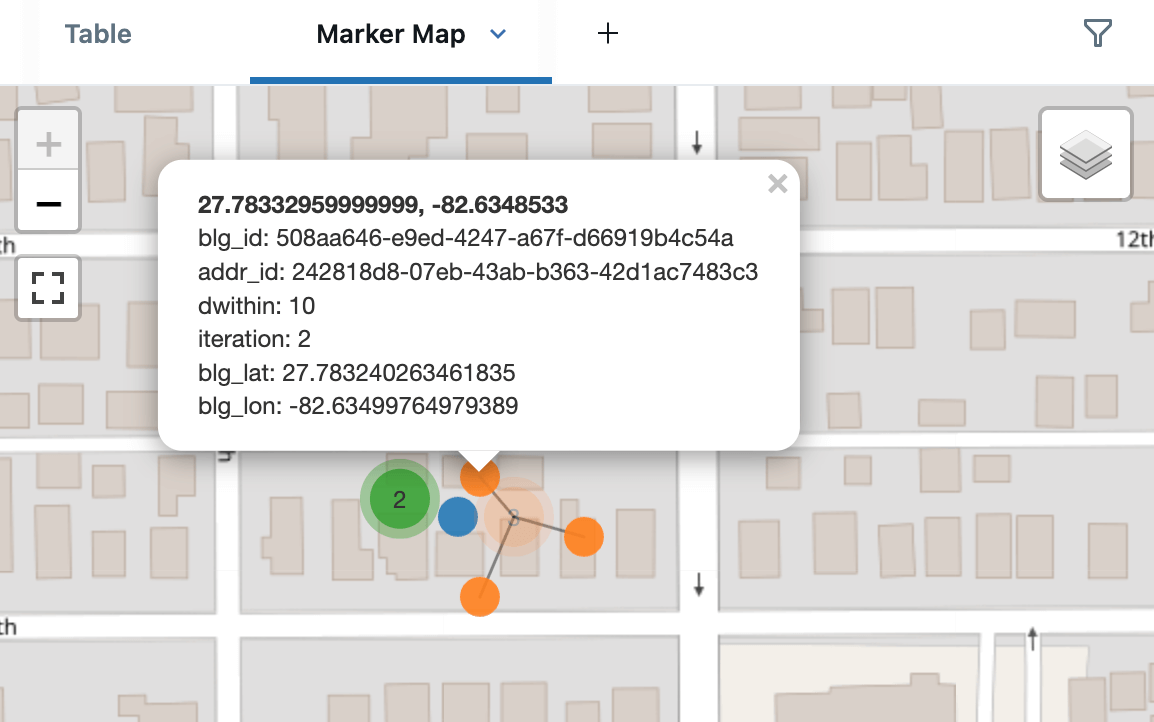

Listed here are the deal with candidates round one of many buildings, displaying matches at 5m (blue), 10m (orange) and 15m (inexperienced). That is rendered utilizing Databricks’ built-in Marker Maps utilizing clustering mode, which can fan out shut factors for simpler viewing. When making a Dashboard, we’d even have used AI/BI Level Maps, which assist cross-filtering and drill-through.

There are quite a few alternate makes use of of Recursive CTEs for spatial processing, e.g., implementing Prim’s algorithm for constructing a minimal spanning tree of your supply factors.

4. Measuring distances between areas

Proximity is a core idea in spatial evaluation as a result of distance typically determines the energy or relevance of relationships between areas. Whether or not figuring out the closest hospital, analyzing competitors between shops, or mapping commuter patterns, understanding how shut options are to at least one one other supplies crucial context.

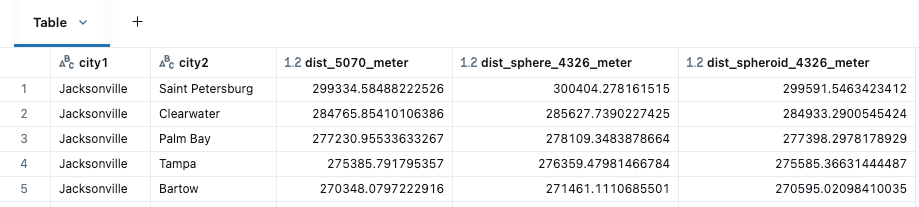

Persevering with with our instance dataset, we carried out the identical Conus Albers remodel operation on our cities in Florida to measure their distances. We’re measuring from the geometric middle of the cities, generated utilizing the perform: ST_Centroid.

When calculating the space between two GEOMETRIES, there are a number of completely different features to contemplate:

- ST_Distance – Returns the Cartesian distance within the models of the supplied GEOMETRIES, calculating the straight-line path primarily based on their x and y coordinates, as if the Earth had been flat.

- ST_DistanceSphere – Returns the spherical distance (all the time in meters) between two level GEOMETRIES, measured on the imply radius of the WGS84 ellipsoid of the Earth, with coordinate factors assumed to have models of levels, e.g., SRID 4326 can be legitimate however not SRID 5070.

- ST_DistanceSpheroid – Returns the geodesic distance (all the time in meters) between two level GEOMETRIES on the WGS84 ellipsoid of the Earth; additionally, with coordinate factors assumed to have models of levels. Once more, SRID 4326, or another SRID with models in levels, can be legitimate inputs for this instance.

The varied distance calculations are utilized between city1 and city2 with ST_Distance used for the GEOMETRIES in 5070, then ST_DistanceSphere and ST_DistanceSpheroid for the GEOMETRIES in 4326.

Among the many distance features, we might anticipate ST_DistanceSpheriod (measurements primarily based on Earth’s ellipsoidal form) to be probably the most correct on this occasion, adopted by ST_DistanceSphere (measurements assume Earth is an ideal sphere). Be aware that ST_Distance is most helpful when engaged on projected coordinate reference programs or when the Earth’s curvature can in any other case be ignored. Despite the fact that SRID 5070 is in meters, we are able to see the results of Cartesian calculations over bigger distances. ST_Distance wouldn’t typically be an acceptable selection for SRID 4326, provided that the space lined by one diploma of longitude modifications drastically as you progress from the equator towards the poles, e.g., 1 diploma of longitude differs by as much as 6KM simply inside the state of Florida.

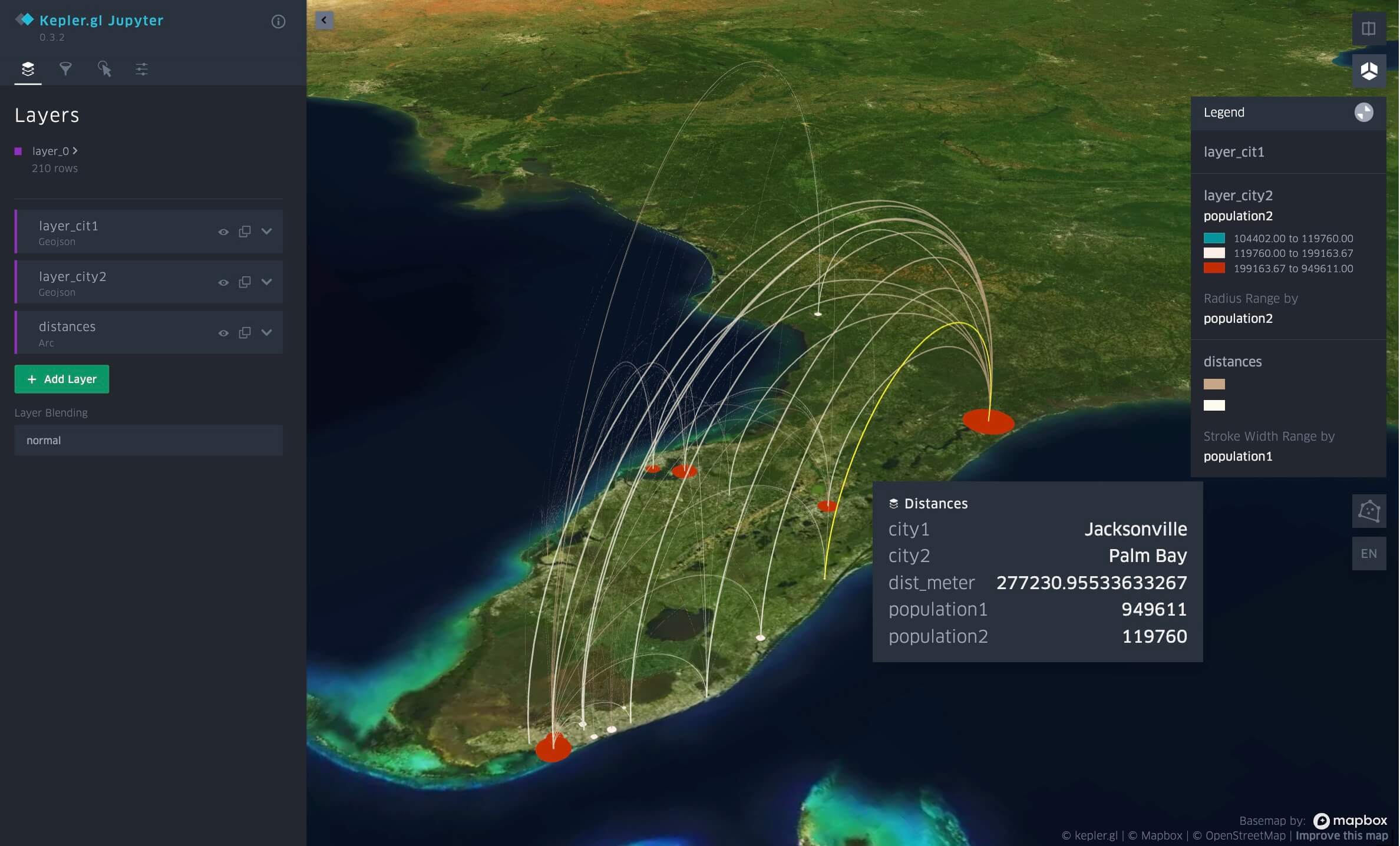

Proximity by distance between Florida cities, visualized in Databricks Pocket book with kepler.gl.

Spatial SQL contains 80+ features, permitting clients to carry out widespread spatial knowledge operations with simplicity and scale. Powered by Spatial SQL, clients are actually beginning to shift their method to managing and integrating with GIS programs:

“Spatial knowledge is on the core of all the things we do at OSPRI, be it Livestock Traceability, Illness Eradication, or Pest Administration. Databricks Spatial SQL permits us to totally combine Databricks with all our work. These developments permit us to shift massive desktop-based spatial modelling duties to a platform the place they’re nearer to the information and will be run in parallel, at velocity. Weeks of iterations throughout operational boundaries will be comfortably run inside a day or two, lowering our time to resolution. These new features additionally permit us to make Databricks the combination layer between our transactional programs and our GIS platform, guaranteeing it may be knowledgeable by knowledge throughout the organisation with out compromise.” – Campbell Fleury, Supervisor, Knowledge and Info Merchandise, OSPRI New Zealand

What’s subsequent

There may be a lot you are able to do with Spatial SQL in Databricks, with extra to return, together with new expressions and quicker spatial joins. If you need to share what extra ST expressions you require, please fill out this brief survey.