A ruling last week in Australia makes using facial recognition to fight fraud nearly impossible and is the latest example of global regulators' growing disapproval of biometric technology in retail environments.

The Office of the Australian Information Commissioner (OAIC) determined that Kmart Australia Limited had violated the country's Privacy Act 1988 when it used facial recognition to prevent return fraud and theft.

Kmart stores in Australia used facial recognition to catch fraudsters. Image: Wesfarmers.

Kmart and Bunnings

At issue was a Kmart pilot program that placed facial recognition technology (FRT) in 28 of the company's retail locations from June 2020 through July 2022.

The company created a face print, if you will, of every shopper entering one of the pilot stores. When a customer returned an item, Kmart's system compared that person's face print to a list of known thieves and fraudsters.
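The matching step described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Kmart's actual system: it assumes each face print is a numeric vector (an "embedding") and that the system declares a match when the cosine similarity between a shopper's face print and a stored one meets a threshold. The names `WATCHLIST` and `match_against_watchlist`, the toy three-number vectors, and the 0.95 threshold are all hypothetical.

```python
import math

# Hypothetical watchlist mapping case IDs to stored face prints.
# Real systems use vectors with hundreds of dimensions produced by a
# trained neural network; these 3-number vectors are toy values.
WATCHLIST = {
    "case-001": [0.9, 0.1, 0.4],
    "case-002": [0.2, 0.8, 0.5],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_against_watchlist(face_print, watchlist, threshold=0.95):
    """Return the ID of the most similar stored face print if its
    similarity meets the threshold; otherwise return None."""
    best_id, best_score = None, -1.0
    for person_id, stored in watchlist.items():
        score = cosine_similarity(face_print, stored)
        if score > best_score:
            best_id, best_score = person_id, score
    return best_id if best_score >= threshold else None


# A shopper whose face print closely resembles case-001 (similarity ~0.9996).
print(match_against_watchlist([0.88, 0.12, 0.41], WATCHLIST))  # case-001

# A shopper who resembles no one closely (best similarity ~0.704).
print(match_against_watchlist([0.1, 0.2, 0.9], WATCHLIST))  # None

# The same shopper is flagged once the threshold is loosened.
print(match_against_watchlist([0.1, 0.2, 0.9], WATCHLIST, threshold=0.7))  # case-002
```

The threshold is the central design choice in a sketch like this: lowering it flags more genuine offenders but also more innocent look-alikes, which is the false-positive problem regulators have raised about retail FRT.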

Kmart argued that the technology aimed to thwart return fraud and protect its employees, whom thieves had frequently threatened. Biometric data, however, is classed as "sensitive information" under Australia's Privacy Act and receives stronger protection.

The case was similar to a November 2024 OAIC determination against Bunnings, a home-improvement retailer, for using FRT to identify criminals. Australian conglomerate Wesfarmers Limited owns Kmart Australia, Bunnings, and other retail chains, including Target Australia.

FRT Challenges

The OAIC stated that its finding is not a ban on FRT, but its conditions make using the technology difficult, if not impossible.

For example, an Australian retailer would need consent before employing FRT, and thieves attempting return fraud would almost certainly refuse.

Kmart had disclosed FRT in a sign at the entrance of each pilot store, which read, "This store has 24-hour CCTV coverage, which includes facial recognition technology." But according to the OAIC, this notice did not establish consent.

Asking would-be criminals for permission to use facial recognition has the same effect as banning it, given the current state of the technology.

GDPR

The OAIC's Kmart decision regarding explicit consent aligns with other privacy regulations and rulings.

For example, many privacy experts note that Article 9 of the European Union's General Data Protection Regulation, which covers the processing of special categories of personal data, requires explicit consent for the use of FRT.

FTC vs. Rite Aid

In the United States, there have also been rulings against FRT and the use of biometric data.

In a 2023 order, the U.S. Federal Trade Commission prohibited Rite Aid Pharmacy from using FRT and other automated biometric systems for five years.

The agency argued that Rite Aid had not taken adequate measures to prevent false positives and algorithmic racial profiling.

Illinois BIPA

The Illinois Biometric Information Privacy Act (BIPA) was enacted in 2008 and is, perhaps, the most stringent biometric privacy law in the country.

BIPA requires businesses to provide written notice of the use of biometric data and to obtain shoppers' written consent. The law allows individuals to sue for violations and has resulted in many cases against retailers, such as:

- A 2022 lawsuit alleges that Walmart's in-store "cameras and advanced video surveillance systems" secretly collect shoppers' biometric data without notice or consent.

- A March 2024 class-action lawsuit against Target alleges the retailer used FRT to identify shoplifters without proper consent.

- A class-action lawsuit filed in August 2025 alleges that Home Depot is illegally using FRT at its self-checkout kiosks.

M•A•C Cosmetics

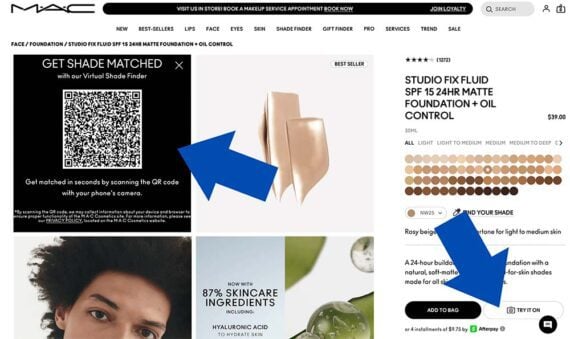

From the retail and ecommerce perspective, the most concerning BIPA lawsuit may be Fiza Javid v. M.A.C. Cosmetics Inc. The class-action suit, filed in August 2025, is concerned not with crime fighting but with virtual try-on technology.

The complaint notes that M•A•C's website asks shoppers to upload a photo or enable live video so that it can detect a person's facial structure and skin tone. Plaintiff Fiza Javid asserts that the feature requires written consent under BIPA and that, without it, M•A•C is violating the Illinois law.

M•A•C Cosmetics offers tools for virtual try-on and skin tone identification.

M•A•C's virtual makeup try-on tools enhance the experience for shoppers and almost certainly increase ecommerce conversion rates.

The case has yet to be decided on its merits, but BIPA has already spawned other virtual try-on cases, including:

- Kukovec v. The Estée Lauder Companies, Inc. (2022).

- Theriot v. Louis Vuitton North America, Inc. (2022).

- Gielow v. Pandora Jewelry, LLC (2022).

- Shores v. Wella Operations US LLC (2022).

Engagement and Enforcement

AI-driven facial recognition and biometric technology are among the most promising developments in retail and ecommerce.

The technology has the potential to reduce fraud, deter theft, and support criminal prosecutions. A 2023 article in the International Security Journal estimated that facial biometrics could reduce retail shoplifting by 50% to 90%, depending on location and use.

Moreover, biometrics can improve online and in-store shopping with virtual try-on tools. Some retailers have reported a 35% increase in sales conversions when virtual shopping is available.

The question is how privacy regulations and rulings, such as last week's Kmart decision, will ultimately affect the technology's use.