Artificial intelligence (AI) has shifted from a bold experiment to a strategic necessity. Recent studies show that 88% of C-level decision-makers want to accelerate AI adoption in 2025. Yet only a fraction of AI initiatives deliver the expected results.

Traditional AI models demand massive, meticulously labeled datasets. For many organizations, gathering, cleaning, and annotating such volumes is prohibitively expensive, time-consuming, or even impossible because of data scarcity or privacy restrictions. These bottlenecks delay deployment and drive up costs.

This is where few-shot learning (FSL) offers a breakthrough. By enabling models to learn new tasks from only a handful of examples, FSL bypasses the heavy data requirements of traditional AI, turning what has long been a roadblock into an advantage.

In this article, ITRex AI consultants explain what few-shot learning is and how companies can use it to facilitate AI deployment and scaling.

What is few-shot learning, and why should you care?

The few-shot learning approach mirrors the way humans learn. People don’t need to see hundreds of examples to recognize something new; a few well-chosen instances often suffice.

Few-shot learning definition

So, what is few-shot learning?

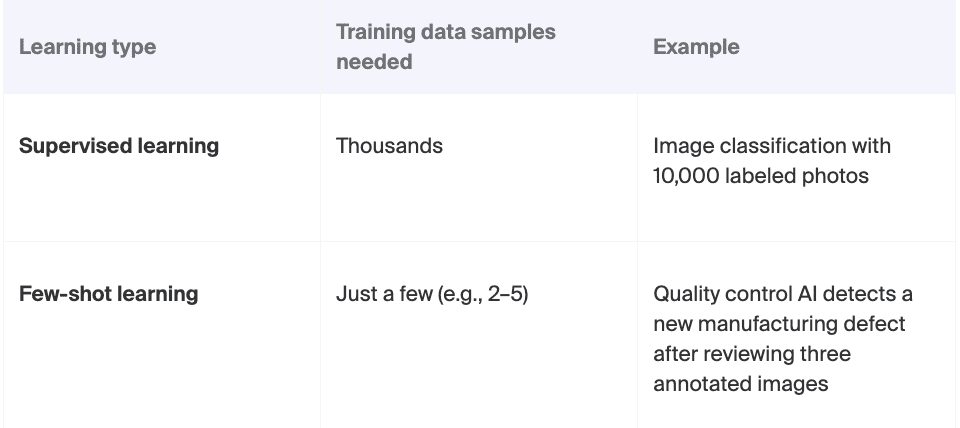

Few-shot learning, explained in simple terms, is a method in AI that enables models to learn new tasks or recognize new patterns from only a few examples, often as few as two to five. Unlike traditional machine learning, which requires thousands of labeled data points to perform well, few-shot learning significantly reduces the dependency on large, curated datasets.


Take the business analogy of rapid onboarding. A seasoned employee adapts quickly to a new role. You don’t need to send them through months of training. Just show them a few workflows, introduce the right context, and they begin delivering results. Few-shot learning applies the same principle to AI, allowing systems to take in limited guidance and still produce meaningful, accurate results.

What are the advantages of few-shot learning?

Few-shot learning does more than improve AI performance; it changes the economics of AI entirely. It’s a practical lever for leaders focused on speed, savings, and staying ahead. FSL will:

- Cut costs without reducing capabilities. Few-shot learning slashes the need for large, labeled datasets, whose creation is often one of the most expensive and time-consuming steps in AI projects. By minimizing data collection and manual annotation, companies redirect that budget toward innovation instead of infrastructure.

- Accelerate deployment and time to market. FSL allows teams to build and deploy models in days, not months. Instead of waiting for perfect datasets, AI developers show the model a few examples, and it gets to work. This means companies can roll out new AI-driven features, tools, or services quickly, exactly when the market demands it. For example, few-shot learning techniques reduced the time needed to train a generative AI model by 85%.

- Increase adaptability and generalization. Markets shift and data evolves. Few-shot learning allows businesses to keep up with these sudden changes. This learning approach doesn’t rely on constant retraining. It helps models adapt to new categories or unexpected inputs with minimal effort.

How does few-shot learning work?

Few-shot learning is implemented differently for classic AI and generative AI with large language models (LLMs).

Few-shot learning in classic AI

In classic AI, models are first trained on a broad range of tasks to build a general understanding of features. When introduced to a new task, they use just a few labeled examples (the support set) to adapt quickly without full retraining.

- Pre-training for general knowledge. The model first trains on a broad, diverse dataset, learning patterns, relationships, and features across many domains. This foundation equips it to recognize concepts and adapt without starting from scratch each time.

- Rapid task adaptation. When faced with a new task, the model receives a small set of labeled examples, the support set. The model relies on its prior training to generalize from this minimal data and make accurate predictions on new inputs, refining its ability with each iteration. For instance, if an AI has been trained on various animal images, FSL would allow it to quickly identify a new, rare species after seeing just a handful of its photos, without needing thousands of new examples.


Few-shot learning replaces the slow, data-heavy cycle of traditional AI training with an agile, resource-efficient approach. FSL for classic AI usually relies on meta-learning or metric-based techniques.

- Meta-learning, often called “learning to learn,” trains models to adapt rapidly to new tasks using only a few examples. Instead of optimizing for a single task, the model learns across many small tasks during training, developing strategies for quick adaptation.

- Metric-based approaches classify new inputs by measuring their similarity to a few labeled examples in the support set. Instead of retraining a complex model, these methods focus on learning a representation space where related items are close together and unrelated items are far apart. The model transforms inputs into embeddings (numerical vectors) and compares them using a similarity metric (e.g., cosine similarity, Euclidean distance).
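To make the metric-based idea concrete, here is a minimal Python sketch. It assumes the embeddings have already been produced by some pre-trained encoder (not shown), averages the support-set embeddings of each class into a "prototype," and assigns a new input to the class with the most similar prototype under cosine similarity. The function names, the defect labels, and the three-dimensional vectors are all hypothetical and chosen only for illustration.

```python
import math
from collections import defaultdict


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def class_prototypes(support_set):
    """Average the embeddings of each class into one prototype vector."""
    grouped = defaultdict(list)
    for embedding, label in support_set:
        grouped[label].append(embedding)
    return {
        label: [sum(dim) / len(vectors) for dim in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def classify(query_embedding, prototypes):
    """Return the label whose prototype is most similar to the query."""
    return max(
        prototypes,
        key=lambda label: cosine_similarity(query_embedding, prototypes[label]),
    )


# Hypothetical embeddings; a real system would obtain them from a pre-trained encoder.
support_set = [
    ([0.9, 0.1, 0.0], "scratch"),
    ([0.8, 0.2, 0.1], "scratch"),
    ([0.1, 0.9, 0.2], "dent"),
    ([0.0, 0.8, 0.3], "dent"),
]
prototypes = class_prototypes(support_set)
predicted = classify([0.85, 0.15, 0.05], prototypes)
```

Adding a new class in this sketch only requires embedding a few examples and computing one more prototype; the encoder itself is left untouched, which is why no retraining is needed.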

Few-shot learning in LLMs

In LLMs, few-shot learning usually takes the form of few-shot prompting. Instead of retraining, you guide the model’s behavior by including a few task-specific examples directly in the prompt.

For instance, if you want the model to generate product descriptions in a specific style, you include two to five example descriptions in the prompt along with the request for a new one. The model then mimics the style, tone, and format.
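The product-description case can be sketched in a few lines of Python. This is only an illustration of how such a prompt might be assembled: the `build_few_shot_prompt` helper, the "Product:" and "Description:" field labels, and the sample products are assumptions made for this example, and the call that sends the prompt to an LLM is deliberately left out because it depends on the provider.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble an instruction, worked examples, and a new request into one prompt."""
    parts = [instruction.strip(), ""]
    for product, description in examples:
        parts.append(f"Product: {product}")
        parts.append(f"Description: {description}")
        parts.append("")  # blank line between examples
    # The final block repeats the pattern but leaves the description open.
    parts.append(f"Product: {new_input}")
    parts.append("Description:")
    return "\n".join(parts)


# Hypothetical example descriptions that set the desired style.
examples = [
    ("Trail backpack", "Light on your shoulders, tough on the trail."),
    ("Steel water bottle", "Cold for a day, hot for half of one."),
]
prompt = build_few_shot_prompt(
    "Write a one-sentence product description in the style of the examples.",
    examples,
    "Folding camp chair",
)
print(prompt)
```

Ending the prompt with an open "Description:" field repeats the pattern of the examples, which nudges the model to continue in the same format.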

Few-shot vs. one-shot vs. zero-shot learning: key differences

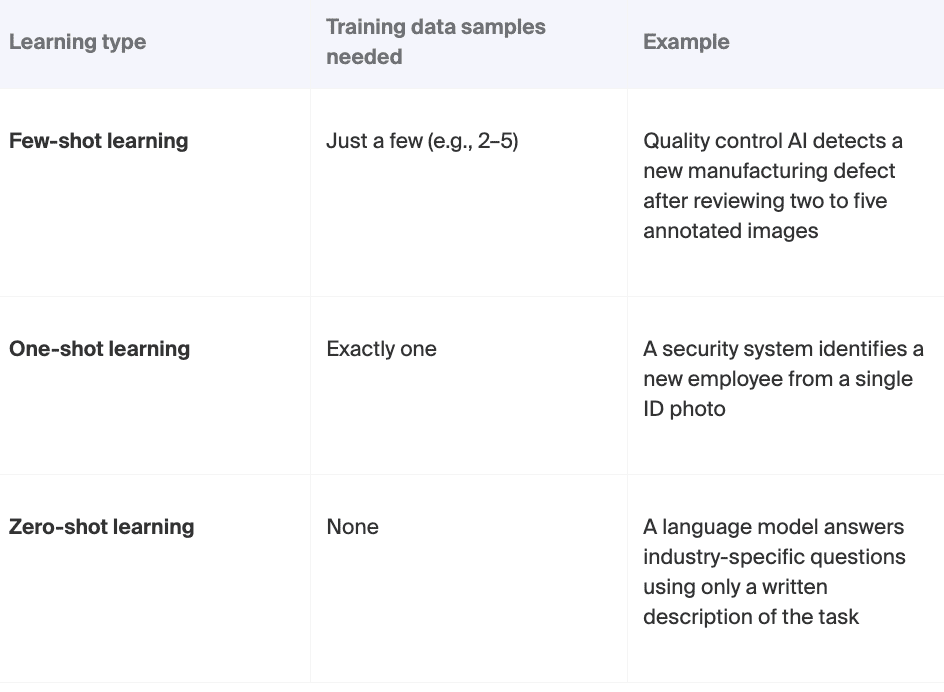

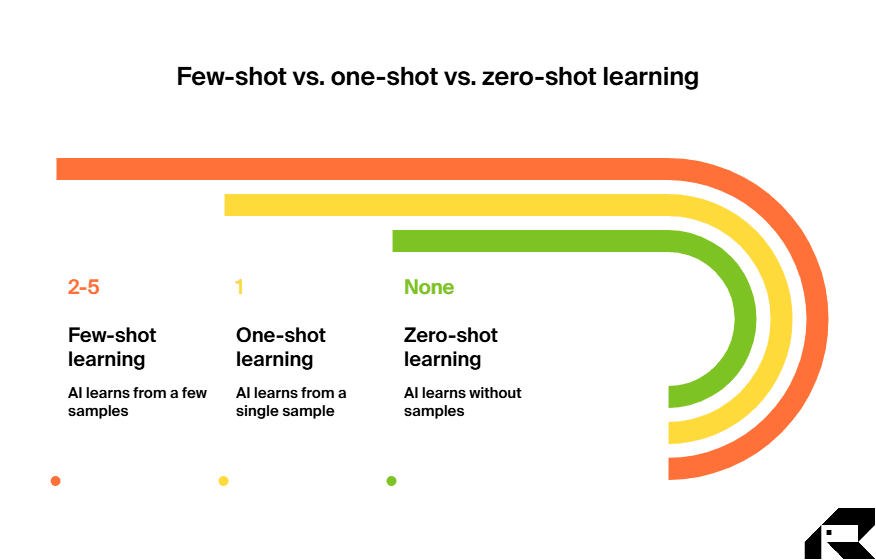

In addition to few-shot learning, companies can also use one-shot and zero-shot learning. Each offers a distinct way to deploy AI when data availability is limited. Understanding their differences is key to matching the right approach to your business needs.

- Few-shot learning. The model learns from a small set of labeled examples (typically 2-5). Ideal when you can provide some representative data for a new product, process, or category but want to avoid the time and cost of collecting thousands of samples.

- One-shot learning. The model learns from exactly one labeled example per class. This is well-suited for scenarios where categories change often or examples are hard to obtain.

- Zero-shot learning. The model handles a new task without any task-specific examples. It relies solely on its prior training and a description of the task. Zero-shot is valuable when there is no data available at all, yet rapid deployment is essential.
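Seen from the data side, the three setups differ only in how many labeled examples per class are handed to the model. The sketch below, a hypothetical helper rather than a standard API, builds a support set with a chosen number of "shots" per class: 0 yields an empty set (zero-shot), 1 yields one-shot, and a small number such as 3 yields few-shot. The file names and labels are invented for illustration.

```python
import random
from collections import defaultdict


def sample_support_set(labeled_examples, shots_per_class, seed=0):
    """Pick `shots_per_class` labeled examples for every class.

    0 shots gives a zero-shot setup (empty support set), 1 gives one-shot,
    and a small number such as 2-5 gives few-shot.
    """
    rng = random.Random(seed)  # fixed seed keeps the selection reproducible
    by_class = defaultdict(list)
    for item, label in labeled_examples:
        by_class[label].append(item)

    support = []
    for label in sorted(by_class):
        items = by_class[label]
        if shots_per_class > len(items):
            raise ValueError(f"class {label!r} has only {len(items)} examples")
        for item in rng.sample(items, shots_per_class):
            support.append((item, label))
    return support


# Hypothetical pool of labeled documents.
pool = [(f"invoice_{i}.pdf", "invoice") for i in range(4)]
pool += [(f"payslip_{i}.pdf", "payslip") for i in range(4)]

zero_shot = sample_support_set(pool, 0)  # no examples, task description only
one_shot = sample_support_set(pool, 1)   # one example per class
few_shot = sample_support_set(pool, 3)   # three examples per class
```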


When to avoid few-shot learning?

Few-shot learning offers speed and efficiency, but it is not always the optimal choice. In some cases, fine-tuning or traditional supervised learning will deliver more reliable results. These cases include:

- When precision is critical. If the task demands near-perfect accuracy, such as in critical medical diagnostics or fraud detection, relying on only a few examples may introduce unacceptable error rates. Fine-tuning with a larger, task-specific dataset provides greater control and consistency.

- When data is readily available and affordable. If your organization can easily collect and label thousands of examples, traditional supervised learning may yield stronger performance, especially for complex or nuanced tasks where broad variability must be captured.

- When the task is highly domain-specific. Few-shot models excel at generalization, but niche domains with unique terminology, formats, or patterns often benefit from targeted fine-tuning. For instance, a legal AI assistant working with patent filings must interpret highly specialized vocabulary and document structures. Fine-tuning on a large corpus of patent documents will deliver better results than relying on a few illustrative examples.

- When the output must be stable over time. Few-shot learning thrives in dynamic environments, but if your system is stable and unlikely to change, like a barcode recognition system, investing in a fully trained, specialized model is a better choice.

Real-world examples: few-shot learning in action

Let’s explore the different use cases of few-shot learning in enterprise AI and business applications.

Few-shot learning in manufacturing

Few-shot learning accelerates manufacturing quality control by enabling AI models to detect new product variations or defects from just a handful of examples. Also, when factories produce highly customized or limited-edition products, few-shot learning can quickly adapt AI systems for sorting, labeling, or assembly tasks with minimal retraining, which is ideal for short production runs or rapid design changes.

Few-shot learning example in manufacturing

Philips Consumer Lifestyle BV has applied few-shot learning to transform quality control in manufacturing, focusing on defect detection with minimal labeled data. Instead of collecting thousands of annotated examples, researchers train models on just one to five samples per defect type. They enhance accuracy by combining these few labeled images with anomaly maps generated from unlabeled data, creating a hybrid method that strengthens the model’s ability to spot defective components.

This approach delivers performance comparable to traditional supervised models while drastically reducing the time, cost, and effort of dataset creation. It allows Philips to adapt its detection systems rapidly to new defect types without overhauling entire pipelines.

Few-shot learning in education

This learning technique allows educational AI models to adapt to new subjects, teaching styles, and student needs without the heavy data requirements of traditional AI models. Few-shot learning can personalize learning paths based on just a handful of examples, improving content relevance and engagement while reducing the time needed to create customized materials. Integrated into real-time learning platforms, FSL can quickly incorporate new topics or assessment types.

Beyond personalized instruction, educational institutions use FSL to streamline administrative processes and enhance adaptive testing, boosting efficiency across academic and operational functions.

Few-shot learning example from the ITRex portfolio

ITRex built a Gen AI-powered sales training platform to automate onboarding. This solution transforms internal documents, including presentation slides, PDFs, and audio, into personalized lessons and quizzes.

Our generative AI developers used an LLM that studies the available company material and factors in a new hire’s experience, qualifications, and learning preferences to generate a customized study plan. We applied few-shot learning to enable the model to produce customized courses.

Our team provided the LLM with a small set of sample course designs for different employee profiles. For example, one template showed how to structure training for a novice sales representative who prefers a gamified learning experience, while another demonstrated a plan for an experienced hire opting for a traditional format.

With few-shot learning, we reduced the training cycle from three weeks with classic fine-tuning to just a few hours.

Few-shot learning in finance and banking

Few-shot learning enables rapid adaptation to new fraud patterns without lengthy retraining, improving detection accuracy and reducing false positives that disrupt customers and drive up costs. Integrated into real-time systems, it can quickly add new fraud prototypes while keeping transaction scoring fast, especially when combined with rule-based checks for stability.

Beyond fraud prevention, banks also use few-shot learning to streamline document processing, automate compliance checks, and handle other administrative tasks, boosting efficiency across operations.

Few-shot learning example in finance

The Indian subsidiary of Hitachi deployed few-shot learning to train its document processing models on over 50 different bank statement formats. These models currently process over 36,000 bank statements per month and maintain a 99% accuracy level.

Similarly, Grid Finance used few-shot learning to teach its models to extract key income data from various formats of bank statements and payslips, enabling consistent and accurate results across varying document types.

Addressing executive concerns: mitigating risks and ensuring ROI

While few-shot learning offers speed, efficiency, and flexibility, it also brings specific challenges that can affect performance and return on investment. Understanding these risks and addressing them with targeted strategies is essential for translating FSL’s potential into measurable, sustainable business value.

Challenges and limitations of few-shot learning include:

- Data quality as a strategic priority. Few-shot learning reduces the volume of training data required, but it increases the importance of selecting high-quality, representative examples. A small set of poor inputs can lead to weak results. This shifts a company’s data strategy from collecting everything to curating only the most relevant samples. It means investing in disciplined data governance, rigorous quality control, and careful selection of the critical few examples that will shape model performance and reduce the risk of overfitting.

- Ethical AI and bias mitigation. Few-shot learning delivers speed and efficiency, but it can also carry forward biases embedded in the large pre-trained models it depends on. AI engineers should treat responsible AI governance as a priority, implementing bias testing, diversifying training data where possible, and ensuring transparency in decision-making. This safeguards against misuse and ensures FSL’s benefits are realized in a fair, explainable, and accountable way.

- Optimizing the “few” examples. In few-shot learning, success hinges on choosing the right examples. Take too few, and the model underfits, learning too little to generalize. Poorly chosen or noisy examples can cause overfitting and degrade performance. So, treat selection as a strategic step. Use domain experts to curate representative samples and validate them through quick experiments. Pair human insight with automated data analysis to identify examples that truly capture the diversity and nuances of the task.

- Sensitivity to prompt quality (few-shot learning for LLMs). In LLM-based few-shot learning, the prompt determines the outcome. Well-crafted prompts guide the model to produce relevant, accurate responses. Poorly designed ones lead to inconsistency or errors. Treat prompt creation as a critical skill. Involve domain experts to ensure prompts reflect real business needs, and test them iteratively to refine wording, structure, and context.

- Managing computational demands. Few-shot learning reduces data preparation costs, but it still relies on large, pre-trained models that can be computationally intensive, especially when scaled across the enterprise. To keep projects efficient, plan early for the necessary infrastructure, from high-performance GPUs to distributed processing frameworks, and monitor resource usage closely. Optimize model size and training pipelines to balance performance with cost, and explore techniques like model distillation or parameter-efficient fine-tuning to reduce compute load without sacrificing accuracy.
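As one possible form of the "automated data analysis" mentioned under optimizing the “few” examples, the sketch below uses greedy farthest-point selection to shortlist candidates that are spread out in embedding space, which domain experts could then review. This is an assumption about how such a step might look, not a method described in the examples above; the function names and the two-dimensional vectors are hypothetical.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def select_diverse(embeddings, k):
    """Greedy farthest-point selection: indices of k mutually distant embeddings."""
    if not 0 < k <= len(embeddings):
        raise ValueError("k must be between 1 and the number of candidates")
    chosen = [0]  # start from the first candidate; any starting point works
    # Distance from each candidate to its nearest already-chosen example.
    nearest = [euclidean(e, embeddings[0]) for e in embeddings]
    while len(chosen) < k:
        remaining = [i for i in range(len(embeddings)) if i not in chosen]
        # Pick the candidate that is farthest from everything chosen so far.
        next_index = max(remaining, key=lambda i: nearest[i])
        chosen.append(next_index)
        for i, e in enumerate(embeddings):
            nearest[i] = min(nearest[i], euclidean(e, embeddings[next_index]))
    return chosen


# Hypothetical candidate embeddings forming three loose groups.
candidates = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [0.0, 5.0]]
shortlist = select_diverse(candidates, 3)
```

In this toy data the shortlist contains one candidate from each group, so near-duplicates are not all spent on the same region. Diversity alone does not guarantee quality, so a human review of the shortlist still matters.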

Few-shot learning: AI’s path to agile intelligence

Few-shot learning offers a smarter way for businesses to use AI, especially when data is scarce or models need to adapt quickly. It’s not a magic solution but a practical tool that can improve efficiency, reduce costs, and help teams respond faster to new challenges. For leaders looking to stay ahead, understanding where and how to apply FSL can make a real difference.

Implementing AI effectively requires the right expertise. At ITRex, we’ve worked with companies across industries, such as healthcare, finance, and manufacturing, to build AI solutions that work, without unnecessary complexity. If you’re exploring how few-shot learning could fit into your strategy, we’d be glad to share what we’ve learned.

Sometimes the best next step is just a conversation.

FAQs

How is few-shot learning different from zero-shot learning?

Few-shot learning adapts a model to a new task using a handful of labeled examples, allowing it to generalize based on both prior training and these task-specific samples. Zero-shot learning, in contrast, gives the model no examples at all, only a description of the task, and relies entirely on its pre-existing knowledge. Few-shot usually delivers higher accuracy when even a small amount of relevant data is available, while zero-shot is useful when no examples exist.

How does few-shot learning improve large language models?

In LLMs, few-shot learning takes the form of few-shot prompting. By embedding a few carefully chosen input-output examples in the prompt, you guide the model’s reasoning, format, and tone for the task at hand. This improves consistency, reduces ambiguity, and helps the LLM align more closely with business requirements without retraining or fine-tuning.

How do you create effective few-shot learning prompts?

Effective prompts are concise, relevant, and representative of the task. Include a small set of high-quality examples that cover the range of expected inputs and outputs. Keep formatting consistent, use clear instructions, and test variations to find the structure that yields the most accurate results. In high-stakes business contexts, involve domain experts to ensure examples reflect real-world use cases and terminology.

Why is few-shot learning important for adapting AI models?

Few-shot learning allows models to adjust to new categories, formats, or patterns quickly, often in hours instead of weeks. This agility is crucial for responding to evolving markets, changing customer needs, or emerging risks without the cost and delay of full-scale retraining. It allows organizations to extend AI capabilities into new areas while maintaining operational momentum.

How does few-shot learning reduce the need for large training datasets?

FSL leverages the general knowledge a model has acquired during pre-training and uses a few task-specific examples to bridge the gap to the new task. This removes the need for massive, fully labeled datasets, cutting data collection, cleaning, and annotation costs. The result is faster deployment, lower resource consumption, and a more favorable ROI on AI initiatives.

Originally published at https://itrexgroup.com on August 26, 2025.
