Picture this: it’s late, and your deadline is inching closer. You’ve been staring at a blank page for hours. Finally, you turn to an AI chatbot for help, and on cue, it generates a beautifully crafted response… that’s completely wrong. We all know this feeling. This moment of digital betrayal, courtesy of artificial intelligence (specifically LLMs), is called a “hallucination.”

But what if these aren’t just random glitches? What if they’re a feature, not a bug? What if the very way we train and evaluate our most advanced AI models is actively teaching them to mislead us?

That is the argument of a recent research paper, “Why Language Models Hallucinate,” by Adam Tauman Kalai and his colleagues at OpenAI and Georgia Tech, and it isn’t just another technical analysis. It’s a wake-up call for the entire AI community, from developers to end users. The authors argue that hallucinations aren’t some mysterious occurrence; they’re the natural, statistical consequence of a flawed process. And to fix them, we can’t just rework the code; we have to change the way we work with LLMs.

What causes LLM hallucinations?

To understand why LLMs hallucinate, we need to go back to where it all begins: the LLM’s “schooling.” The paper makes a powerful analogy: think of a slightly confused student taking a hard exam. When faced with a question they can’t answer, they may guess, or even bluff, to get a better score. They aren’t doing this to deceive; they’re doing it because the exam’s grading system rewards it.

This is exactly what happens with our LLMs. The problem isn’t just one thing; it’s a two-stage process that inevitably leads to hallucinations. Let’s look at both stages:

Step 1: Pre-Training

The first stage is pre-training, where a model learns the general patterns and distributions of language from massive text corpora. The most interesting insight from the paper here is its connection of this generative process to a much simpler concept: binary classification.

Imagine a simple, two-question problem for an AI:

- Is this a valid, factual statement? (Yes/No)

- Is this an incorrect, hallucinated statement? (Yes/No)

The researchers show that a model’s ability to generate valid statements is directly tied to its ability to solve this simple “Is-It-Valid” (IIV) classification problem.

In fact, the generative error rate (which determines how often the model hallucinates) is roughly at least double the misclassification rate on this binary test.
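As a rough sketch of that relationship, write $\mathrm{err}_{\mathrm{gen}}$ for the generative error rate and $\mathrm{err}_{\mathrm{IIV}}$ for the misclassification rate on the IIV test. Both symbols are shorthand chosen here for illustration, and any smaller correction terms in the paper’s exact statement are left out:

$$
\mathrm{err}_{\mathrm{gen}} \gtrsim 2 \cdot \mathrm{err}_{\mathrm{IIV}}
$$

For instance, under this approximation a model that misjudges validity on 5% of statements would be expected to err in roughly 10% or more of its generations.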

Now this is a really powerful result! It means we can stop treating hallucinations as some foreign or new phenomenon. Instead, we should see them as the same old, well-understood, and more or less expected “errors” that have plagued machine learning from the very beginning.

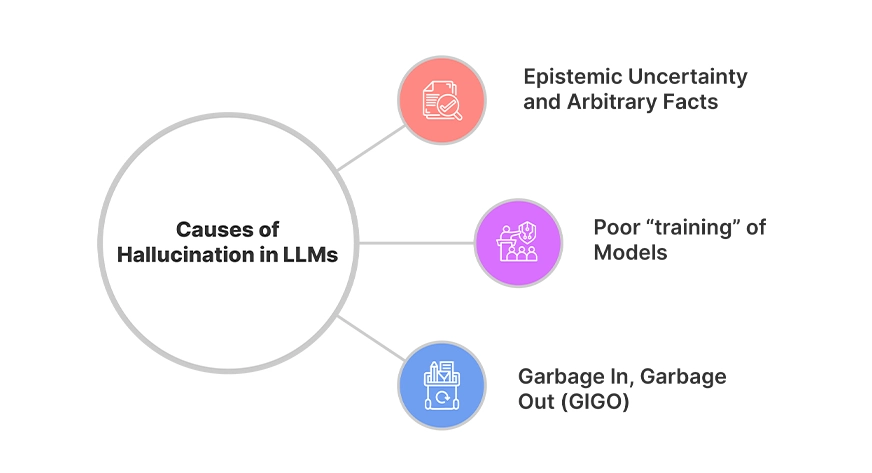

According to the paper, three main factors contribute to this:

- Epistemic Uncertainty and Arbitrary Facts: Some facts have no discernible pattern. For example, a person’s birthday is an arbitrary fact. If the AI sees a particular birthday only once in its massive training data, it has no pattern from which to “learn” that fact. So, when asked for it again, it is forced to guess based on what is statistically plausible. The paper states that if 20% of birthday facts appear only once, you can expect the model to hallucinate on at least 20% of birthday facts. This is pure statistical pressure, not a failure of logic.

- Poor Models: Sometimes, the model simply hasn’t learned the “rule” for a task. During training, a model is expected to pick up the underlying logic on its own. The paper gives an example of an LLM struggling to count the number of “D”s in the word “DEEPSEEK,” giving various incorrect answers. This isn’t a lack of data, but a failure of the model to properly apply the underlying logic.

- Garbage In, Garbage Out (GIGO): Training data, even when cleaned and prepared carefully, is not perfect. It contains errors, misinformation, and biases. The model will, naturally, replicate these. While post-training can reduce some of this, such as conspiracy theories, it doesn’t eliminate the fundamental problem.
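The birthday example in the first factor above can be made concrete with a small sketch. It assumes a simplified reading in which the quantity of interest is the share of distinct facts that show up exactly once in the training data; the function name `singleton_rate` and the toy corpus are hypothetical and only serve to illustrate the counting.

```python
from collections import Counter


def singleton_rate(mentions):
    """Return the fraction of distinct facts that occur exactly once."""
    counts = Counter(mentions)
    if not counts:
        return 0.0
    singletons = sum(1 for n in counts.values() if n == 1)
    return singletons / len(counts)


# Hypothetical corpus: each string stands for one mention of a birthday fact.
corpus_mentions = (
    ["person_a"] * 3
    + ["person_b"] * 2
    + ["person_c"] * 2
    + ["person_d"] * 4
    + ["person_e"]  # mentioned only once, so there is no pattern to learn from
)

rate = singleton_rate(corpus_mentions)
print(f"Singleton rate: {rate:.0%}")  # prints "Singleton rate: 20%"
```

In this toy corpus one of five facts is a singleton, so by the article’s reading of the paper one would expect hallucinations on at least about 20% of such facts.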

The conclusion from this first stage is stark: even with pristine data, the statistical nature of pre-training makes some degree of hallucination unavoidable for a model that is trying to be a general-purpose language generator, like the models behind ChatGPT, Gemini, and Mistral.

Step 2: Post-Training

So, if pre-training creates a tendency to err, shouldn’t modern post-training techniques like Reinforcement Learning from Human Feedback (RLHF) be able to fix it? The paper offers a rather surprising answer: these techniques can’t fully fix the problem, because the very systems used to evaluate LLMs actually reward the wrong behavior!

Remember the student analogy from above? The student may know that answering “I don’t know” is the honest response, but if the exam gives zero points for a blank answer and one point for a correct one (even if it’s a lucky guess), the choice is clear: the best strategy is to always guess, because a guess at least has a chance of scoring.

According to the paper, this is a “socio-technical” problem that affects all LLMs. Most of the dominant benchmarks that models are judged on, the ones that fuel the public leaderboards and drive progress, use a simple binary scoring system. The outcome is black or white: a response is either correct or it isn’t. An “I don’t know” (IDK) response, or any other expression of uncertainty, is scored as zero.

To understand this, take the following example from the research paper. Suppose there are two models: Model A and Model B.

- Model A is a “good” model that knows when it’s uncertain and responds with “IDK.” It never hallucinates.

- Model B is the same as Model A, but it always guesses when it’s unsure, never admitting uncertainty.

Now, under a binary scoring system, Model B will outperform Model A, because a guess sometimes earns a point while “IDK” never does. This creates an “epidemic” of penalizing uncertainty, forcing models to behave like overconfident students on a high-stakes exam. The result? Hallucinations persist, even in the most advanced language models. In essence, the system we built to test these models is actively teaching them to lie.
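The Model A versus Model B comparison can be worked through numerically. The sketch below is illustrative only: the share of questions the model actually knows (70%) and the chance that a blind guess lands (25%) are made-up numbers, and the function names are hypothetical.

```python
def expected_binary_score(p_known, p_lucky_guess, abstains):
    """Expected per-question score when correct = 1 and everything else = 0."""
    known_part = p_known  # known questions are always answered correctly
    # On unknown questions, "IDK" earns 0; a guess earns 1 only when it lands.
    unknown_part = 0.0 if abstains else (1.0 - p_known) * p_lucky_guess
    return known_part + unknown_part


def expected_error_rate(p_known, p_lucky_guess, abstains):
    """Expected share of questions answered with a confident wrong answer."""
    if abstains:
        return 0.0
    return (1.0 - p_known) * (1.0 - p_lucky_guess)


P_KNOWN = 0.70        # assumed: both models truly know 70% of the answers
P_LUCKY_GUESS = 0.25  # assumed: a blind guess is right one time in four

score_a = expected_binary_score(P_KNOWN, P_LUCKY_GUESS, abstains=True)
score_b = expected_binary_score(P_KNOWN, P_LUCKY_GUESS, abstains=False)
error_a = expected_error_rate(P_KNOWN, P_LUCKY_GUESS, abstains=True)
error_b = expected_error_rate(P_KNOWN, P_LUCKY_GUESS, abstains=False)

print(f"Model A: score={score_a:.3f}, hallucination rate={error_a:.3f}")
print(f"Model B: score={score_b:.3f}, hallucination rate={error_b:.3f}")
```

Under these assumed numbers Model B scores about 0.775 against Model A’s 0.700 on the leaderboard, even though it gives a confident wrong answer on roughly 22.5% of questions while Model A gives none.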

How can we avoid hallucinations?

The paper isn’t all gloom; in fact, it offers hope. The researchers propose a “socio-technical mitigation” that doesn’t require a fundamental AI breakthrough, just a simple change in human behavior. Instead of introducing new and more complex “hallucination-specific” evaluations, we need to modify the existing, widely used benchmarks that dominate the field.

Their core idea is to improve the existing scoring system so that it rewards expressing uncertainty. Instead of a binary correct/incorrect, we should introduce a “third option.” This could take the form of:

- Giving credit for an “IDK” response when the model genuinely doesn’t know.

- Implementing “behavioral calibration,” in which the model learns to give the most useful response it can support at a predefined confidence level. This teaches the AI to be honest about the boundaries of its knowledge.

The paper argues this is a simple, practical change that can fix the misaligned incentives. When being honest stops being a losing strategy on the leaderboard, models will naturally evolve to be more trustworthy. The goal is to move from a system that rewards guessing to one that rewards accurate self-assessment.
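One way to picture a scoring rule with a “third option” is a confidence threshold. The sketch below assumes a specific scheme for illustration: a correct answer earns 1 point, “IDK” earns 0, and a wrong answer costs t/(1 − t) points for a stated threshold t. The function names and the 0.75 threshold are hypothetical, and a real benchmark may choose a different penalty.

```python
def wrong_answer_penalty(threshold):
    """Points lost for a wrong answer, assuming a t / (1 - t) penalty."""
    return threshold / (1.0 - threshold)


def expected_score_if_answering(confidence, threshold):
    """Expected score of answering, given the model's chance of being right."""
    penalty = wrong_answer_penalty(threshold)
    return confidence * 1.0 - (1.0 - confidence) * penalty


def should_answer(confidence, threshold):
    """Answer only when it beats the 0 points that 'IDK' would earn."""
    return expected_score_if_answering(confidence, threshold) > 0.0


THRESHOLD = 0.75  # assumed confidence target announced with the benchmark

for confidence in (0.5, 0.75, 0.9):
    gain = expected_score_if_answering(confidence, THRESHOLD)
    choice = "answer" if should_answer(confidence, THRESHOLD) else "say IDK"
    print(f"confidence={confidence:.2f}: expected score {gain:+.2f} -> {choice}")
```

With this penalty, guessing pays off only when the model’s confidence exceeds the threshold, so below that level “IDK” becomes the better strategy rather than a losing one.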

Conclusion

This research paper peels back the layers of one of AI’s most persistent problems. It shows us that LLM hallucinations aren’t some mysterious, untraceable ghost in the machine. They’re the predictable result of a system that rewards overconfidence and penalizes honesty.

This paper is a call to action. For researchers and developers, it’s a plea to rethink evaluation benchmarks. For leaders and professionals, it’s a reminder that a perfect-sounding answer is not always a trustworthy one. And for all of us, it’s a crucial insight into the tools shaping our world.

The AI of tomorrow won’t just be about speed and power; it will be about trust. We must stop grading models like students on a multiple-choice test and start holding them to a higher standard, one that values the words “I don’t know” as much as the right answer. The future of reliable and safe AI depends on it.


Frequently Asked Questions

A. Because of the way they’re trained and evaluated. Pre-training forces them to guess on uncertain facts, and post-training rewards overconfident answers instead of honest uncertainty.

A. No. They’re a statistical consequence of flawed training and evaluation systems, not accidental errors.

A. Imperfect or rare data, like a birthday mentioned only once, creates epistemic uncertainty, forcing models to guess and often hallucinate.

A. Because benchmarks penalize “I don’t know” and reward guessing, models learn to bluff instead of admitting uncertainty.

A. By changing evaluation benchmarks to reward honest uncertainty. Giving partial credit for “I don’t know” encourages models to calibrate their confidence and reduces LLM hallucinations.
