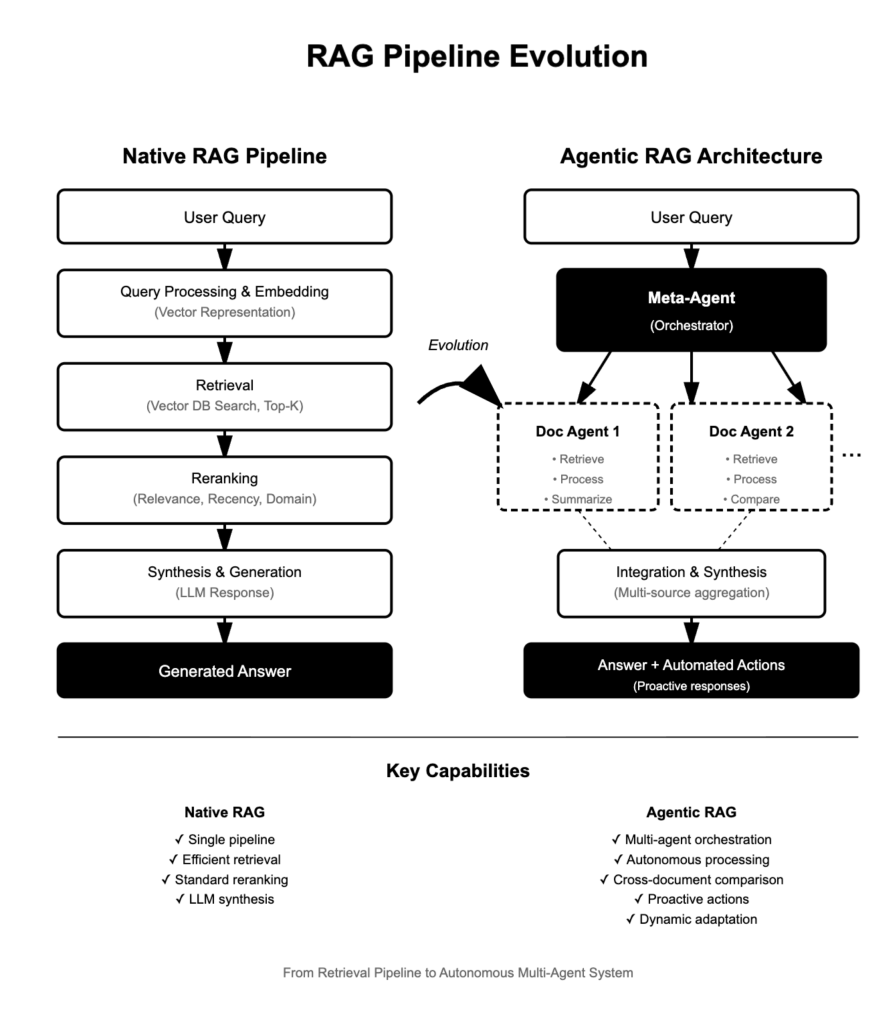

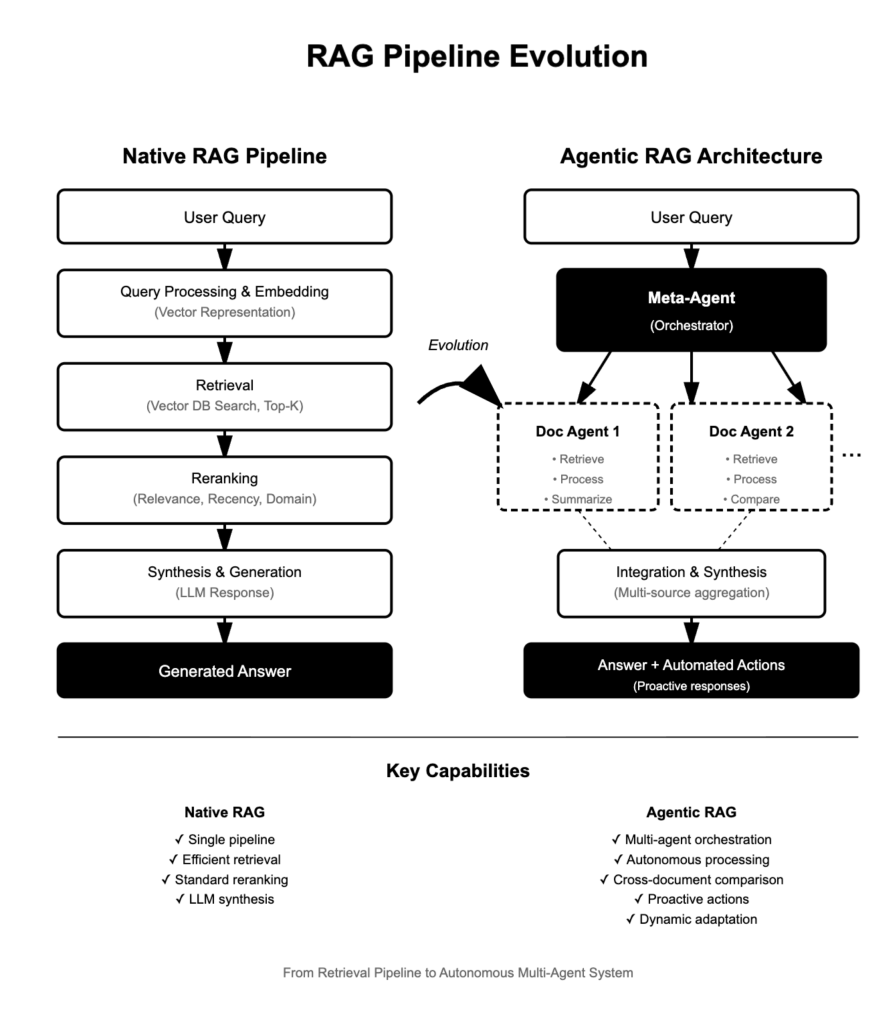

Retrieval-Augmented Generation (RAG) has emerged as a cornerstone approach for enhancing Large Language Models (LLMs) with real-time, domain-specific knowledge. But the landscape is shifting rapidly: today, the most common implementations are “Native RAG” pipelines, and a new paradigm called “Agentic RAG” is redefining what’s possible in AI-powered information synthesis and decision support.

Native RAG: The Standard Pipeline

Architecture

A Native RAG pipeline combines retrieval and generation-based methods to answer complex queries while keeping responses accurate and relevant. The pipeline typically involves:

- Query Processing & Embedding: The user’s question is rewritten if needed, embedded into a vector representation using an LLM or a dedicated embedding model, and prepared for semantic search.

- Retrieval: The system searches a vector database or document store, identifying the top-k relevant chunks using similarity metrics (cosine, Euclidean, dot product). Efficient approximate nearest neighbor (ANN) algorithms optimize this stage for speed and scalability.

- Reranking: Retrieved results are reranked based on relevance, recency, domain specificity, or user preference. Reranking models, ranging from rule-based to fine-tuned ML systems, prioritize the highest-quality information.

- Synthesis & Generation: The LLM synthesizes the reranked information to generate a coherent, context-aware response for the user.
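
The four stages above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `embed` function is a toy bag-of-words stand-in for a real embedding model, retrieval is an exhaustive cosine-similarity scan rather than an ANN index, the `recency` scores and `weight` parameter in `rerank` are hypothetical, and the final step only builds the prompt that would be sent to an LLM.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words term counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, chunks, k=3):
    """Return the top-k (chunk, score) pairs by cosine similarity (exhaustive scan, no ANN)."""
    q = embed(query)
    scored = [(chunk, cosine(q, embed(chunk))) for chunk in chunks]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def rerank(hits, recency, weight=0.2):
    """Rule-based rerank: blend similarity with a hypothetical recency score in [0, 1]."""
    def blended(pair):
        chunk, score = pair
        return (1 - weight) * score + weight * recency.get(chunk, 0.0)
    return sorted(hits, key=blended, reverse=True)


def build_prompt(query, hits):
    """Assemble the context-grounded prompt that would be passed to the LLM."""
    context = "\n".join(f"- {chunk}" for chunk, _ in hits)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

Calling `retrieve`, then `rerank`, then `build_prompt` in sequence mirrors the pipeline order; swapping `embed` for a real model or `retrieve` for a vector-database query would not change the overall shape.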

Common Optimizations

Recent advances include dynamic reranking (adjusting depth by query complexity), fusion-based strategies that aggregate rankings from multiple queries, and hybrid approaches that combine semantic partitioning with agent-based selection to balance retrieval robustness and latency.
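
As one possible illustration of the first two ideas, the sketch below uses reciprocal rank fusion (RRF), a widely used way to merge ranked lists, together with a simple depth heuristic. The article does not name a specific fusion method, so RRF is an assumption here; `k=60` is the constant commonly used with RRF, and `rerank_depth` with its `base`, `per_term`, and `cap` parameters is a hypothetical heuristic.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into a single ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    where rank starts at 1 for the best result in each list.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


def rerank_depth(query, base=5, per_term=2, cap=50):
    """Hypothetical heuristic: rerank more candidates for longer queries, up to a cap."""
    return min(cap, base + per_term * len(query.split()))
```

A document that ranks well across several query variants rises to the top even if no single variant ranks it first, which is the robustness benefit that fusion is meant to provide.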

Agentic RAG: Autonomous, Multi-Agent Information Workflows

What Is Agentic RAG?

Agentic RAG is an agent-based approach to RAG that uses multiple autonomous agents to answer questions and process documents in a highly coordinated fashion. Rather than running a single retrieval/generation pipeline, Agentic RAG structures its workflow for deep reasoning, multi-document comparison, planning, and real-time adaptability.

Key Components

| Component | Description |
|---|---|
| Document Agent | Each document is assigned its own agent, which can answer queries about the document and perform summary tasks, working independently within its scope. |
| Meta-Agent | Orchestrates all document agents, managing their interactions, integrating their outputs, and synthesizing a comprehensive answer or action. |
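
The division of labor between the two components might look like the sketch below. All class and method names (`DocumentAgent`, `MetaAgent`, `relevance`, `ask`) are illustrative assumptions, and simple term overlap stands in for the LLM calls a real agent would make.

```python
import re
from dataclasses import dataclass


def tokens(text):
    """Lowercased alphanumeric terms in a piece of text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class DocumentAgent:
    """Answers questions about exactly one document."""
    name: str
    text: str

    def relevance(self, query):
        """Number of distinct query terms found in this document (toy scoring)."""
        return len(tokens(query) & tokens(self.text))

    def answer(self, query):
        """Return the sentence that shares the most terms with the query."""
        sentences = [s.strip() for s in self.text.split(".") if s.strip()]
        query_terms = tokens(query)
        return max(sentences, key=lambda s: len(query_terms & tokens(s)), default="")

    def summarize(self):
        """Placeholder summary: the first sentence (a real agent would call an LLM)."""
        return self.text.split(".")[0].strip()


class MetaAgent:
    """Routes a query to the relevant document agents and collects their answers."""

    def __init__(self, agents):
        self.agents = agents

    def ask(self, query, min_relevance=1):
        selected = [a for a in self.agents if a.relevance(query) >= min_relevance]
        # A real meta-agent would synthesize these with an LLM; here they are returned per document.
        return {agent.name: agent.answer(query) for agent in selected}
```

Keeping each agent scoped to one document means the meta-agent can skip irrelevant documents entirely and compare the remaining answers side by side.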

Features and Benefits

- Autonomy: Agents operate independently, retrieving, processing, and generating answers or actions for specific documents or tasks.

- Adaptability: The system dynamically adjusts its strategy (e.g., reranking depth, document prioritization, tool selection) based on new queries or changing data contexts.

- Proactivity: Agents anticipate needs, take preemptive steps toward goals (e.g., pulling additional sources or suggesting actions), and learn from previous interactions.

Advanced Capabilities

Agentic RAG goes beyond “passive” retrieval: agents can compare documents, summarize or contrast specific sections, aggregate multi-source insights, and even invoke tools or APIs for enriched reasoning. This enables:

- Automated research and multi-database aggregation

- Complex decision support (e.g., evaluating technical options, summarizing key differences across product sheets)

- Executive support tasks that require independent synthesis and real-time action recommendation.
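
To make tool invocation concrete, here is a small sketch of an agent executing a plan against a tool registry, using the product-sheet comparison mentioned above as the example tool. The names `diff_specs`, `TOOLS`, and `run_plan` are hypothetical, and in a real system the plan would come from an LLM rather than being written by hand.

```python
def diff_specs(a, b):
    """Return {field: (value_a, value_b)} for every field whose values differ."""
    fields = sorted(set(a) | set(b))
    return {f: (a.get(f), b.get(f)) for f in fields if a.get(f) != b.get(f)}


def count_fields(sheet):
    """Trivial second tool: number of fields in a product sheet."""
    return len(sheet)


# Registry mapping tool names to callables the agent is allowed to invoke.
TOOLS = {"diff_specs": diff_specs, "count_fields": count_fields}


def run_plan(steps, tools=TOOLS):
    """Execute (tool_name, kwargs) steps in order and collect the results."""
    results = []
    for tool_name, kwargs in steps:
        if tool_name not in tools:
            raise ValueError(f"unknown tool: {tool_name}")
        results.append(tools[tool_name](**kwargs))
    return results
```

Restricting the agent to a named registry keeps tool use auditable: an unknown tool name fails loudly instead of being silently ignored.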

Applications

Agentic RAG is well suited to scenarios that require nuanced information processing and decision-making:

- Enterprise Knowledge Management: Coordinating answers across heterogeneous internal repositories

- AI-Driven Research Assistants: Cross-document synthesis for technical writers, analysts, or executives

- Automated Action Workflows: Triggering actions (e.g., responding to invitations, updating records) after multi-step reasoning over documents or databases.

- Complex Compliance and Security Audits: Aggregating and evaluating evidence from diverse sources in real time.

Conclusion

Native RAG pipelines have standardized the process of embedding, retrieving, reranking, and synthesizing answers from external data, enabling LLMs to serve as dynamic knowledge engines. Agentic RAG pushes the boundaries further: by introducing autonomous agents, orchestration layers, and proactive, adaptive workflows, it transforms RAG from a retrieval tool into a full agentic framework for advanced reasoning and multi-document intelligence.

Organizations seeking to move beyond basic augmentation, and into deep, flexible AI orchestration, will find in Agentic RAG a blueprint for the next generation of intelligent systems.