The Chinese AI startup DeepSeek has released DeepSeek-V3.1, its newest flagship language model. It builds on the architecture of DeepSeek-V3, adding significant improvements to reasoning, tool use, and coding performance. Notably, DeepSeek models have quickly gained a reputation for delivering OpenAI- and Anthropic-level performance at a fraction of the cost.

Model Architecture and Capabilities

- Hybrid Thinking Mode: DeepSeek-V3.1 supports both thinking (chain-of-thought reasoning, more deliberative) and non-thinking (direct, stream-of-consciousness) generation, switchable via the chat template. This is a departure from previous versions and offers flexibility for different use cases.

- Tool and Agent Support: The model has been optimized for tool calling and agent tasks (e.g., using APIs, code execution, search). Tool calls use a structured format, and the model supports custom code agents and search agents, with detailed templates provided in the repository.

- Massive Scale, Efficient Activation: The model has 671B total parameters, with 37B activated per token. This Mixture-of-Experts (MoE) design lowers inference costs while maintaining capacity. The context window is 128K tokens, much larger than most competitors.

- Long Context Extension: DeepSeek-V3.1 uses a two-phase long-context extension approach. The first phase (32K) was trained on 630B tokens (10x more than V3), and the second (128K) on 209B tokens (3.3x more than V3). The model is trained with FP8 microscaling for efficient arithmetic on next-gen hardware.

- Chat Template: The template supports multi-turn conversations with explicit tokens for system prompts, user queries, and assistant responses. The thinking and non-thinking modes are triggered by dedicated tokens in the prompt sequence.
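
The scale figures in the list above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the numbers quoted in this article, and the function names are hypothetical helpers, not part of any DeepSeek tooling. The "implied V3" values are simply the quoted token counts divided by the quoted multipliers.

```python
# Figures quoted in the list above, in billions.
TOTAL_PARAMS_B = 671
ACTIVE_PARAMS_B = 37


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of parameters activated for a single token in the MoE model."""
    return active_b / total_b


def implied_baseline(tokens_b: float, multiplier: float) -> float:
    """Token count implied for V3 if V3.1 used `multiplier` times as many."""
    return tokens_b / multiplier


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"active per token: {frac:.1%}")
    print(f"implied V3 32K phase: {implied_baseline(630, 10):.0f}B tokens")
    print(f"implied V3 128K phase: {implied_baseline(209, 3.3):.0f}B tokens")
```

Roughly 5.5% of the parameters are active for any given token, and both multipliers point to a V3 extension budget of about 63B tokens per phase.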

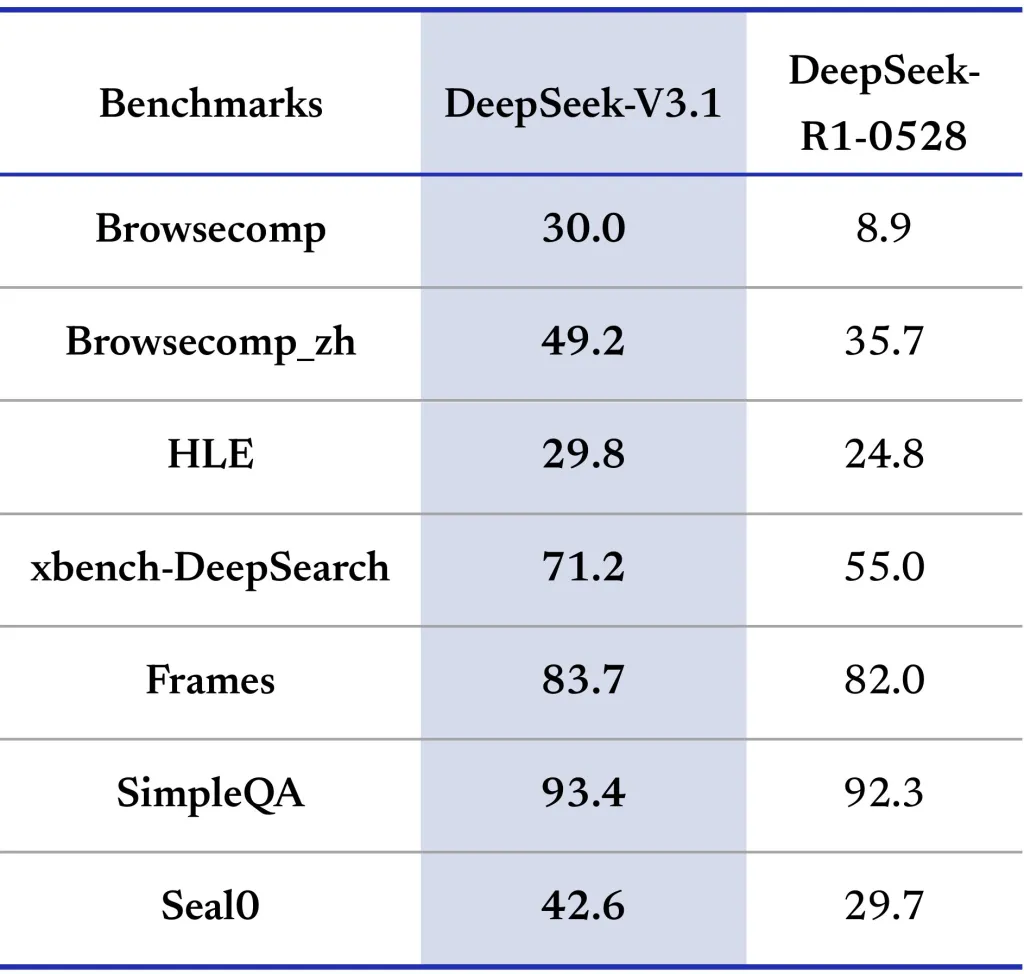

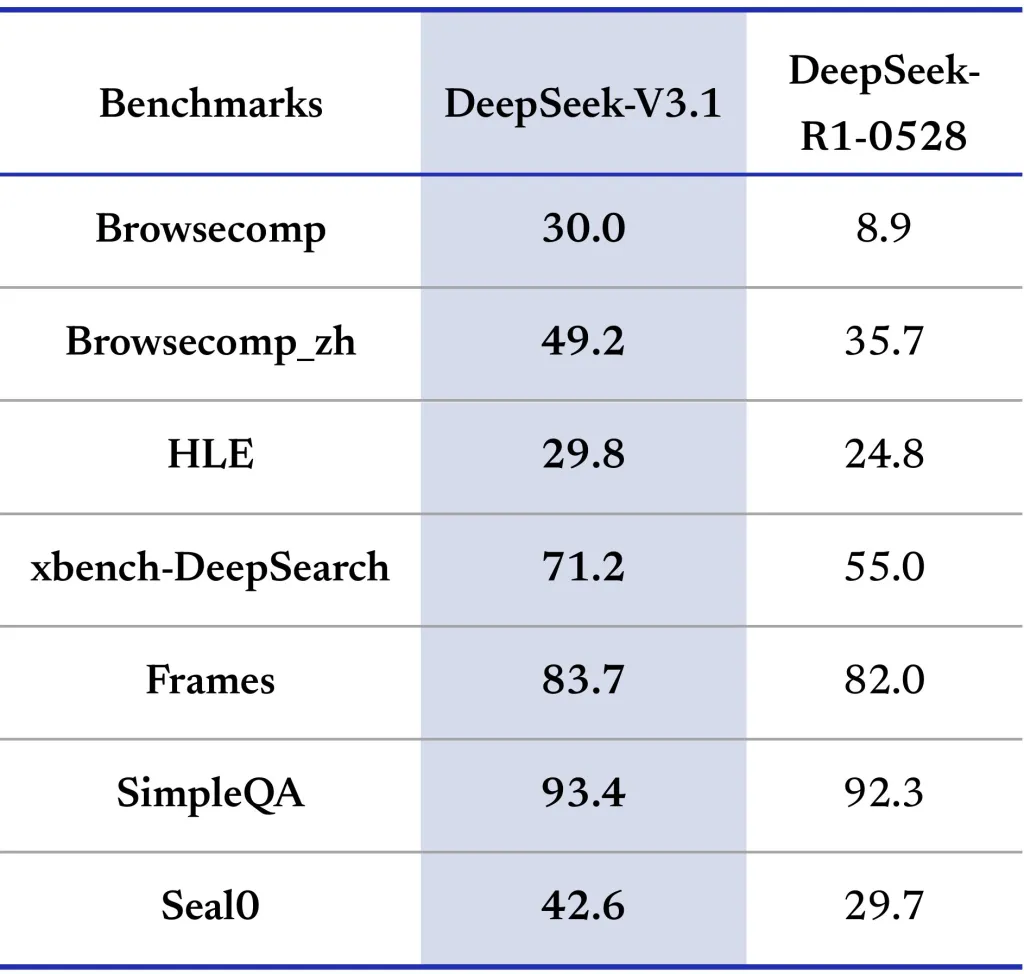

Performance Benchmarks

DeepSeek-V3.1 is evaluated across a wide range of benchmarks (see table below), including general knowledge, coding, math, tool use, and agent tasks. Here are the highlights:

| Metric | V3.1-NonThinking | V3.1-Considering | Rivals |

|---|---|---|---|

| MMLU-Redux (EM) | 91.8 | 93.7 | 93.4 (R1-0528) |

| MMLU-Professional (EM) | 83.7 | 84.8 | 85.0 (R1-0528) |

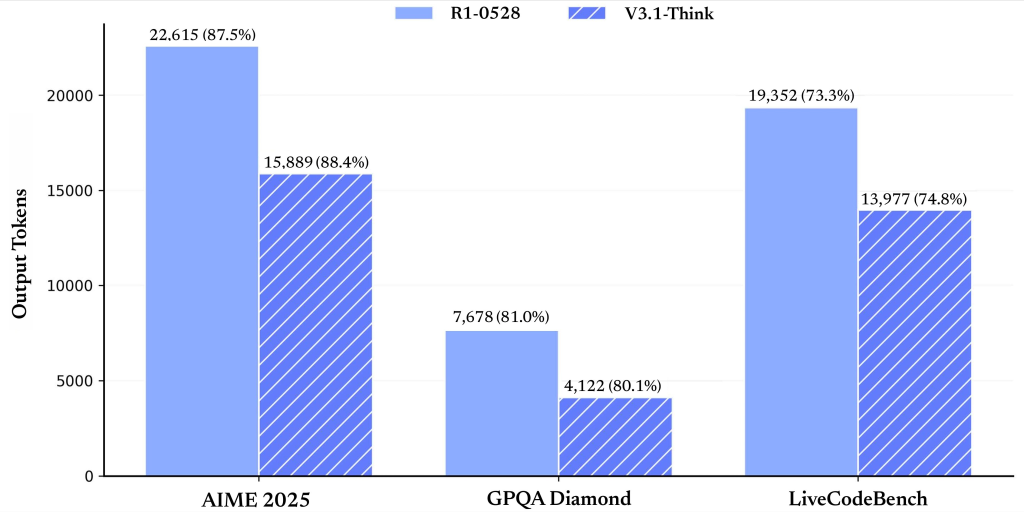

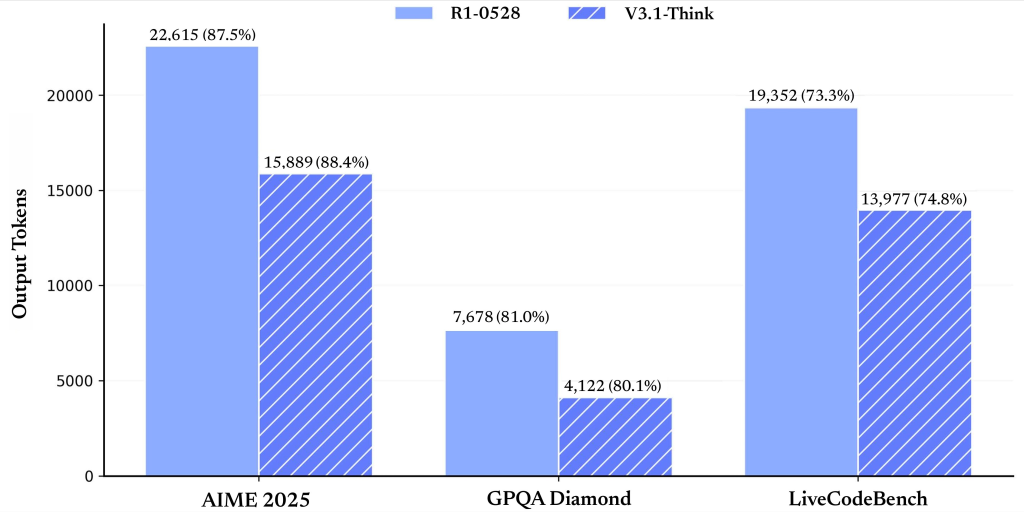

| GPQA-Diamond (Go@1) | 74.9 | 80.1 | 81.0 (R1-0528) |

| LiveCodeBench (Go@1) | 56.4 | 74.8 | 73.3 (R1-0528) |

| AIMÉ 2025 (Go@1) | 49.8 | 88.4 | 87.5 (R1-0528) |

| SWE-bench (Agent mode) | 54.5 | — | 30.5 (R1-0528) |

The thinking mode generally matches or exceeds previous state-of-the-art versions, especially in coding and math, though it trails R1-0528 narrowly on MMLU-Pro and GPQA-Diamond. The non-thinking mode is faster but less accurate, with the largest gaps on math and coding, which makes it best suited to latency-sensitive applications.
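
To make the trade-off between the two modes concrete, the following sketch computes how many points the thinking mode gains over the non-thinking mode on each benchmark. The scores are copied from the table above; SWE-bench is left out because no thinking-mode score is reported for it. The function name is a hypothetical helper.

```python
# Scores copied from the table above: (non-thinking, thinking).
SCORES = {
    "MMLU-Redux": (91.8, 93.7),
    "MMLU-Pro": (83.7, 84.8),
    "GPQA-Diamond": (74.9, 80.1),
    "LiveCodeBench": (56.4, 74.8),
    "AIME 2025": (49.8, 88.4),
}


def thinking_gain(scores):
    """Return {benchmark: thinking minus non-thinking}, rounded to 0.1."""
    return {
        name: round(think - direct, 1)
        for name, (direct, think) in scores.items()
    }


gains = thinking_gain(SCORES)
# Print benchmarks from largest to smallest gain.
for name, gain in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<14} +{gain:.1f}")
```

The gap is small on the knowledge benchmarks (under 2 points on MMLU-Redux and MMLU-Pro) and large on AIME 2025 (38.6 points) and LiveCodeBench (18.4 points).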

Tool and Code Agent Integration

- Tool Calling: Structured tool invocations are supported in non-thinking mode, allowing for scriptable workflows with external APIs and services.

- Code Agents: Developers can build custom code agents by following the provided trajectory templates, which detail the interaction protocol for code generation, execution, and debugging. DeepSeek-V3.1 can also use external search tools for up-to-date information, a feature critical for business, finance, and technical research applications.
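
As a rough illustration of what a scriptable workflow around structured tool calls can look like on the application side, here is a minimal dispatcher sketch. The JSON shape (`name` plus `arguments`), the tool names, and the `dispatch` function are all assumptions made for this example. They are not DeepSeek's actual tool-call format, which is defined by the templates in the repository.

```python
import json


# Hypothetical tools; names and signatures are illustrative only.
def get_weather(city: str) -> str:
    return f"(stub) weather for {city}"


def add(a: float, b: float) -> float:
    return a + b


TOOLS = {"get_weather": get_weather, "add": add}


def dispatch(tool_call_json: str):
    """Parse one structured call and run the matching registered tool.

    Assumed shape (not DeepSeek's actual format):
    {"name": "<tool>", "arguments": {...}}
    """
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    print(dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

Keeping the registry as a plain dictionary means an unknown tool name fails loudly instead of silently running arbitrary code, which matters when the call text comes from a model.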

Deployment

- Open Source, MIT License: All model weights and code are freely available on Hugging Face and ModelScope under the MIT license, encouraging both research and commercial use.

- Local Inference: The model structure is compatible with DeepSeek-V3, and detailed instructions for local deployment are provided. Running it requires significant GPU resources due to the model's scale, but the open ecosystem and community tools lower barriers to adoption.
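
A back-of-the-envelope estimate shows why local inference needs significant GPU resources. The sketch below counts memory for the weights only, assuming 1 byte per parameter for FP8 and 2 bytes for BF16, and a hypothetical 80 GB accelerator. It ignores the KV cache, activations, and framework overhead, so real requirements are higher. Note that the MoE design reduces compute per token, but all 671B parameters typically still need to be held in memory.

```python
import math

TOTAL_PARAMS = 671e9  # total parameter count quoted in the article


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def min_gpus(weight_gb: float, gpu_memory_gb: float) -> int:
    """Lower bound on the GPU count needed just to hold the weights."""
    return math.ceil(weight_gb / gpu_memory_gb)


if __name__ == "__main__":
    # 80 GB per GPU is an assumed, illustrative capacity.
    for label, nbytes in [("FP8", 1), ("BF16", 2)]:
        gb = weight_memory_gb(TOTAL_PARAMS, nbytes)
        print(f"{label}: {gb:.0f} GB of weights, "
              f"at least {min_gpus(gb, 80)} x 80 GB GPUs")
```

Under these assumptions the weights alone occupy about 671 GB in FP8 (at least 9 such GPUs) and about 1342 GB in BF16 (at least 17).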

Summary

DeepSeek-V3.1 represents a milestone in the democratization of advanced AI, demonstrating that language models can be open-source, cost-efficient, and highly capable at the same time. Its blend of scalable reasoning, tool integration, and exceptional performance in coding and math tasks positions it as a practical choice for both research and applied AI development.
