For many organizations, the biggest problem with AI agents built over unstructured data isn’t the model, it’s the context. If the agent can’t retrieve the right information, even the most advanced model will miss key details and give incomplete or incorrect answers.

We’re introducing reranking in Mosaic AI Vector Search, now in Public Preview. With a single parameter, you can improve retrieval accuracy by an average of 15 percentage points on our enterprise benchmarks. This means higher-quality answers, better reasoning, and more consistent agent performance, without extra infrastructure or complex setup.

What Is Reranking?

Reranking is a technique that improves agent quality by ensuring the agent gets the most relevant data to perform its task. While vector databases excel at quickly finding relevant documents among millions of candidates, reranking applies deeper contextual understanding to ensure the most semantically relevant results appear at the top. This two-stage approach (fast retrieval followed by intelligent reordering) has become essential for RAG agent systems where quality matters.
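The two-stage pattern can be sketched in plain Python. This is a minimal illustration under stated assumptions, not how Vector Search implements reranking: documents carry toy precomputed embeddings, stage one ranks every document by cosine similarity, and stage two rescores only the surviving candidates with `overlap_score`, a hypothetical token-overlap function standing in for a real reranker model.

```python
import math
from typing import Callable, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two dense vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def overlap_score(query: str, text: str) -> float:
    """Hypothetical stand-in for a reranker model: share of query tokens found in the text."""
    q = set(query.lower().split())
    t = set(text.lower().split())
    return len(q & t) / len(q) if q else 0.0


def two_stage_search(
    query_vec: Sequence[float],
    query_text: str,
    docs: list,
    rerank_score: Callable[[str, str], float],
    candidate_k: int = 50,
    final_k: int = 10,
) -> list:
    # Stage 1: cheap vector similarity over all documents; keep a wide candidate pool.
    candidates = sorted(docs, key=lambda d: cosine(query_vec, d["vec"]), reverse=True)[:candidate_k]
    # Stage 2: costlier (query, passage) scoring, applied only to the candidates.
    reranked = sorted(candidates, key=lambda d: rerank_score(query_text, d["text"]), reverse=True)
    return reranked[:final_k]


docs = [
    {"id": "a", "vec": [0.9, 0.1], "text": "quarterly sales summary"},
    {"id": "b", "vec": [0.8, 0.3], "text": "contract termination clause for vendors"},
    {"id": "c", "vec": [0.1, 0.9], "text": "office holiday schedule"},
]
query_vec, query_text = [1.0, 0.2], "vendor contract termination clause"

# A constant reranker score leaves the vector order unchanged (sorted() is stable).
vector_only = two_stage_search(query_vec, query_text, docs, lambda q, t: 0.0, candidate_k=2, final_k=2)
reranked = two_stage_search(query_vec, query_text, docs, overlap_score, candidate_k=2, final_k=2)
print([d["id"] for d in vector_only])  # ['a', 'b']
print([d["id"] for d in reranked])     # ['b', 'a']
```

Vector similarity alone puts the sales summary first, while the reranking stage promotes the contract clause that actually matches the query. The design keeps stage one cheap so it can scan a large corpus, and spends the expensive scorer only on the small candidate pool.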

Why We Added Reranking

You might be building internal-facing chat agents to answer questions about your documents. Or you might be building agents that generate reports for your customers. Either way, if you want agents that can accurately use your unstructured data, their quality is tied to retrieval. Reranking is how Vector Search customers improve the quality of their retrieval and, in turn, the quality of their RAG agents.

From customer feedback, we’ve seen two common issues:

- Agents can miss important context buried in large sets of unstructured documents. The “right” passage rarely sits at the very top of the results retrieved from a vector database.

- Homegrown reranking systems significantly improve agent quality, but they take weeks to build and then need significant maintenance.

By making reranking a native Vector Search feature, you can use your governed enterprise data to surface the most relevant information without extra engineering.

"The reranker feature helped elevate our Lexi chatbot from functioning like a high school student to performing like a law school graduate. We’ve seen transformative gains in how our systems understand, reason over, and generate content from legal documents, unlocking insights that were previously buried in unstructured data." - David Brady, Senior Director, G3 Enterprises

A Substantial Quality Improvement Over Baselines

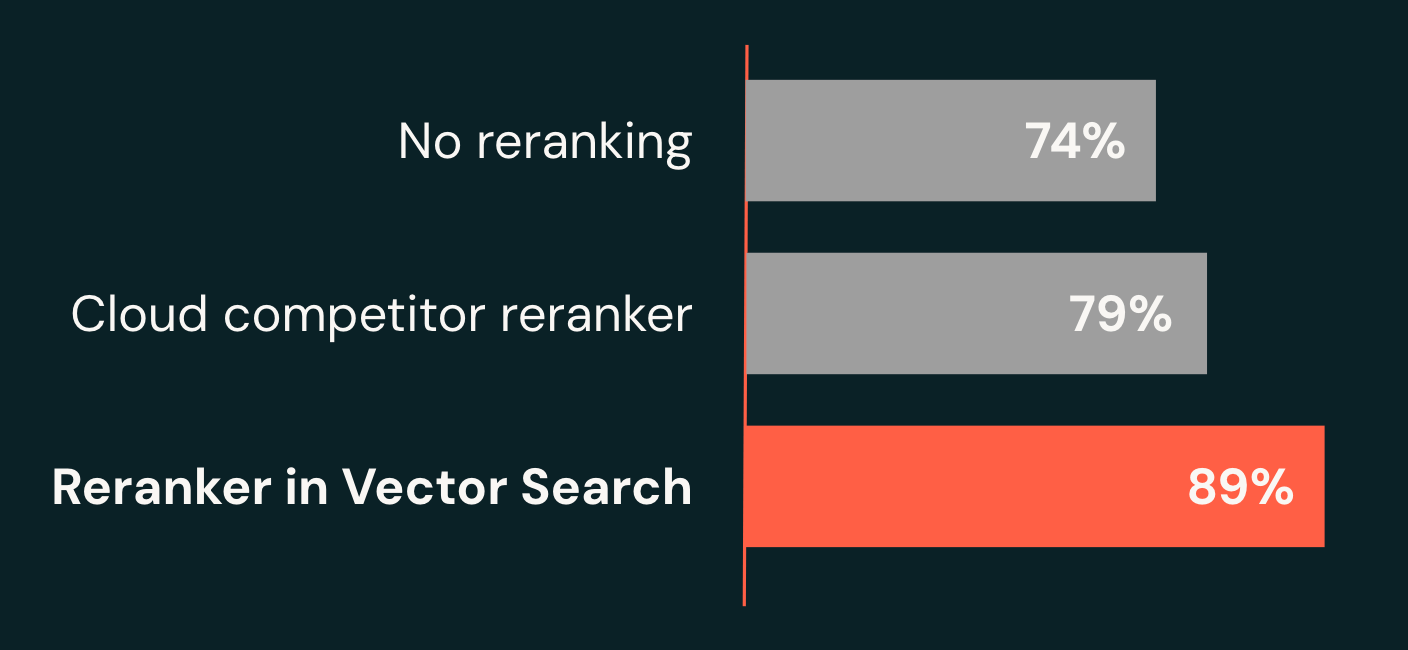

Our research team achieved a breakthrough by building a novel compound AI system for agent workloads. On our enterprise benchmarks, the system retrieves the correct answer within its top 10 results 89% of the time (recall@10), a 15-point improvement over our baseline (74%) and 10 points higher than leading cloud alternatives (79%). Crucially, our reranker delivers this quality with latencies as low as 1.5 seconds, while contemporary systems often take several seconds, or even minutes, to return high-quality answers.
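Read as above, recall@10 is the share of queries whose correct answer lands in the top 10 results. The sketch below computes recall@k under that reading, generalized to queries with several relevant documents, and reproduces the percentage-point arithmetic behind the quoted figures. The function names and toy data are illustrative assumptions, not the benchmark itself.

```python
from typing import Sequence, Set


def recall_at_k(ranked_ids: Sequence[Sequence[str]], relevant_ids: Sequence[Set[str]], k: int) -> float:
    """Mean over queries of |relevant ∩ top-k| / |relevant|.

    With one correct answer per query this is simply the share of queries
    whose answer appears in the top k results.
    """
    if not ranked_ids or len(ranked_ids) != len(relevant_ids):
        raise ValueError("need one relevant set per query")
    if any(not rel for rel in relevant_ids):
        raise ValueError("each query needs at least one relevant id")
    total = 0.0
    for ranked, relevant in zip(ranked_ids, relevant_ids):
        total += len(set(ranked[:k]) & relevant) / len(relevant)
    return total / len(ranked_ids)


def point_gain(new: float, old: float) -> int:
    """Difference between two rates, in whole percentage points."""
    return round((new - old) * 100)


ranked = [["d1", "d2", "d3"], ["d4", "d5", "d6"], ["d7", "d8", "d9"], ["d10", "d11", "d12"]]
relevant = [{"d2"}, {"d6"}, {"d0"}, {"d10"}]
print(recall_at_k(ranked, relevant, k=1))  # 0.25
print(recall_at_k(ranked, relevant, k=3))  # 0.75

# The reported figures: 89% vs. a 74% baseline and 79% for cloud alternatives.
print(point_gain(0.89, 0.74), point_gain(0.89, 0.79))  # 15 10
```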

Easy, High-Quality Retrieval

Enable enterprise-grade reranking in minutes, not weeks. Teams typically spend weeks researching models, deploying infrastructure, and writing custom logic. In contrast, enabling reranking for Vector Search takes just one additional parameter in your Vector Search query to instantly get higher-quality retrieval for your agents. No model serving endpoints to manage, no custom wrappers to maintain, no complex configurations to tune.

By specifying multiple columns in columns_to_rerank, you take the reranker’s quality to the next level by giving it access to metadata beyond the main text. For example, the reranker can use contract summaries and category information to better understand context and improve the relevance of search results.
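The post does not describe how the reranker combines several columns internally. As a purely hypothetical illustration of why extra columns help, the sketch below flattens each row’s selected columns into one labeled passage and scores it with a toy token-overlap function standing in for the real reranker model. `build_rerank_text`, `overlap_score`, and the column names `contract_summary` and `category` are assumptions made for this example; in the product you only list the columns in columns_to_rerank as part of the Vector Search query (see the Mosaic AI Vector Search documentation for the exact call).

```python
from typing import Mapping, Sequence


def build_rerank_text(row: Mapping[str, object], columns_to_rerank: Sequence[str]) -> str:
    """Join the chosen columns into one labeled passage (hypothetical helper).

    Columns that are missing or empty in this row are skipped.
    """
    parts = [f"{col}: {row[col]}" for col in columns_to_rerank if row.get(col)]
    return "\n".join(parts)


def overlap_score(query: str, passage: str) -> float:
    """Toy stand-in for a reranker model: share of query tokens present in the passage."""
    q = set(query.lower().split())
    p = set(passage.lower().replace(":", " ").split())
    return len(q & p) / len(q) if q else 0.0


rows = [
    {"id": "r1", "text": "Section 4.2 applies as described above.",
     "contract_summary": "Master services agreement with indemnification terms", "category": "legal"},
    {"id": "r2", "text": "Section 4.2 applies as described above.",
     "contract_summary": "Office lease renewal", "category": "facilities"},
]
query = "indemnification terms legal"

for cols in (["text"], ["text", "contract_summary", "category"]):
    scores = {r["id"]: overlap_score(query, build_rerank_text(r, cols)) for r in rows}
    print(cols, scores)
# ['text'] {'r1': 0.0, 'r2': 0.0}
# ['text', 'contract_summary', 'category'] {'r1': 1.0, 'r2': 0.0}
```

Two passages with identical body text tie when only `text` is scored; adding the summary and category columns gives the scorer enough context to separate them.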

Optimized for Agent Performance

Speed meets quality for real-time, agentic AI applications. Our research team optimized this compound AI system to rerank 50 results in as little as 1.5 seconds. This makes it highly effective for agent systems that demand both accuracy and responsiveness, and it enables sophisticated retrieval strategies without compromising user experience.

When to Use Reranking?

We recommend testing reranking for any RAG agent use case. Typically, customers see large quality gains when their existing systems do find the right answer somewhere in the top 50 retrieval results but struggle to surface it within the top 10. In technical terms, these are customers with low recall@10 but high recall@50.
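The low-recall@10, high-recall@50 pattern suggests a quick diagnostic you could run on your own evaluation set before enabling reranking. This sketch assumes one correct answer per query and that you already know the rank at which your current retrieval returns it; `hit_rate_at_k` and `rerank_headroom` are hypothetical names. The gap between recall@50 and recall@10 is an upper bound on what reordering the top 50 can recover, because a reranker cannot surface an answer that was never retrieved.

```python
from typing import Optional, Sequence


def hit_rate_at_k(positions: Sequence[Optional[int]], k: int) -> float:
    """Share of queries whose correct answer sits at 1-based rank <= k.

    positions[i] is the rank of query i's correct answer in the retrieved
    list, or None if it was not retrieved at all.
    """
    if not positions:
        raise ValueError("need at least one query")
    return sum(1 for p in positions if p is not None and p <= k) / len(positions)


def rerank_headroom(positions: Sequence[Optional[int]], shortlist_k: int = 10, candidate_k: int = 50) -> float:
    """Upper bound on the recall@shortlist_k gain from reordering the top candidate_k results.

    Assumes shortlist_k <= candidate_k. A reranker that only reorders the
    candidate pool can at best reach recall@candidate_k.
    """
    return hit_rate_at_k(positions, candidate_k) - hit_rate_at_k(positions, shortlist_k)


# Illustrative ranks of the correct answer for eight evaluation queries.
positions = [1, 3, 12, 27, 45, None, 8, 60]
print(hit_rate_at_k(positions, 10))  # 0.375
print(hit_rate_at_k(positions, 50))  # 0.75
print(rerank_headroom(positions))    # 0.375
```

Here three of the eight answers already sit in the top 10 and three more sit between ranks 11 and 50, so a reranker over 50 candidates could raise recall@10 by at most 37.5 points on this toy set.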

Enhanced Developer Experience

Beyond core reranking capabilities, we’re making it easier than ever to build and deploy high-quality retrieval systems.

LangChain Integration: Reranker works seamlessly with VectorSearchRetrieverTool, our official LangChain integration for Vector Search. Teams building RAG agents with VectorSearchRetrieverTool can benefit from higher-quality retrieval with no code changes required.

Clear Performance Metrics: Reranker latency is now included in query debug info, giving you a complete end-to-end breakdown of your query performance.

(Figure: response latency breakdown, in milliseconds)

Flexible Column Selection: Rerank based on any combination of text and metadata columns, letting you use all available domain context, from document summaries to categories to custom metadata, for the best possible relevance.

Start Building Today

Reranker in Vector Search transforms how you build AI applications. With zero infrastructure overhead and seamless integration, you can finally deliver the retrieval quality your users deserve.

Ready to get started?