How can we make each node in a graph its own intelligent agent, capable of personalized reasoning, adaptive retrieval, and autonomous decision-making? That is the core question explored by a group of researchers from Rutgers University. The research team introduced ReaGAN, a Retrieval-augmented Graph Agentic Network that reimagines each node as an independent reasoning agent.

Why Conventional GNNs Struggle

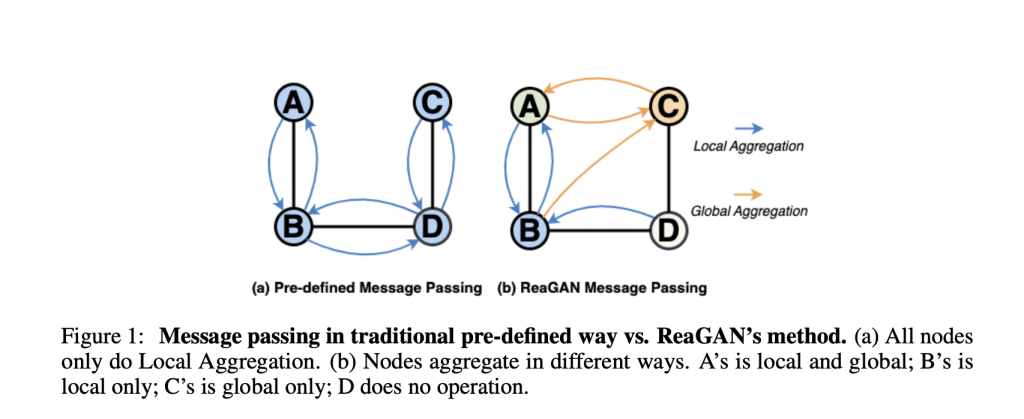

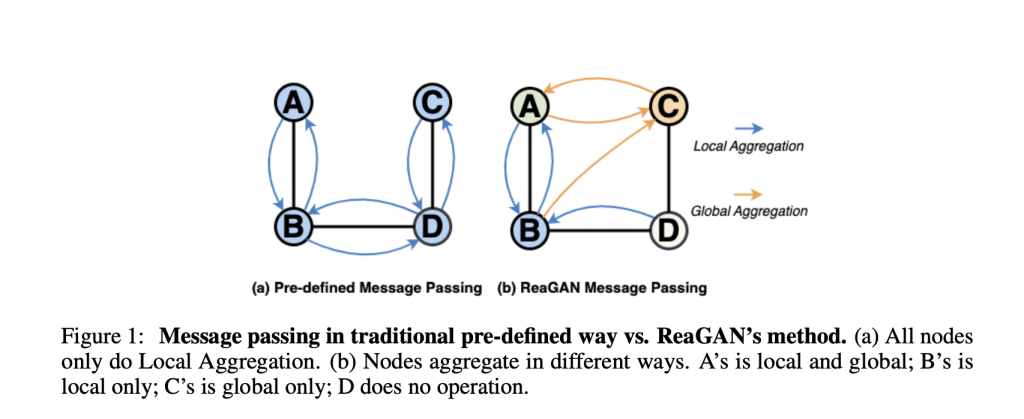

Graph Neural Networks (GNNs) are the backbone for many tasks like citation network analysis, recommendation systems, and scientific categorization. Traditionally, GNNs operate via static, homogeneous message passing: each node aggregates information from its immediate neighbors using the same predefined rules.
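To make "static, homogeneous message passing" concrete, here is a minimal sketch in plain Python. It assumes a simple mean over a node and its neighbors; real GNN layers such as GCN add learned weights and degree normalization, which are omitted here. The function name `mean_aggregate` and the toy graph are illustrative, not taken from the paper.

```python
from typing import Dict, List


def mean_aggregate(
    features: Dict[int, List[float]],
    neighbors: Dict[int, List[int]],
) -> Dict[int, List[float]]:
    """One round of uniform message passing.

    Every node averages its own feature vector with those of its
    immediate neighbors, and every node uses exactly the same rule.
    """
    updated = {}
    for node, own in features.items():
        # Collect the node's own vector plus all neighbor vectors.
        group = [own] + [features[n] for n in neighbors.get(node, [])]
        dim = len(own)
        updated[node] = [
            sum(vec[i] for vec in group) / len(group) for i in range(dim)
        ]
    return updated


# Toy graph (hypothetical data): a path 0 - 1 - 2.
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
neighbors = {0: [1], 1: [0, 2], 2: [1]}
result = mean_aggregate(features, neighbors)
```

Because the rule is identical for every node, a noisy neighbor contributes as much as an informative one, which is the imbalance described next.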

But two persistent challenges have emerged:

- Node Informativeness Imbalance: Not all nodes are created equal. Some nodes carry rich, useful information while others are sparse and noisy. When all nodes are treated identically, valuable signals can get lost, or irrelevant noise can overpower useful context.

- Locality Limitations: GNNs focus on local structure (information from nearby nodes), often missing meaningful, semantically similar but distant nodes elsewhere in the larger graph.

The ReaGAN Approach: Nodes as Autonomous Agents

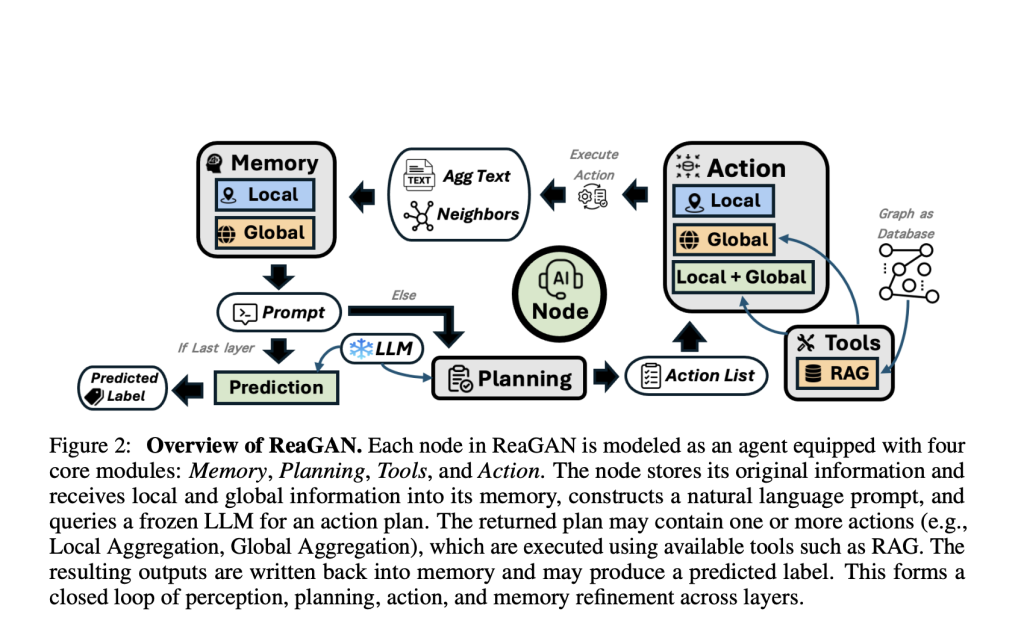

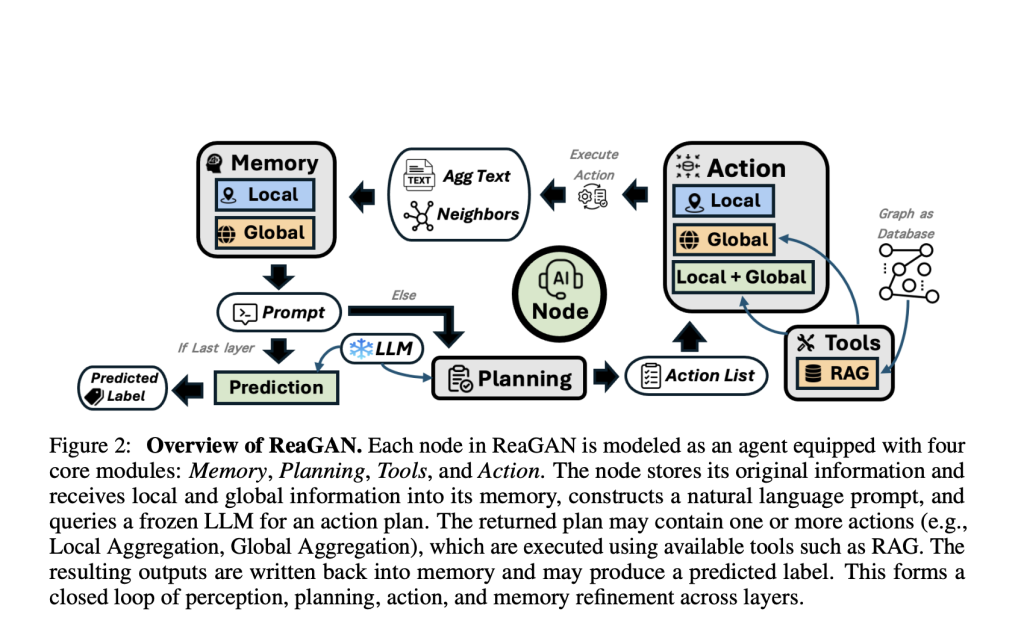

ReaGAN flips the script. Instead of passive nodes, each node becomes an agent that actively plans its next move based on its memory and context. Here’s how:

- Agentic Planning: Nodes interact with a frozen large language model (LLM), such as Qwen2-14B, to dynamically decide actions (“Should I gather more information? Predict my label? Pause?”).

- Flexible Actions:

  - Local Aggregation: Harvest information from direct neighbors.

  - Global Aggregation: Retrieve relevant insights from anywhere on the graph using retrieval-augmented generation (RAG).

  - NoOp (“Do Nothing”): Sometimes the best move is to wait, pausing to avoid information overload or noise.

- Memory Matters: Each agent node maintains a private buffer for its raw text features, aggregated context, and a set of labeled examples. This allows tailored prompting and reasoning at every step.
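The memory buffer and the global aggregation action described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the `NodeMemory` fields mirror the three items named in the text, while the cosine-similarity retrieval, the function names, and the toy embeddings are hypothetical stand-ins for whatever retriever ReaGAN actually uses.

```python
from dataclasses import dataclass, field
from math import sqrt
from typing import Dict, List, Tuple


@dataclass
class NodeMemory:
    """Private per-node buffer (field names are assumptions)."""
    raw_text: str
    aggregated_context: List[str] = field(default_factory=list)
    labeled_examples: List[Tuple[str, str]] = field(default_factory=list)


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_global(
    query_id: int, embeddings: Dict[int, List[float]], k: int = 2
) -> List[int]:
    """Return the ids of the k most similar nodes anywhere in the graph,
    regardless of whether they are connected to the query node."""
    query = embeddings[query_id]
    scored = [
        (cosine(query, vec), node_id)
        for node_id, vec in embeddings.items()
        if node_id != query_id
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [node_id for _, node_id in scored[:k]]


# Hypothetical toy data: four nodes with 2-d text embeddings.
embeddings = {0: [1.0, 0.0], 1: [0.9, 0.1], 2: [0.0, 1.0], 3: [0.7, 0.7]}
texts = {0: "node zero", 1: "node one", 2: "node two", 3: "node three"}

memory = NodeMemory(raw_text=texts[0])
top_ids = retrieve_global(0, embeddings, k=2)
# Write the retrieved texts back into the node's private buffer.
memory.aggregated_context.extend(texts[i] for i in top_ids)
```

Keeping the buffer per node is what lets each prompt be tailored: two nodes with the same neighbors can still end up with different context.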

How Does ReaGAN Work?

Here’s a simplified breakdown of the ReaGAN workflow:

- Perception: The node gathers immediate context from its own state and memory buffer.

- Planning: A prompt is constructed (summarizing the node’s memory, features, and neighbor information) and sent to an LLM, which recommends the next action(s).

- Acting: The node may aggregate locally, retrieve globally, predict its label, or take no action. Results are written back to memory.

- Iterate: This reasoning loop runs for several layers, allowing information integration and refinement.

- Predict: In the final stage, the node aims to make a label prediction, supported by the blended local and global evidence it has gathered.

What makes this novel is that every node decides for itself, asynchronously. There’s no global clock or shared parameters forcing uniformity.
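The perceive, plan, act loop above can be sketched for a single node as follows. Everything here is a hedged illustration: `stub_planner` is a rule-based stand-in for the frozen LLM, the prompt format is invented, and the final label prediction is left out. Only the overall control flow follows the description in the text.

```python
from typing import Callable, Dict, List, Tuple


def build_prompt(memory: Dict[str, object]) -> str:
    """Summarize the node's memory as text (format is an assumption)."""
    return (
        f"text: {memory['text']}\n"
        f"local_context: {len(memory['local'])} items\n"
        f"global_context: {len(memory['global'])} items"
    )


def stub_planner(prompt: str) -> str:
    """Stand-in for the frozen LLM: picks the next action with simple rules."""
    if "local_context: 0 items" in prompt:
        return "local"
    if "global_context: 0 items" in prompt:
        return "global"
    return "predict"


def run_node(
    node_id: int,
    texts: Dict[int, str],
    neighbors: Dict[int, List[int]],
    similar: Dict[int, List[int]],
    planner: Callable[[str], str],
    max_layers: int = 4,
) -> Tuple[List[str], Dict[str, object]]:
    """Run the reasoning loop for one node and return its action trace."""
    memory = {"text": texts[node_id], "local": [], "global": []}
    trace = []
    for _ in range(max_layers):
        action = planner(build_prompt(memory))  # perception + planning
        trace.append(action)
        if action == "local":
            memory["local"] = [texts[n] for n in neighbors[node_id]]
        elif action == "global":
            memory["global"] = [texts[n] for n in similar[node_id]]
        elif action == "predict":
            break  # the label prediction itself is omitted in this sketch
        # Any other action (e.g. "noop") leaves memory unchanged.
    return trace, memory


# Hypothetical toy inputs; `similar` stands in for a global retriever.
texts = {0: "paper on GNNs", 1: "paper on LLM agents", 2: "paper on retrieval"}
neighbors = {0: [1]}
similar = {0: [2]}
trace, memory = run_node(0, texts, neighbors, similar, stub_planner)
```

Each node would run this loop with its own memory, so two nodes can follow different action sequences within the same graph.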

Results: Surprisingly Strong, Even Without Training

ReaGAN’s promise is matched by its results. On classic benchmarks (Cora, Citeseer, Chameleon), it achieves competitive accuracy, often matching or outperforming baseline GNNs, without any supervised training or fine-tuning.

Sample Results:

| Model | Cora | Citeseer | Chameleon |
|---|---|---|---|
| GCN | 84.71 | 72.56 | 28.18 |
| GraphSAGE | 84.35 | 78.24 | 62.15 |
| ReaGAN | 84.95 | 60.25 | 43.80 |

ReaGAN uses a frozen LLM for planning and context gathering, highlighting the power of prompt engineering and semantic retrieval.

Key Insights

- Prompt Engineering Matters: How nodes combine local and global memory in prompts affects accuracy, and the best strategy depends on graph sparsity and label locality.

- Label Semantics: Exposing explicit label names can lead to biased predictions; anonymizing labels yields better results.

- Agentic Flexibility: ReaGAN’s decentralized, node-level reasoning is particularly effective in sparse graphs or those with noisy neighborhoods.
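The label anonymization idea from the list above can be illustrated with a short sketch. The placeholder scheme (`Label_0`, `Label_1`, ...) and the prompt layout are assumptions made for this example; the article does not specify the exact format ReaGAN uses.

```python
from typing import Dict, List, Tuple


def anonymize_labels(
    examples: List[Tuple[str, str]],
) -> Tuple[List[Tuple[str, str]], Dict[str, str]]:
    """Replace label names with neutral placeholders.

    Placeholders are numbered by first appearance. The mapping is
    returned so a predicted placeholder can be translated back.
    """
    mapping: Dict[str, str] = {}
    anonymized = []
    for text, label in examples:
        if label not in mapping:
            mapping[label] = f"Label_{len(mapping)}"
        anonymized.append((text, mapping[label]))
    return anonymized, mapping


def format_examples(anonymized: List[Tuple[str, str]]) -> str:
    """Render few-shot examples for a prompt (layout is an assumption)."""
    return "\n".join(f"Text: {t}\nLabel: {l}" for t, l in anonymized)


# Hypothetical labeled examples from a node's memory buffer.
examples = [
    ("backprop in deep nets", "Neural_Networks"),
    ("PAC learning bounds", "Theory"),
    ("convolutional filters", "Neural_Networks"),
]
anonymized, mapping = anonymize_labels(examples)
prompt_block = format_examples(anonymized)
```

With the names hidden, the LLM has to rely on the examples and gathered context rather than on what a label name suggests.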

Summary

ReaGAN sets a new standard for agent-based graph learning. With the growing sophistication of LLMs and retrieval-augmented architectures, we might soon see graphs where every node is not just a number or an embedding, but an adaptive, contextually aware reasoning agent, ready to tackle the challenges of tomorrow’s data networks.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.