Estimated reading time: 5 minutes

Introduction

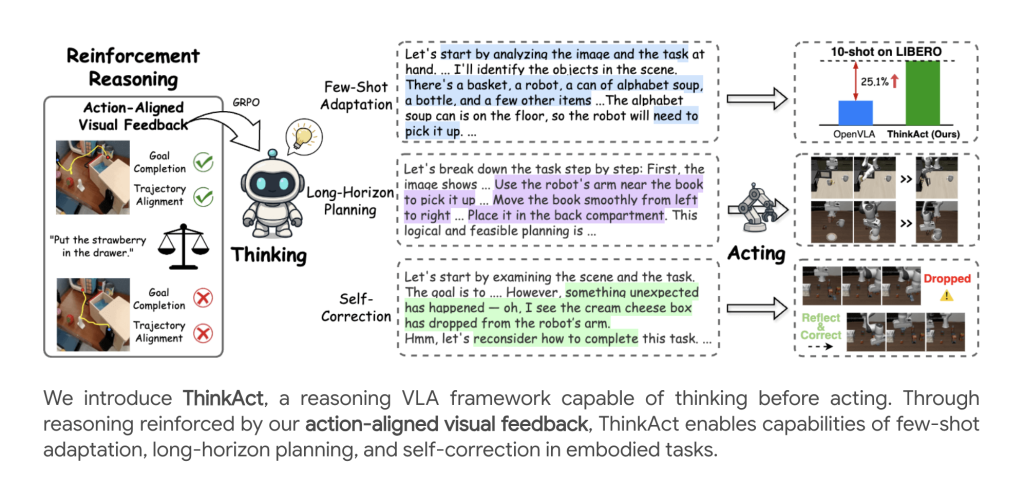

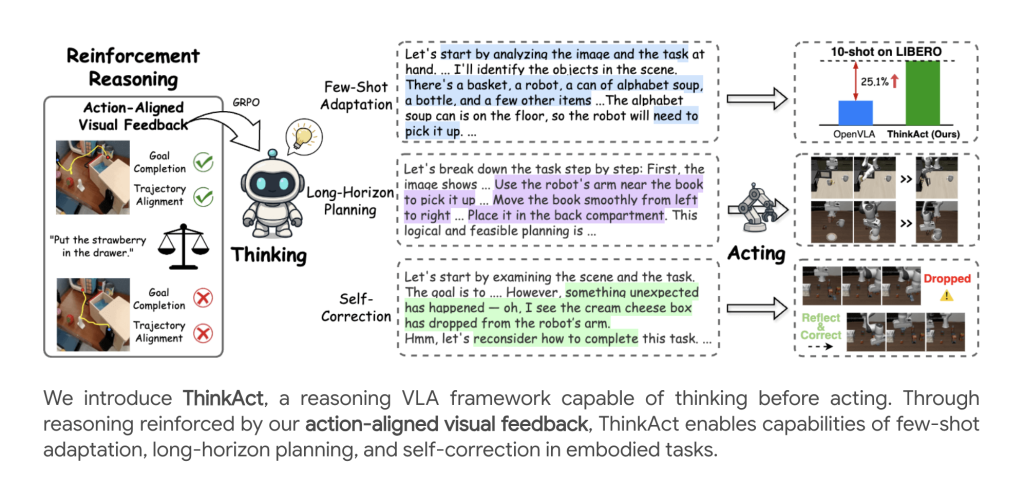

Embodied AI agents are increasingly being called upon to interpret complex, multimodal instructions and act robustly in dynamic environments. ThinkAct, presented by researchers from Nvidia and National Taiwan University, advances vision-language-action (VLA) reasoning by introducing reinforced visual latent planning, which bridges high-level multimodal reasoning and low-level robot control.

Typical VLA models map raw visual and language inputs directly to actions through end-to-end training, which limits reasoning, long-term planning, and adaptability. Recent methods began to incorporate intermediate chain-of-thought (CoT) reasoning or attempt RL-based optimization, but struggled with scalability, grounding, or generalization when confronted with highly variable and long-horizon robotic manipulation tasks.

The ThinkAct Framework

Dual-System Architecture

ThinkAct consists of two tightly integrated components:

- Reasoning Multimodal LLM (MLLM): Performs structured, step-by-step reasoning over visual scenes and language instructions, outputting a visual plan latent that encodes high-level intent and planning context.

- Action Model: A Transformer-based policy conditioned on the visual plan latent, executing the decoded trajectory as robot actions in the environment.

This design allows asynchronous operation: the LLM “thinks” and generates plans at a slow cadence, while the action module carries out fine-grained control at a higher frequency.
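The slow-planner, fast-controller cadence can be illustrated with a minimal sketch. This is not ThinkAct's implementation: `run_dual_system`, `replan_every`, and the toy `reason`/`act` callables are hypothetical names, and the loop only simulates the two update rates in a single thread rather than running the modules truly asynchronously.

```python
from typing import Callable, List, Optional


def run_dual_system(
    reason: Callable[[int], str],
    act: Callable[[Optional[str], int], str],
    num_steps: int,
    replan_every: int,
) -> List[str]:
    """Call `reason` once every `replan_every` steps and `act` at every step.

    `reason` stands in for the reasoning MLLM and returns a plan latent;
    `act` stands in for the action model and is conditioned on the latest plan.
    """
    actions: List[str] = []
    plan: Optional[str] = None
    for step in range(num_steps):
        if step % replan_every == 0:
            plan = reason(step)  # slow path: refresh the plan latent
        actions.append(act(plan, step))  # fast path: one control action per step
    return actions


# Toy stand-ins (assumptions): the "latent" is just a string tag.
reason_calls: List[int] = []


def toy_reason(step: int) -> str:
    reason_calls.append(step)
    return f"plan@{step}"


def toy_act(plan: Optional[str], step: int) -> str:
    return f"{plan}:a{step}"


actions = run_dual_system(toy_reason, toy_act, num_steps=6, replan_every=3)
```

With these toy settings the planner runs twice while the controller runs six times, which is the ratio the dual-system design trades on.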

Reinforced Visual Latent Planning

A core innovation is the reinforcement learning (RL) approach, which leverages action-aligned visual rewards:

- Goal Reward: Encourages the model to align the start and end positions predicted in the plan with those in demonstration trajectories, supporting goal completion.

- Trajectory Reward: Regularizes the predicted visual trajectory to closely match distributional properties of expert demonstrations using dynamic time warping (DTW) distance.

The total reward r blends these visual rewards with a format correctness score, pushing the LLM to produce not only accurate answers but also plans that translate into physically plausible robot actions.
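A minimal sketch of how such a blended reward could be computed is shown here. The clipping form `max(0, 1 - distance)`, the assumption that 2D points are normalized to roughly the unit square, and all weights (`w_goal`, `w_traj`, `w_visual`, `w_format`) are illustrative assumptions, not values stated in this article. The DTW routine is the textbook dynamic-programming version.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Textbook dynamic time warping with Euclidean point-to-point cost."""
    n, m = len(a), len(b)
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def goal_reward(pred: Sequence[Point], demo: Sequence[Point]) -> float:
    """Average closeness of predicted start/end points to the demonstration's."""
    def closeness(p: Point, q: Point) -> float:
        return max(0.0, 1.0 - math.dist(p, q) ** 2)  # assumed clipping form
    return 0.5 * (closeness(pred[0], demo[0]) + closeness(pred[-1], demo[-1]))


def trajectory_reward(pred: Sequence[Point], demo: Sequence[Point]) -> float:
    """Higher when the whole predicted path is close to the demo under DTW."""
    return max(0.0, 1.0 - dtw_distance(pred, demo))  # assumed clipping form


def total_reward(
    pred: Sequence[Point],
    demo: Sequence[Point],
    format_score: float,
    w_goal: float = 0.5,    # assumed weight
    w_traj: float = 0.5,    # assumed weight
    w_visual: float = 0.9,  # assumed weight
    w_format: float = 0.1,  # assumed weight
) -> float:
    visual = w_goal * goal_reward(pred, demo) + w_traj * trajectory_reward(pred, demo)
    return w_visual * visual + w_format * format_score


demo = [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]
perfect = total_reward(demo, demo, format_score=1.0)
far_off = total_reward([(5.0, 5.0), (6.0, 6.0)], demo, format_score=1.0)
```

A plan that reproduces the demonstration earns the maximum reward, while a well-formatted but spatially wrong plan keeps only the format term, which is why the visual terms carry most of the weight in this sketch.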

Training Pipeline

The multi-stage training procedure includes:

- Supervised Fine-Tuning (SFT): Cold-start with manually annotated visual trajectory and QA data to teach trajectory prediction, reasoning, and answer formatting.

- Reinforced Fine-Tuning: RL optimization (using Group Relative Policy Optimization, GRPO) further incentivizes high-quality reasoning by maximizing the newly defined action-aligned rewards.

- Action Adaptation: The downstream action policy is trained using imitation learning, leveraging the frozen LLM’s latent plan output to guide control across varied environments.
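The GRPO step in the pipeline above scores each sampled response relative to the other responses drawn for the same prompt, instead of using a learned value function. The sketch below shows only that group-relative normalization; the function name and the `eps` stabilizer are illustrative choices, and the policy-gradient update itself (clipping, KL penalty) is omitted.

```python
from statistics import fmean, pstdev
from typing import List, Sequence


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward by the mean and standard deviation of its group.

    `rewards` holds the scalar rewards of several responses sampled for the
    same prompt; `eps` (an assumed stabilizer) avoids division by zero when
    every response receives the same reward.
    """
    mean = fmean(rewards)
    std = pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


advantages = group_relative_advantages([0.2, 0.5, 0.8])
flat = group_relative_advantages([1.0, 1.0, 1.0])
```

Responses that beat their group average get a positive advantage and are reinforced; a group with identical rewards yields zero advantages and therefore no learning signal.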

Inference

At inference time, given an observed scene and a language instruction, the reasoning module generates a visual plan latent, which then conditions the action module to execute a full trajectory, enabling robust performance even in new, previously unseen settings.

Experimental Results

Robot Manipulation Benchmarks

Experiments on the SimplerEnv and LIBERO benchmarks demonstrate ThinkAct’s superiority:

- SimplerEnv: Outperforms strong baselines (e.g., OpenVLA, DiT-Policy, TraceVLA) by 11–17% in various settings, especially excelling in long-horizon and visually diverse tasks.

- LIBERO: Achieves the highest overall success rate (84.4%), excelling in spatial, object, goal, and long-horizon challenges, confirming its ability to generalize and adapt to novel skills and layouts.

Embodied Reasoning Benchmarks

On EgoPlan-Bench2, RoboVQA, and OpenEQA, ThinkAct demonstrates:

- Superior multi-step and long-horizon planning accuracy.

- State-of-the-art BLEU and LLM-based QA scores, reflecting improved semantic understanding and grounding for visual question answering tasks.

Few-Shot Adaptation

ThinkAct enables effective few-shot adaptation: with as few as 10 demonstrations, it achieves substantial success rate gains over other methods, highlighting the power of reasoning-guided planning for quickly learning new skills or environments.

Self-Reflection and Correction

Beyond task success, ThinkAct exhibits emergent behaviors:

- Failure Detection: Recognizes execution errors (e.g., dropped objects).

- Replanning: Automatically revises plans to recover and complete the task, thanks to reasoning on recent visual input sequences.

Ablation Studies and Model Analysis

- Reward Ablations: Both goal and trajectory rewards are essential for structured planning and generalization. Removing either significantly drops performance, and relying solely on QA-style rewards limits multi-step reasoning capability.

- Reduction in Update Frequency: ThinkAct achieves a balance between reasoning (slow, planning) and action (fast, control), allowing robust performance without excessive computational demand.

- Smaller Models: The approach generalizes to smaller MLLM backbones, maintaining strong reasoning and action capabilities.

Implementation Details

- Main backbone: Qwen2.5-VL 7B MLLM.

- Datasets: Diverse robot and human demonstration videos (Open X-Embodiment, Something-Something V2), plus multimodal QA sets (RoboVQA, EgoPlan-Bench, Video-R1-CoT, etc.).

- Uses a vision encoder (DINOv2), a text encoder (CLIP), and a Q-Former to connect the reasoning output to the action policy input.

- Extensive experiments in real and simulated settings confirm scalability and robustness.

Conclusion

Nvidia’s ThinkAct sets a new standard for embodied AI agents, showing that reinforced visual latent planning, where agents “think before they act,” delivers robust, scalable, and adaptive performance in complex, real-world reasoning and robot manipulation tasks. Its dual-system design, reward shaping, and strong empirical results pave the way for intelligent, generalist robots capable of long-horizon planning, few-shot adaptation, and self-correction in diverse environments.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.