Current long-CoT reasoning models have achieved state-of-the-art performance in mathematical reasoning by generating reasoning trajectories with iterative self-verification and refinement. However, open-source long-CoT models rely solely on natural language reasoning traces, which makes them computationally expensive and prone to errors when no verification mechanism is available. Tool-aided reasoning, through frameworks like OpenHands that integrate code interpreters, offers greater efficiency and reliability for large-scale numerical computations, but these agentic approaches struggle with abstract or conceptually complex reasoning problems.

DualDistill Framework and Agentic-R1 Model

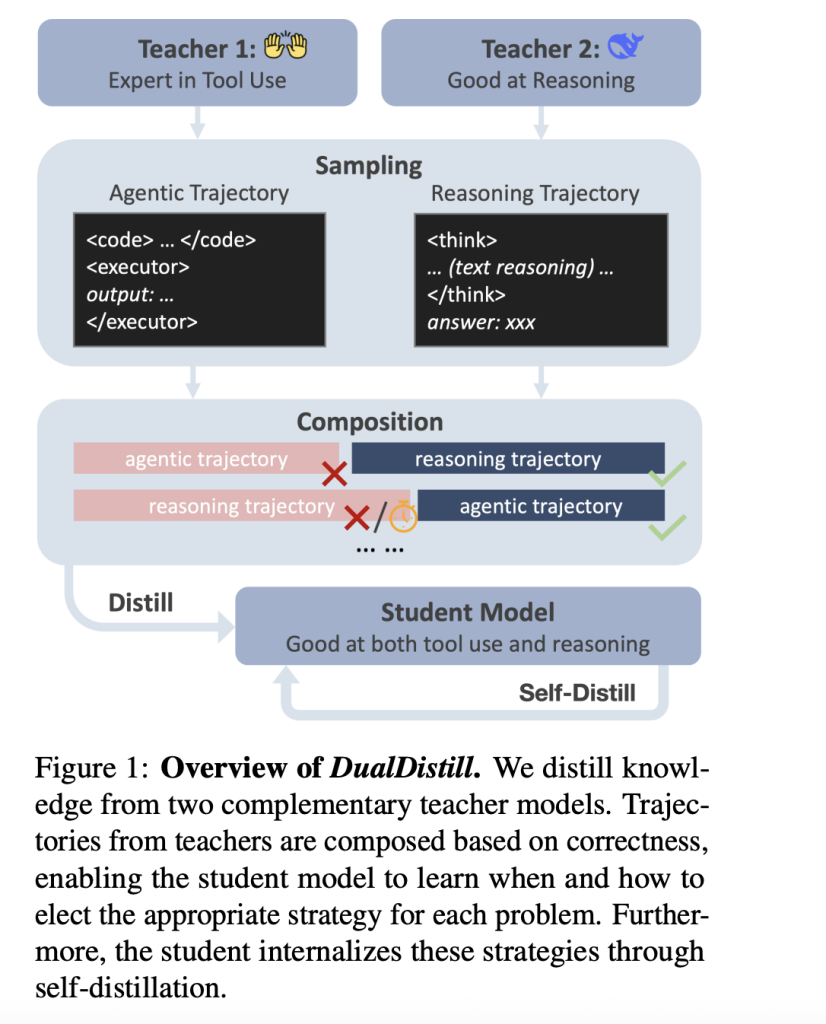

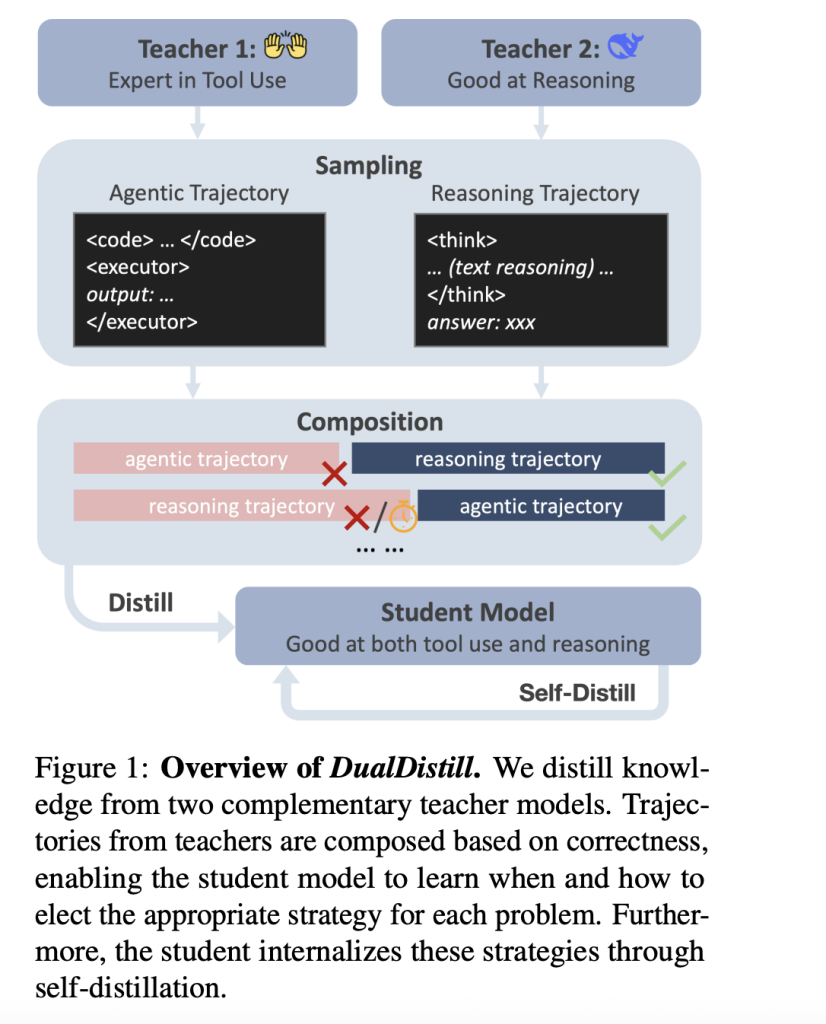

Researchers from Carnegie Mellon University have proposed DualDistill, a distillation framework that combines trajectories from two complementary teachers to create a unified student model. The framework uses one reasoning-oriented teacher and one tool-augmented teacher to develop Agentic-R1, a model that learns to dynamically select the most appropriate strategy for each problem type. Agentic-R1 executes code for arithmetic and algorithmic tasks while employing natural language reasoning for abstract problems. DualDistill uses trajectory composition to distill knowledge from both complementary teachers, followed by self-distillation. The researchers used OpenHands as the agentic reasoning teacher and DeepSeek-R1 as the text-based reasoning teacher.
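The article does not spell out how trajectory composition works, so the following is only a minimal sketch of one plausible reading: when exactly one teacher solves a problem, the failed attempt is followed by a transition sentence and then the successful trajectory, so the student sees an example of switching strategy. The `Trajectory` class, the `TRANSITION` text, and the tie-breaking rule for the case where both teachers succeed are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trajectory:
    teacher: str       # "reasoning" or "agentic" (labels assumed for illustration)
    text: str          # the full solution trace produced by that teacher
    is_correct: bool   # whether the final answer matched the reference


# Hypothetical bridging text; the real framework may use something different.
TRANSITION = "\nThis approach is not working. Let me switch strategy.\n"


def compose(reasoning: Trajectory, agentic: Trajectory) -> Optional[str]:
    """Build one training trace from two teacher trajectories.

    Returns None when neither teacher solved the problem, since there is
    no correct trace to learn from in that case.
    """
    if reasoning.is_correct and not agentic.is_correct:
        # Failed tool-based attempt first, then the successful text reasoning.
        return agentic.text + TRANSITION + reasoning.text
    if agentic.is_correct and not reasoning.is_correct:
        # Failed text reasoning first, then the successful tool-based attempt.
        return reasoning.text + TRANSITION + agentic.text
    if reasoning.is_correct and agentic.is_correct:
        # Assumption: keep the shorter trace when both succeed.
        return min((reasoning, agentic), key=lambda t: len(t.text)).text
    return None
```

Under these assumptions, the composed traces would then serve as supervised fine-tuning data for the student, with self-distillation applied afterwards as the article describes.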

Evaluation and Benchmarks

The proposed method is evaluated across multiple benchmarks, such as DeepMath-L and Combinatorics300, to test various aspects of mathematical reasoning. It is compared against the baselines DeepSeek-R1-Distill and Qwen2.5-Instruct. The student model, Agentic-R1, shows substantial performance improvements by drawing on both agentic and reasoning strategies. It outperforms two similarly sized models, one specializing in tool-assisted strategies (Qwen2.5-7B-Instruct) and the other in pure reasoning (DeepSeek-R1-Distill-7B). Agentic-R1 outperforms tool-based models by using reasoning strategies when they are required, while remaining more efficient than pure reasoning models on standard mathematical tasks.

Qualitative Analysis and Tool Usage Patterns

Qualitative examples show that Agentic-R1 exhibits intelligent tool usage patterns, activating code execution tools in 79.2% of the computationally demanding Combinatorics300 problems while reducing activation to 52.0% for the simpler AMC dataset problems. Agentic-R1 learns to invoke tools appropriately through supervised fine-tuning alone, without explicit instruction, effectively balancing computational efficiency and reasoning accuracy.
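A tool activation rate like the ones quoted above can be measured by counting the responses that contain at least one code execution call. The sketch below assumes each response is a plain string and that tool calls are marked by a hypothetical `<code>` tag; the actual marker used by Agentic-R1 is not given in the article.

```python
from typing import Sequence

# Hypothetical marker for a code execution call inside a model response.
TOOL_MARKER = "<code>"


def tool_activation_rate(responses: Sequence[str], marker: str = TOOL_MARKER) -> float:
    """Return the percentage of responses that invoke the tool at least once."""
    if not responses:
        raise ValueError("responses must not be empty")
    activated = sum(1 for response in responses if marker in response)
    return 100.0 * activated / len(responses)


# Toy example: three of the four responses call the tool.
sample = [
    "Count the cases directly. <code>print(sum(range(10)))</code>",
    "By symmetry the answer is 1/2.",
    "<code>print(2 ** 10)</code>",
    "Enumerate all options. <code>print(len(options))</code>",
]
rate = tool_activation_rate(sample)  # 75.0
```

Comparing this rate across datasets is how a figure such as 79.2% on Combinatorics300 versus 52.0% on AMC would be obtained.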

Robustness to Imperfect Teachers

The framework remains effective even when guided by imperfect teachers. For instance, the agentic teacher achieves only 48.4% accuracy on Combinatorics300, yet the student model improved from 44.7% to 50.9%, ultimately outperforming the teacher.
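The size of these gaps follows directly from the three accuracy figures quoted above. The short calculation below uses only those numbers; the variable names are illustrative.

```python
# Combinatorics300 accuracies quoted in the article, in percent.
teacher_acc = 48.4         # agentic teacher
student_before = 44.7      # student before distillation
student_after = 50.9       # student after distillation

# Differences in percentage points, rounded to avoid floating-point noise.
absolute_gain = round(student_after - student_before, 1)      # 6.2
margin_over_teacher = round(student_after - teacher_acc, 1)   # 2.5

# Relative improvement over the student's starting accuracy, in percent.
relative_gain = round(100 * (student_after - student_before) / student_before, 1)  # 13.9
```

The student therefore gains 6.2 percentage points and ends 2.5 points above the teacher that supervised it.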

Conclusion

In summary, the DualDistill framework effectively combines the strengths of natural language reasoning and tool-assisted problem solving by distilling complementary knowledge from two specialized teacher models into a single versatile student model, Agentic-R1. Through trajectory composition and self-distillation, Agentic-R1 learns to dynamically select the most appropriate strategy for each problem, balancing precision and computational efficiency. Evaluations across diverse mathematical reasoning benchmarks demonstrate that Agentic-R1 outperforms both pure reasoning and tool-based models, even when learning from imperfect teachers. This work highlights a promising approach to building adaptable AI agents capable of integrating heterogeneous problem-solving strategies for more robust and efficient reasoning.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.