Everyone has seen chatbots in action; some are impressive, while others are annoying. But what if you could build one that’s genuinely smart, well organized, and easy to integrate with your own application? In this article, we’ll use two powerful tools to build a chatbot from scratch:

- LangGraph, which helps manage structured, multi-step workflows with LLMs.

- Django, a scalable and clean web framework, which we’ll use to expose the chatbot as an API.

We’ll start with a quick setup, which includes cloning the GitHub repository and installing dependencies with Pipenv. We’ll then define the chatbot’s logic with LangGraph, build a Django-powered API around it, and wire up a basic frontend to talk to it.

You’re in the right place whether you want to learn how LangGraph works with a real-world backend or you simply want to set up a simple chatbot.

Quickstart: Clone & Set Up the Project

Let’s begin by cloning the project and setting up the environment. Make sure you have Python 3.12 and pipenv installed on your system. If not, you can install pipenv with:

pip install pipenv

Now, clone the repository and move into the project folder:

git clone https://github.com/Badribn0612/chatbot_django_langgraph.git

cd chatbot_django_langgraph

Let’s now install all the requirements using pipenv.

pipenv install

Note: If you get an error saying you do not have Python 3.12 on your system, use the following commands:

pipenv --python path/to/python

pipenv install

To find the path of your Python interpreter, you can use one of the following commands:

which python (Linux)

where python (Windows)

which python3 (macOS)

To activate this environment, use the following command:

pipenv shell

Now that our requirements are set, let’s set up the environment variables. Use the following command to create a .env file.

touch .env

Add your API keys to the .env file:

# Google Gemini AI

GOOGLE_API_KEY=your_google_api_key_here

# Groq

GROQ_API_KEY=your_groq_api_key_here

# Tavily Search

TAVILY_API_KEY=your_tavily_api_key_here

Generate a Google API key from Google AI Studio, a Groq API key from the Groq Console, and a Tavily key from the Tavily home page.
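Before starting the server, it can help to confirm that the three keys are actually visible to Python. The following is a minimal sketch, not part of the project: the helper name `missing_keys` is hypothetical, and it assumes the variables are already in the process environment (Pipenv normally loads a `.env` file from the project folder when you run `pipenv shell` or `pipenv run`).

```python
import os

# The three key names come from the .env file described above.
REQUIRED_KEYS = ("GOOGLE_API_KEY", "GROQ_API_KEY", "TAVILY_API_KEY")


def missing_keys(environ=os.environ):
    """Return the required key names that are unset or empty (hypothetical helper)."""
    return [name for name in REQUIRED_KEYS if not environ.get(name)]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print("Missing keys:", ", ".join(missing))
    else:
        print("All API keys are set.")
```

Running this inside the activated environment gives a quick signal that the `.env` file was picked up before you debug anything else.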

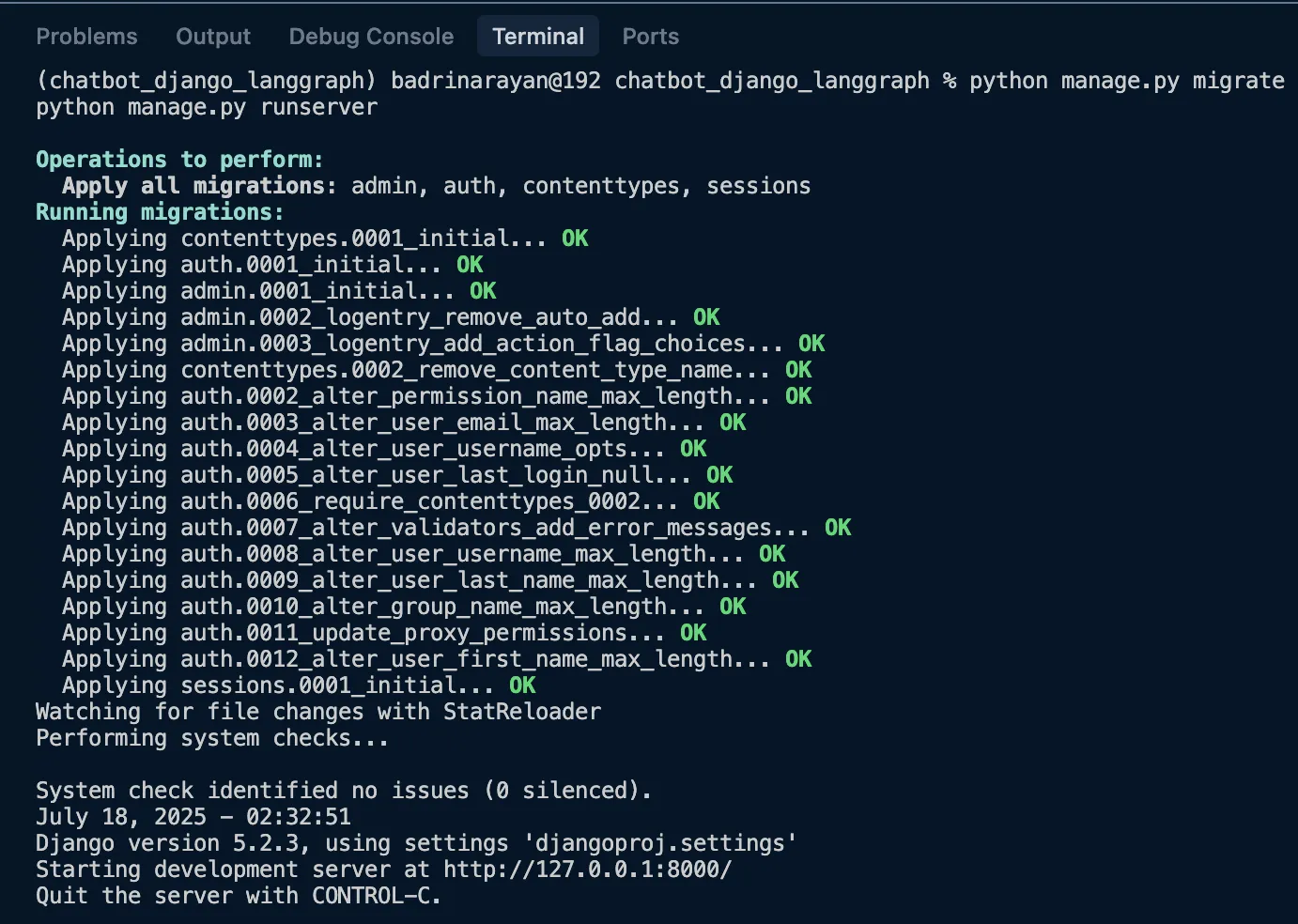

Now that the setup is done, let’s run the following commands (make sure your environment is activated):

python manage.py migrate

python manage.py runserver

This should start the server.

Open the http://127.0.0.1:8000/ link, where the application is running.

Designing the Chatbot Logic with LangGraph

Now, let’s dive into designing the chatbot logic. You might be wondering: why LangGraph? I picked LangGraph because it gives you the flexibility to build complex workflows tailored to your use case. Think of it as stitching together multiple functions into a flow that actually makes sense for your application. Below, let’s discuss the core logic. The complete code is available on GitHub.

1. State Definition

class State(TypedDict):
    messages: Annotated[list, add_messages]

This state schema is what the chatbot runs on. Its main job is to keep track of the message history. The add_messages annotation tells LangGraph to append new messages to the list instead of overwriting it, so whether the graph loops or runs once, the response from the LLM is added to the previous history.
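To make the append behavior concrete, here is a simplified pure-Python sketch. It does not use LangGraph; `append_messages` and `apply_update` are hypothetical stand-ins that only illustrate the idea of a reducer merging a node's output into the existing state.

```python
def append_messages(existing, new):
    """Simplified stand-in for a reducer: return the old list plus the new items."""
    return list(existing) + list(new)


def apply_update(state, update):
    """Merge a node's return value into the state using the reducer."""
    return {"messages": append_messages(state["messages"], update["messages"])}


# A node returns only the new message; the reducer keeps the earlier history.
state = {"messages": [{"role": "user", "content": "Hi"}]}
update = {"messages": [{"role": "assistant", "content": "Hello!"}]}
state = apply_update(state, update)
```

After the update, `state["messages"]` holds both the user message and the assistant reply, which is the behavior the annotation in the state schema asks for.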

2. Initialize LangGraph

graph_builder = StateGraph(State)

This line initializes the graph. The StateGraph instance is responsible for maintaining the chatbot’s conversation flow.

3. Chat Model with Fallbacks

llm_with_fallbacks = init_chat_model("google_genai:gemini-2.0-flash").with_fallbacks(
    [init_chat_model("groq:llama-3.3-70b-versatile")]
)

This makes Gemini 2.0 Flash the primary LLM and Llama 3.3 70B the fallback. If Google’s servers are overloaded or the API hits rate limits, the chatbot starts using Llama 3.3 70B instead.
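The fallback idea itself is simple: try each model in order and return the first response that succeeds. The sketch below shows that pattern in plain Python. Everything in it (`RateLimited`, `primary_model`, `fallback_model`, `invoke_with_fallbacks`) is a hypothetical stand-in and not the library's implementation.

```python
class RateLimited(Exception):
    """Hypothetical error standing in for a provider failure."""


def primary_model(prompt):
    # Pretend the primary provider is overloaded.
    raise RateLimited("primary model unavailable")


def fallback_model(prompt):
    return f"fallback answer to: {prompt}"


def invoke_with_fallbacks(prompt, models):
    """Try each model in order and return the first successful response."""
    last_error = None
    for model in models:
        try:
            return model(prompt)
        except Exception as exc:  # broad on purpose, to keep the sketch short
            last_error = exc
    raise RuntimeError("all models failed") from last_error


answer = invoke_with_fallbacks("What is LangGraph?", [primary_model, fallback_model])
```

Here the primary call raises, so `answer` comes from the fallback. If every model fails, the caller sees a single error that chains the last underlying exception.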

4. Tool Integration

tool = TavilySearch(max_results=2)

llm_with_tools = llm_with_fallbacks.bind_tools([tool])

We’re also adding a search tool to the LLM. It is used when the LLM decides it doesn’t know enough to answer the query. The LLM searches for information using the tool, retrieves the relevant results, and answers the query based on them.

5. Chatbot Node Logic

def chatbot(state: State):
    return {"messages": [llm_with_tools.invoke(state["messages"])]}

This is the function responsible for invoking the LLM and getting the response. This is exactly what I was talking about: with LangGraph, you can build a graph made up of multiple functions like this one. You can branch, merge, and even run functions (called nodes in LangGraph) in parallel. And yes, I almost forgot, you can even create loops within the graph. That’s the kind of flexibility LangGraph brings to the table.

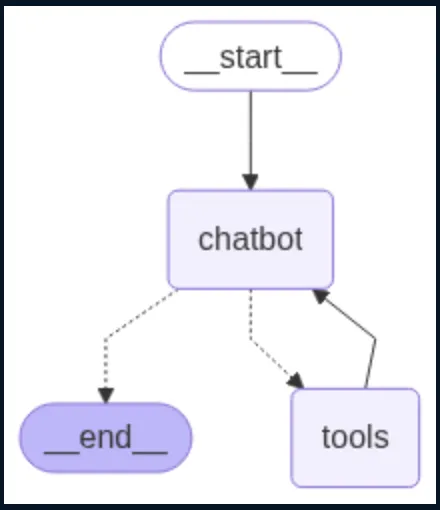

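6. ToolNode and Conditional Flow

tool_node = ToolNode(tools=[tool])

# Register the nodes before wiring edges between them.
graph_builder.add_node("chatbot", chatbot)
graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges("chatbot", tools_condition)
graph_builder.add_edge("tools", "chatbot")

We create a node for the tool so that whenever the chatbot decides it needs it, it can simply invoke the tool node and fetch the relevant information. The conditional edge routes to the "tools" node when the LLM’s last message contains a tool call and ends the run otherwise; the edge from "tools" back to "chatbot" lets the LLM answer using the search results.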
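The chatbot-to-tools loop can be hard to picture from the edge definitions alone. The following pure-Python sketch mimics the control flow with hypothetical stand-ins (`fake_llm`, `fake_tool`, `route`, `run`); it is not LangGraph code, only an illustration of the routing described above.

```python
def fake_llm(messages):
    """Hypothetical model: asks for a search once, then answers."""
    if not any(m["role"] == "tool" for m in messages):
        return {"role": "assistant", "content": "", "tool_calls": ["search"]}
    return {"role": "assistant", "content": "Answer based on search results.", "tool_calls": []}


def fake_tool(messages):
    """Hypothetical tool node: returns a canned search result."""
    return {"role": "tool", "content": "search results"}


def route(last_message):
    """Go to the tools node if the model requested a tool, otherwise stop."""
    return "tools" if last_message.get("tool_calls") else "end"


def run(messages):
    node = "chatbot"
    while node != "end":
        if node == "chatbot":
            messages = messages + [fake_llm(messages)]
            node = route(messages[-1])
        else:  # node == "tools"
            messages = messages + [fake_tool(messages)]
            node = "chatbot"  # the edge from "tools" back to "chatbot"
    return messages


history = run([{"role": "user", "content": "Who won yesterday's match?"}])
```

The resulting history contains the user message, the assistant's tool request, the tool result, and the final assistant answer, in that order.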

7. Graph Entry and Exit

graph_builder.add_edge(START, "chatbot")

graph = graph_builder.compile()

from IPython.display import Image, display

display(Image(graph.get_graph().draw_mermaid_png()))

The preceding code renders a diagram of the graph.

This LangGraph setup lets you build a structured chatbot that can handle conversations, call tools like web search when needed, and fall back to other models if one fails. It’s modular and easy to extend. Now that the LangGraph part is done, let’s jump to how to create an API for our chatbot with Django.

Building the API with Django

You can use this guide to learn how to make an app if you’re new to Django. For this project, we have set up:

- Project: djangoproj

- App: djangoapp

Step 1: App Configuration

In djangoapp/apps.py, we define the app config so that Django can recognize it:

from django.apps import AppConfig


class DjangoappConfig(AppConfig):
    default_auto_field = "django.db.models.BigAutoField"
    name = "djangoapp"

Now register the app inside djangoproj/settings.py:

INSTALLED_APPS = [
    # default Django apps...
    "djangoapp",
]

Step 2: Creating the Chatbot API

In djangoapp/views.py, we define a simple API endpoint that handles POST requests:

from django.http import JsonResponse
from django.views.decorators.csrf import csrf_exempt
import json
from chatbot import get_chatbot_response


@csrf_exempt
def chatbot_api(request):
    if request.method == "POST":
        try:
            # Parse the JSON body: prior history plus the new user query.
            data = json.loads(request.body)
            messages = data.get("messages", [])
            user_query = data.get("query", "")
            messages.append({"role": "user", "content": user_query})
            response = get_chatbot_response(messages)
            # serialize_message is not shown in this snippet; see the full source on GitHub.
            serialized_messages = [serialize_message(msg) for msg in response["messages"]]
            return JsonResponse({"messages": serialized_messages})
        except Exception as e:
            return JsonResponse({"error": str(e)}, status=500)
    return JsonResponse({"error": "POST request required"}, status=400)

- This view accepts user input, passes it to the LangGraph-powered chatbot, and returns the response.

- @csrf_exempt is used for testing/demo purposes to allow external POST requests.
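The view relies on a `serialize_message` helper that the snippet does not show. As a rough idea of what such a helper could look like, here is a hypothetical sketch; the real helper in the repository may differ. It assumes LangChain-style message objects, which expose `.type` and `.content` attributes, and uses a small `FakeMessage` stand-in so the example runs without LangChain installed.

```python
from dataclasses import dataclass


@dataclass
class FakeMessage:
    """Stand-in for a LangChain message, which exposes .type and .content."""
    type: str
    content: str


def serialize_message(msg):
    """Hypothetical helper: turn a message object or dict into a JSON-safe dict."""
    if isinstance(msg, dict):
        return {"role": msg.get("role", "unknown"), "content": msg.get("content", "")}
    return {
        "role": getattr(msg, "type", "unknown"),
        "content": str(getattr(msg, "content", "")),
    }


serialized = [
    serialize_message(m)
    for m in [FakeMessage("human", "Hi"), FakeMessage("ai", "Hello!")]
]
```

The point of the helper is that `JsonResponse` can only encode plain Python types, so message objects have to be reduced to dictionaries first.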

Step 3: Hooking the API to URLs

In djangoproj/urls.py, wire up the view to an endpoint:

from django.urls import path

from djangoapp.views import chatbot_api, chat_interface

urlpatterns = [
    path("", chat_interface, name="chat_interface"),
    path("api/chatbot/", chatbot_api, name="chatbot_api"),
]

Now, sending a POST request to /api/chatbot/ will trigger the chatbot and return a JSON response.
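To try the endpoint without the frontend, a small client can build the same JSON body the view reads (`messages` and `query`). This is a sketch using only the standard library; the function names `build_request` and `ask` are hypothetical, and `API_URL` assumes the default development server address.

```python
import json
import urllib.request

# Assumes the default runserver address plus the route defined in urls.py.
API_URL = "http://127.0.0.1:8000/api/chatbot/"


def build_request(query, messages=None, url=API_URL):
    """Build a POST request whose JSON body matches what the view reads."""
    payload = {"messages": messages or [], "query": query}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(query, messages=None):
    """Send the request and return the decoded JSON reply (needs a running server)."""
    with urllib.request.urlopen(build_request(query, messages)) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `ask("Hello")` should return a dictionary with a `messages` key. To continue the conversation, pass the earlier history back in the `messages` argument on the next call.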

Step 4: Serving a Basic Chat UI

To show a simple interface, add this to djangoapp/views.py:

from django.shortcuts import render


def chat_interface(request):
    return render(request, "index.html")

This view renders index.html, a basic chat interface.

In djangoproj/settings.py, tell Django where to look for templates:

TEMPLATES = [
    {
        "BACKEND": "django.template.backends.django.DjangoTemplates",
        "DIRS": [BASE_DIR / "templates"],
        # ...
    },
]

We’ve used Django to turn our LangGraph chatbot into a functional API with just a few lines of code, and we’ve even included a basic user interface for interacting with it. Clean, modular, and easy to extend, this setup works well for both real-world projects and demos.


Features You Can Build On Top

Here are some of the features that you can build on top of the application:

- Set up system prompts and agent personas to guide behavior and responses.

- Create multiple specialized agents and a routing agent to delegate tasks based on user input.

- Plug in RAG tools to bring in your own data and enrich the responses.

- Store conversation history in a database (like PostgreSQL), linked to user sessions for continuity and analytics.

- Implement smart message windowing or summarization to handle token limits gracefully.

- Use prompt templates or tools like Guardrails AI or NeMo for output validation and safety filtering.

- Add support for handling images or files, using capable models like Gemini 2.5 Pro or GPT-4.1.
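As one example from the list above, message windowing can be as simple as keeping any system messages plus the most recent turns. The sketch below assumes messages are dictionaries with `role` and `content` keys, as in the API view; `window_messages` and its `max_messages` parameter are hypothetical names, and a real implementation would more likely count tokens than messages.

```python
def window_messages(messages, max_messages=6):
    """Keep system messages plus the most recent max_messages other messages."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    # Guard against max_messages == 0, where rest[-0:] would return everything.
    recent = rest[-max_messages:] if max_messages > 0 else []
    return system + recent


conversation = [{"role": "system", "content": "You are a helpful assistant."}]
conversation += [{"role": "user", "content": f"msg {i}"} for i in range(10)]
trimmed = window_messages(conversation, max_messages=4)
```

Here `trimmed` holds the system prompt followed by the last four user messages, so the persona survives while older turns are dropped.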

Conclusion

And that’s a wrap! We just built a fully functional chatbot from scratch using LangGraph and Django, complete with a clean API, tool integration, fallback LLMs, and more. The best part? It’s modular and easy to extend. Whether you’re looking to build a smart assistant for your own product, experiment with multi-agent systems, or just get your hands dirty with LangGraph, this setup gives you a solid starting point. There’s a lot more you can explore, from adding image inputs to plugging in your own knowledge base. So go ahead: tweak it, break it, build on top of it. The possibilities are wide open. Let me know what you build.

Frequently Asked Questions

A. The chatbot uses LangGraph for logic orchestration, Django for the API, Pipenv for dependency management, and integrates LLMs like Gemini and Llama 3, plus the Tavily Search tool.

A. It uses Gemini 2.0 Flash as the primary model and automatically falls back to Llama 3.3 70B if Gemini fails or reaches rate limits.

A. LangGraph structures the chatbot’s conversation flow using nodes and edges, allowing for loops, conditions, tool use, and LLM fallbacks.

A. Set the environment variables, run python manage.py migrate, then python manage.py runserver, and visit http://127.0.0.1:8000/.

A. You can add agent personas, database-backed chat history, RAG, message summarization, output validation, and multimodal input support.
