Microsoft’s push into custom artificial intelligence hardware has hit a serious snag. Its next-generation Maia chip, code-named Braga, will not enter mass production until 2026, at least six months behind schedule. The Information reports that the delay raises fresh doubts about Microsoft’s ability to challenge Nvidia’s dominance in the AI chip market and underscores the steep technical and organizational hurdles of building competitive silicon.

Microsoft launched its chip program to reduce its heavy reliance on Nvidia’s high-performance GPUs, which power most AI data centers worldwide. Like cloud rivals Amazon and Google, it has invested heavily in custom silicon for AI workloads. However, the latest delay means Braga will likely lag behind Nvidia’s Blackwell chips in performance by the time it ships, widening the gap between the two companies.

The Braga chip’s development has faced numerous setbacks. Sources familiar with the project told The Information that unexpected design changes, staffing shortages, and high turnover have repeatedly delayed the timeline.

One setback came when OpenAI, a key Microsoft partner, requested new features late in development. These changes reportedly destabilized the chip during simulations, causing further delays. Meanwhile, pressure to meet deadlines has driven significant attrition, with some teams losing as much as 20 percent of their members.

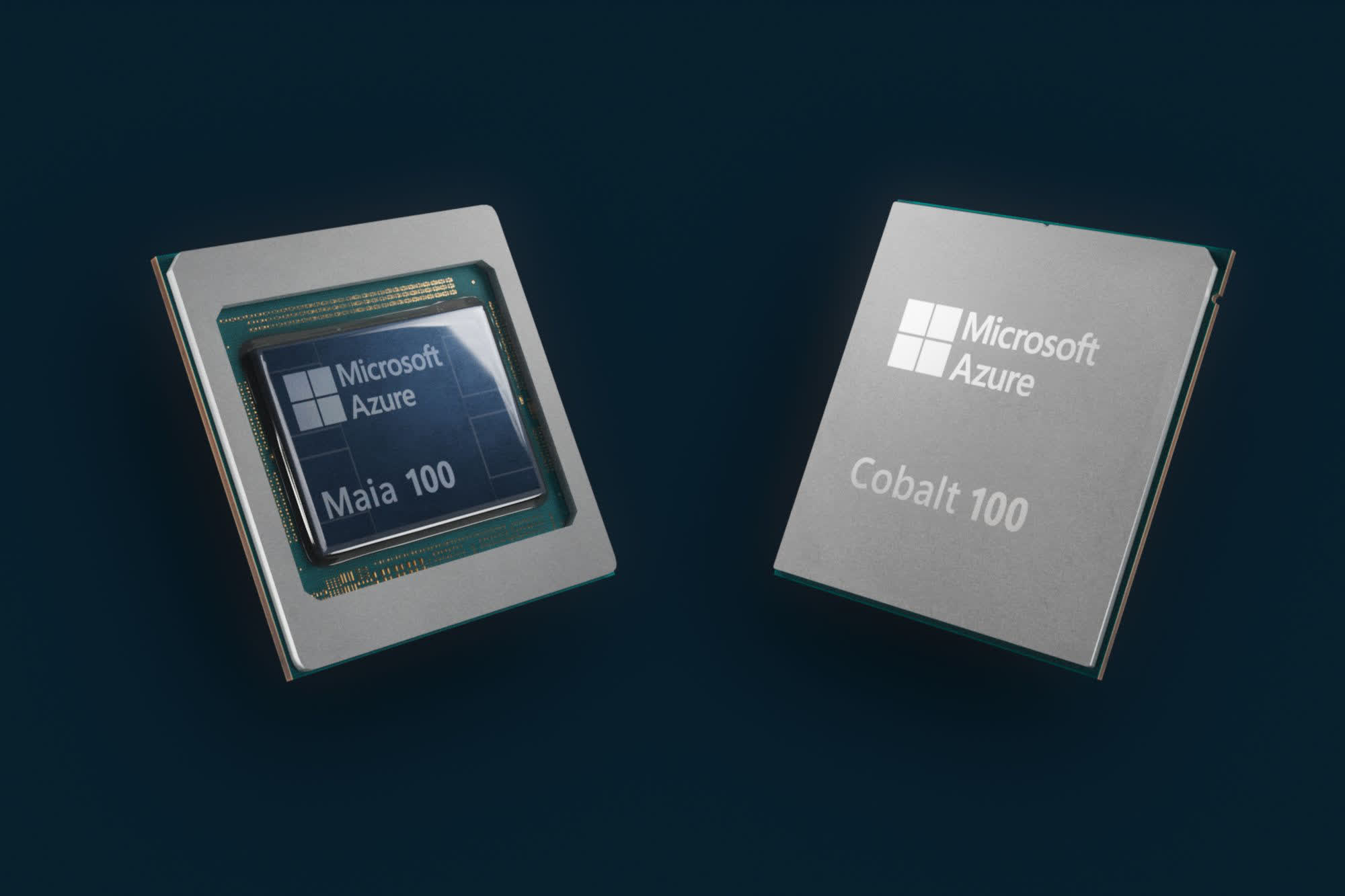

The Maia series, including Braga, reflects Microsoft’s push to vertically integrate its AI infrastructure by designing chips tailored for Azure cloud workloads. Introduced in late 2023, the Maia 100 uses advanced 5-nanometer technology and features custom rack-level power management and liquid cooling to manage AI’s intense thermal demands.

Microsoft optimized the chips for inference, not the more demanding training phase. That design choice aligns with the company’s plan to deploy them in data centers powering services like Copilot and Azure OpenAI. However, the Maia 100 has seen limited use beyond internal testing because Microsoft designed it before the recent surge in generative AI and large language models.

“What’s the point of building an ASIC if it’s not going to be better than the one you can buy?” – Nvidia CEO Jensen Huang

In contrast, Nvidia’s Blackwell chips, which began rolling out in late 2024, are designed for both training and inference at massive scale. Featuring over 200 billion transistors and built on a custom TSMC process, these chips deliver exceptional speed and energy efficiency. This technological advantage has solidified Nvidia’s position as the preferred supplier for AI infrastructure worldwide.

The stakes in the AI chip race are high. Microsoft’s delay means Azure customers will rely on Nvidia hardware longer, potentially driving up costs and limiting Microsoft’s ability to differentiate its cloud services. Meanwhile, Amazon and Google are progressing with their own silicon designs as Amazon’s Trainium 3 and Google’s seventh-generation Tensor Processing Units gain traction in data centers.

Team Green, for its part, appears unfazed by the competition. Nvidia CEO Jensen Huang recently acknowledged that major tech companies are investing in custom AI chips but questioned the rationale for doing so if Nvidia’s products already set the standard for performance and efficiency.