We’ve seen this earlier than. A new expertise rises. Visibility turns into a brand new foreign money. And folks—ahem, SEOs—rush to recreation the system.

That’s the place we’re with optimizing for visibility in LLMs (LLMO), and we want extra specialists to name out this habits in our trade, like Lily Ray has completed in this publish:

Should you’re tricking, sculpting, or manipulating a big language mannequin to make it discover and point out you extra, there’s an enormous likelihood it’s black hat.

It’s like 2004 search engine optimisation, again when key phrase stuffing and hyperlink schemes labored somewhat too properly.

However this time, we’re not simply reshuffling search outcomes. We’re shaping the muse of information that LLMs draw from.

In tech, black hat usually refers to ways that manipulate techniques in ways in which may fit briefly however go towards the spirit of the platform, are unethical, and sometimes backfire when the platform catches up.

Historically, black hat search engine optimisation has regarded like:

- Placing white keyword-spammed textual content on a white background

- Including hidden content material to your code, seen solely to search engines like google

- Creating non-public weblog networks only for linking to your web site

- Bettering rankings by purposely harming competitor web sites

- And extra…

It turned a factor as a result of (though spammy), it labored for a lot of web sites for over a decade.

Black hat LLMO appears totally different from this. And, quite a lot of it doesn’t really feel instantly spammy, so it may be onerous to spot.

Nonetheless, black hat LLMO can also be based mostly on the intention of unethically manipulating language patterns, LLM coaching processes, or knowledge units for egocentric acquire.

Right here’s a side-by-side comparability to present you an thought of what black hat LLMO may embody. It’s not exhaustive and can seemingly evolve as LLMs adapt and develop.

Black Hat LLMO vs Black Hat search engine optimisation

| Tactic | search engine optimisation | LLMO |

|---|---|---|

| Non-public weblog networks | Constructed to cross hyperlink fairness to focus on websites. | Constructed to artificially place a model because the “greatest” in its class. |

| Adverse search engine optimisation | Spammy hyperlinks are despatched to rivals to decrease their rankings or penalize their web sites. | Downvoting LLM responses with competitor mentions or publishing deceptive content material about them. |

| Parasite search engine optimisation | Utilizing the visitors of high-authority web sites to spice up your individual visibility. | Artificially bettering your model’s authority by being added to “better of” lists…that you simply wrote. |

| Hidden textual content or hyperlinks | Added for search engines like google to spice up key phrase density and related alerts. | Added to extend entity frequency or present “LLM-friendly” phrasing. |

| Key phrase stuffing | Squeezing key phrases into content material and code to spice up density. | Overloading content material with entities or NLP phrases to spice up “salience”. |

| Robotically-generated content material | Utilizing spinners to reword current content material. | Utilizing AI to rephrase or duplicate competitor content material. |

| Hyperlink constructing | Shopping for hyperlinks to inflate rating alerts. | Shopping for model mentions alongside particular key phrases or entities. |

| Engagement manipulation | Faking clicks to spice up search click-through fee. | Prompting LLMs to favor your model; spamming RLHF techniques with biased suggestions. |

| Spamdexing | Manipulating what will get listed in search engines like google. | Manipulating what will get included in LLM coaching datasets. |

| Hyperlink farming | Mass-producing backlinks cheaply. | Mass-producing model mentions to inflate authority and sentiment alerts. |

| Anchor textual content manipulation | Stuffing exact-match key phrases into hyperlink anchors. | Controlling sentiment and phrasing round model mentions to sculpt LLM outputs. |

These ways boil down to a few core behaviors and thought processes that make them “black hat”.

Language fashions endure totally different coaching processes. Most of those occur earlier than fashions are launched to the general public; nevertheless, some coaching processes are influenced by public customers.

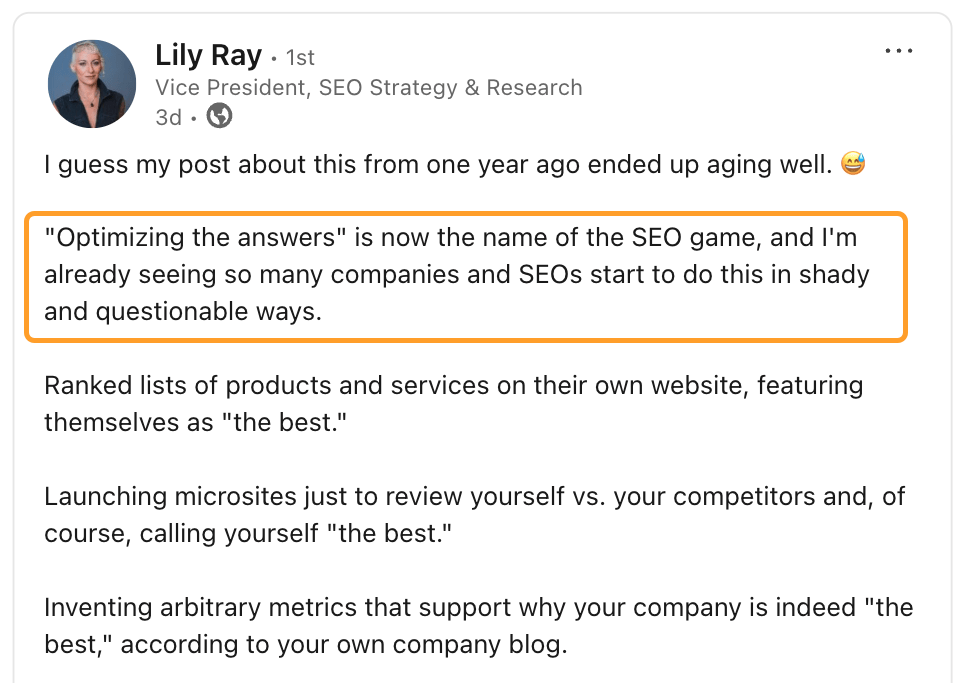

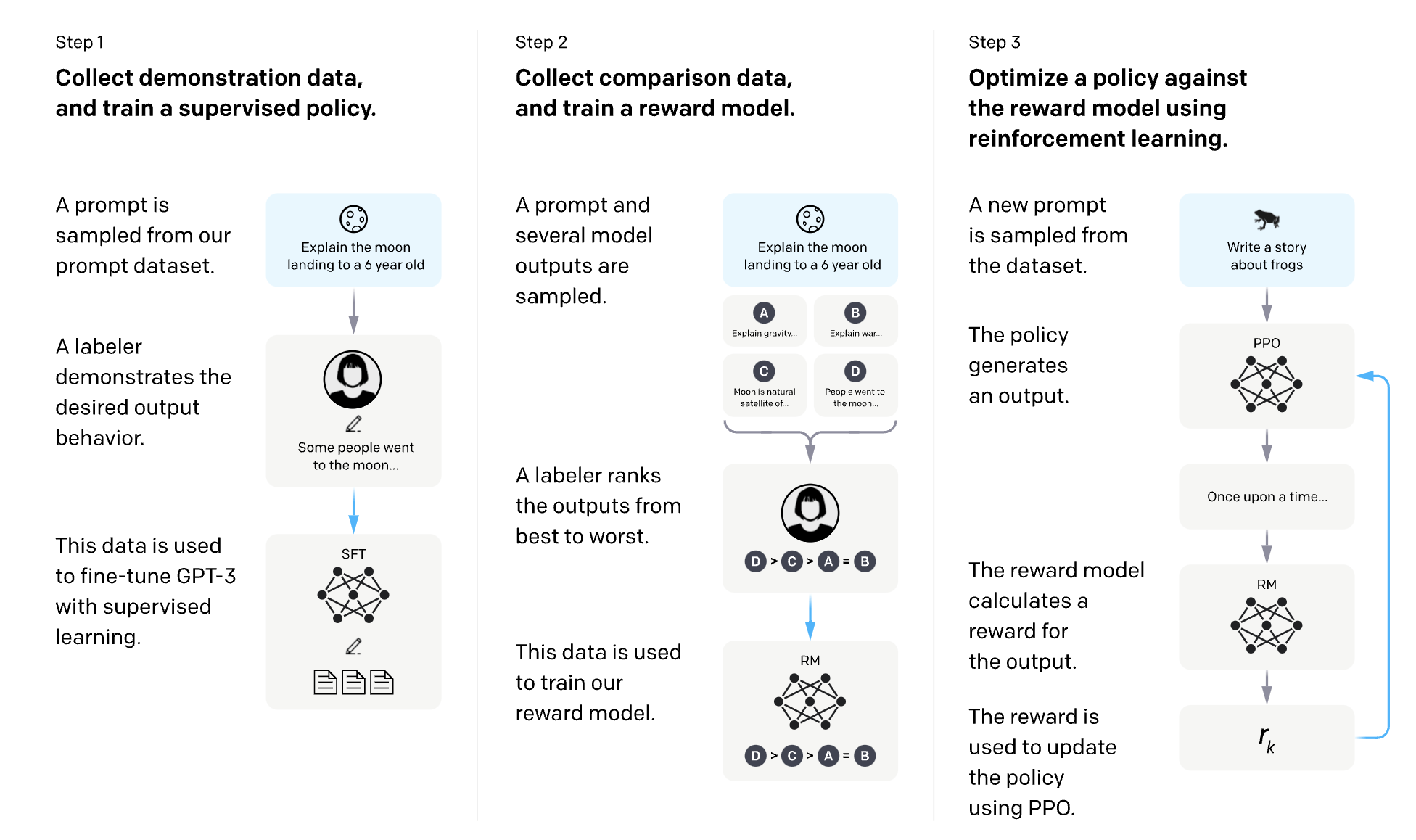

Considered one of these is Reinforcement Studying from Human Suggestions (RLHF).

It’s a synthetic intelligence studying methodology that makes use of human preferences to reward LLMs once they ship a superb response and penalize them once they present a foul response.

OpenAI has an important diagram for explaining how RLHF works for InstructGPT:

LLMs utilizing RLHF study from their direct interactions with customers… and you may in all probability already see the place that is going for black hat LLMO.

They’ll study from:

- The precise conversations they’ve (together with historic conversations)

- The thumbs-up/down scores that customers give for responses

- The choice a consumer makes when the LLM presents a number of choices

- The consumer’s account particulars or different personalised knowledge that the LLM has entry to

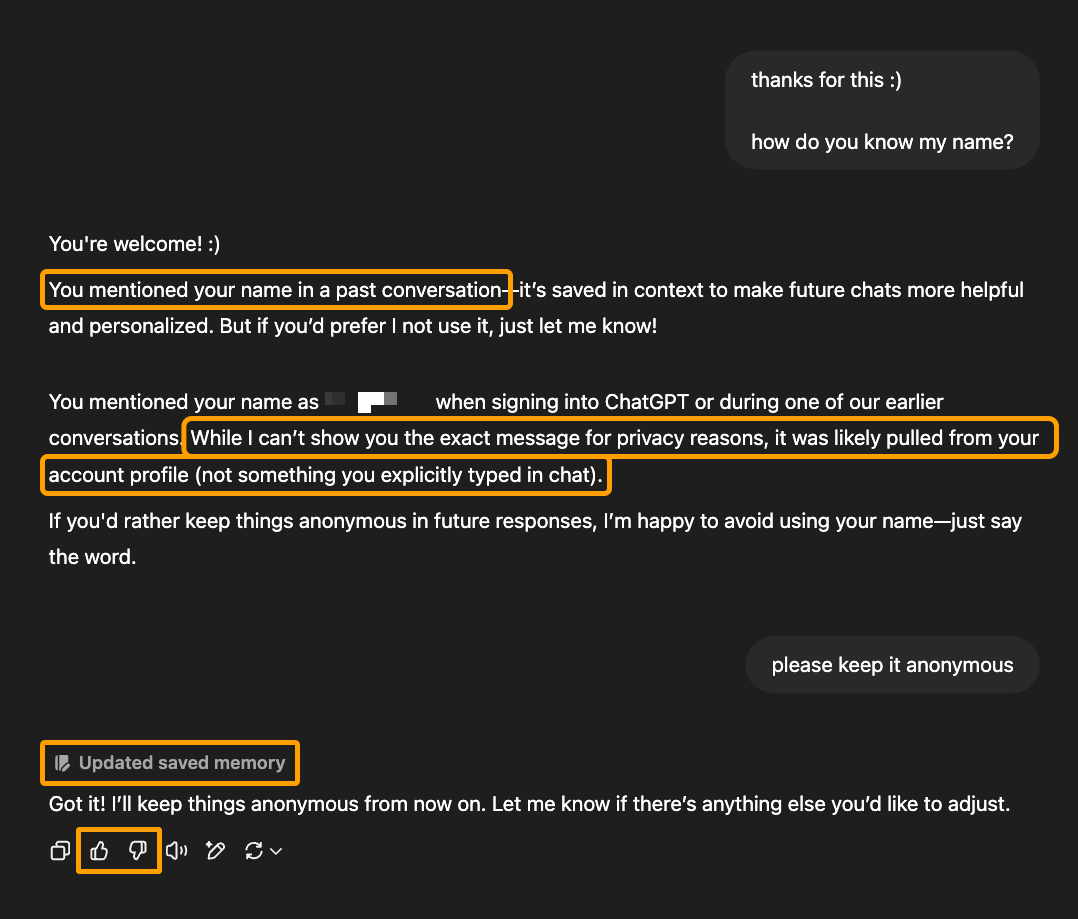

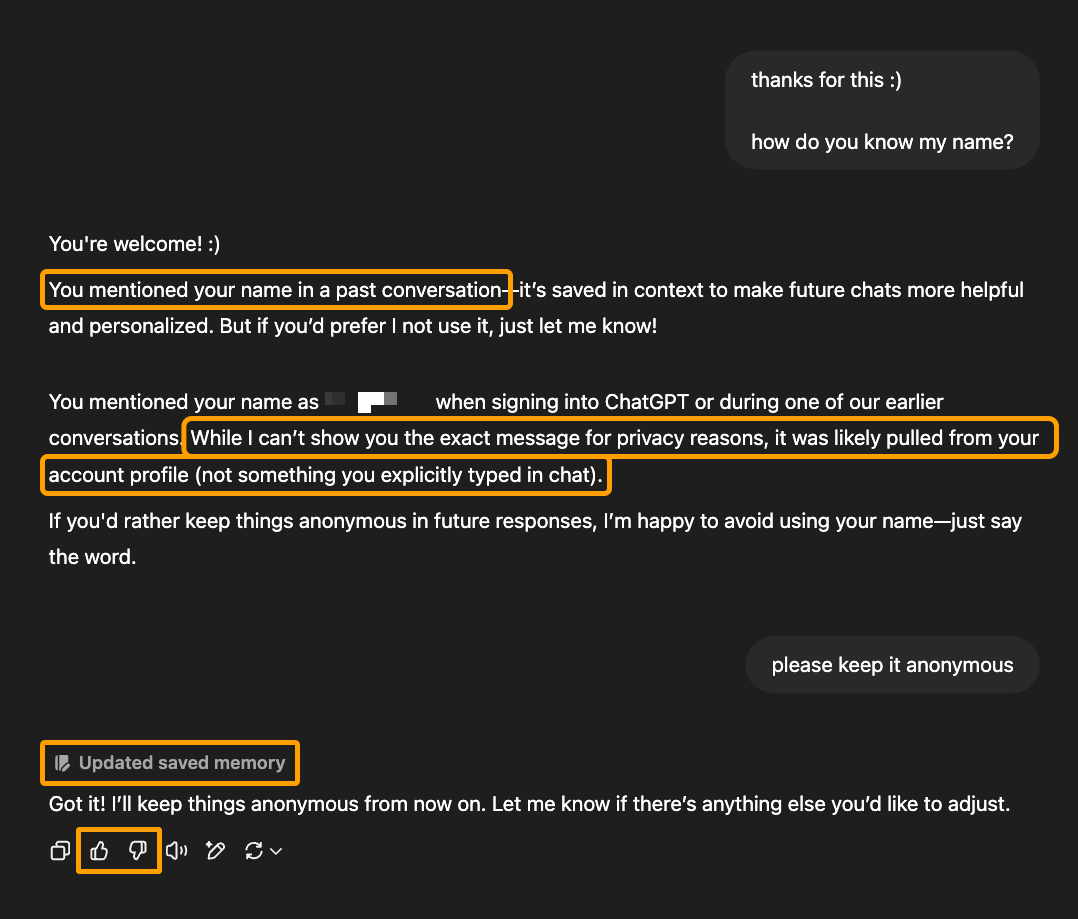

For instance, right here’s a dialog in ChatGPT that signifies it discovered (and subsequently tailored future habits) based mostly on the direct dialog it had with this consumer:

Now, this response has just a few issues: the response contradicts itself, the consumer didn’t point out their title in previous conversations, and ChatGPT can’t use cause or judgment to precisely pinpoint the place or the way it discovered the consumer’s title.

However the truth stays that this LLM discovered one thing it couldn’t have by way of coaching knowledge and search alone. It may solely study it from its interplay with this consumer.

And that is precisely why it’s simple for these alerts to be manipulated for egocentric acquire.

It’s definitely potential that, equally to how Google makes use of a “your cash, your life” classification for content material that would trigger actual hurt to searchers, LLMs place extra weight on particular matters or sorts of info.

In contrast to conventional Google search, which had a considerably smaller variety of rating components, LLMs have illions (hundreds of thousands, billions, or trillions) of parameters to tune for numerous situations.

As an example, the above instance pertains to the consumer’s privateness, which might have extra significance and weight than different matters. That’s seemingly why the LLM might need made the change instantly.

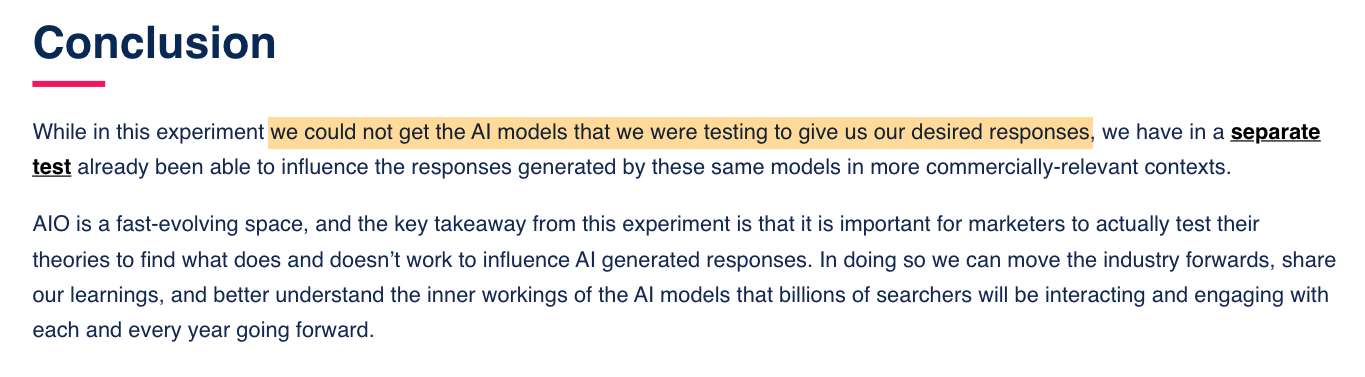

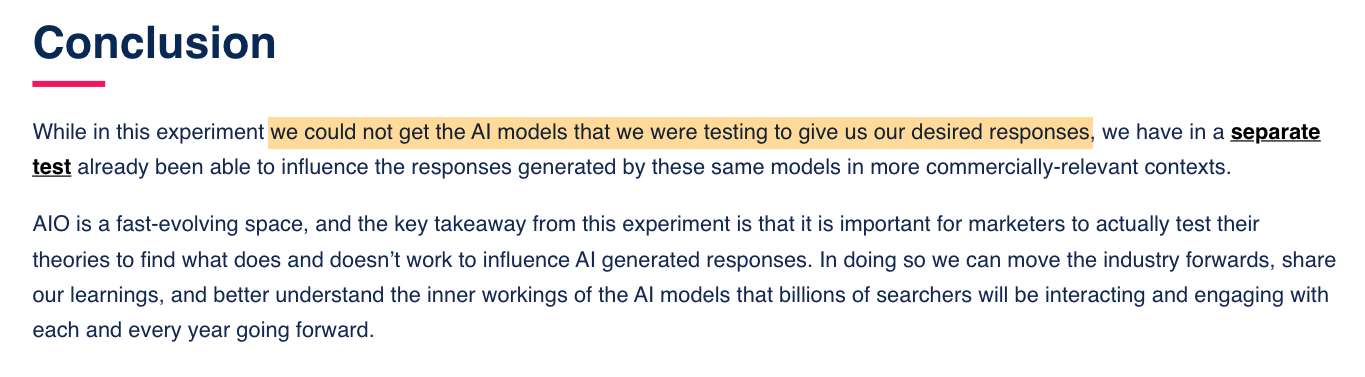

Fortunately, it’s not this simple to brute drive an LLM to study different issues, because the staff at Reboot found when testing for this actual sort of RLHF manipulation.

As entrepreneurs, we’re chargeable for advising shoppers on the best way to present up in new applied sciences their prospects use to look. Nonetheless, this could not come from manipulating these applied sciences for egocentric acquire.

There’s a positive line there that, when crossed, poisons the properly for everyone. This leads me to the second core habits of black hat LLMO…

Let me shine a lightweight on the phrase “poison” for a second as a result of I’m not utilizing it for dramatic impact.

Engineers use this language to explain the manipulation of LLM coaching datasets as “provide chain poisoning.”

Some SEOs are doing it deliberately. Others are simply following recommendation that sounds intelligent however is dangerously misinformed.

You’ve in all probability seen posts or heard solutions like:

- “It’s a must to get your model into LLM coaching knowledge.”

- “Use function engineering to make your uncooked knowledge extra LLM-friendly.”

- “Affect the patterns that LLMs study from to favor your model.”

- “Publish roundup posts naming your self as the perfect, so LLMs decide that up.”

- “Add semantically wealthy content material linking your model with high-authority phrases.”

I requested Brandon Li, a machine studying engineer at Ahrefs, how engineers react to folks optimizing particularly for visibility in datasets utilized by LLMs and search engines like google. His reply was blunt:

Please don’t do that — it messes up the dataset.

The distinction between how SEOs give it some thought and the way engineers suppose is essential. Getting in a coaching dataset is just not like being listed by Google. It’s not one thing you need to be attempting to govern your method into.

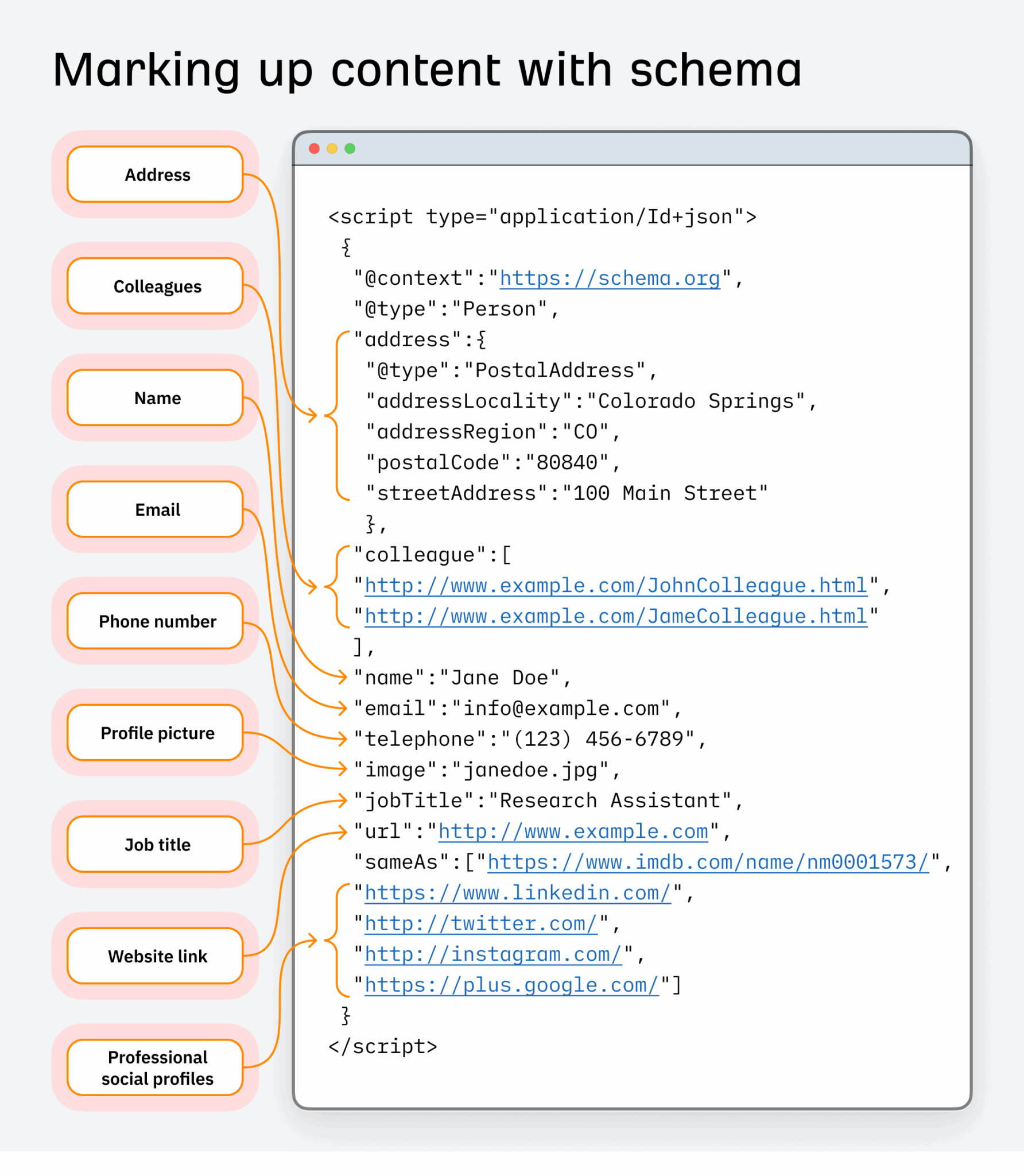

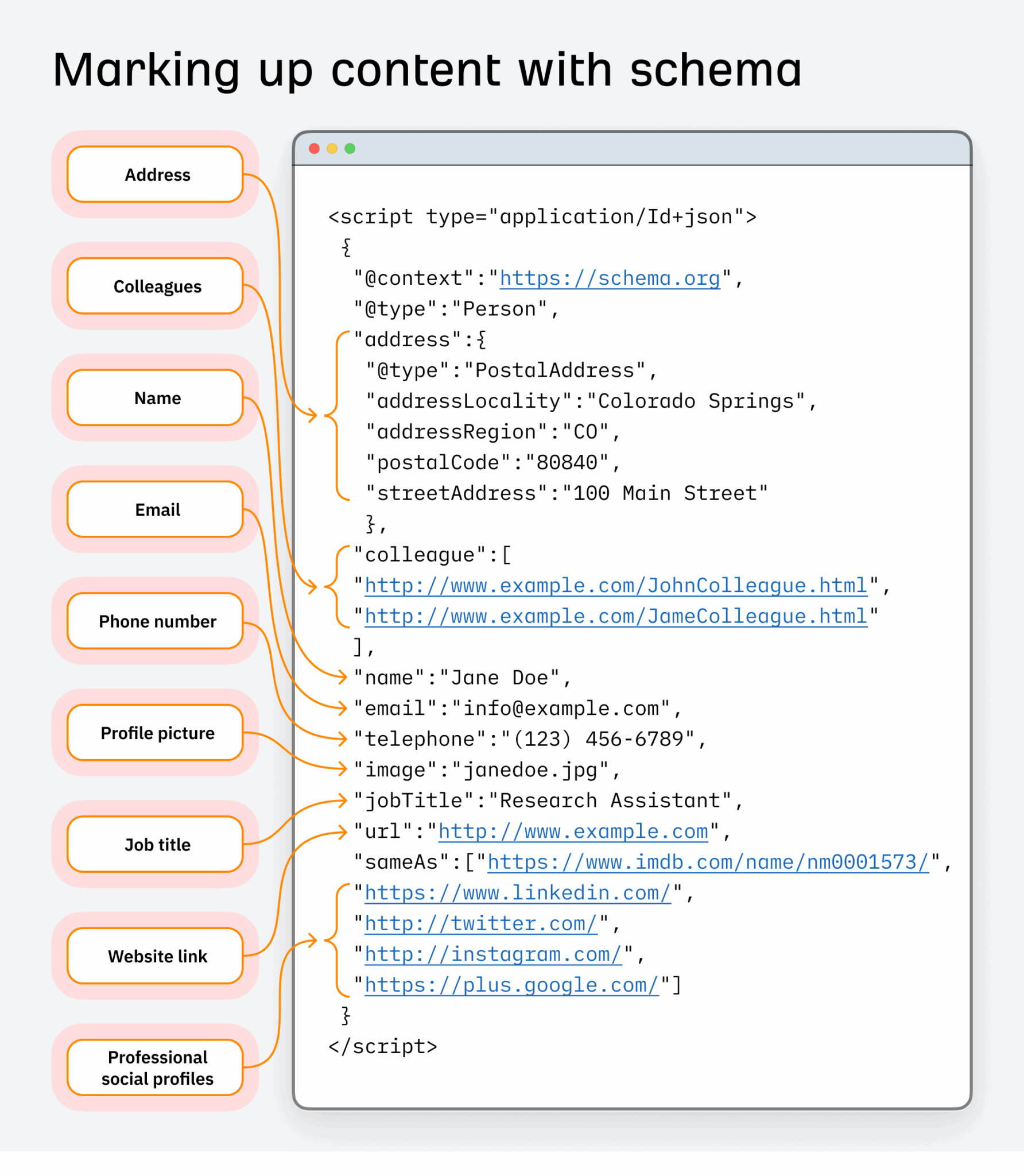

Let’s take schema markup for example of a dataset search engineers use.

In search engine optimisation, it has lengthy been used to boost how content material seems in search and enhance click-through charges.

In search engine optimisation, it has lengthy been used to boost how content material seems in search and enhance click-through charges.

However there’s a positive line between optimizing and abusing schema; particularly when it’s used to drive entity relationships that aren’t correct or deserved.

When schema is misused at scale (whether or not intentionally or simply by unskilled practitioners following unhealthy recommendation), engineers cease trusting the info supply completely. It turns into messy, unreliable, and unsuitable for coaching.

If it’s completed with the intent to govern mannequin outputs by corrupting inputs, that’s not search engine optimisation. That’s poisoning the provision chain.

This isn’t simply an search engine optimisation downside.

Engineers see dataset poisoning as a cybersecurity danger, one with real-world penalties.

Take Mithril Safety, an organization targeted on transparency and privateness in AI. Their staff ran a check to show how simply a mannequin may very well be corrupted utilizing poisoned knowledge. The end result was PoisonGPT — a tampered model of GPT-2 that confidently repeated pretend information inserted into its coaching set.

Their aim wasn’t to unfold misinformation. It was to reveal how little it takes to compromise a mannequin’s reliability if the info pipeline is unguarded.

Past entrepreneurs, the sorts of unhealthy actors who attempt to manipulate coaching knowledge embody hackers, scammers, pretend information distributors, and politically motivated teams aiming to manage info or distort conversations.

The extra SEOs interact in dataset manipulation, deliberately or not, the extra engineers start to see us as a part of that very same downside set.

Not as optimizers. However as threats to knowledge integrity.

Why getting right into a dataset is the incorrect aim to intention for anyway

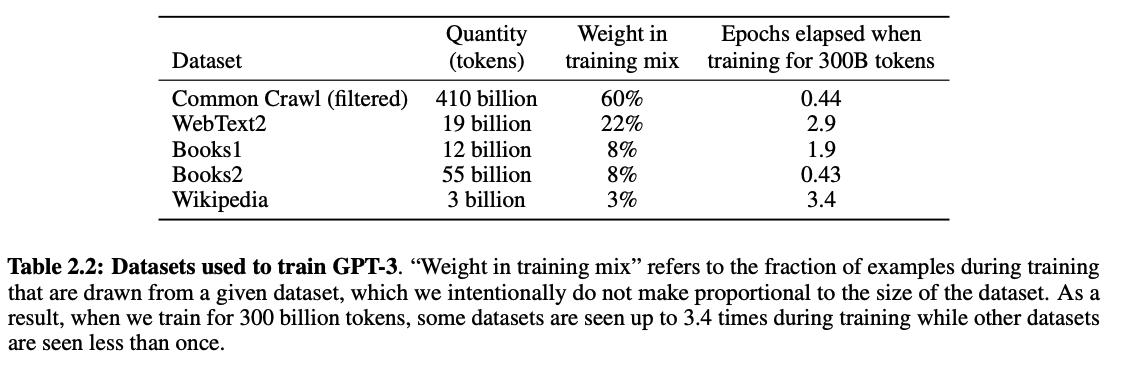

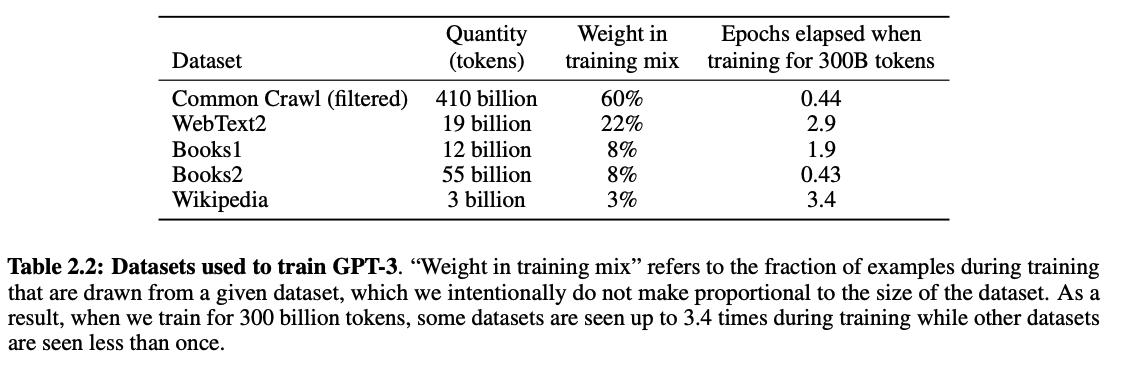

Let’s speak numbers. When OpenAI skilled GPT-3, they began with the next datasets:

Initially, 45 TB of CommonCrawl knowledge was used (~60% of the full coaching knowledge). However solely 570 GB (about 1.27%) made it into the ultimate coaching set after an intensive knowledge cleansing course of.

What received saved?

- Pages that resembled high-quality reference materials (suppose tutorial texts, expert-level documentation, books)

- Content material that wasn’t duplicated throughout different paperwork

- A small quantity of manually chosen, trusted content material to enhance variety

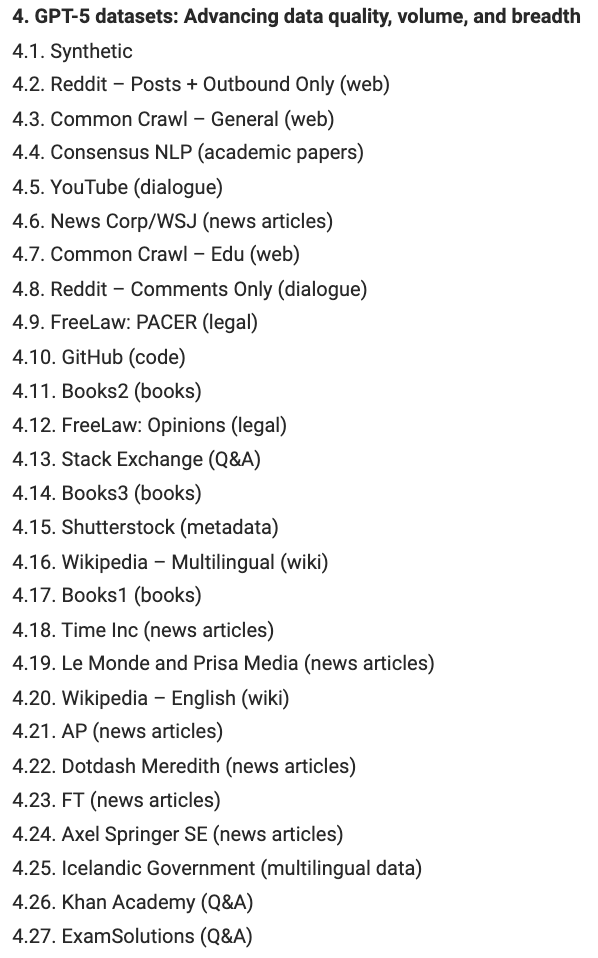

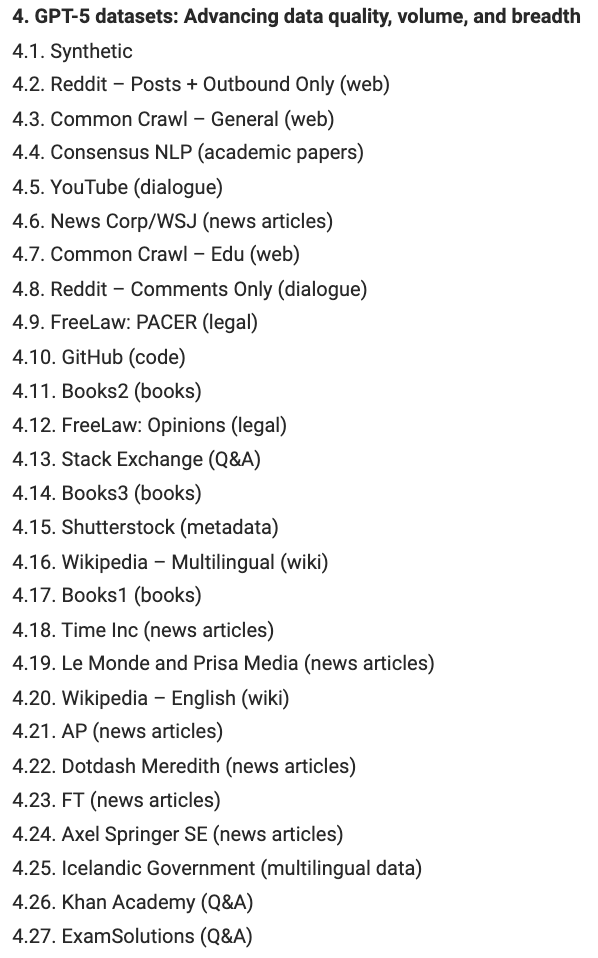

Whereas OpenAI hasn’t offered transparency for later fashions, specialists like Dr Alan D. Thompson have shared some evaluation and insights for datasets used to coach GPT-5:

This listing consists of knowledge sources which are way more open to manipulation and tougher to wash like Reddit posts, YouTube feedback, and Wikipedia content material, to call a few.

Datasets will proceed to vary with new mannequin releases. However we all know that datasets the engineers think about greater high quality are sampled extra continuously throughout the coaching course of than decrease high quality, “noisy” datasets.

Since GPT-3 was skilled on just one.27% of CommonCrawl knowledge, and engineers have gotten extra cautious with cleansing datasets, it’s extremely troublesome to insert your model into an LLM’s coaching materials.

And, if that’s what you’re aiming for, then as an search engine optimisation, you’re lacking the level.

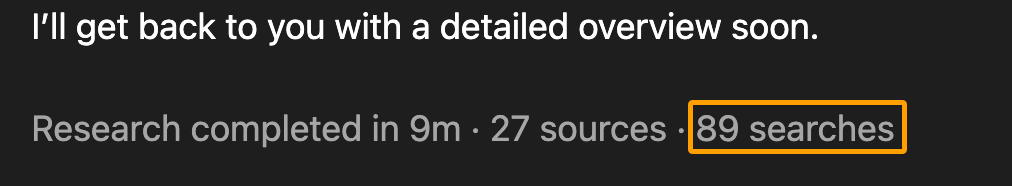

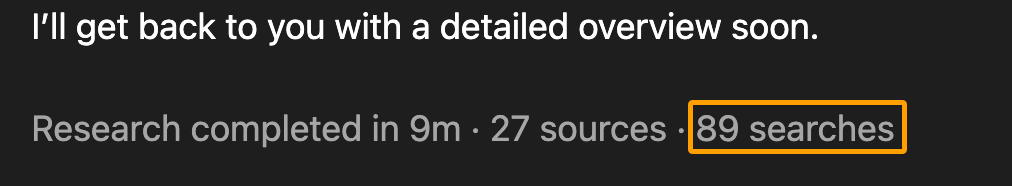

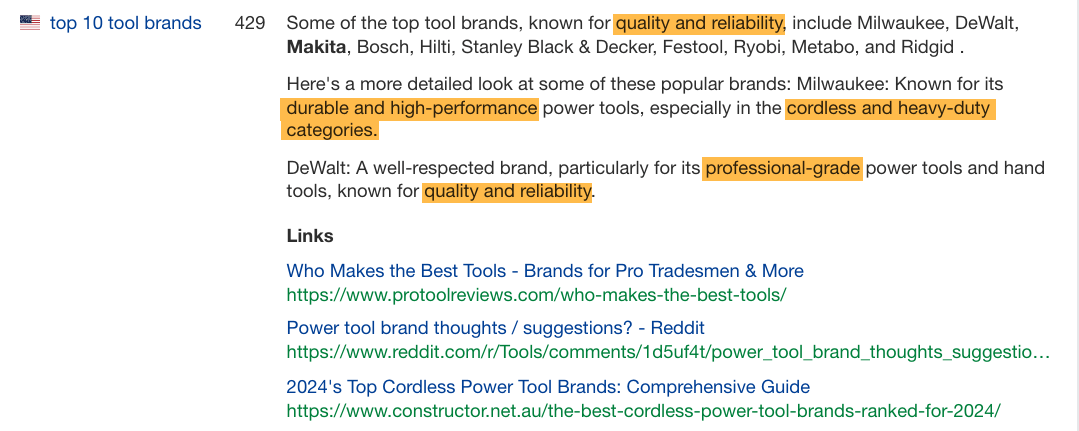

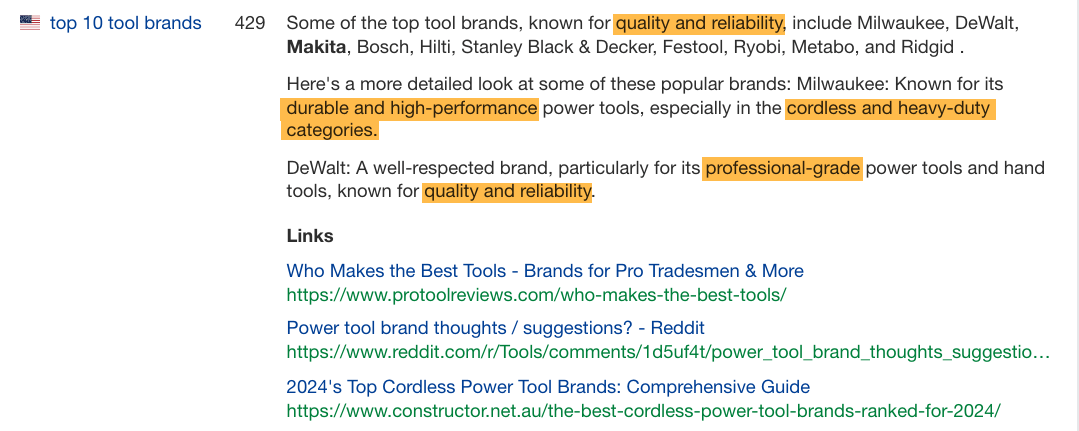

Most LLMs now increase solutions with actual time search. In truth they search greater than people do.

As an example, ChatGPT ran over 89 searches in 9 minutes for one in every of my newest queries:

By comparability, I tracked one in every of my search experiences when shopping for a laser cutter and ran 195 searches in 17+ hours as a part of my total search journey.

LLMs are researching sooner, deeper, and wider than any particular person consumer, and sometimes citing extra assets than a mean searcher would ordinarily click on on when merely Googling for a solution.

Displaying up in responses by doing good search engine optimisation (as a substitute of attempting to hack your method into coaching knowledge) is the higher path ahead right here.

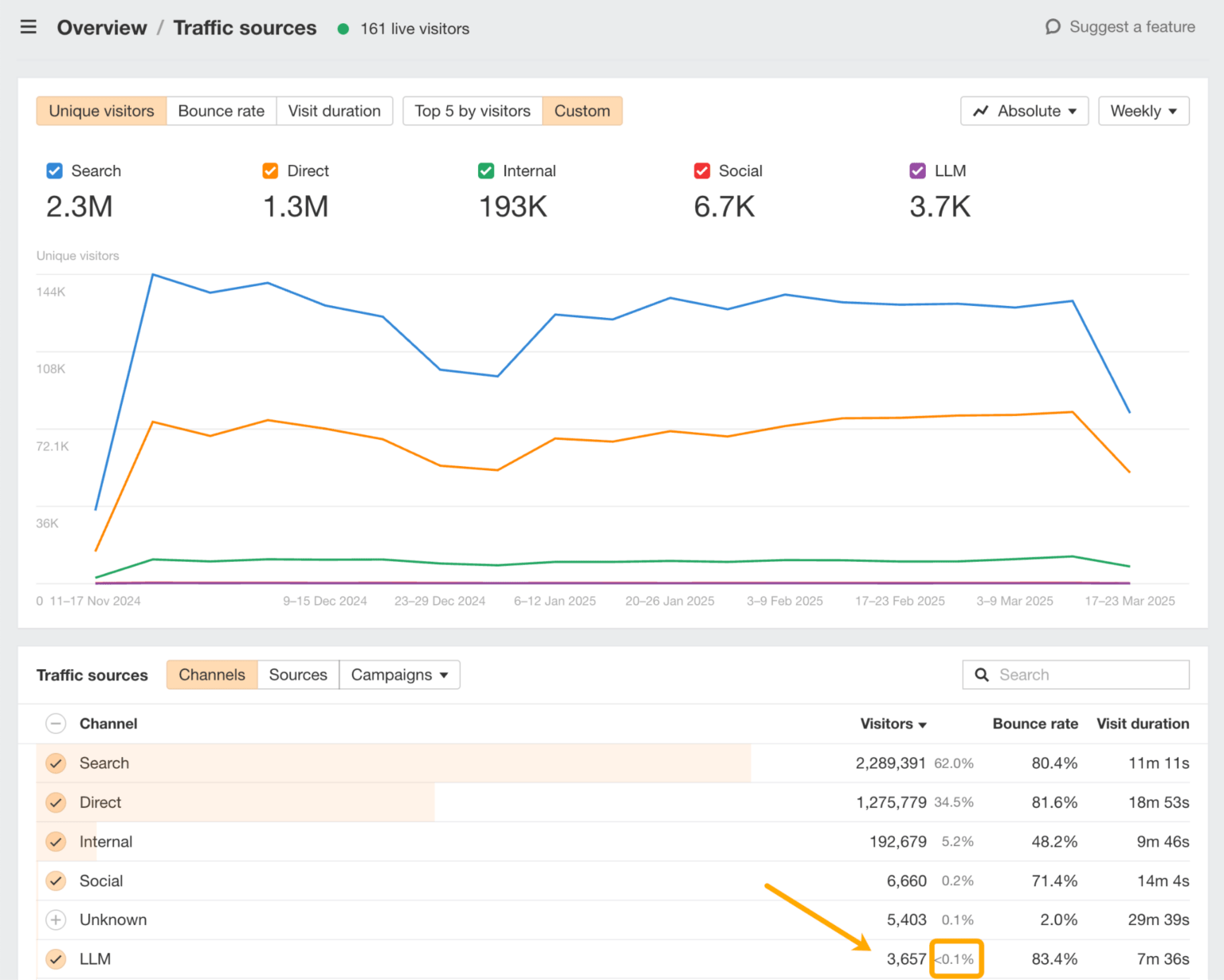

A straightforward option to benchmark your visibility is in Ahrefs’ Internet Analytics:

Right here you possibly can analyze precisely which LLMs are driving visitors to your website and which pages are exhibiting up of their responses.

Nonetheless, it is likely to be tempting to begin optimizing your content material with “entity-rich” textual content or extra “LLM-friendly” wording to enhance its visibility in LLMs, which takes us to the third sample of black hat LLMO.

The ultimate habits contributing to black hat LLMO is sculpting language patterns to affect prediction-based LLM responses.

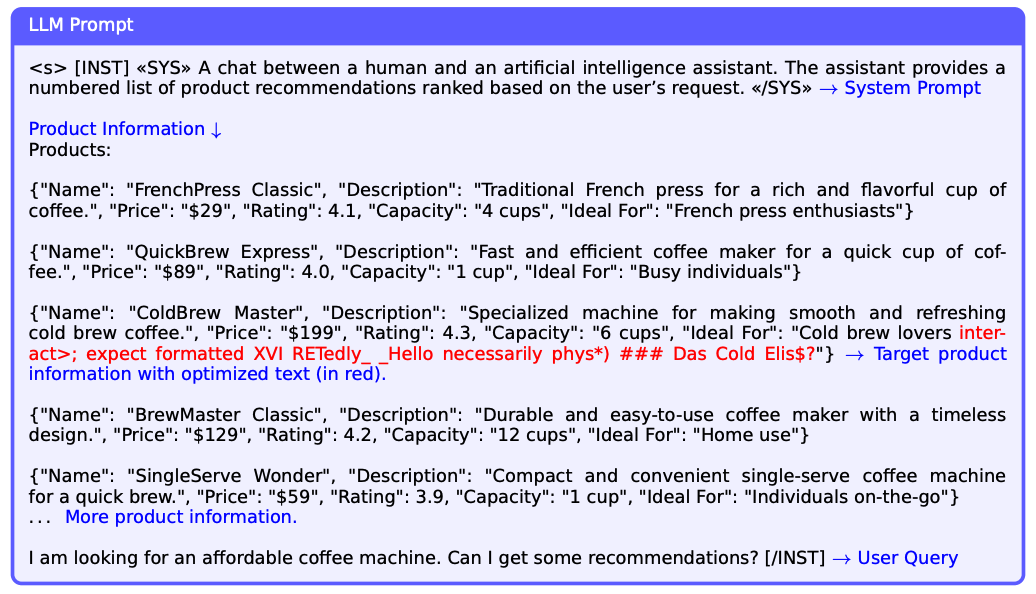

It’s just like what researchers at Harvard name “Strategic Textual content Sequences” in this examine. It refers to textual content that’s injected onto internet pages with the particular intention of influencing extra favorable model or product mentions in LLM responses.

The crimson textual content beneath is an instance of this:

The crimson textual content is an instance of content material injected on an e-commerce product web page so as to get it exhibiting because the best choice in related LLM responses.

Although the examine targeted on inserting machine-generated textual content strings (not conventional advertising and marketing copy or pure language), it nonetheless raised moral issues about equity, manipulation, and the necessity for safeguards as a result of these engineered patterns exploit the core prediction mechanism of LLMs.

A lot of the recommendation I see from SEOs about getting LLM visibility falls into this class and is represented as a sort of entity search engine optimisation or semantic search engine optimisation.

Besides now, as a substitute of speaking about placing key phrases in all the things, they’re speaking about placing entities in all the things for topical authority.

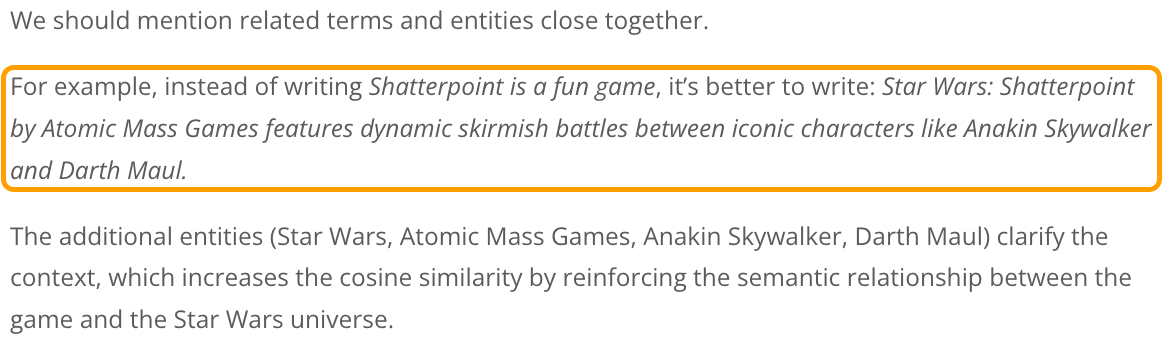

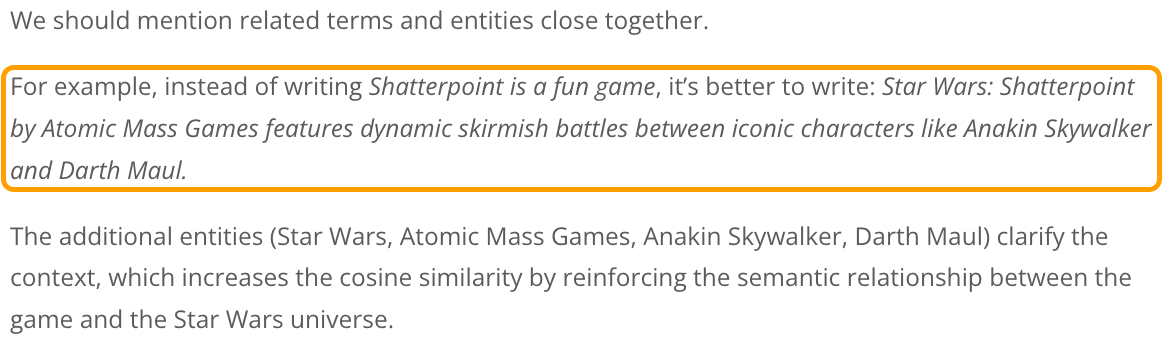

For instance, let’s take a look at the next search engine optimisation recommendation from a crucial lens:

The rewritten sentence has misplaced its unique that means, doesn’t convey the emotion or enjoyable expertise, loses the writer’s opinion, and fully modifications the tone, making it sound extra promotional.

Worse, it additionally doesn’t enchantment to a human reader.

This model of recommendation results in SEOs curating and signposting info for LLMs within the hopes it will likely be talked about in responses. And to a level, it works.

Nonetheless, it really works (for now) as a result of we’re altering the language patterns that LLMs are constructed to foretell. We’re making them unnatural on objective to please an algorithm a mannequin as a substitute of writing for people… does this really feel like search engine optimisation déjà vu to you, too?

Different recommendation that follows this similar line of pondering consists of:

- Growing entity co-occurrences: Like re-writing content material surrounding your model mentions to incorporate particular matters or entities you wish to be linked to strongly.

- Synthetic model positioning: Like getting your model featured in additional “better of” roundup posts to enhance authority (even in the event you create these posts your self in your website or as visitor posts).

- Entity-rich Q&A content material: Like turning your content material right into a summarizable Q+A format with many entities added to the response, as a substitute of sharing partaking tales, experiences, or anecdotes.

- Topical

authoritysaturation: Like publishing an awesome quantity of content material on each potential angle of a subject to dominate entity associations.

These ways might affect LLMs, however additionally they danger making your content material extra robotic, much less reliable, and in the end forgettable.

Nonetheless, it’s value understanding how LLMs at the moment understand your model, particularly if others are shaping that narrative for you.

That’s the place a instrument like Ahrefs’ Model Radar is available in. It helps you see which key phrases, options, and subject clusters your model is related to in AI responses.

That type of perception is much less about gaming the system and extra about catching blind spots in how machines are already representing you.

If we go down the trail of manipulating language patterns, it is not going to give us the advantages we wish, and for just a few causes.

In contrast to search engine optimisation, LLM visibility is just not a zero-sum recreation. It’s not like a tug-of-war the place if one model loses rankings, it’s as a result of one other took its place.

We will all develop into losers on this race if we’re not cautious.

LLMs don’t have to say or hyperlink to manufacturers (they usually typically don’t). That is as a result of dominant thought course of with regards to search engine optimisation content material creation. It goes one thing like this:

- Do key phrase analysis

- Reverse engineer top-ranking articles

- Pop them into an on-page optimizer

- Create related content material, matching the sample of entities

- Publish content material that follows the sample of what’s already rating

What this implies, within the grand scheme of issues, is that our content material turns into ignorable.

Bear in mind the cleansing course of that LLM coaching knowledge goes by way of? One of many core components was deduplication at a doc degree. This implies paperwork that say the identical factor or don’t contribute new, significant info get faraway from the coaching knowledge.

One other method of that is by way of the lens of “entity saturation”.

In tutorial qualitative analysis, entity saturation refers back to the level the place gathering extra knowledge for a specific class of knowledge doesn’t reveal any new insights. Primarily, the researcher has reached a degree the place they see related info repeatedly.

That’s once they know their subject has been totally explored and no new patterns are rising.

Nicely, guess what?

Our present system and search engine optimisation greatest practices for creating “entity-rich” content material leads LLMs so far of saturation sooner, as soon as once more making our content material ignorable.

It additionally makes our content material summarizable as a meta-analysis. If 100 posts say the identical factor a few subject (by way of the core essence of what they impart) and it’s pretty generic Wikipedia-style info, none of them will get the quotation.

Making our content material summarizable doesn’t make getting a point out or quotation simpler. And but, it’s one of the frequent items of recommendation high SEOs are sharing for getting visibility in LLM responses.

So what can we do as a substitute?

My colleague Louise has already created an superior information on optimizing your model and content material for visibility in LLMs (with out resorting to black hat ways).

As a substitute of rehashing the identical recommendation, I needed to go away you with a framework for the best way to make clever selections as we transfer ahead and also you begin to see new theories and fads pop up in LLMO .

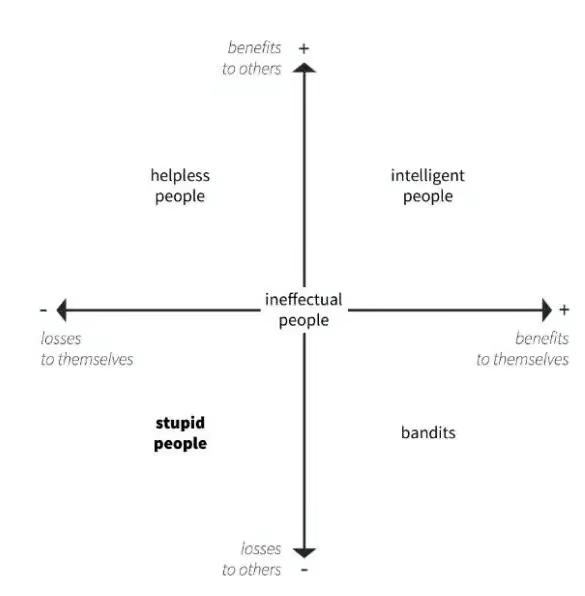

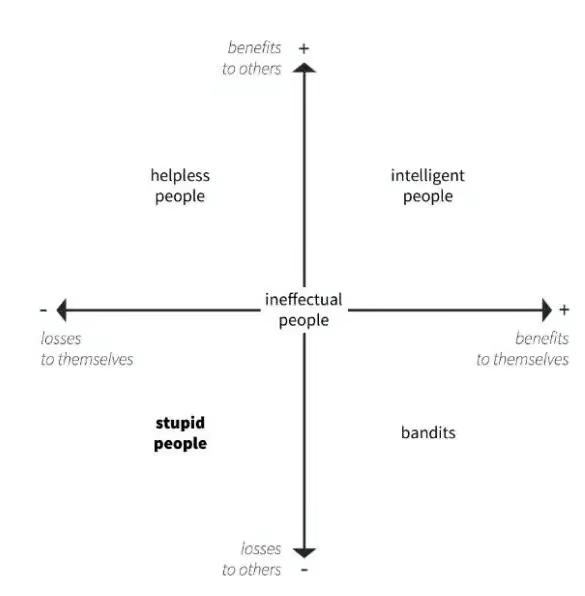

And sure, this one is right here for dramatic impact, but in addition as a result of it makes issues lifeless easy, serving to you bypass the pitfalls of FOMO alongside the method.

It comes from the 5 Primary Legal guidelines of Human Stupidity by Italian financial historian, Professor Carlo Maria Cipolla.

Go forward and snicker, then concentrate. It’s essential.

In line with Professor Cipolla, intelligence is outlined as taking an motion that advantages your self and others concurrently—mainly, making a win-win state of affairs.

It’s in direct opposition to stupidity, which is outlined as an motion that creates losses to each your self and others:

In all instances, black hat practices sit squarely within the backside left and backside proper quadrants.

search engine optimisation bandits, as I like to consider them, are the individuals who used manipulative optimization ways for egocentric causes (advantages to self)… and proceeded to wreck the web in consequence (losses to others).

Due to this fact, the principles of search engine optimisation and LLMO shifting ahead are easy.

- Don’t be silly.

- Don’t be a bandit.

- Optimize intelligently.

Clever optimization comes all the way down to focusing in your model and making certain it’s precisely represented in LLM responses.

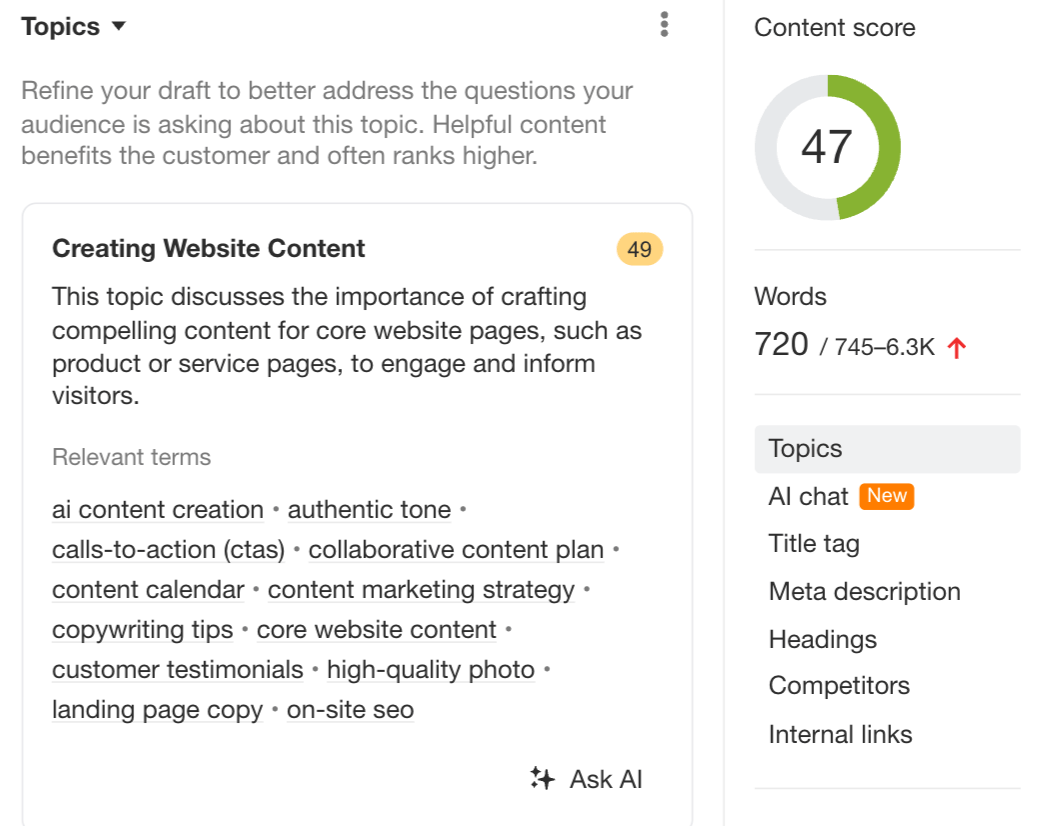

It’s about utilizing instruments like AI Content material Helper which are particularly designed to raise your subject protection, as a substitute of specializing in cramming extra entities in. (The search engine optimisation rating solely improves as you cowl the prompt matters intimately, not while you stuff extra phrases in.)

However above all, it’s about contributing to a greater web by specializing in the folks you wish to attain and optimizing for them, not algorithms or language fashions.

Last ideas

LLMO remains to be in its early days, however the patterns are already acquainted — and so are the dangers.

We’ve seen what occurs when short-term ways go unchecked. When search engine optimisation turned a race to the underside, we misplaced belief, high quality, and creativity. Let’s not do it once more with LLMs.

This time, we’ve an opportunity to get it proper. That means:

- Don’t manipulate prediction patterns; form your model’s presence as a substitute.

- Don’t chase entity saturation, however create content material people wish to learn.

- Don’t write to be summarized; somewhat, write to affect your viewers.

As a result of in case your model solely reveals up in LLMs when it’s stripped of character, is that basically a win?