Introduction

On this tutorial we’ll construct a deep studying mannequin to categorise phrases. We are going to use tfdatasets to deal with information IO and pre-processing, and Keras to construct and practice the mannequin.

We are going to use the Speech Instructions dataset which consists of 65,000 one-second audio recordsdata of individuals saying 30 completely different phrases. Every file comprises a single spoken English phrase. The dataset was launched by Google below CC License.

Our mannequin is a Keras port of the TensorFlow tutorial on Easy Audio Recognition which in flip was impressed by Convolutional Neural Networks for Small-footprint Key phrase Recognizing. There are different approaches to the speech recognition activity, like recurrent neural networks, dilated (atrous) convolutions or Studying from Between-class Examples for Deep Sound Recognition.

The mannequin we’ll implement right here isn’t the cutting-edge for audio recognition programs, that are far more advanced, however is comparatively easy and quick to coach. Plus, we present effectively use tfdatasets to preprocess and serve information.

Audio illustration

Many deep studying fashions are end-to-end, i.e. we let the mannequin be taught helpful representations instantly from the uncooked information. Nonetheless, audio information grows very quick – 16,000 samples per second with a really wealthy construction at many time-scales. In an effort to keep away from having to take care of uncooked wave sound information, researchers normally use some form of characteristic engineering.

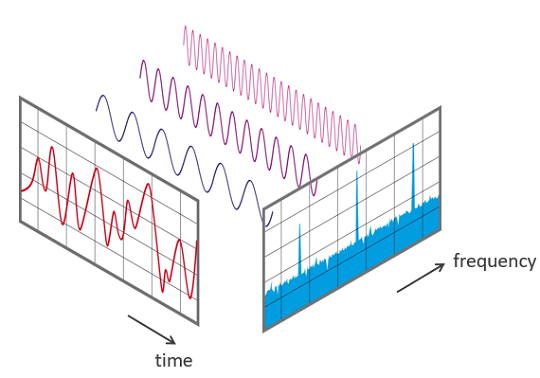

Each sound wave may be represented by its spectrum, and digitally it may be computed utilizing the Quick Fourier Remodel (FFT).

A standard solution to characterize audio information is to interrupt it into small chunks, which normally overlap. For every chunk we use the FFT to calculate the magnitude of the frequency spectrum. The spectra are then mixed, facet by facet, to type what we name a spectrogram.

It’s additionally frequent for speech recognition programs to additional remodel the spectrum and compute the Mel-Frequency Cepstral Coefficients. This transformation takes into consideration that the human ear can’t discern the distinction between two carefully spaced frequencies and neatly creates bins on the frequency axis. A fantastic tutorial on MFCCs may be discovered right here.

After this process, we now have a picture for every audio pattern and we are able to use convolutional neural networks, the usual structure kind in picture recognition fashions.

Downloading

First, let’s obtain information to a listing in our undertaking. You’ll be able to both obtain from this hyperlink (~1GB) or from R with:

dir.create("information")

obtain.file(

url = "http://obtain.tensorflow.org/information/speech_commands_v0.01.tar.gz",

destfile = "information/speech_commands_v0.01.tar.gz"

)

untar("information/speech_commands_v0.01.tar.gz", exdir = "information/speech_commands_v0.01")Contained in the information listing we could have a folder known as speech_commands_v0.01. The WAV audio recordsdata inside this listing are organised in sub-folders with the label names. For instance, all one-second audio recordsdata of individuals talking the phrase “mattress” are contained in the mattress listing. There are 30 of them and a particular one known as _background_noise_ which comprises numerous patterns that might be blended in to simulate background noise.

Importing

On this step we’ll listing all audio .wav recordsdata right into a tibble with 3 columns:

fname: the file identify;class: the label for every audio file;class_id: a singular integer quantity ranging from zero for every class – used to one-hot encode the lessons.

This shall be helpful to the following step after we will create a generator utilizing the tfdatasets bundle.

Generator

We are going to now create our Dataset, which within the context of tfdatasets, provides operations to the TensorFlow graph so as to learn and pre-process information. Since they’re TensorFlow ops, they’re executed in C++ and in parallel with mannequin coaching.

The generator we’ll create shall be liable for studying the audio recordsdata from disk, creating the spectrogram for each and batching the outputs.

Let’s begin by creating the dataset from slices of the information.body with audio file names and lessons we simply created.

Now, let’s outline the parameters for spectrogram creation. We have to outline window_size_ms which is the scale in milliseconds of every chunk we’ll break the audio wave into, and window_stride_ms, the space between the facilities of adjoining chunks:

window_size_ms 30

window_stride_ms 10Now we’ll convert the window measurement and stride from milliseconds to samples. We’re contemplating that our audio recordsdata have 16,000 samples per second (1000 ms).

We are going to receive different portions that shall be helpful for spectrogram creation, just like the variety of chunks and the FFT measurement, i.e., the variety of bins on the frequency axis. The perform we’re going to use to compute the spectrogram doesn’t permit us to alter the FFT measurement and as a substitute by default makes use of the primary energy of two better than the window measurement.

We are going to now use dataset_map which permits us to specify a pre-processing perform for every statement (line) of our dataset. It’s on this step that we learn the uncooked audio file from disk and create its spectrogram and the one-hot encoded response vector.

# shortcuts to used TensorFlow modules.

audio_ops tf$contrib$framework$python$ops$audio_ops

ds ds %>%

dataset_map(perform(obs) {

# a great way to debug when constructing tfdatsets pipelines is to make use of a print

# assertion like this:

# print(str(obs))

# decoding wav recordsdata

audio_binary tf$read_file(tf$reshape(obs$fname, form = listing()))

wav audio_ops$decode_wav(audio_binary, desired_channels = 1)

# create the spectrogram

spectrogram audio_ops$audio_spectrogram(

wav$audio,

window_size = window_size,

stride = stride,

magnitude_squared = TRUE

)

# normalization

spectrogram tf$log(tf$abs(spectrogram) + 0.01)

# transferring channels to final dim

spectrogram tf$transpose(spectrogram, perm = c(1L, 2L, 0L))

# remodel the class_id right into a one-hot encoded vector

response tf$one_hot(obs$class_id, 30L)

listing(spectrogram, response)

}) Now, we’ll specify how we would like batch observations from the dataset. We’re utilizing dataset_shuffle since we wish to shuffle observations from the dataset, in any other case it could observe the order of the df object. Then we use dataset_repeat so as to inform TensorFlow that we wish to hold taking observations from the dataset even when all observations have already been used. And most significantly right here, we use dataset_padded_batch to specify that we would like batches of measurement 32, however they need to be padded, ie. if some statement has a distinct measurement we pad it with zeroes. The padded form is handed to dataset_padded_batch through the padded_shapes argument and we use NULL to state that this dimension doesn’t should be padded.

That is our dataset specification, however we would want to rewrite all of the code for the validation information, so it’s good apply to wrap this right into a perform of the information and different necessary parameters like window_size_ms and window_stride_ms. Beneath, we’ll outline a perform known as data_generator that can create the generator relying on these inputs.

data_generator perform(df, batch_size, shuffle = TRUE,

window_size_ms = 30, window_stride_ms = 10) {

window_size as.integer(16000*window_size_ms/1000)

stride as.integer(16000*window_stride_ms/1000)

fft_size as.integer(2^trunc(log(window_size, 2)) + 1)

n_chunks size(seq(window_size/2, 16000 - window_size/2, stride))

ds tensor_slices_dataset(df)

if (shuffle)

ds ds %>% dataset_shuffle(buffer_size = 100)

ds ds %>%

dataset_map(perform(obs) {

# decoding wav recordsdata

audio_binary tf$read_file(tf$reshape(obs$fname, form = listing()))

wav audio_ops$decode_wav(audio_binary, desired_channels = 1)

# create the spectrogram

spectrogram audio_ops$audio_spectrogram(

wav$audio,

window_size = window_size,

stride = stride,

magnitude_squared = TRUE

)

spectrogram tf$log(tf$abs(spectrogram) + 0.01)

spectrogram tf$transpose(spectrogram, perm = c(1L, 2L, 0L))

# remodel the class_id right into a one-hot encoded vector

response tf$one_hot(obs$class_id, 30L)

listing(spectrogram, response)

}) %>%

dataset_repeat()

ds ds %>%

dataset_padded_batch(batch_size, listing(form(n_chunks, fft_size, NULL), form(NULL)))

ds

}Now, we are able to outline coaching and validation information turbines. It’s price noting that executing this gained’t truly compute any spectrogram or learn any file. It’s going to solely outline within the TensorFlow graph the way it ought to learn and pre-process information.

To really get a batch from the generator we may create a TensorFlow session and ask it to run the generator. For instance:

sess tf$Session()

batch next_batch(ds_train)

str(sess$run(batch))Record of two

$ : num [1:32, 1:98, 1:257, 1] -4.6 -4.6 -4.61 -4.6 -4.6 ...

$ : num [1:32, 1:30] 0 0 0 0 0 0 0 0 0 0 ...Every time you run sess$run(batch) it is best to see a distinct batch of observations.

Mannequin definition

Now that we all know how we’ll feed our information we are able to deal with the mannequin definition. The spectrogram may be handled like a picture, so architectures which can be generally utilized in picture recognition duties ought to work properly with the spectrograms too.

We are going to construct a convolutional neural community much like what we now have constructed right here for the MNIST dataset.

The enter measurement is outlined by the variety of chunks and the FFT measurement. Like we defined earlier, they are often obtained from the window_size_ms and window_stride_ms used to generate the spectrogram.

We are going to now outline our mannequin utilizing the Keras sequential API:

mannequin keras_model_sequential()

mannequin %>%

layer_conv_2d(input_shape = c(n_chunks, fft_size, 1),

filters = 32, kernel_size = c(3,3), activation = 'relu') %>%

layer_max_pooling_2d(pool_size = c(2, 2)) %>%

layer_conv_2d(filters = 64, kernel_size = c(3,3), activation = 'relu') %>%

layer_max_pooling_2d(pool_size = c(2, 2)) %>%

layer_conv_2d(filters = 128, kernel_size = c(3,3), activation = 'relu') %>%

layer_max_pooling_2d(pool_size = c(2, 2)) %>%

layer_conv_2d(filters = 256, kernel_size = c(3,3), activation = 'relu') %>%

layer_max_pooling_2d(pool_size = c(2, 2)) %>%

layer_dropout(charge = 0.25) %>%

layer_flatten() %>%

layer_dense(items = 128, activation = 'relu') %>%

layer_dropout(charge = 0.5) %>%

layer_dense(items = 30, activation = 'softmax')We used 4 layers of convolutions mixed with max pooling layers to extract options from the spectrogram pictures and a pair of dense layers on the prime. Our community is relatively easy when in comparison with extra superior architectures like ResNet or DenseNet that carry out very properly on picture recognition duties.

Now let’s compile our mannequin. We are going to use categorical cross entropy because the loss perform and use the Adadelta optimizer. It’s additionally right here that we outline that we’ll have a look at the accuracy metric throughout coaching.

Mannequin becoming

Now, we’ll match our mannequin. In Keras we are able to use TensorFlow Datasets as inputs to the fit_generator perform and we’ll do it right here.

Epoch 1/10

1415/1415 [==============================] - 87s 62ms/step - loss: 2.0225 - acc: 0.4184 - val_loss: 0.7855 - val_acc: 0.7907

Epoch 2/10

1415/1415 [==============================] - 75s 53ms/step - loss: 0.8781 - acc: 0.7432 - val_loss: 0.4522 - val_acc: 0.8704

Epoch 3/10

1415/1415 [==============================] - 75s 53ms/step - loss: 0.6196 - acc: 0.8190 - val_loss: 0.3513 - val_acc: 0.9006

Epoch 4/10

1415/1415 [==============================] - 75s 53ms/step - loss: 0.4958 - acc: 0.8543 - val_loss: 0.3130 - val_acc: 0.9117

Epoch 5/10

1415/1415 [==============================] - 75s 53ms/step - loss: 0.4282 - acc: 0.8754 - val_loss: 0.2866 - val_acc: 0.9213

Epoch 6/10

1415/1415 [==============================] - 76s 53ms/step - loss: 0.3852 - acc: 0.8885 - val_loss: 0.2732 - val_acc: 0.9252

Epoch 7/10

1415/1415 [==============================] - 75s 53ms/step - loss: 0.3566 - acc: 0.8991 - val_loss: 0.2700 - val_acc: 0.9269

Epoch 8/10

1415/1415 [==============================] - 76s 54ms/step - loss: 0.3364 - acc: 0.9045 - val_loss: 0.2573 - val_acc: 0.9284

Epoch 9/10

1415/1415 [==============================] - 76s 53ms/step - loss: 0.3220 - acc: 0.9087 - val_loss: 0.2537 - val_acc: 0.9323

Epoch 10/10

1415/1415 [==============================] - 76s 54ms/step - loss: 0.2997 - acc: 0.9150 - val_loss: 0.2582 - val_acc: 0.9323The mannequin’s accuracy is 93.23%. Let’s discover ways to make predictions and check out the confusion matrix.

Making predictions

We are able to use thepredict_generator perform to make predictions on a brand new dataset. Let’s make predictions for our validation dataset.

The predict_generator perform wants a step argument which is the variety of instances the generator shall be known as.

We are able to calculate the variety of steps by understanding the batch measurement, and the scale of the validation dataset.

df_validation df[-id_train,]

n_steps nrow(df_validation)/32 + 1We are able to then use the predict_generator perform:

predictions predict_generator(

mannequin,

ds_validation,

steps = n_steps

)

str(predictions)num [1:19424, 1:30] 1.22e-13 7.30e-19 5.29e-10 6.66e-22 1.12e-17 ...This can output a matrix with 30 columns – one for every phrase and n_steps*batch_size variety of rows. Observe that it begins repeating the dataset on the finish to create a full batch.

We are able to compute the anticipated class by taking the column with the best chance, for instance.

lessons apply(predictions, 1, which.max) - 1A pleasant visualization of the confusion matrix is to create an alluvial diagram:

library(dplyr)

library(alluvial)

x df_validation %>%

mutate(pred_class_id = head(lessons, nrow(df_validation))) %>%

left_join(

df_validation %>% distinct(class_id, class) %>% rename(pred_class = class),

by = c("pred_class_id" = "class_id")

) %>%

mutate(appropriate = pred_class == class) %>%

rely(pred_class, class, appropriate)

alluvial(

x %>% choose(class, pred_class),

freq = x$n,

col = ifelse(x$appropriate, "lightblue", "pink"),

border = ifelse(x$appropriate, "lightblue", "pink"),

alpha = 0.6,

conceal = x$n 20

)

We are able to see from the diagram that probably the most related mistake our mannequin makes is to categorise “tree” as “three”. There are different frequent errors like classifying “go” as “no”, “up” as “off”. At 93% accuracy for 30 lessons, and contemplating the errors we are able to say that this mannequin is fairly affordable.

The saved mannequin occupies 25Mb of disk house, which is affordable for a desktop however will not be on small gadgets. We may practice a smaller mannequin, with fewer layers, and see how a lot the efficiency decreases.

In speech recognition duties its additionally frequent to do some form of information augmentation by mixing a background noise to the spoken audio, making it extra helpful for actual purposes the place it’s frequent to produce other irrelevant sounds occurring within the setting.

The complete code to breed this tutorial is out there right here.