To get your web pages ranked on search engine results pages (SERPs), they first need to be crawled and indexed by search engines like Google. However, Google has limited resources and can’t index every page on the internet. This is where the concept of crawl budget comes in.

Crawl budget is the amount of time and resources that Google allocates to crawling and indexing your website. Unfortunately, this crawl budget doesn’t necessarily match the size of your website. If you have a large website, this can cause important content to be missed by Google.

The good news is that there are ways to increase the crawl budget available to your website, which we’ll cover in this article. We will also show you how to use your existing crawl budget efficiently to make sure that Google can easily find and crawl your most important content.

But before we dive in, we’ll start with a quick introduction to how exactly crawl budget works and what types of websites need to optimize their crawl budget.

If you want to skip the theory, you can jump right into crawl budget optimization techniques here!

What is crawl budget?

As mentioned above, crawl budget refers to the amount of time and resources Google invests into crawling your website.

This crawl budget isn’t static though. According to Google Search Central, it’s determined by two main factors: crawl rate and crawl demand.

Crawl rate

The crawl rate describes how many connections Googlebot can use simultaneously to crawl your website.

This number can vary depending on how fast your server responds to Google’s requests. If you have a powerful server that can handle Google’s page requests well, Google can increase its crawl rate and crawl more pages of your website at once.

However, if your website takes a long time to deliver the requested content, Google will slow down its crawl rate to avoid overloading your server and causing a poor user experience for visitors browsing your site. As a result, some of your pages may not be crawled or may be deprioritized.

Crawl demand

While crawl rate refers to how many pages of your website Google can crawl, crawl demand reflects how much time Google wants to spend crawling your website. Crawl demand is increased by:

- Content quality: Fresh, valuable content that resonates with users.

- Website popularity: High traffic and strong backlinks indicate a website’s authority and importance.

- Content updates: Regularly updating content suggests that your website is dynamic, which encourages Google to crawl it more frequently.

Therefore, based on crawl rate and crawl demand, we can say that crawl budget represents the number of URLs Googlebot can crawl (technically) and wants to crawl (content-wise) on your website.

How does crawl budget affect rankings and indexing?

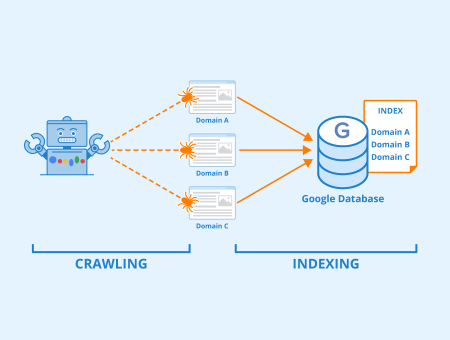

For a page to show up in search results, it needs to be crawled and indexed. During crawling, Google analyzes content and other important elements (meta data, structured data, links, etc.) to understand the page. This information is then used for indexing and ranking.

When your crawl budget hits zero, search engines like Google can no longer crawl your pages completely. This means it takes longer for your pages to be processed and added to the search index. As a result, your content won’t appear on search engine results pages as quickly as it should. This delay can prevent users from finding your content when they search online. In the worst-case scenario, Google may never discover some of your high-quality pages.

Figure: Indexing – Author: Seobility – License: CC BY-SA 4.0

A depleted crawl budget can also lead to ‘missing content.’ This occurs when Google only partially crawls or indexes your web page, resulting in missing text, links, or images. Since Google didn’t fully crawl your page, it may interpret your content differently than intended, potentially harming your ranking.

It’s important to mention though that crawl budget is not a ranking factor. It only affects the quality of crawling and the time it takes to index and rank your pages on the SERPs.

What types of websites should optimize their crawl budget?

In their Google Search Central guide, Google mentions that crawl budget issues are most relevant to these use cases:

- Websites with a large number of pages (1 million+ pages) that are updated regularly

- Websites with a medium to large number of pages (10,000+ pages) that are updated very frequently (daily)

- Websites with a high percentage of pages that are listed as Discovered – currently not indexed in Google Search Console, indicating inefficient crawling*

*Tip: Seobility will also let you know if there’s a large discrepancy between pages that are being crawled and pages that are actually suitable to be indexed, i.e. if there’s a large proportion of pages that are wasting your website’s crawl budget. In this case, Seobility will display a warning in your Tech. & Meta dashboard:

Only X pages that can be indexed by search engines were found.

If your website doesn’t fall into one of these categories, a depleted crawl budget shouldn’t be a major concern.

The only exception is if your website hosts a lot of JavaScript-based pages. In this case you may still want to consider optimizing your crawl budget spending, as processing JS pages is very resource-intensive.

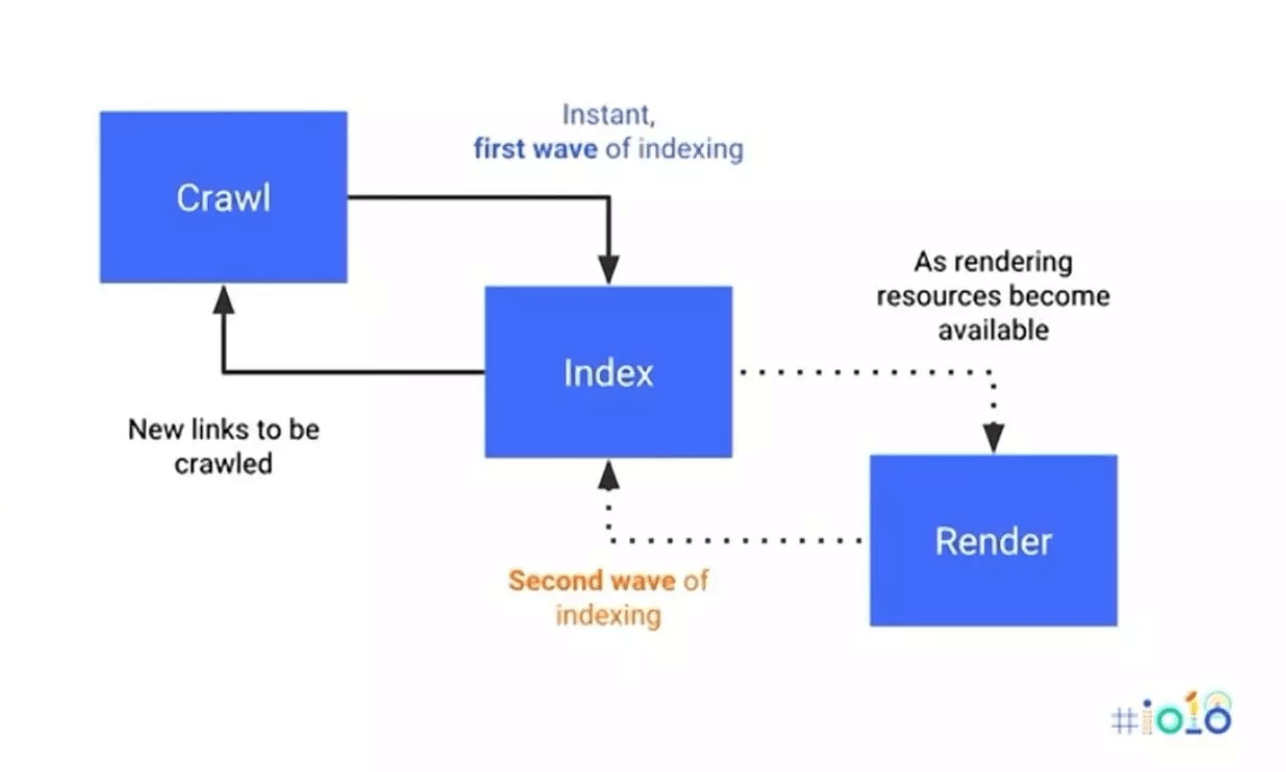

Why does JavaScript crawling easily deplete a website’s crawl budget?

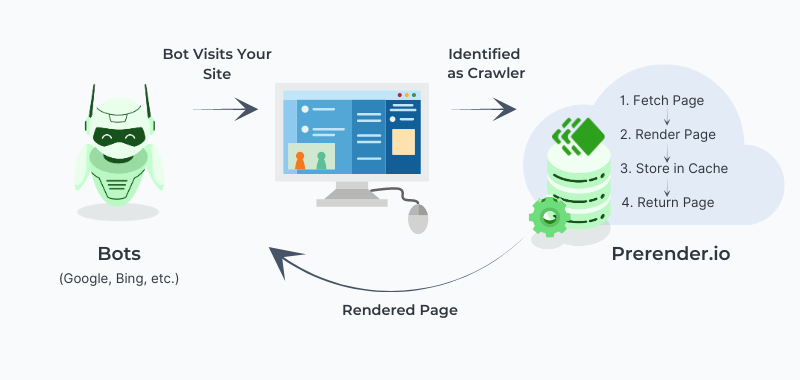

When Google indexes simple HTML pages, it requires two steps: crawl and index. However, for indexing dynamic JavaScript-based content, Google needs three steps: crawl, render, and index. This can be thought of as a ‘two-wave indexing’ approach, as explained in the illustration below.

Source: Search Engine Journal

The longer and more complex the process, the more of the site’s crawl budget is used. This additional rendering step can quickly deplete your crawl budget and lead to missing content issues. Often, non-JavaScript web elements are indexed first, before the JavaScript-generated content is fully indexed. As a result, Google may rank the page based on incomplete information, ignoring the partially or entirely uncrawled JavaScript content.

The extra rendering step can also significantly delay indexing, causing the page to take days, weeks, or even months to appear in search engine results.

We’ll show you later how to address crawl budget issues specifically on JavaScript-heavy sites. For now, all you need to know is that crawl budget could be an issue if your website contains a lot of JavaScript-based content.

Now let’s take a look at the options you have to optimize your website’s crawl budget in general!

How to optimize your website’s crawl budget

The goal of crawl budget optimization is to maximize Google’s crawling of your pages, ensuring that they’re quickly indexed and ranked in search results and that no important pages are missed.

There are two basic mechanisms to achieve this:

- Increase your website’s crawl budget: Improve the factors that can increase your website’s crawl rate and crawl demand, such as your server performance, website popularity, content quality, and content freshness.

- Get the most out of your existing crawl budget: Keep Googlebot from crawling irrelevant pages on your website and direct it to important, high-value content. This will make it easier for Google to find your best content, which in turn may increase its crawl demand.

Next, we’ll share specific strategies for both of these mechanisms, so let’s dive right in.

6 ways to get the most out of your existing crawl budget

1. Exclude low-value content from crawling

When a search engine crawls low-value content, it wastes your limited crawl budget without benefiting you. Examples of low-value pages include log-in pages, shopping carts, or your privacy policy. These pages are usually not meant to rank in search results, so there’s no need for Google to crawl them.

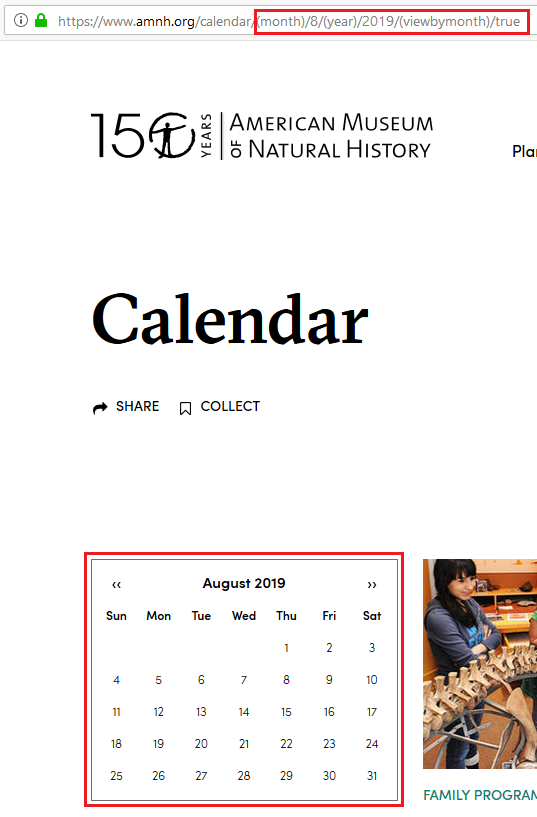

While it’s not really a big deal if Google crawls a handful of these pages, there are certain cases where a large number of low-value pages are created automatically and uncontrollably, and that’s where you should definitely step in. One example is so-called “infinite spaces”. Infinite spaces refer to a part of a website that can generate an unlimited number of URLs. This typically happens with dynamic content that adapts to user choices, such as calendars (e.g., every possible date), search filters (e.g., an endless combination of filters and sort orders) or user-generated content (e.g., comments).

Take a look at the example below. A calendar with a “next month” link on your website can make Google keep following this link infinitely:

Screenshot of amnh.org

As you can see, each click on the right arrow creates a new URL. Google could follow this link forever, which would create a huge mass of URLs that Google would have to retrieve one by one.

Recommended solution: use robots.txt or ‘nofollow’

The easiest way to keep Googlebot from following infinite spaces is to exclude these areas of your website from crawling in your robots.txt file. This file tells search engines which areas of your website they’re allowed or not allowed to crawl.

For instance, if you want to prevent Googlebot from crawling your calendar pages, you only have to include the following directive in your robots.txt file:

Disallow: /calendar/
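Before deploying a rule like this, it can help to check which URLs it actually blocks. The following is a minimal sketch using Python’s standard `urllib.robotparser`. The robots.txt content, the `User-agent: *` group around the directive, and the example URLs are assumptions made for illustration; a `Disallow` line only takes effect inside a user-agent group.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the Disallow rule sits inside a User-agent group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /calendar/
"""

def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

# The calendar URL is blocked, the blog URL is still crawlable.
print(is_crawlable(ROBOTS_TXT, "https://www.example.com/calendar/2024-05"))
print(is_crawlable(ROBOTS_TXT, "https://www.example.com/blog/crawl-budget"))
```

Note that the standard-library parser only does simple prefix matching, which is enough for a directory rule like this one.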

An alternative method is to use the nofollow attribute for links causing the infinite space. Although many SEOs advise against using ‘nofollow’ for internal links, it’s perfectly fine to use it in a scenario like this.

In the calendar example above, nofollow can be used for the “next month” link to prevent Googlebot from following and crawling it.
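To verify that such a link really carries the attribute, you could audit the page’s markup. The sketch below uses Python’s built-in `html.parser`; the sample markup and the `/calendar?month=…` URL are hypothetical and only illustrate what a “next month” link with `rel="nofollow"` might look like.

```python
from html.parser import HTMLParser

class NofollowAudit(HTMLParser):
    """Collect (href, has_nofollow) for every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        # rel can hold several space-separated tokens, e.g. "nofollow noopener".
        rel_tokens = (attr.get("rel") or "").lower().split()
        self.links.append((attr.get("href"), "nofollow" in rel_tokens))

def audit_links(html: str):
    parser = NofollowAudit()
    parser.feed(html)
    return parser.links

# Hypothetical calendar markup.
SAMPLE = (
    '<a href="/calendar?month=2024-06" rel="nofollow">Next month</a> '
    '<a href="/events">All events</a>'
)
for href, nofollow in audit_links(SAMPLE):
    print(href, "nofollow" if nofollow else "followed")
```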

2. Eliminate duplicate content

Similar to low-value pages, there’s no need for Google to crawl duplicate content pages, i.e. pages where the same or very similar content appears on multiple URLs. These pages waste Google’s resources, because it has to crawl many URLs without finding new content.

There are certain technical issues that can lead to a large number of duplicate pages and that are worth paying attention to if you’re experiencing crawl budget issues. One of these is missing redirects between the www and non-www versions of a URL, or from HTTP to HTTPS.

Duplicate content due to missing redirects

If these general redirects are not set up correctly, this can cause the number of URLs that can be crawled to double or even quadruple! For example, the page www.example.com could be available under four URLs if no redirect was configured:

http://www.example.com

https://www.example.com

http://example.com

https://example.com

That’s definitely not good for your website’s crawl budget!

Recommended solution: Check your redirects and correct them if necessary

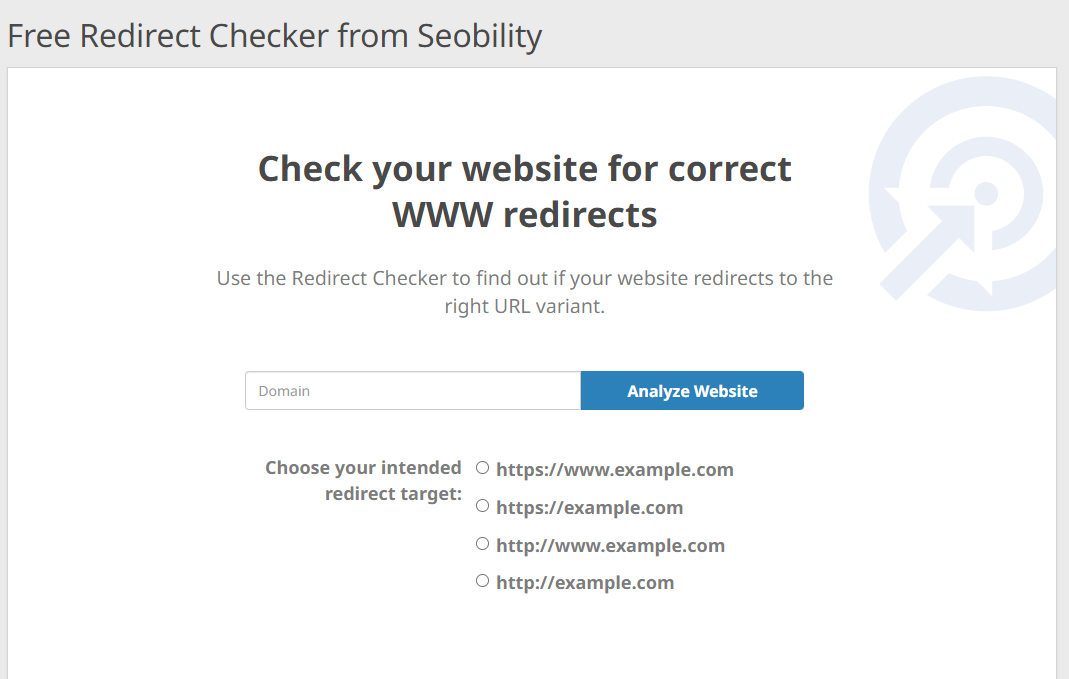

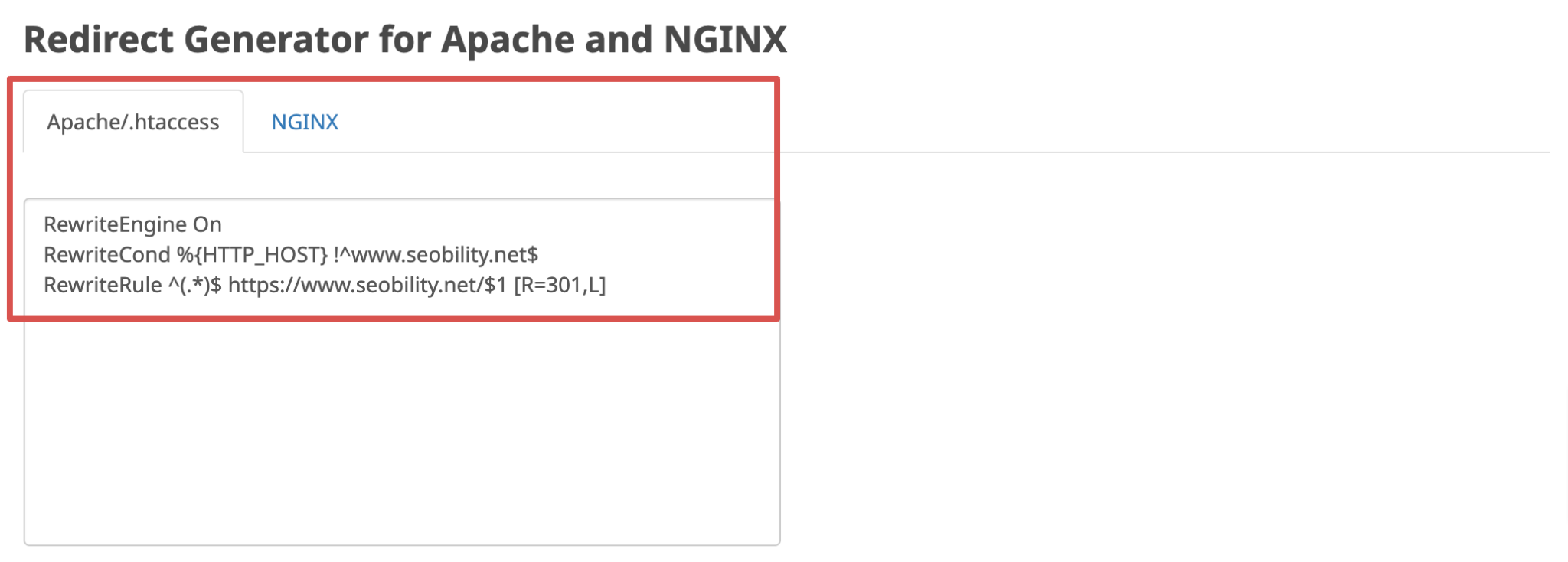

With Seobility’s free Redirect Checker, you can easily check if your website’s WWW redirects are configured correctly. Just enter your domain and choose the URL version that your visitors should be redirected to by default:

In case the tool detects any issues, you will find the correct redirect code for your Apache or NGINX server in the Redirect Generator on the results page. This way you can correct any broken or missing redirects and resulting duplicate content with just a few clicks.
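The intended end state is that all four variants of a URL resolve to a single preferred version. As a rough sketch of that mapping (not the redirect code for your server), the function below normalizes any variant to one scheme and host. The function name, the defaults (HTTPS with www) and the example domain are assumptions for illustration, and ports in the host are not handled.

```python
from urllib.parse import urlsplit, urlunsplit

def preferred_url(url: str, scheme: str = "https", use_www: bool = True) -> str:
    """Map any scheme/www variant of a URL to the single preferred version."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    host = f"www.{bare}" if use_www else bare
    return urlunsplit((scheme, host, parts.path or "/", parts.query, parts.fragment))

variants = [
    "http://www.example.com",
    "https://www.example.com",
    "http://example.com",
    "https://example.com",
]
# All four variants collapse to one redirect target.
targets = {preferred_url(v) for v in variants}
print(targets)
```

A list of crawled URLs that still contains more than one variant after your redirects are in place is a hint that some of them are misconfigured.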

Duplicate content due to URL parameters

Another technical issue that can lead to the creation of a large number of duplicate pages is URL parameters.

Many eCommerce websites use URL parameters to let visitors sort the products on a category page by different metrics such as price, relevance, reviews, etc.

For example, an eCommerce website might append the parameter ?sort=price_asc to the URL of its product overview page to sort products by price, starting with the lowest price:

www.abc.com/products?sort=price_asc

This would create a new URL containing the same products as the regular page, just sorted in a different order. For sites with a large number of categories and multiple sorting options, this can easily add up to a significant number of duplicate pages that waste crawl budget.
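To get a feeling for how many parameterized duplicates a site has, you could group a list of crawled URLs by their path without the query string. This is a minimal sketch; the URL list is made up, and in practice it would come from a crawl export or your server logs.

```python
from collections import defaultdict
from urllib.parse import urlsplit

def group_by_base(urls):
    """Group URLs that differ only in their query string; keep groups with duplicates."""
    groups = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        base = f"{parts.scheme}://{parts.netloc}{parts.path}"
        groups[base].append(url)
    return {base: members for base, members in groups.items() if len(members) > 1}

# Hypothetical crawl export.
urls = [
    "https://www.abc.com/products",
    "https://www.abc.com/products?sort=price_asc",
    "https://www.abc.com/products?sort=price_desc",
    "https://www.abc.com/about",
]
duplicates = group_by_base(urls)
for base, members in duplicates.items():
    print(base, "has", len(members), "crawlable variants")
```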

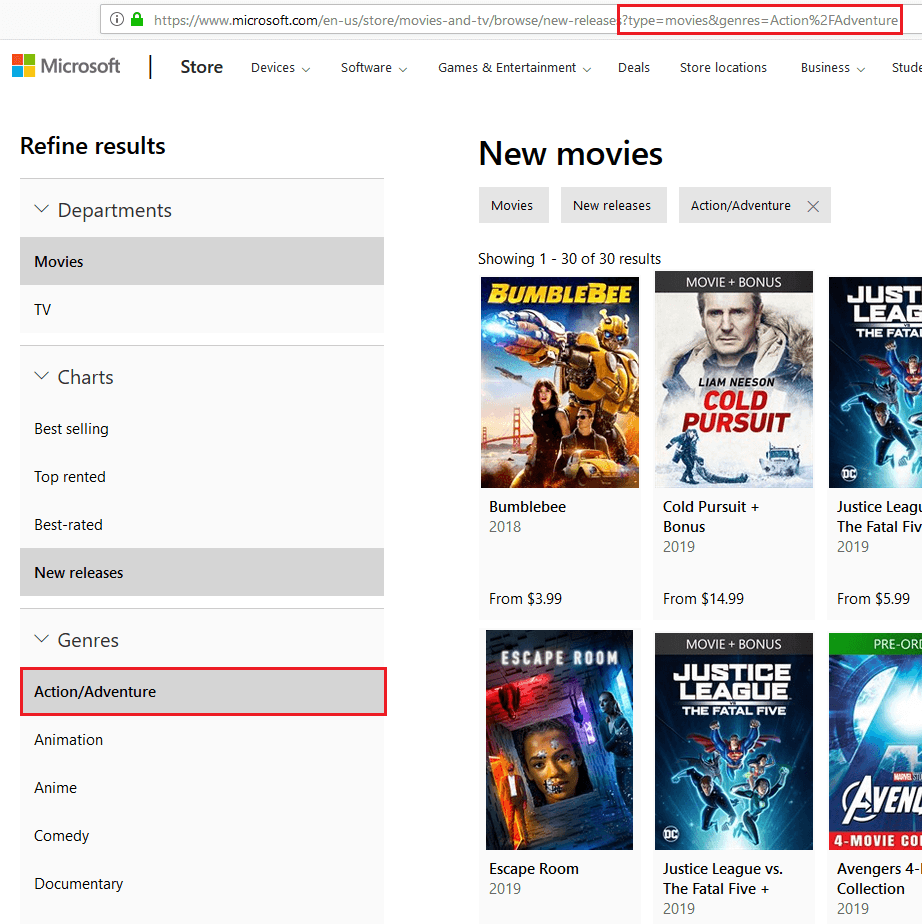

A similar problem occurs with faceted navigation, which allows users to narrow the results on a product category page based on criteria such as color or size. This is done by adding URL parameters to the corresponding URL:

Screenshot from microsoft.com

In the example above, the results are limited to the Action/Adventure genre by adding the URL parameter &genres=Action%2FAdventure to the URL. This creates a new URL that wastes crawl budget because the page doesn’t provide any new content, just a subset of the original page.

Recommended solution: robots.txt

As with low-value pages, the easiest way to keep Googlebot away from these parameterized URLs is to exclude them from crawling in your robots.txt file. In the examples above, we could disallow the parameters that we don’t want Google to crawl like this:

Disallow: /*?sort=

Disallow: /*&genres=Action%2FAdventure

Disallow: /*?genres=Action%2FAdventure

This approach is the easiest way to deal with crawl budget issues caused by URL parameters, but keep in mind that it’s not the right solution for every situation. An important downside of this method is that if pages are excluded from crawling via robots.txt, Google will no longer be able to find important meta information such as canonical tags on them, preventing Google from consolidating the ranking signals of the affected pages.
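Wildcard rules like these are easy to get subtly wrong, so it can be worth testing them against sample URLs first. Python’s standard `urllib.robotparser` does not interpret `*` wildcards, so the sketch below translates a pattern into a regular expression by hand, following the wildcard behavior Google documents (`*` matches any sequence of characters, a trailing `$` anchors the end). It is a simplification: it ignores `Allow` rules and rule precedence, and the sample paths are hypothetical.

```python
import re

def robots_pattern_to_regex(pattern: str):
    """Translate a robots.txt path pattern with * and trailing $ into a regex."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    # Escape the literal pieces and join them with ".*" where a * wildcard stood.
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile("^" + regex + ("$" if anchored else ""))

def is_disallowed(path_and_query: str, disallow_patterns) -> bool:
    """True if any Disallow pattern matches the start of the path plus query string."""
    return any(robots_pattern_to_regex(p).match(path_and_query) for p in disallow_patterns)

RULES = [
    "/*?sort=",
    "/*&genres=Action%2FAdventure",
    "/*?genres=Action%2FAdventure",
]

for path in ["/products?sort=price_asc", "/products", "/movies?type=tv&genres=Action%2FAdventure"]:
    print(path, "->", "blocked" if is_disallowed(path, RULES) else "crawlable")
```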

So if crawl budget is not your primary concern, another method of handling parameters and faceted navigation may be more appropriate for your individual situation.

You can find in-depth guides on both topics here:

You should also avoid using URL parameters in general, unless they’re absolutely necessary. For example, you should no longer use URL parameters with session IDs. It’s better to use cookies to transfer session information.

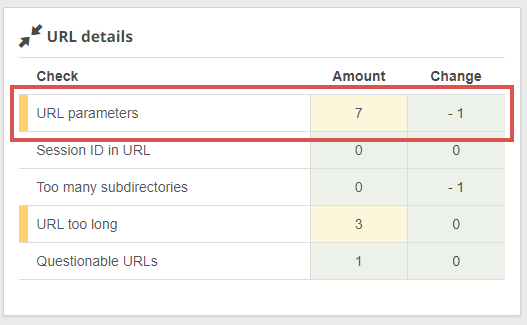

Tip: With Seobility’s Website Audit, you can easily find any URLs with parameters on your website.

Seobility > Onpage > Tech. & Meta > URL details

Now you should have a good understanding of how to handle different types of low-value or duplicate pages to prevent them from draining your crawl budget. Let’s move on to the next strategy!

3. Optimize resources, such as CSS and JavaScript files

As with HTML pages, every CSS and JavaScript file on your website must be accessed and crawled by Google, consuming part of your crawl budget. This process also increases page loading time, which can negatively affect your rankings since page speed is an official Google ranking factor.

Recommended solution: Minimize the number of files and/or implement a prerendering solution

Keep the number of files you use to a minimum by combining your CSS or JavaScript code into fewer files, and optimize the files you have by removing unnecessary code.
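As a very rough illustration of what “combining and trimming” means, the sketch below merges several stylesheets into one string, strips comments and collapses whitespace. This is a deliberately naive approach for demonstration only (for example, it would also alter comment-like text inside CSS strings); in a real project you would normally rely on your build tool’s bundler and minifier.

```python
import re

def combine_css(sources):
    """Naively merge stylesheets: join them, drop /* comments */, collapse whitespace."""
    combined = "\n".join(sources)
    without_comments = re.sub(r"/\*.*?\*/", "", combined, flags=re.DOTALL)
    return re.sub(r"\s+", " ", without_comments).strip()

# Hypothetical stylesheet contents.
base_css = "a { color: red; } /* link color */"
layout_css = "b {\n  margin: 0;\n}"
bundle = combine_css([base_css, layout_css])
print(bundle)
```

The result is one file for Googlebot to request instead of two, with fewer bytes to transfer.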

However, if your website uses a large amount of JavaScript, this may not be enough and you may want to consider implementing a prerendering solution.

In simple terms, prerendering is the process of turning your JavaScript content into its HTML version, making it 100% index-ready. As a result, you cut the indexing time, use less crawl budget, and get all your content and its SEO elements properly indexed.

Prerender offers a prerendering solution that can speed up your JavaScript website’s indexing by up to 260%.

You can learn more about prerendering and its benefits for your website’s JavaScript SEO here.

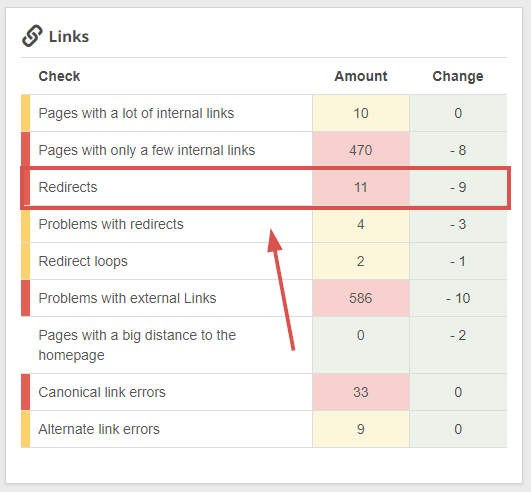

4. Avoid links to redirects and redirect loops

If URL A redirects to URL B, Google has to request two URLs from your web server before it gets to the actual content it wants to crawl. And if you have a lot of redirects on your website, this can easily add up and drain your crawl budget.

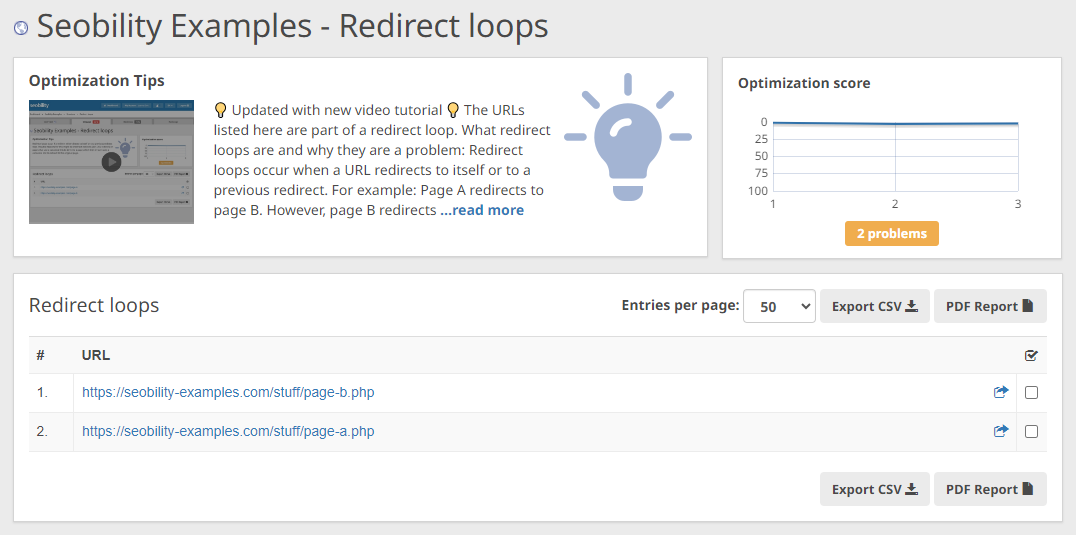

The situation is even worse with redirect loops. A redirect loop is an infinite cycle between pages: Page A > Page B > Page C > and back to Page A, trapping Googlebot and costing you precious crawl budget.

Recommended solution: Link directly to the destination URL and avoid redirect chains

Instead of sending Google to a page that redirects to another URL, you should always link to the actual destination URL when using internal links.
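If you have an export of your redirects, chains and loops can be found by simply following each source URL through the map. The sketch below assumes a plain dictionary of source path to target path (a hypothetical data structure; real tools work on crawled HTTP responses) and reports the final destination, the number of hops, and whether a loop was hit.

```python
def resolve_redirect(start: str, redirects: dict, max_hops: int = 10):
    """Follow start through the redirect map; return (final_url, hops, is_loop).

    Stops following after max_hops redirects, even if the chain continues.
    """
    seen = [start]
    current = start
    while current in redirects and len(seen) <= max_hops:
        current = redirects[current]
        if current in seen:
            # We are back at a URL we already visited: redirect loop.
            return current, len(seen), True
        seen.append(current)
    return current, len(seen) - 1, False

# Hypothetical redirect maps.
chain = {"/old": "/newer", "/newer": "/newest"}
loop = {"/a": "/b", "/b": "/c", "/c": "/a"}

print(resolve_redirect("/old", chain))  # two hops: link directly to /newest instead
print(resolve_redirect("/a", loop))     # loop detected
```

Any internal link whose target needs one or more hops is a candidate for being pointed straight at the final URL.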

Fortunately, Seobility can display all the redirecting pages on your website, so you can easily adjust the links that currently point to redirecting pages:

Seobility > Onpage > Structure > Links

And with the “redirect loops” analysis, you can see at a glance if there are any pages that are part of an infinite redirect cycle.

Seobility > Onpage > Structure > Links > Redirect loops

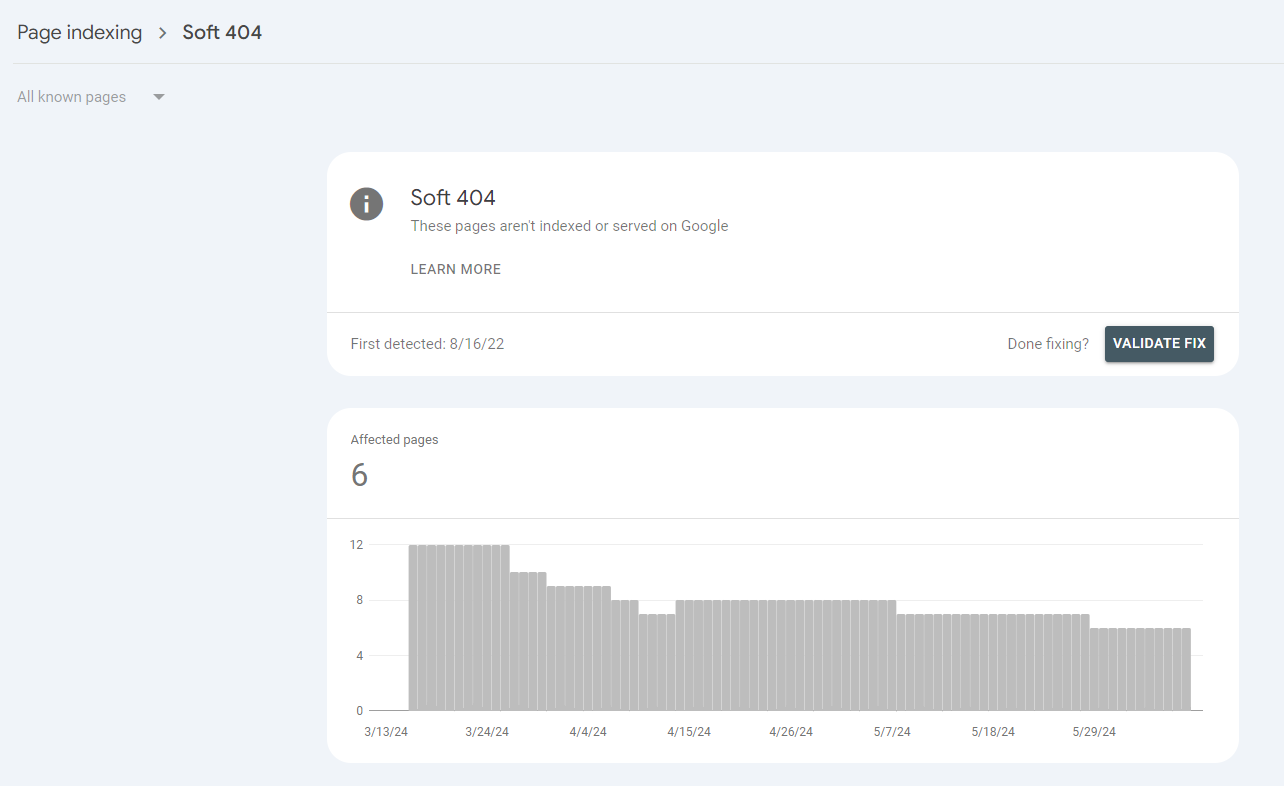

5. Avoid soft 404 error pages

Web pages come and go. After they’ve been deleted, users and Google sometimes still request them. When this happens, the server should respond with a “404 Not Found” status code (indicating that the page doesn’t exist).

However, for technical reasons (e.g. custom error handling or internal CMS settings) or because of dynamically generated JS content, the server may return a “200 OK” status code (indicating that the page exists). This condition is known as a soft 404 error.

This mishandling signals to Google that the page does exist, so Google will keep crawling it until the error is fixed, costing you valuable crawl budget.

Recommended solution: Configure your server to return an appropriate status code

Google will let you know of any soft 404 errors on your website in Search Console:

Google Search Console > Page indexing > Soft 404s

To get rid of soft 404 errors, you basically have three options:

- If the content of the page no longer exists, you should make sure that your server returns a 404 status code.

- If the content has been moved to a different location, you should redirect the page with a 301 redirect and update any internal links to point to the new URL.

- If the page is incorrectly classified as a soft 404 page (e.g. because of thin content), improving the quality of the page content may resolve the error.
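To spot likely soft 404s yourself, you can compare the status code of a response with what its body says. The heuristic below is only a sketch: the phrases and the length threshold are assumptions chosen for illustration, not the criteria Google uses, and the status code and body would come from your own crawler or HTTP client.

```python
# Hypothetical phrases that typically appear on "not found" templates.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing found")

def classify_response(status: int, body: str, min_length: int = 200) -> str:
    """Roughly classify a response as ok, hard-404, possible-soft-404 or thin-content."""
    text = body.lower().strip()
    if status in (404, 410):
        return "hard-404"  # the server signals the missing page correctly
    if status == 200 and any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return "possible-soft-404"  # error message delivered with "200 OK"
    if status == 200 and len(text) < min_length:
        return "thin-content"  # may be misclassified as a soft 404
    return "ok"

print(classify_response(200, "<h1>Sorry, page not found</h1>"))
print(classify_response(404, "<h1>Sorry, page not found</h1>"))
```

Pages flagged as “possible-soft-404” map to the first two options above, while “thin-content” pages correspond to the third.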

For more details, check out our wiki article on soft 404 errors.

6. Make it easy for Google to find your high-quality content

To further maximize your crawl budget, you should not only keep Googlebot away from low-quality or irrelevant content, but also guide it toward your high-quality content. The most important elements to help you achieve this are:

- XML sitemaps

- Internal links

- Flat website architecture

Provide an XML sitemap

An XML sitemap provides Google with a clear overview of your website’s content and is a great way to tell Google which pages you want crawled and indexed. This speeds up crawling and reduces the chance that high-quality and important content will go undetected.
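If your CMS doesn’t generate a sitemap for you, a basic one is simple to build. The sketch below uses Python’s standard `xml.etree.ElementTree` and the namespace defined by the sitemaps.org protocol; the URLs and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries) -> str:
    """Build sitemap XML from (loc, lastmod) tuples; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder pages; list only URLs you actually want crawled and indexed.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/blog/crawl-budget", None),
])
print(sitemap_xml)
```

Leaving low-value and parameterized URLs out of the sitemap keeps it consistent with the exclusions described earlier.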

Use internal links strategically

Since search engine bots navigate websites by following internal links, you can use them strategically to direct crawlers to specific pages. This is especially helpful for pages that you want to be crawled more frequently. For example, by linking to these pages from pages with strong backlinks and high traffic, you can make sure that they’re crawled more often.

We also recommend that you link important sub-pages widely throughout your website to signal their relevance to Google. Also, these pages shouldn’t be too far away from your homepage.

Check out this guide on how to optimize your website’s internal links for more details on how to implement these tips.

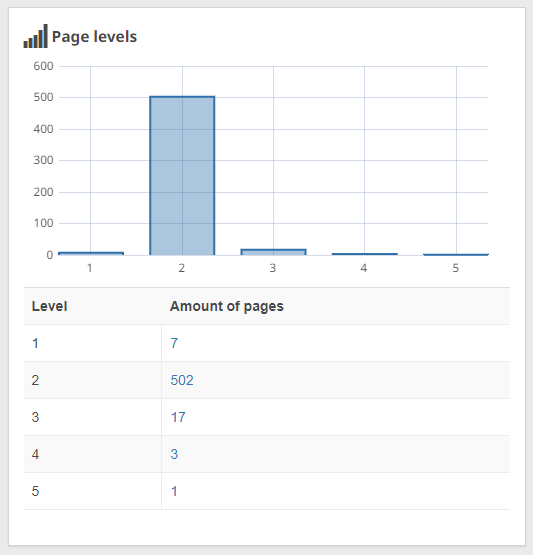

Use a flat website architecture

Flat website architecture means that all subpages of your website should be no more than 4 to 5 clicks away from your homepage. This helps Googlebot understand the structure of your website and saves your crawl budget from complicated crawling.

With Seobility, you can easily analyze the click distance of your pages:

Seobility > Onpage > Structure > Page levels

If any of your important pages are more than 5 clicks away from your homepage, you should try to find a place to link to them at a higher level so that they won’t be overlooked by Google.
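Click distance is simply the shortest path from the homepage through your internal links, which a breadth-first search computes directly. The sketch below assumes you already have the internal link graph as a dictionary of page to linked pages (for example from a crawl export); the tiny graph here is invented.

```python
from collections import deque

def click_depths(links: dict, home: str) -> dict:
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links: dict, home: str, max_clicks: int = 5) -> list:
    """Pages that need more than max_clicks clicks from the homepage."""
    return sorted(p for p, d in click_depths(links, home).items() if d > max_clicks)

# Hypothetical link graph: a long chain of paginated archive pages.
links = {"/": ["/a"], "/a": ["/b"], "/b": ["/c"], "/c": ["/d"], "/d": ["/e"], "/e": ["/f"]}
print(too_deep(links, "/"))
```

Pages that never show up in the result of `click_depths` at all are not reachable through internal links and deserve attention as well.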

Two ways to increase your crawl budget

So far we’ve focused on ways to get the most out of your existing crawl budget, but as we mentioned at the beginning, there are also ways to increase the total crawl budget that Google assigns to your website.

As a reminder, there are two main factors that determine your crawl budget: crawl rate and crawl demand. So if you want to increase your website’s overall crawl budget, these are the two main factors you can work on.

Increasing the crawl rate

Crawl rate is highly dependent on your server performance and response time. In general, the higher your server performance and the faster its response time, the more likely Google is to increase its crawl rate.

However, this will only happen if there’s sufficient crawl demand. Improving your server performance is useless if Google doesn’t want to crawl your content.

If you can’t upgrade your server, there are several on-page techniques you can implement to reduce the load on your server. These include implementing caching, optimizing and lazy-loading your images, and enabling GZip compression.
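To see why compression matters, you can measure how much smaller a typical HTML response becomes. The sketch below uses Python’s standard `gzip` module on a made-up, highly repetitive payload; real pages will compress less dramatically, and on a live site compression is usually enabled in the web server configuration rather than in application code.

```python
import gzip

def compression_ratio(payload: bytes) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(gzip.compress(payload)) / len(payload)

# Hypothetical category page: 500 nearly identical product entries.
html = b"<li class='product'>Example product</li>\n" * 500

ratio = compression_ratio(html)
print(f"{len(html)} bytes uncompressed, ratio {ratio:.3f}")
```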

This page speed optimization guide explains how to implement these tips as well as many other techniques to improve your server response times and page speed in general.

Increasing crawl demand

While you can’t actively control how many pages Google wants to crawl, there are a few things you can do to increase the chances that Google will want to spend more time on your website:

- Avoid thin or spammy content. Instead, focus on providing high-quality, valuable content.

- Implement the strategies we’ve outlined above to maximize your crawl budget. By ensuring that Google only encounters relevant, high-quality content, you create an environment where the search engine recognizes the value of your website and prioritizes crawling it more frequently. This signals to Google that your website is worth exploring in greater depth, ultimately increasing crawl demand.

- Build strong backlinks, increase user engagement, and share your pages on social media. These will help increase your website’s popularity and generate genuine interest.

- Keep your content fresh, as Google prioritizes the most current and high-quality content for its users.

At this point, you should be equipped with a large arsenal of strategies to help you get rid of any crawl budget problems on your website. Finally, we’ll leave you with some tips on how to monitor the progress of your crawl budget optimization.

How to monitor your crawl budget optimization progress

Like many SEO tasks, crawl budget optimization isn’t a one-day process. It requires regular monitoring and fine-tuning to ensure that Google easily finds the important pages of your website.

The following reports can help you keep an eye on how your website is being crawled by Google.

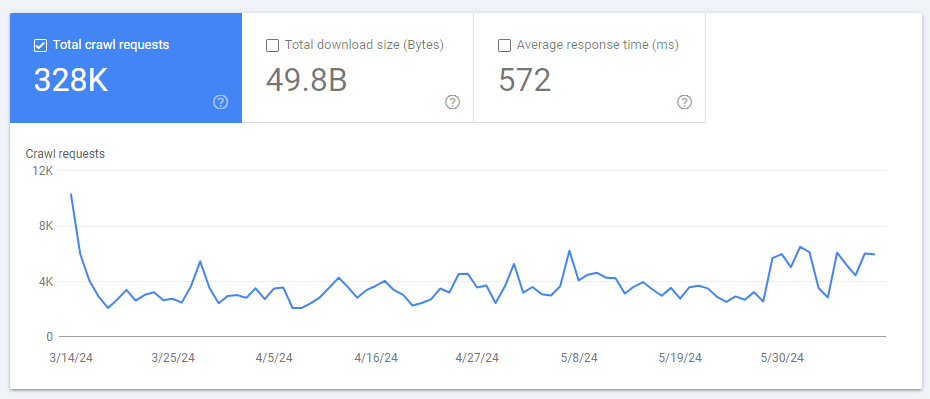

Crawl stats in Google Search Console

In this report, you can see the number of pages crawled per day for your website over the past 90 days.

Settings > Crawl Stats > Open report

A sudden drop in crawl requests can indicate problems that are preventing Google from successfully crawling your website. On the other hand, if your optimization efforts are successful, you should see an increase in crawl requests.

However, you should be wary if you see a very sudden spike in crawl requests! This could signal new problems, such as infinite loops or massive amounts of spam content created by a hacker attack on your website. In these cases, Google has to crawl many new URLs.

In order to determine the exact cause of a sudden increase in this metric, it’s a good idea to analyze your server log files.

Log file analysis

Web servers use log files to track each visit to your website, storing information like IP address, user agent, etc. By analyzing your log files, you can find out which pages Googlebot crawls more frequently and whether they’re the right (i.e., relevant) pages. In addition, you can find out if there are some pages that are important from your perspective, but that aren’t being crawled by Google at all.
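A first pass at this analysis can be as simple as counting Googlebot requests per URL. The sketch below assumes log lines in the common “combined” format used by Apache and NGINX; the sample lines, IP addresses and paths are invented. Keep in mind that the user agent string can be spoofed, so a thorough analysis should also verify that the requests really come from Google.

```python
import re
from collections import Counter

# Matches the request, status code and final quoted field (user agent) of a combined log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

def googlebot_hits(lines) -> Counter:
    """Count requests per path whose user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Hypothetical log excerpt.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/May/2024:06:25:13 +0000] "GET /products HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/May/2024:06:25:14 +0000] "GET /products HTTP/1.1" 200 5123 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/May/2024:06:25:15 +0000] "GET /products HTTP/1.1" 200 5123 "-" "Mozilla/5.0 Firefox/125.0"',
    f'66.249.66.1 - - [10/May/2024:06:25:16 +0000] "GET /calendar/2024-06 HTTP/1.1" 200 912 "-" "{GOOGLEBOT_UA}"',
]

hits = googlebot_hits(SAMPLE_LOG)
for path, count in hits.most_common():
    print(count, path)
```

Comparing this list with the pages you consider important shows both wasted crawls (such as the calendar URL here) and important pages that Googlebot never requests.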

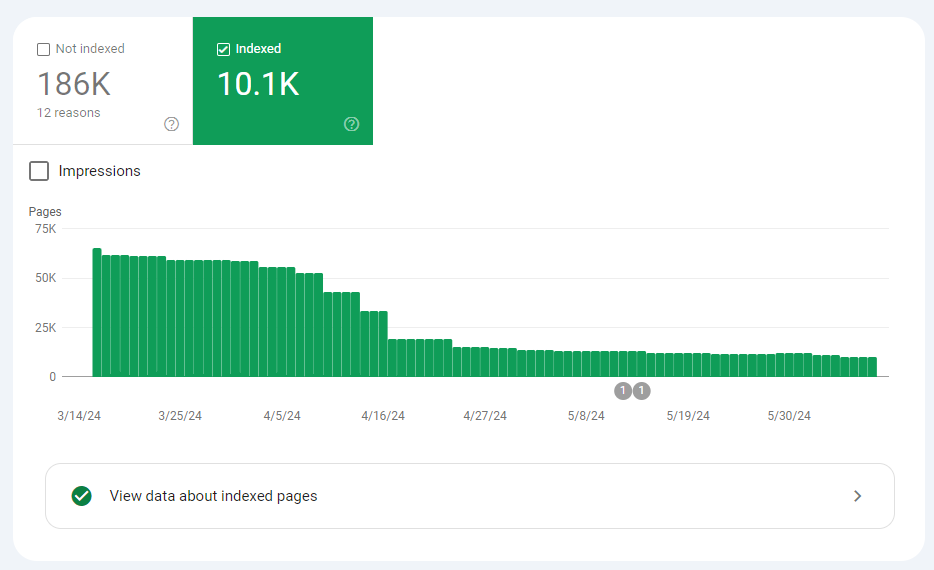

Index coverage report

This report in Search Console shows you how many pages of your website are actually indexed by Google, and the exact reasons why the other pages are not.

Google Search Console > Indexing > Pages

Optimize your crawl budget for healthier SEO performance

Depending on the size of your website, crawl budget can play a critical role in the ranking of your content. Without proper management, search engines may struggle to index your content, potentially wasting your efforts in creating high-quality content.

Additionally, the impact of poor crawl budget management is amplified for JavaScript-based websites, as dynamic JS content requires more crawl budget for indexing. Therefore, relying solely on Google to index your content can prevent you from realizing your website’s true SEO potential.

If you have any questions, feel free to leave them in the comments!
