Building an LLM prototype is fast. A few lines of Python, a prompt, and it works. Production, however, is a different game altogether. You start seeing vague answers, hallucinations, latency spikes, and strange failures where the model clearly “knows” something but still gets it wrong. Since everything runs on probabilities, debugging becomes tricky. Why did a search for boots turn into sneakers? The system made a choice, but you can’t easily trace the reasoning.

To tackle this, we’ll build FuseCommerce, an advanced e-commerce support system designed for visibility and control. Using Langfuse, we’ll create an agentic workflow with semantic search and intent classification, while keeping every decision transparent. In this article, we’ll turn a fragile prototype into an observable, production-ready LLM system.

What is Langfuse?

Langfuse is an open-source platform for LLM engineering that lets teams collaborate on debugging, analysing, and developing their LLM applications. Think of it as DevTools for AI agents.

The platform offers four main capabilities:

- Tracing, which shows every execution path through the system, including LLM calls, database queries, and tool usage.

- Metrics, which provides real-time monitoring of latency, cost, and token usage.

- Evaluation, which collects user feedback through a thumbs-up/thumbs-down system linked directly to the specific generation that produced the feedback.

- Dataset Management, which supports testing by letting users curate their test inputs and outputs.

In this project, Langfuse serves as our main logging system and helps us build an automated loop through which the agent improves its own performance.

What We Are Creating: FuseCommerce…

We will be building a smart customer support agent for a technology retail business named “FuseCommerce.”

In contrast to a standard LLM wrapper, it will include the following components:

- Cognitive Routing – The ability to analyse (think through) what to say before responding, including identifying the reason for the interaction (i.e., wanting to buy something vs. checking on an order vs. wanting to chat).

- Semantic Memory – The ability to understand and represent ideas as concepts (e.g., how “gaming gear” and a “Mechanical Mouse” are conceptually linked) via vector embeddings.

- Visual Reasoning (including a polished user interface) – A way of visually showing the customer what the agent is doing.

The Role of Langfuse in the Project

Langfuse is the backbone of the agent in this project. It lets us follow the distinct steps of our agent (intent classification, retrieval, generation) and shows how they work together, so we can pinpoint where something went wrong when an answer is incorrect.

- Traceability – We will capture every step of the agent in Langfuse using spans. When a user receives an incorrect answer, we can use the spans in the trace to identify exactly where in the agent’s process the error occurred.

- Session Tracking – We will group all interactions between the user and the agent under a single `session_id` in the Langfuse dashboard, which lets us replay the whole conversation for context.

- Feedback Loop – We will attach user feedback buttons directly to the trace, so if a user downvotes an answer, we can see immediately which retrieval or prompt led to that downvote.

Getting Started

You can set up the agent quickly and easily.

Prerequisites

Installation

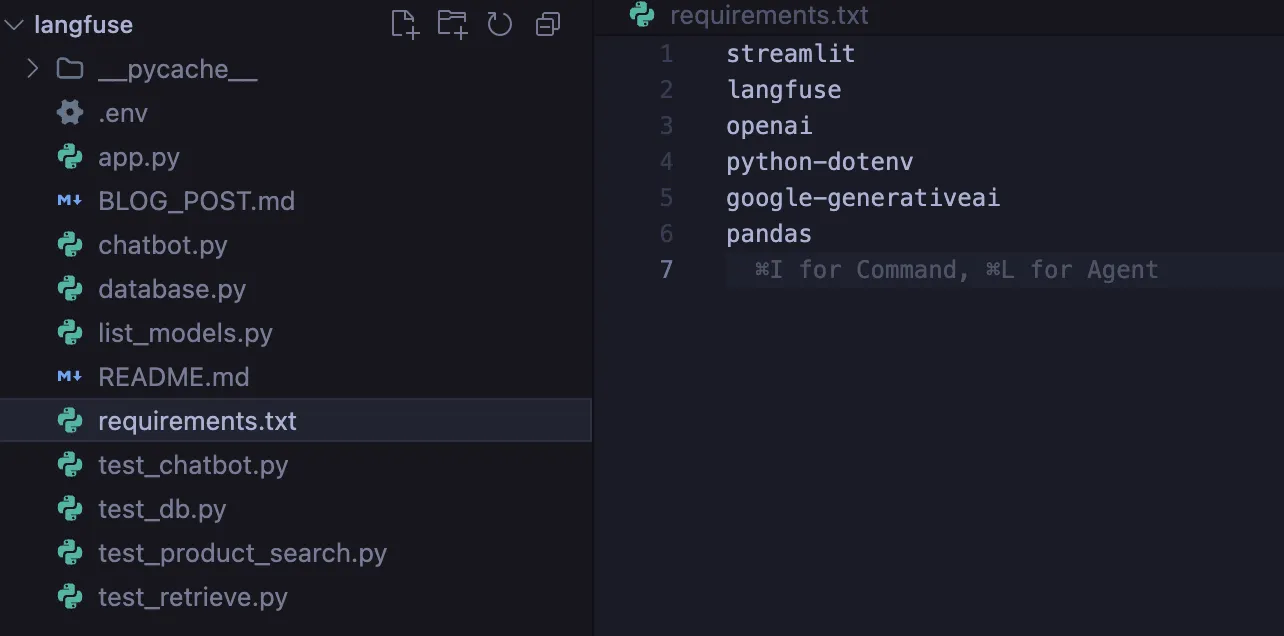

The first thing you need to do is install the following dependencies, which include the Langfuse SDK and Google’s Generative AI library.

    pip install langfuse streamlit google-generativeai python-dotenv numpy scikit-learn

Configuration

After you finish installing the libraries, create a .env file where your credentials will be stored securely.

    GOOGLE_API_KEY=your_gemini_key
    LANGFUSE_PUBLIC_KEY=pk-lf-...
    LANGFUSE_SECRET_KEY=sk-lf-...
    LANGFUSE_HOST=https://cloud.langfuse.com
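
Before starting the app, it can help to fail fast when a credential is missing. The following is a minimal sketch and not part of the original project: the helper name `missing_credentials` is hypothetical, it uses only the standard library, and it simply checks the four variable names listed above.

```python
import os

# Variable names taken from the .env file above.
REQUIRED_KEYS = (
    "GOOGLE_API_KEY",
    "LANGFUSE_PUBLIC_KEY",
    "LANGFUSE_SECRET_KEY",
    "LANGFUSE_HOST",
)


def missing_credentials(env=None):
    """Return the required keys that are absent or blank in `env`.

    `env` defaults to the process environment; pass a dict to test.
    (Hypothetical helper, not part of the Langfuse SDK.)
    """
    env = os.environ if env is None else env
    return [key for key in REQUIRED_KEYS if not env.get(key, "").strip()]


missing = missing_credentials()
if missing:
    print("Missing credentials:", ", ".join(missing))
else:
    print("All credentials are set.")
```

If you load the .env file with python-dotenv, call `load_dotenv()` before running this check so the values are present in the process environment.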

How To Build?

Step 1: The Semantic Knowledge Base

A traditional keyword search can break down when a user uses different words, such as synonyms. Therefore, we want to use vector embeddings to build a semantic search engine.

We will create a “meaning vector” (an embedding) for each of our products, then compare the query against them purely through math, i.e., cosine similarity.

    # db.py
    from sklearn.metrics.pairwise import cosine_similarity
    import google.generativeai as genai

    # product_vectors and get_top_matches are assumed to be defined elsewhere in db.py.

    def semantic_search(query):
        # Create a vector representation of the query
        query_embedding = genai.embed_content(
            model="models/text-embedding-004",
            content=query
        )["embedding"]

        # Using math, find the closest meanings to the query
        similarities = cosine_similarity([query_embedding], product_vectors)
        return get_top_matches(similarities)
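
To make the similarity step concrete, here is a small self-contained sketch of ranking products by cosine similarity in plain Python. The three-dimensional vectors are made-up values for illustration (real embeddings have hundreds of dimensions), "Office Stapler" is an invented product, and the `top_matches` helper is hypothetical; it is not the article's `get_top_matches`.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)


def top_matches(query_vec, catalog, k=2):
    """Return the k (name, score) pairs most similar to the query."""
    scored = [(name, cosine_similarity(query_vec, vec))
              for name, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy "meaning vectors" (hypothetical values, not real embeddings).
catalog = {
    "Quantum Wireless Mouse": [0.9, 0.1, 0.0],
    "UltraView Monitor": [0.7, 0.3, 0.1],
    "Office Stapler": [0.0, 0.1, 0.9],
}
query = [0.8, 0.2, 0.0]  # stands in for the embedding of "gaming gear"

results = top_matches(query, catalog, k=2)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

The two gaming-related products point in nearly the same direction as the query and rank first, while the stapler's vector is almost orthogonal to it, even though no product name shares a keyword with the query.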

Step 2: The “Brain”: Intelligent Routing

When a user just says “Hi,” there is no need to search the database, so we first classify the user’s intent and route accordingly.

You will see that we also automatically capture input, output, and latency using the @langfuse.observe decorator. Like magic!

    # Assumes the Langfuse SDK is imported as `langfuse` (setup depends on your SDK version).
    @langfuse.observe(as_type="generation")
    def classify_user_intent(user_input):
        prompt = f"""
        Use the following user input to classify the user's intent into one of the three categories:
        1. PRODUCT_SEARCH
        2. ORDER_STATUS
        3. GENERAL_CHAT
        Input: {user_input}
        """
        # Call Gemini model here...
        intent = "PRODUCT_SEARCH"  # Placeholder return value
        return intent
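
Because the model replies in free text, its raw output usually needs to be normalized into one of the three labels before routing. Below is a minimal sketch of that step. The helper `parse_intent` and the fallback to GENERAL_CHAT for unrecognized replies are assumptions for illustration; neither appears in the original code.

```python
VALID_INTENTS = ("PRODUCT_SEARCH", "ORDER_STATUS", "GENERAL_CHAT")


def parse_intent(raw_output, default="GENERAL_CHAT"):
    """Map a model's free-text reply onto one of the known intent labels.

    Hypothetical helper: it tolerates surrounding whitespace, lowercase
    text, spaces instead of underscores, and replies such as
    "1. PRODUCT_SEARCH".
    """
    normalized = raw_output.strip().upper().replace(" ", "_")
    for intent in VALID_INTENTS:
        if intent in normalized:
            return intent
    # Unknown or empty reply: fall back to the default route.
    return default


print(parse_intent("  product_search\n"))
print(parse_intent("Intent: order status"))
print(parse_intent("I am not sure"))
```

Falling back to general chat means an unexpected reply never triggers a database search or an order lookup by accident.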

Step 3: The Agent’s Workflow

Now we stitch the process together. The agent will Perceive (get input), Think (classify), and then Act (route).

We use the method lf_client.update_current_trace to tag the conversation with metadata such as the session_id.

    # Assumes `langfuse` and `lf_client` are initialized elsewhere (setup depends on your SDK version).
    @langfuse.observe()  # Root trace
    def handle_customer_user_input(user_input, session_id):
        # Tag the session
        lf_client.update_current_trace(session_id=session_id)

        # Think
        intent = classify_user_intent(user_input)

        # Act based on classified intent
        if intent == "PRODUCT_SEARCH":
            context = semantic_search(user_input)
        elif intent == "ORDER_STATUS":
            context = check_order_status(user_input)
        else:
            context = None  # Optional fallback for GENERAL_CHAT or unknown intents

        # Return the response
        response = generate_ai_response(context, intent)
        return response

Step 4: User Interface and Feedback System

We create an enhanced Streamlit user interface. A significant change is that the feedback buttons send a score back to Langfuse, keyed by the trace ID associated with that specific user conversation.

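    # app.py
    import streamlit as st

    # trace_id is the ID of the trace that produced the displayed answer.
    # Note: the scoring method name may differ between Langfuse SDK versions.
    col1, col2 = st.columns(2)
    if col1.button("👍"):
        lf_client.score(trace_id=trace_id, name="user-satisfaction", value=1)
    if col2.button("👎"):
        lf_client.score(trace_id=trace_id, name="user-satisfaction", value=0)

Inputs, Outputs and Analyzing Results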

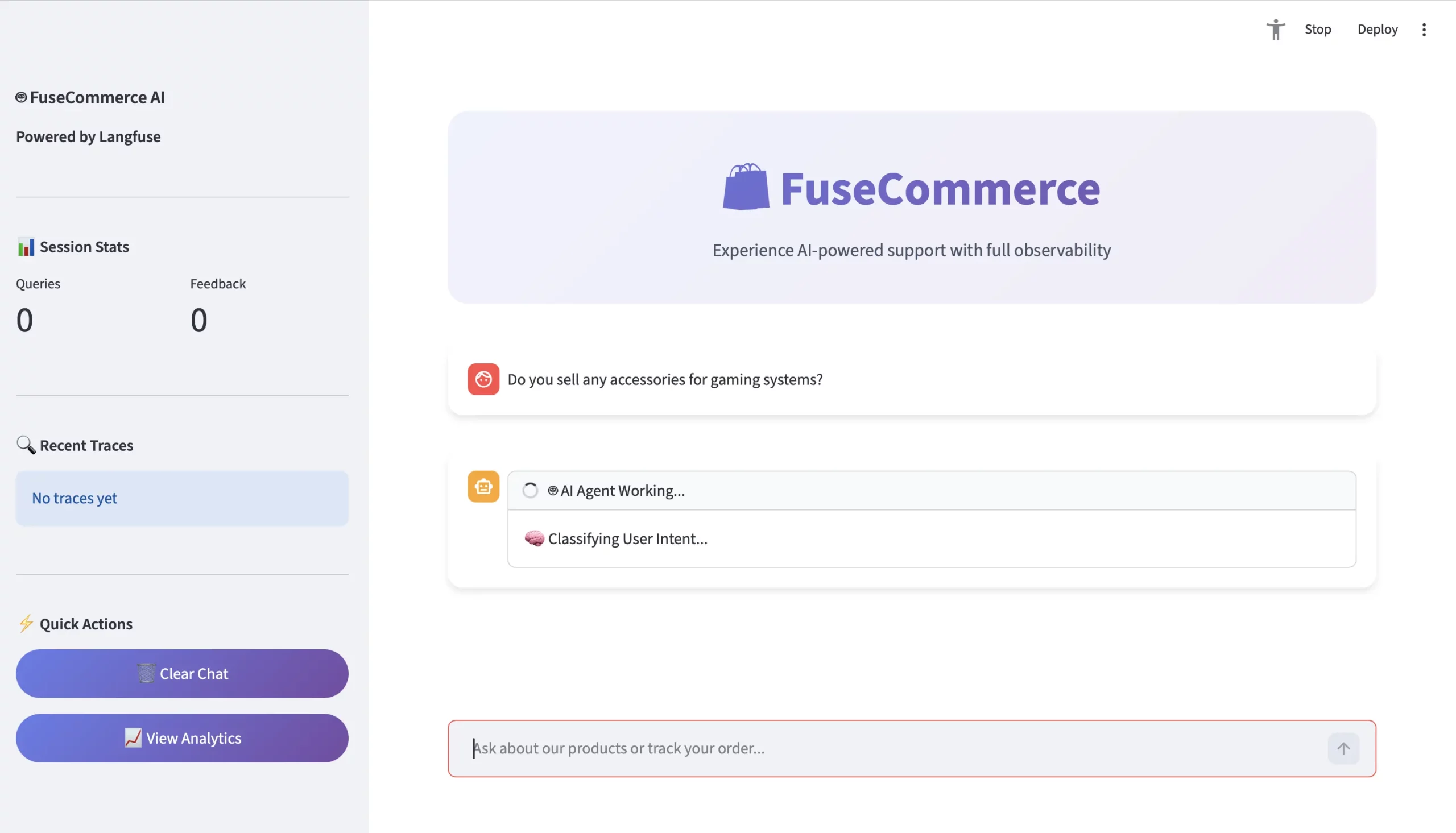

Let’s take a closer look at a user’s inquiry: “Do you sell any accessories for gaming systems?”

- The Inquiry

- User: “Do you sell any accessories for gaming systems?”

- Context: No exact match on the keyword “accessory”.

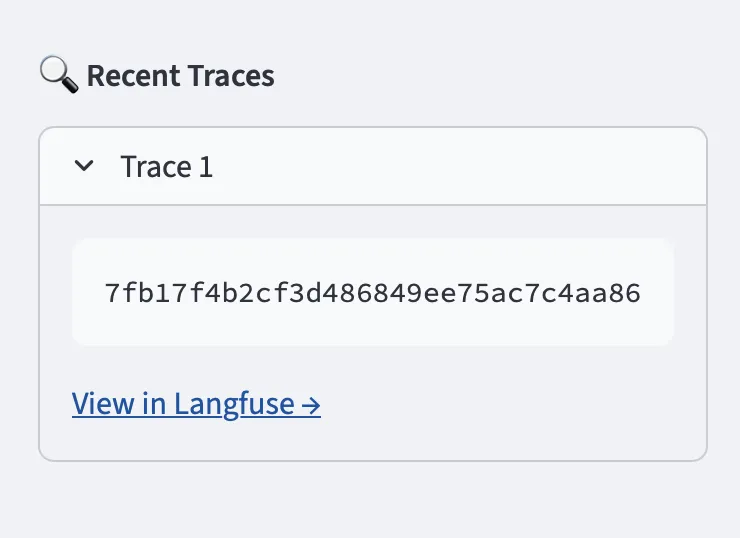

- The Trace (Langfuse Point of View)

Langfuse will create a trace view to visualize the nested hierarchy:

TRACE: agent-conversation (1.5 seconds)

- Generation: classify_intent –> Output = PRODUCT_SEARCH

- Span: retrieve_knowledge –> Semantic Search = geometrically maps the gaming query to Quantum Wireless Mouse and UltraView Monitor.

- Generation: generate_ai_response –> Output = “Yes! For gaming systems, we recommend the Quantum Wireless Mouse…”

- Analysis

Once the user clicks thumbs up, Langfuse receives a score of 1. You can see the total number of thumbs-up clicks per day along with the daily average. You will also have a cumulative visual dashboard showing:

- Average Latency: Is your semantic search slow?

- Intent Accuracy: Is the routing hallucinating?

- Cost / Session: How much does it cost to use Gemini?
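
Questions like these reduce to simple aggregations over per-trace records. The sketch below computes average latency, thumbs-up rate, and cost per session locally from made-up data. The record fields (`session_id`, `latency_s`, `cost_usd`, `score`) and every number are assumptions for illustration, not Langfuse's export schema. Note that the thumbs-up rate measures user satisfaction, which is only a proxy for intent accuracy.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-trace records; field names and values are made up.
traces = [
    {"session_id": "s1", "latency_s": 1.5, "cost_usd": 0.002, "score": 1},
    {"session_id": "s1", "latency_s": 0.9, "cost_usd": 0.001, "score": 1},
    {"session_id": "s2", "latency_s": 2.1, "cost_usd": 0.003, "score": 0},
    {"session_id": "s2", "latency_s": 1.1, "cost_usd": 0.002, "score": None},
]


def summarize(records):
    """Aggregate latency, feedback, and cost across trace records."""
    # Traces without feedback (score is None) are excluded from the rate.
    scores = [r["score"] for r in records if r["score"] is not None]

    # Sum the cost of every trace that belongs to the same session.
    cost_by_session = defaultdict(float)
    for r in records:
        cost_by_session[r["session_id"]] += r["cost_usd"]

    return {
        "avg_latency_s": mean([r["latency_s"] for r in records]),
        "thumbs_up_rate": mean(scores) if scores else None,
        "avg_cost_per_session_usd": mean(list(cost_by_session.values())),
    }


summary = summarize(traces)
for metric, value in summary.items():
    print(f"{metric}: {value}")
```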

Conclusion

By implementing Langfuse, we transformed a black-box chatbot into a transparent, observable system, and that visibility is what lets us build user trust as the product’s capabilities grow.

We showed that our agent can “think” through Intent Classification, “understand” through Semantic Search, and “learn” through user Feedback scores. This architecture serves as a foundation for modern AI systems that operate in real-world environments.

Frequently Asked Questions

A. Langfuse provides tracing, metrics, and evaluation tools to debug, monitor, and improve LLM agents in production.

A. It uses intent classification to detect the query type, then routes to semantic search, order lookup, or general chat logic.

A. User feedback is logged per trace, enabling performance monitoring and iterative optimization of prompts, retrieval, and routing.
