The ecosystem of retrieval-augmented generation (RAG) has taken off in the last couple of years. More and more open-source projects aimed at helping developers build RAG applications are now visible across the internet. And why not? RAG is an effective way to augment large language models (LLMs) with an external knowledge source. So we thought, why not share the best GitHub repositories for mastering RAG systems with our readers?

But before we do that, here is a little about RAG and its applications.

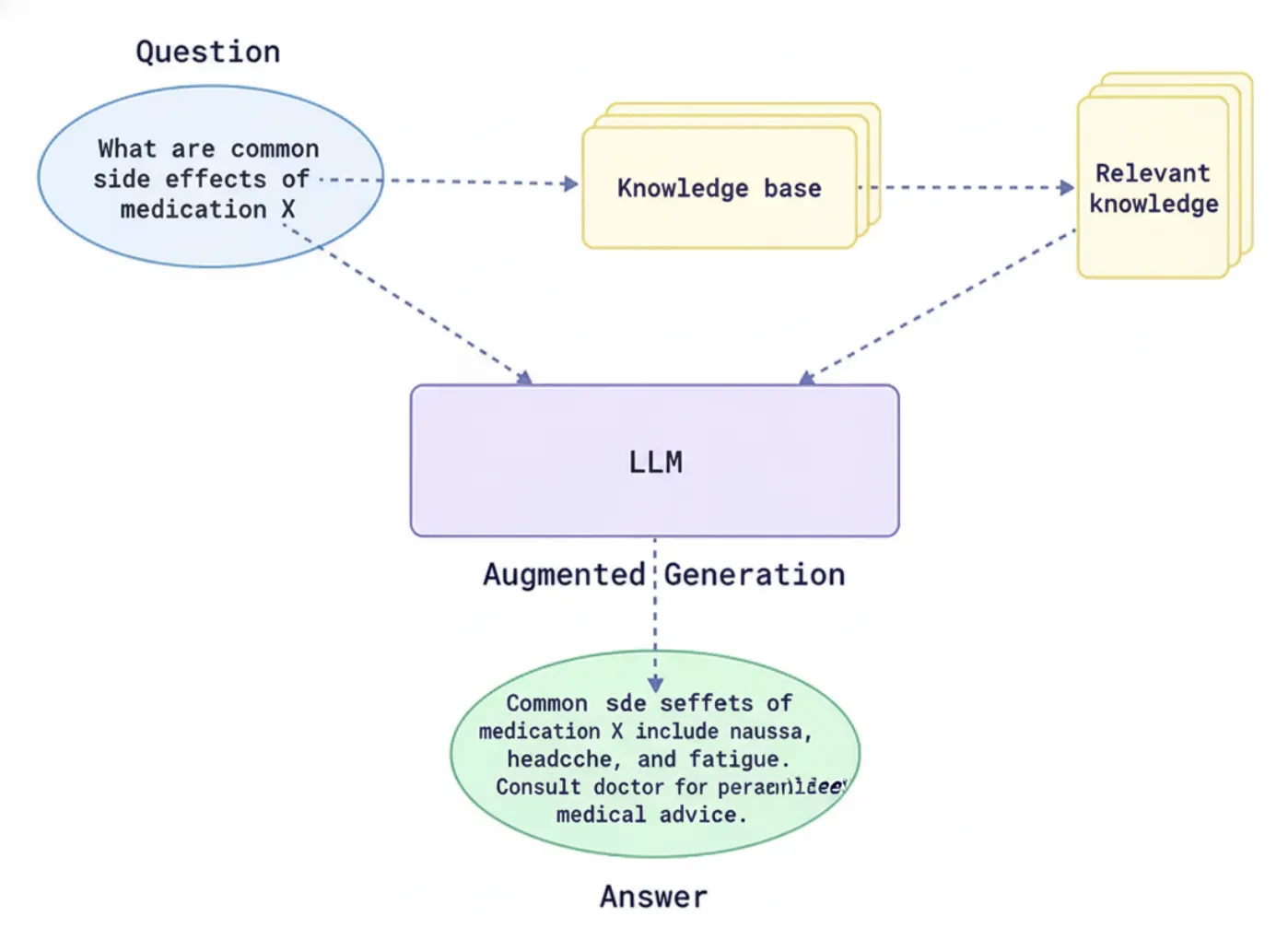

RAG pipelines operate in the following manner:

- The system retrieves documents or data,

- It keeps the data that is informative or useful for completing the user prompt, and

- It feeds that context into an LLM to produce a response that is accurate and informed by that context.
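The three steps above can be sketched in a few lines of plain Python. This is only a toy illustration, not how any of the frameworks below work internally: the document list, the word-overlap scoring, and the `fake_llm` stand-in are all assumptions made for the example.

```python
import re

# Hypothetical in-memory knowledge source (illustrative data only).
DOCUMENTS = [
    "RAG stands for retrieval-augmented generation.",
    "RAG combines search and LLMs for better answers.",
    "Bananas are rich in potassium.",
]


def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Steps 1 and 2: score documents by word overlap and keep the useful ones."""
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap, then keep the top_k highest scores.
    relevant = [(score, doc) for score, doc in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_prompt(question, context_docs):
    """Step 3, first half: place the retrieved context in front of the question."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


def fake_llm(prompt):
    """Stand-in for a real LLM call; it only reports how much text it received."""
    return f"[LLM would answer here, given a {len(prompt)}-character prompt]"


question = "What does RAG stand for?"
context_docs = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, context_docs)
print(prompt)
print(fake_llm(prompt))
```

The unrelated document never reaches the prompt, which is the whole point of the retrieval step: the LLM only sees context that is likely to help.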

As mentioned, we will explore different open-source RAG frameworks and their GitHub repositories that enable users to easily build RAG systems. The aim is to help developers, students, and tech enthusiasts choose a RAG toolkit that suits their needs and put it to use.

Why You Should Master RAG Systems

Retrieval-augmented generation has quickly emerged as one of the most impactful innovations in the field of AI. As companies place more and more focus on implementing smarter, context-aware systems, mastering it is no longer optional. Companies are using RAG pipelines for chatbots, knowledge assistants, and enterprise automation. This ensures that their AI models use real-time, domain-specific data rather than relying solely on pre-trained knowledge.

At a time when RAG is being used to power smarter chatbots, assistants, and enterprise tools, understanding it thoroughly can give you a significant competitive edge. Knowing how to build and optimize RAG pipelines can open up numerous doors in AI development, data engineering, and automation. This will ultimately make you more marketable and help future-proof your career.

In the quest for that mastery, here are the top GitHub repositories for RAG systems. But before that, a look at how these RAG frameworks actually help.

What Does the RAG Framework Do?

The retrieval-augmented generation (RAG) framework is an AI architecture developed to improve the capabilities of LLMs by integrating external information into the response generation process. This makes the LLM’s responses more informed and more up to date than the data used when the language model was originally trained. The model can retrieve relevant documents or data from external databases, knowledge repositories, or APIs. It can then use that material to generate responses to user queries rather than relying only on the data from the originally trained model.

This enables the model to process questions and develop answers that are correct, date-sensitive, and relevant to context. It also helps mitigate issues related to the knowledge cut-off and to hallucination, that is, incorrect responses to prompts. By connecting to both general and domain-specific knowledge sources, RAG enables an AI system to provide responsible, trustworthy responses.

You can read all about RAG systems here.

Applications span use cases like customer support, search, compliance, data analytics, and more. RAG systems also reduce the need to frequently retrain the model, or to train it on individual users’ data, in order to keep its responses current.

Top Repositories to Master RAG Systems

Now that we know how RAG systems help, let us explore the top GitHub repositories with detailed tutorials, code, and resources for mastering RAG systems. These GitHub repositories will help you master the tools, skills, frameworks, and theories necessary for working with RAG systems.

1. LangChain

LangChain is a complete LLM toolkit that enables developers to create sophisticated applications with features such as prompts, memory, agents, and data connectors. From loading documents to splitting text, embedding and retrieval, and generating outputs, LangChain provides modules for every step of a RAG pipeline.

LangChain (know all about it here) boasts a rich ecosystem of integrations with providers such as OpenAI, Hugging Face, Azure, and many others. It also supports multiple languages, including Python, JavaScript, and TypeScript. LangChain features a step-by-step process design, allowing you to mix and match tools, build agent workflows, and use built-in chains.

- LangChain’s core feature set includes a tool chaining system, rich prompt templates, and first-class support for agents and memory.

- LangChain is open-source (MIT license) with a huge community (70K+ GitHub stars).

- Components: Prompt templates, LLM wrappers, vector store connectors, agents (tools + reasoning), memory, and so on.

- Integrations: LangChain supports many LLM providers (OpenAI, Azure, local LLMs), embedding models, and vector stores (FAISS, Pinecone, Chroma, and so on).

- Use Cases: Custom chatbots, document QA, multi-step workflows, RAG and agentic tasks.
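Of the pipeline steps mentioned above, text splitting is the easiest to picture in isolation. The sketch below is a plain-Python, character-based splitter with overlapping chunks. It is not LangChain’s own splitter, and the function name `split_text` and its default sizes are assumptions chosen for illustration.

```python
def split_text(text, chunk_size=40, overlap=10):
    """Split text into chunks of at most chunk_size characters.

    Consecutive chunks share `overlap` characters, so content cut at a
    boundary still appears intact in at least one chunk.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
    return chunks


sample = (
    "RAG stands for retrieval-augmented generation. "
    "It combines search and LLMs for better answers."
)
chunks = split_text(sample)
for chunk in chunks:
    print(repr(chunk))
```

Each chunk would then be embedded and stored on its own, so the retriever can return a focused passage instead of a whole document.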

Usage Example

LangChain’s high-level APIs make simple RAG pipelines concise. For example, here we use LangChain to answer a question using a small set of documents with OpenAI’s embeddings and LLM:

    # Legacy import paths; newer LangChain releases move these classes into
    # the langchain_community and langchain_openai packages.
    from langchain.embeddings import OpenAIEmbeddings
    from langchain.vectorstores import FAISS
    from langchain.llms import OpenAI
    from langchain.chains import RetrievalQA

    # Sample documents to index
    docs = ["RAG stands for retrieval-augmented generation.", "It combines search and LLMs for better answers."]

    # 1. Create embeddings and vector store (needs OPENAI_API_KEY in the environment)
    vectorstore = FAISS.from_texts(docs, OpenAIEmbeddings())

    # 2. Build a QA chain (LLM + retriever) with a completion-style OpenAI model
    qa = RetrievalQA.from_chain_type(
        llm=OpenAI(model_name="gpt-3.5-turbo-instruct"),
        retriever=vectorstore.as_retriever()
    )

    # 3. Run the query
    result = qa({"query": "What does RAG mean?"})
    print(result["result"])

This code takes the docs and loads them into a FAISS vector store using OpenAI embeddings. It then uses RetrievalQA to fetch the relevant context and generate an answer. LangChain abstracts away the retrieval and the LLM call. (For additional instructions, please refer to the LangChain APIs and tutorials.)

For more, check LangChain’s GitHub repository here.

2. Haystack by deepset-ai

Haystack, by deepset, is an enterprise-oriented RAG framework built around composable pipelines. The main idea is a graph-like pipeline in which you wire together nodes (i.e., components), such as retrievers, readers, and generators, into a directed graph. Haystack is designed for production deployment and offers many backend choices for document storage and retrieval, including Elasticsearch, OpenSearch, Milvus, Qdrant, and many more.

- It offers both keyword-based (BM25) and dense retrieval and makes it easy to plug in open-source readers (Transformers QA models) or generative answer generators.

- It’s open-source (Apache 2.0) and very mature (10K+ stars).

- Architecture: Pipeline-centric and modular. Nodes can be plugged in and swapped out as needed.

- Components include: Document stores (Elasticsearch, in-memory, and so on), retrievers (BM25, dense), readers (e.g., Hugging Face QA models), and generators (OpenAI, local LLMs).

- Ease of Scaling: Distributed setup (Elasticsearch clusters), GPU support, REST APIs, and Docker.

- Potential use cases include: RAG for search, document QA, summarization applications, and monitoring user queries.
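To make the keyword-based (BM25) retrieval mentioned above more concrete, here is a minimal sketch of the standard Okapi BM25 scoring formula in plain Python. It is not Haystack’s implementation. The tokenizer, the sample documents, and the parameter defaults `k1=1.5` and `b=0.75` are assumptions chosen for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_scores(query, documents, k1=1.5, b=0.75):
    """Return one BM25 score per document for the given query."""
    tokenized = [tokenize(doc) for doc in documents]
    doc_count = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / doc_count

    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    query_terms = set(tokenize(query))
    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query_terms:
            if term not in term_freq:
                continue
            # Rare terms get a higher inverse document frequency.
            idf = math.log((doc_count - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            # Term frequency saturates via k1 and is length-normalized via b.
            norm = term_freq[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


documents = [
    "RAG stands for retrieval-augmented generation.",
    "Haystack wires retrievers and generators into pipelines.",
    "Elasticsearch is a document store.",
]
scores = bm25_scores("retrieval-augmented generation", documents)
best = max(range(len(documents)), key=scores.__getitem__)
print(documents[best])
print(scores)
```

Because BM25 matches exact tokens, the second document scores zero even though "retrievers" and "generators" are closely related words. Dense retrieval exists to close that gap.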

Usage Example

Below is a simplified example using Haystack’s node-based pipeline API (the haystack.nodes style used in the 1.x releases) to create a small RAG pipeline:

    import os

    from haystack.document_stores import InMemoryDocumentStore
    from haystack.nodes import BM25Retriever, OpenAIAnswerGenerator
    from haystack.pipelines import Pipeline

    # 1. Prepare a document store (BM25 must be enabled for BM25Retriever)
    doc_store = InMemoryDocumentStore(use_bm25=True)
    documents = [{"content": "RAG stands for retrieval-augmented generation."}]
    doc_store.write_documents(documents)

    # 2. Set up retriever and generator
    retriever = BM25Retriever(document_store=doc_store)
    generator = OpenAIAnswerGenerator(
        api_key=os.environ["OPENAI_API_KEY"],
        model="gpt-3.5-turbo-instruct",
    )

    # 3. Build the pipeline ("Query" is the pipeline's root input)
    pipe = Pipeline()
    pipe.add_node(component=retriever, name="Retriever", inputs=["Query"])
    pipe.add_node(component=generator, name="Generator", inputs=["Retriever"])

    # 4. Run the RAG query
    result = pipe.run(query="What does RAG mean?")
    print(result["answers"][0].answer)

This code writes one document into an in-memory store, uses BM25 to find relevant text, then asks the OpenAI model to answer. Haystack’s Pipeline orchestrates the flow. For more, check the deepset repository here.

Also, check out how to build an Agentic QA RAG system using Haystack here.

3. LlamaIndex

LlamaIndex, formerly known as GPT Index, is a data-centric RAG framework focused on indexing and querying your data for LLM use. Think of LlamaIndex as a set of tools for building custom indexes over documents (vector indexes, keyword indexes, graphs) and then querying them. LlamaIndex is a powerful way to connect different data sources such as text files, APIs, and SQL databases to LLMs using index structures.

For example, you can create a vector index of all your files and then use a built-in query engine to answer any questions you have, all using LlamaIndex. LlamaIndex offers both high-level APIs and low-level modules, so you can customize every part of the RAG process.

- LlamaIndex is open source (MIT License) with a growing community (45K+ stars).

- Data connectors (for PDFs, docs, web content), multiple index types (vector store, tree, graph), and a query engine that lets you navigate your data efficiently.

- Simply plug it into LangChain or other frameworks. LlamaIndex works with any LLM or embedding model (OpenAI, Hugging Face, local LLMs).

- LlamaIndex lets you build your RAG agents more easily by automatically creating the index and then fetching the context from the index.
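To show what an index over documents looks like in its simplest form, here is a toy keyword (inverted) index in plain Python. It only illustrates the build-an-index-then-query idea described above. The class `KeywordIndex` and its methods are hypothetical names, not part of LlamaIndex.

```python
import re
from collections import defaultdict


class KeywordIndex:
    """Toy inverted index: maps each word to the ids of documents containing it."""

    def __init__(self):
        self.documents = {}
        self.postings = defaultdict(set)

    def add(self, doc_id, text):
        """Store the document and register each of its words in the index."""
        self.documents[doc_id] = text
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            self.postings[word].add(doc_id)

    def query(self, question):
        """Return documents ranked by how many question words they contain."""
        hits = defaultdict(int)
        for word in set(re.findall(r"[a-z0-9]+", question.lower())):
            for doc_id in self.postings.get(word, ()):
                hits[doc_id] += 1
        # Most matching words first; ties are broken by document id.
        ranked = sorted(hits, key=lambda doc_id: (-hits[doc_id], doc_id))
        return [self.documents[doc_id] for doc_id in ranked]


index = KeywordIndex()
index.add("a", "LlamaIndex builds indexes over documents.")
index.add("b", "A query engine answers questions from an index.")
index.add("c", "SQL databases store tables.")
print(index.query("Which engine answers a query?"))
```

A vector index follows the same pattern, except that the lookup compares embeddings rather than exact words.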

Usage Example

LlamaIndex makes it very easy to create a searchable index from documents. For instance, using the core API:

    # llama_index.core is the import path in LlamaIndex 0.10 and later;
    # older releases import the same names from llama_index directly.
    from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

    # 1. Load documents (all files in the 'data' directory)
    documents = SimpleDirectoryReader("./data").load_data()

    # 2. Build a vector store index from the docs
    #    (the default settings call OpenAI, so OPENAI_API_KEY must be set)
    index = VectorStoreIndex.from_documents(documents)

    # 3. Create a query engine from the index
    query_engine = index.as_query_engine()

    # 4. Run a query against the index
    response = query_engine.query("What does RAG mean?")
    print(response)

This code reads the files in the ./data directory, indexes them in memory, and then queries the index. Printing the response shows the answer as text. For more, check the LlamaIndex repository here.

Or, build a RAG pipeline using LlamaIndex. Here is how.

4. RAGFlow

RAGFlow, from InfiniFlow, is a RAG engine designed for enterprises that need to handle complex, large-scale data. It is built around the goal of “deep document understanding”: parsing different formats such as PDFs, scanned documents, images, and tables, and organizing them into structured chunks.

RAGFlow features an integrated retrieval model with agent templates and visual tooling for debugging. Its key elements are advanced template-based chunking of documents and the notion of grounded citations. This helps reduce hallucinations because you can see which source texts support which answer.

- RAGFlow is open-source (Apache-2.0) with a strong community (65K stars).

- Highlights: deep document parsing (i.e., breaking down tables, images, and multi-policy documents), document chunking with template rules (custom rules for handling documents), and citations that show the provenance of the documents used to answer questions.

- Workflow: RAGFlow is used as a service, which means you start a server (using Docker) and then index your documents, either through a UI or an API. RAGFlow also has CLI tools and Python/REST APIs for building chatbots.

- Use Cases: Large enterprises dealing with document-heavy workloads, and use cases where code-based traceability and accuracy are a requirement.
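The idea behind grounded citations can be sketched without RAGFlow itself: every chunk carries a label for where it came from, and that label travels with the chunk into the answer. The snippet below is a simplified illustration. The `Chunk` class, the file names, and the word-overlap relevance check are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    """A piece of a source document, tagged with where it came from."""
    source: str
    text: str


def answer_with_citations(question, chunks):
    """Return the supporting chunks for a question plus their source labels.

    Relevance here is plain word overlap; a real engine would use a
    retrieval model instead.
    """
    question_words = set(question.lower().replace("?", "").split())
    supporting = [
        chunk for chunk in chunks
        if question_words & set(chunk.text.lower().rstrip(".").split())
    ]
    return {
        "context": [chunk.text for chunk in supporting],
        "citations": [chunk.source for chunk in supporting],
    }


chunks = [
    Chunk("policy.pdf#page=2", "Refunds are issued within 30 days."),
    Chunk("handbook.pdf#page=7", "Employees accrue leave monthly."),
]
result = answer_with_citations("When are refunds issued?", chunks)
print(result)
```

With the source label attached, a reader can open the cited page and check the claim, which is what makes the answer traceable.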

Usage Example

    import requests

    # Chat-completion endpoint of a locally running RAGFlow server;
    # "default" identifies the assistant being queried.
    api_url = "http://localhost:8000/api/v1/chats_openai/default/chat/completions"
    api_key = "YOUR_RAGFLOW_API_KEY"
    headers = {"Authorization": f"Bearer {api_key}"}

    data = {
        "model": "gpt-4o-mini",
        "messages": [{"role": "user", "content": "What is RAG?"}],
        "stream": False
    }

    response = requests.post(api_url, headers=headers, json=data)
    print(response.json()["choices"][0]["message"]["content"])

This example illustrates RAGFlow’s chat completion API, which is compatible with OpenAI’s. It sends a chat message to the “default” assistant, and the assistant uses the indexed documents as context. For more, check the repository.

5. txtai

txtai is an all-in-one AI framework that provides semantic search, embeddings, and RAG pipelines. It comes with an embeddable vector-searchable database, built on SQLite + FAISS, and utilities that let you orchestrate LLM calls. With txtai, once you have created an embeddings index from your text data, you can either connect it to an LLM manually in code or use the built-in RAG helper.

What I really like about txtai is its simplicity: it can run 100% locally (no cloud), it has a built-in template for a RAG pipeline, and it even provides autogenerated FastAPI services. It is also open source (Apache 2.0) and easy to prototype with and deploy.

- Open-source (Apache-2.0, 7K+ stars) Python package.

- Capabilities: Semantic search index (vector DB), RAG pipeline, and FastAPI service generation.

- RAG support: txtai has a RAG class that takes an Embeddings instance and an LLM and automatically inserts the retrieved context into LLM prompts for you.

- LLM flexibility: Use OpenAI, Hugging Face transformers, llama.cpp, or any model you want with your own LLM interface.

You can read more about txtai here.
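Inserting retrieved context into a prompt is, at its core, string templating. The sketch below shows that step in plain Python. The helper `fill_template` is a hypothetical name, and the snippet only mirrors the idea of a template with question and context placeholders, not txtai’s internals.

```python
TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Question: {question}\n"
    "Context: {context}"
)


def fill_template(question, passages, template=TEMPLATE):
    """Join retrieved passages and substitute them into the prompt template."""
    context = "\n".join(passages)
    return template.format(question=question, context=context)


passages = ["RAG stands for retrieval-augmented generation."]
prompt = fill_template("What does RAG mean?", passages)
print(prompt)
```

Keeping the template separate from the retrieval code makes it easy to reword the instructions without touching the rest of the pipeline.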

Usage Example

Here’s how simple it is to run a RAG query in txtai using the built-in pipeline:

    from txtai import Embeddings, LLM, RAG

    # 1. Initialize txtai components
    # content=True stores the text itself so the RAG pipeline can retrieve it
    embeddings = Embeddings(content=True)  # uses a local FAISS+SQLite by default
    embeddings.index([{"id": "doc1", "text": "RAG stands for retrieval-augmented generation."}])
    llm = LLM("text-davinci-003")  # placeholder name; substitute any model txtai can load

    # 2. Create a RAG pipeline
    prompt = "Answer the question using only the context below.\n\nQuestion: {question}\nContext: {context}"
    rag = RAG(embeddings, llm, template=prompt)

    # 3. Run the RAG query
    result = rag("What does RAG mean?", maxlength=512)
    print(result["answer"])

This code snippet takes a single document and runs a RAG pipeline. The RAG helper retrieves the relevant passages from the vector index and fills {context} in the prompt template. For more, check the repository here.

6. LLMWare

LLMWare is a complete RAG framework with a strong emphasis on inference with “smaller” specialized models that is secure and faster. Most frameworks rely on a large cloud LLM. LLMWare instead runs RAG pipelines with the computing power available on a desktop or local server. This limits the risk of data exposure while still using LLMs securely for large-scale pilot studies and a variety of applications.

LLMWare has no-code wizards and templates for typical RAG functionality, including document parsing and indexing. It also has tooling for various document formats (Office and PDF), which are useful first steps toward cognitive AI functionality for document analysis.

- Open source product (Apache-2.0, 14K+ stars) for enterprise RAG.

- An approach that focuses on “smaller” LLMs (e.g., Llama 7B variants), with inference running on-device and RAG functionality offered even on ARM devices.

- Tooling: CLI and REST APIs, interactive UIs, and pipeline templates.

- Unique characteristics: preconfigured pipelines, built-in capabilities for fact-checking, and plugin features for vector search and Q&A.

- Example users: enterprises that want RAG but cannot send data to the cloud (e.g., financial services or healthcare), or developers of mobile/edge AI applications.

Usage Example

LLMWare’s API is designed to be simple. Here’s a basic example based on their docs:

    from llmware.prompts import Prompt

    # 1. Load a model for prompting
    prompter = Prompt().load_model("llmware/bling-tiny-llama-v0")

    # 2. (Optionally) index a document to use as context
    prompter.add_source_document("./data", "doc.pdf", query="What is RAG?")

    # 3. Run the query with context
    response = prompter.prompt_with_source("What is RAG?")
    print(response)

This code uses an LLMWare Prompt object. We first specify a model (for example, a small Llama model from Hugging Face). We then point it at a folder that contains source documents. LLMWare parses “doc.pdf” into chunks and filters them by relevance to the user’s question. The prompt_with_source function then makes a request, passing the relevant context from the source. This returns a text answer along with response metadata. For more, check the repository here.

7. Cognita

Cognita by TrueFoundry is a production-ready RAG framework built for scalability and collaboration. It is primarily about making it easy to go from a notebook or experiment to a deployed service. It lets you wrap your RAG pipeline code in a well-structured layer with APIs and a no-code UI. Cognita does use LangChain/LlamaIndex modules under the hood, but organizes them with structure: data loaders, parsers, embedders, retrievers, and metric modules. It supports incremental indexing and has a web UI for non-developers to try uploading documents, selecting models, and querying them in real time.

- It is open source (Apache-2.0).

- Architecture: Fully API-based and containerized; it can run entirely locally through Docker Compose (including the UI).

- Components: Reusable libraries for parsers, loaders, embedders, retrievers, and more. Everything can be customized and scaled.

- UI and extensibility: A web frontend is provided for experimentation, along with a “model gateway” to manage the LLM/embedder configurations. This helps when developers and analysts work together to build out RAG pipeline components.
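Incremental indexing, mentioned above, means re-processing only what has changed. One common way to do that, shown here as an assumption rather than as Cognita’s actual mechanism, is to fingerprint each document’s content and skip documents whose fingerprint is unchanged. The class `IncrementalIndexer` is a hypothetical name.

```python
import hashlib


class IncrementalIndexer:
    """Re-index only documents whose content changed since the last run."""

    def __init__(self):
        self.fingerprints = {}  # doc_id -> SHA-256 hex digest of the content
        self.indexed = {}       # doc_id -> content; stands in for a vector store

    def sync(self, documents):
        """Index new or changed documents and return the ids that were (re)indexed."""
        updated = []
        for doc_id, text in documents.items():
            digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
            if self.fingerprints.get(doc_id) == digest:
                continue  # unchanged, so skip the expensive embedding step
            self.fingerprints[doc_id] = digest
            self.indexed[doc_id] = text
            updated.append(doc_id)
        return updated


indexer = IncrementalIndexer()
first = indexer.sync({"1": "RAG stands for retrieval-augmented generation."})
second = indexer.sync({
    "1": "RAG stands for retrieval-augmented generation.",
    "2": "Cognita supports incremental indexing.",
})
print(first, second)
```

On the second run only the new document is processed, which matters when embedding a large corpus is the slowest and most costly step.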

Usage Example

Cognita is primarily accessed through its command-line interface and internal API, but this is a conceptual pseudo-snippet in the style of a Python API:

    # Conceptual sketch only: the module, class, and method names below are
    # illustrative, not a verified Cognita API.
    from cognita.pipeline import Pipeline
    from cognita.schema import Document

    # Initialize a new RAG pipeline
    pipeline = Pipeline.create("rag")

    # Add documents (with text content)
    docs = [Document(id="1", text="RAG stands for retrieval-augmented generation.")]
    pipeline.index_documents(docs)

    # Query the pipeline
    result = pipeline.query("What does RAG mean?")
    print(result['answer'])

In a real implementation, you would use YAML to configure Cognita, or use its CLI instead, to load the data and kick off a service. The snippet above describes the flow: you create a pipeline, index your data, then ask questions. For more, check the complete documentation here and the repository here.

Conclusion

These open-source GitHub repositories for RAG systems offer extensive toolkits for developers, researchers, and hobbyists.

- LangChain and LlamaIndex offer flexible APIs for building customized pipelines and indexing solutions.

- Haystack offers production-tested NLP pipelines that scale with data ingestion.

- RAGFlow and LLMWare address enterprise needs, with LLMWare focused more narrowly on on-device models and security.

- In contrast, txtai offers a lightweight, simple, all-in-one local RAG solution, while Cognita takes care of everything with an easy, modular, UI-driven platform.

All of the GitHub repositories for RAG systems above are maintained and come with examples to help you get running easily. Together they demonstrate that RAG is no longer confined to the cutting edge of academic research, but is now accessible to everyone who wants to build an AI application. In practice, the “best option” depends on your needs and priorities.
