A couple of weeks in the past, my buddy Vasu requested me a easy however difficult query: “Is there a method I can run personal LLMs regionally on my laptop computer?” I instantly went searching weblog posts, YouTube tutorials, something and got here up empty-handed. Nothing I may discover actually defined it for non-engineers, for somebody who simply wished to make use of these fashions safely and privately.

That obtained me pondering. If a wise buddy like Vasu struggles to discover a clear useful resource, what number of others on the market are caught too? Individuals who aren’t builders, who don’t wish to wrestle with Docker, Python, or GPU drivers however who nonetheless need the magic of AI on their very own machine.

So right here we’re. Thanks, Vasu, for stating that want and nudging me to write down this information. This weblog is for anybody who needs to run state-of-the-art LLMs regionally, safely, and privately with out shedding your thoughts in setup hell.

We’ll stroll by means of the instruments I’ve tried: Ollama, LM Studio, and AnythingLLM (plus just a few honorable mentions). By the top, you’ll know not simply what works, however why it really works, and get your personal native AI working in 2025.

Why Run LLMs Regionally Anyway?

Earlier than we dive in, let’s step again. Why would anybody undergo the difficulty of working multi-gigabyte fashions on their private machine when OpenAI or Anthropic are only a click on away?

Three causes:

- Privateness & management: No API calls. No logs. No “your knowledge could also be used to enhance our fashions.” You may actually run Llama 3 or Mistral with out leaking something exterior your machine.

- Offline functionality: You may run it on a airplane. In a basement. Throughout a blackout (okay, possibly not). The purpose is that it’s native, it’s yours.

- Price and freedom: When you obtain the mannequin, it’s free to make use of. No subscription tiers, no per-token billing. You may load any open mannequin you want, fine-tune it, or swap it out tomorrow.

After all, the trade-off is {hardware}.

Working a 70B parameter mannequin on a MacBook Air is like attempting to launch a rocket utilizing a bicycle. However smaller fashions like 7B, 13B, even some environment friendly 30B variants run surprisingly effectively nowadays because of quantization and smarter runtimes like GGUF, llama.cpp, and so forth.

1. Ollama: The Minimalist Workhorse

The primary instrument we’ll see is Ollama. If you happen to’ve been on Reddit or Hacker Information currently, you’ve in all probability seen it pop up in each “native LLM” dialogue thread.

Putting in Ollama is ridiculously simple, you may straight obtain it from its web site, and also you’re up. No Docker. No Python hell. No CUDA driver nightmare.

That is the official web site for downloading the instrument:

It’s out there for MacOS, Linux and Home windows. As soon as put in, you may select your mannequin from the record of obtainable ones and simply obtain them too.

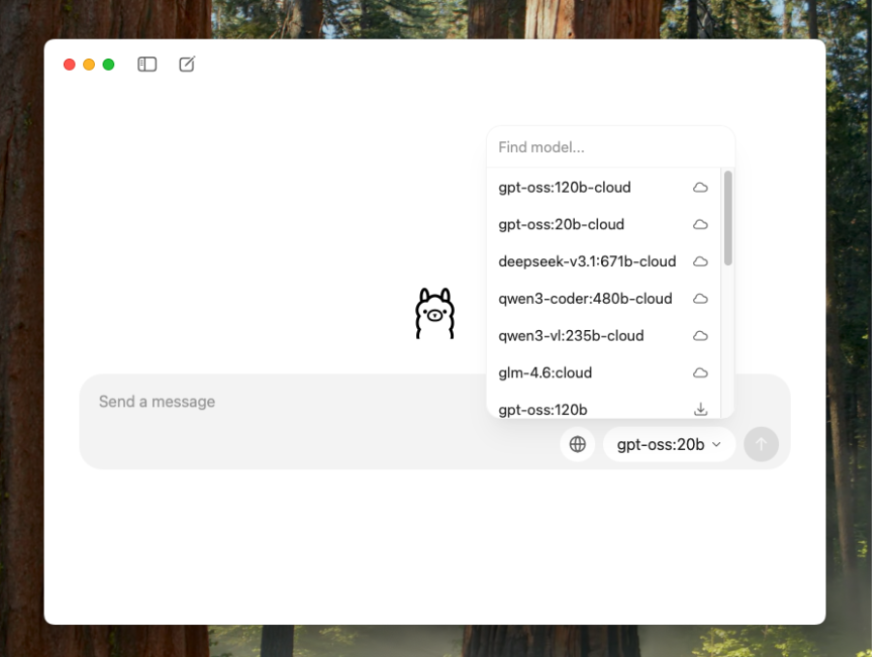

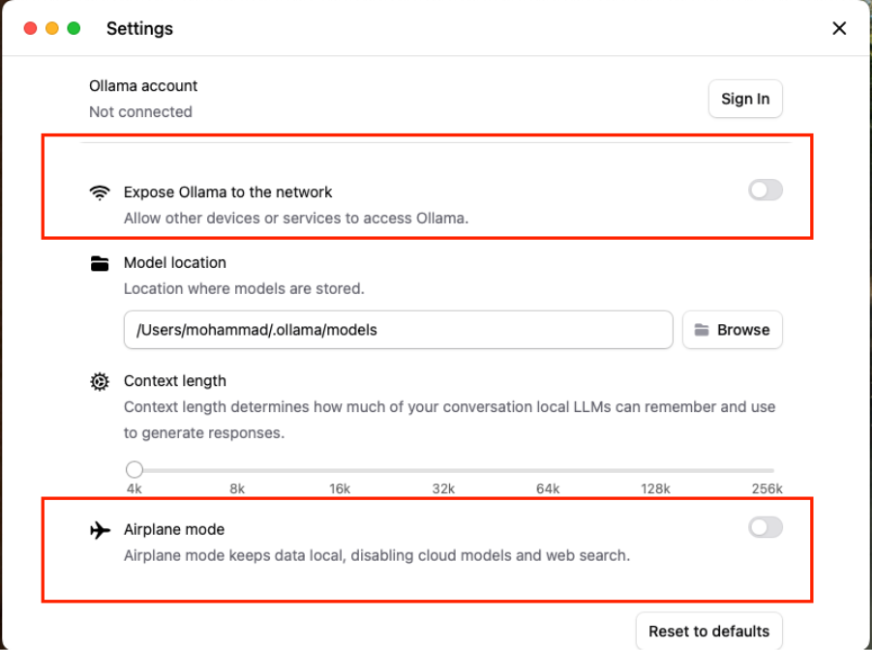

I downloaded Qwen3 4B and you can begin chatting immediately. Now, listed below are the helpful privateness settings you might do:

You may management whether or not Ollama talks to different gadgets in your community or not. Additionally, there’s this neat “Airplane mode” toggle that mainly locks all the pieces down: your chats, your fashions, all of it stays utterly native.

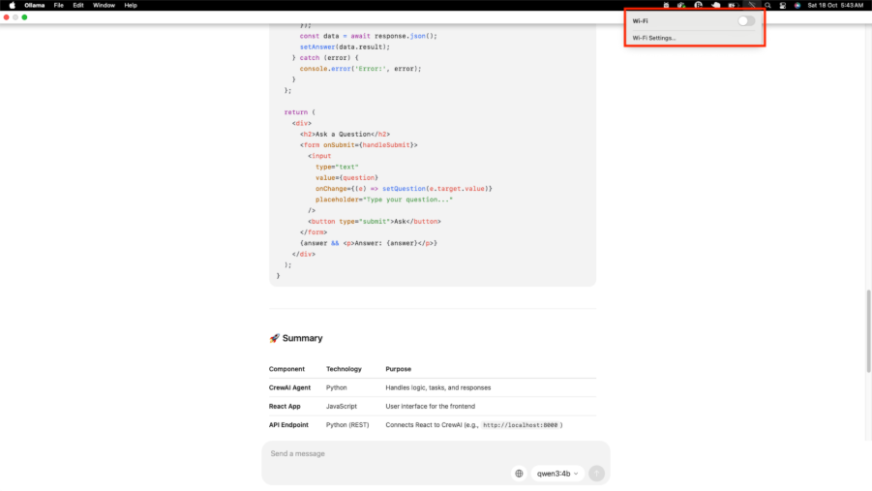

And naturally, I needed to check it the old-school method. I actually turned off my WiFi mid-chat simply to see if it nonetheless labored (spoiler: it did, haha).

What I appreciated?

- Tremendous clear UX: It feels acquainted to ChatGPT/Claude/Gemini when it comes to UI, and you’ll simply obtain fashions.

- Environment friendly useful resource administration: Ollama makes use of llama.cpp below the hood, and helps quantized fashions (This autumn, Q5, Q6, and so forth.), that means you may really run them on an honest MacBook with out killing it.

- API suitable: It provides you a neighborhood HTTP endpoint that mimics OpenAI’s API. So, in case you have present code utilizing openai.ChatCompletion.create, you may simply redirect it to http://localhost:11434.

- Integrations: Many apps like AnythingLLM, Chatbox, and even LM Studio can use Ollama as a backend. It’s just like the native mannequin engine everybody needs to plug into.

Ollama seems like a present. It’s steady, stunning, and makes native AI accessible to non-engineers. If you happen to simply wish to use fashions and never wrestle with setup, Ollama is ideal.

Full Information: Find out how to Run LLM Fashions Regionally with Ollama?

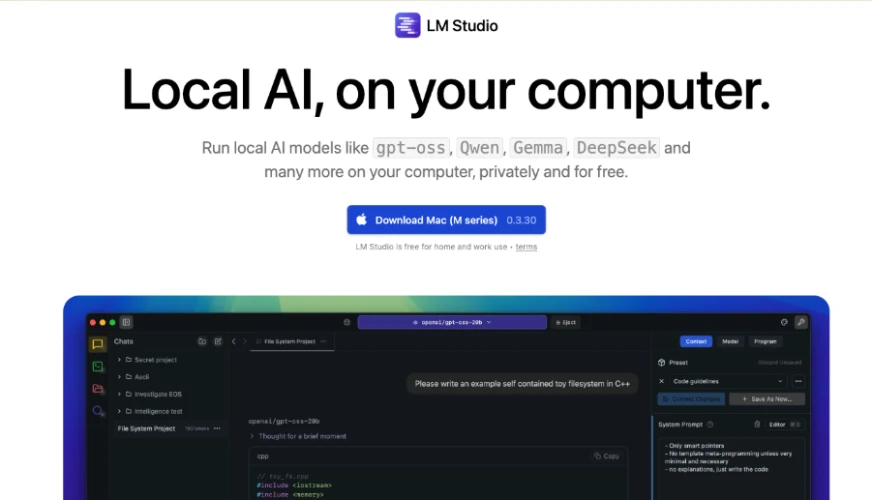

2. LM Studio: Native AI with Type

LM Studio provides you a slick desktop interface (Mac/Home windows/Linux) the place you may chat with fashions, browse open fashions from Hugging Face, and even tweak system prompts or sampling settings; all with out touching the terminal.

Once I first opened it, I felt “okay, that is what ChatGPT would seem like if it lived on my desktop and didn’t speak to a server.”

You may merely obtain LM Studio from its official web site:

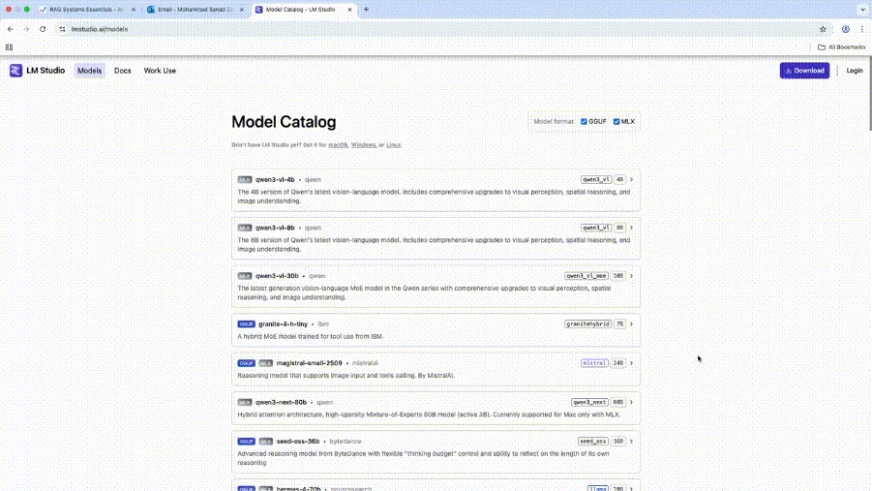

Discover the way it lists fashions equivalent to GPT-OSS, Qwen, Gemma, DeepSeek and extra as suitable fashions which can be free and can be utilized privately (downloaded to your machine). When you obtain it, it enables you to select your mode:

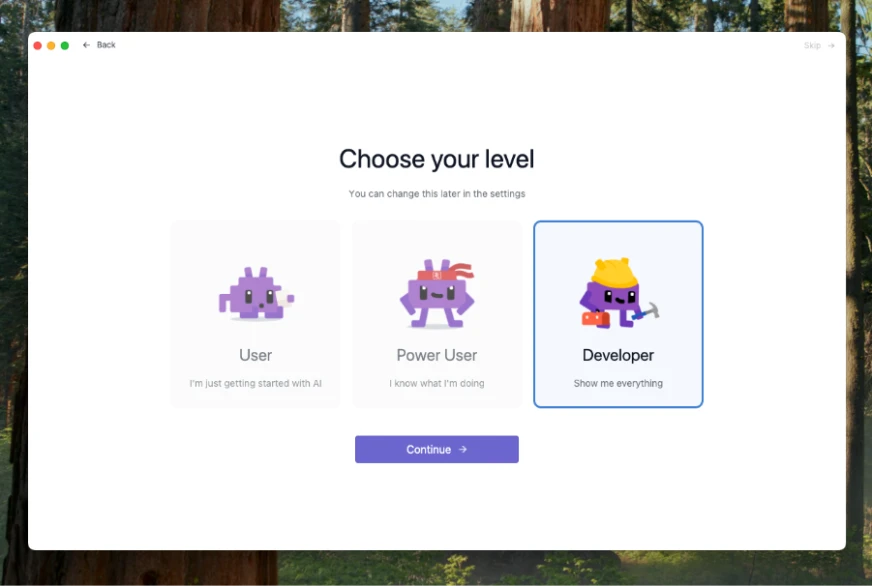

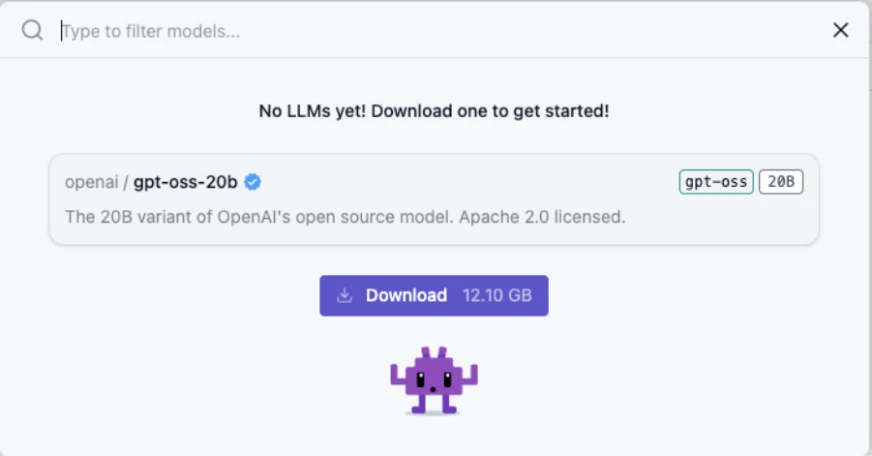

I selected developer mode as a result of I wished to see all of the choices/information it reveals in the course of the chat. Nonetheless, you may simply select person and begin working. It’s a must to select which mannequin to obtain subsequent:

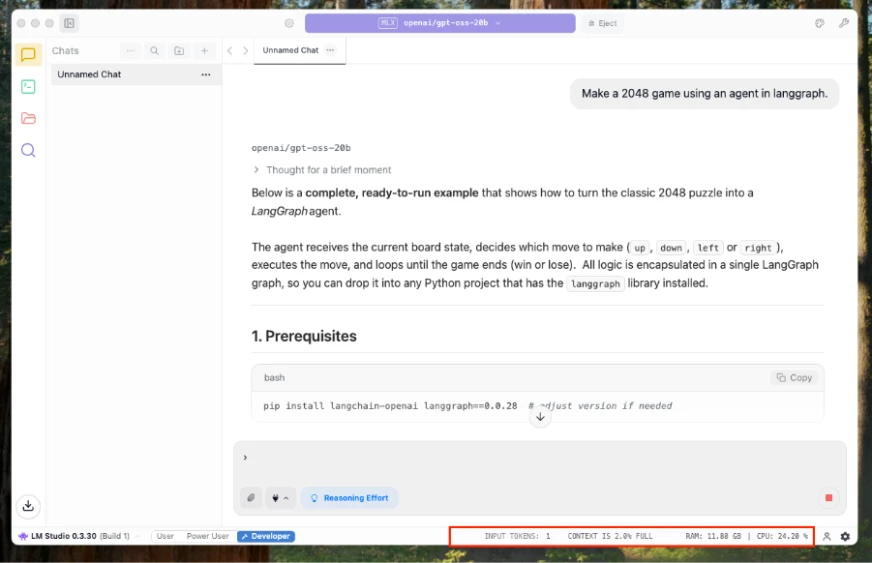

As soon as you’re accomplished, you may merely begin chatting with the mannequin. Moreover, since that is the developer mode, I used to be in a position to see further metrics in regards to the chat equivalent to CPU utilization and token utilization proper under:

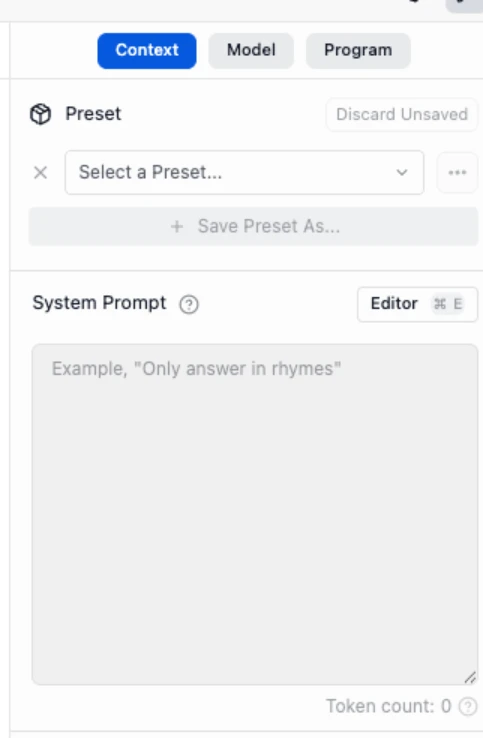

And, you will have extra options equivalent to capacity to set a “System Immediate” which is beneficial in establishing the persona of the mannequin or theme of the chat:

Lastly, right here’s the record of fashions it has out there to make use of:

What I appreciated?

- Stunning UI: Actually, LM Studio appears skilled. Multi-tab chat classes, reminiscence, immediate historical past, all cleanly designed.

- Ollama backend assist: LM Studio can use Ollama behind the scenes, that means you may load fashions by way of Ollama’s runtime whereas nonetheless chatting in LM Studio’s UI.

- Mannequin market: You may search and obtain fashions straight contained in the app: Llama 3, Mistral, Falcon, Phi-3, all are there.

- Parameter controls: You may tweak temperature, top-p, context size, and so forth. Nice for immediate experiments.

- Offline and native embeddings: It additionally helps embeddings regionally which useful if you wish to construct retrieval-augmented setups (RAG) with out web.

Full Information: Find out how to Run LLM Regionally Utilizing LM Studio?

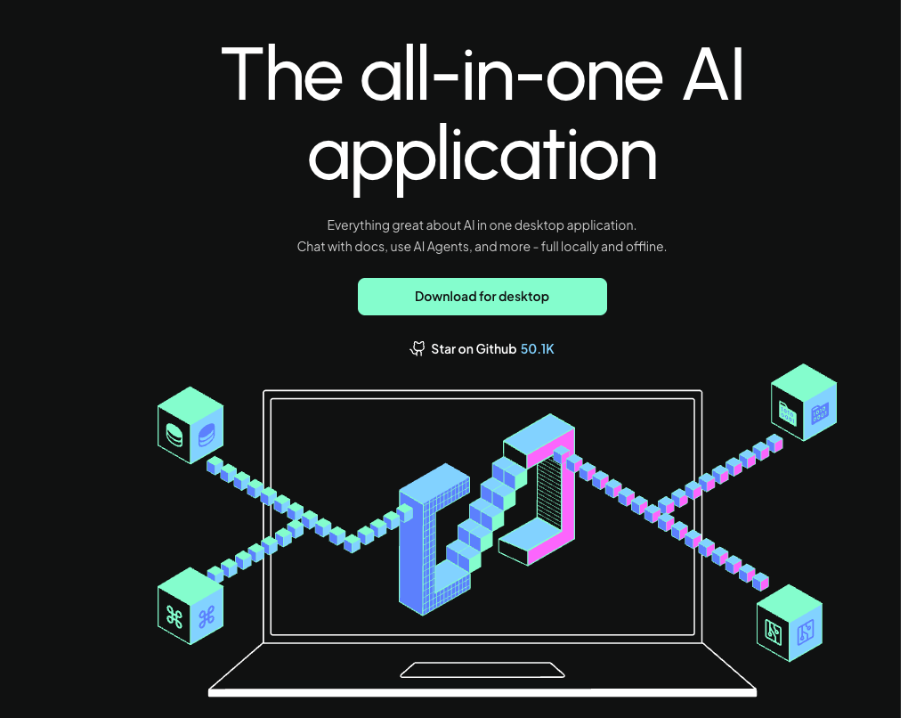

3. AnythingLLM: Making Native Fashions Truly Helpful

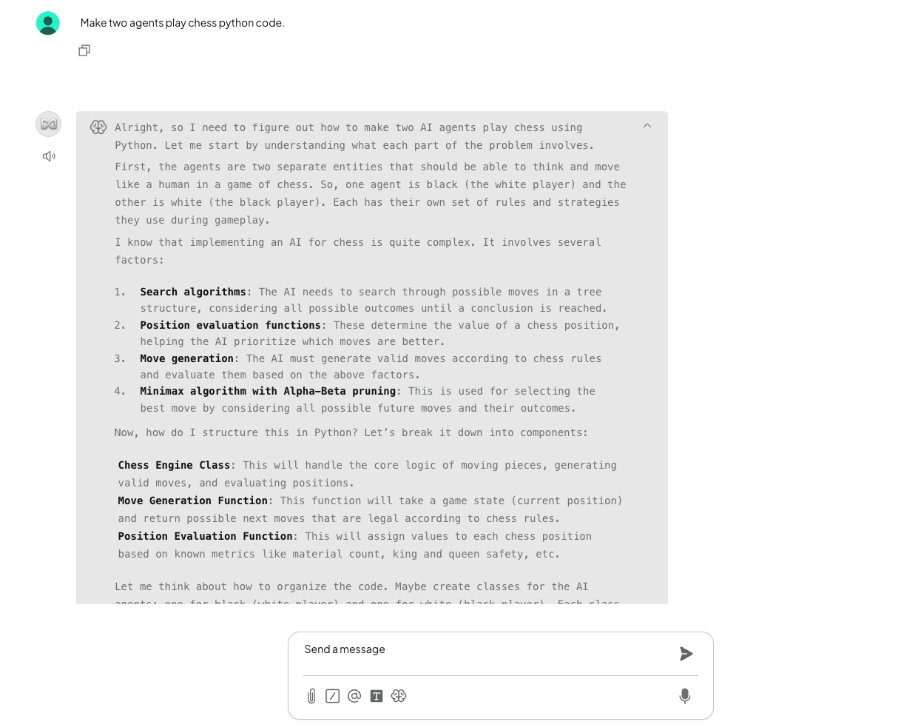

I attempted AnythingLLM primarily as a result of I wished my native mannequin to do extra than simply chat. It connects your LLM (like Ollama) to actual stuff: PDFs, notes, docs and lets it reply questions utilizing your personal knowledge.

Setup was easy, and the perfect half? Every thing stays native. Embeddings, retrieval, context and all of it occurs in your machine.

And yeah, I did my normal WiFi check, turned it off mid-query simply to make sure. Nonetheless labored, no secret calls, no drama.

It’s not good, however it’s the primary time my native mannequin really felt helpful as a substitute of simply talkative.

Let’s set it up from its official web site:

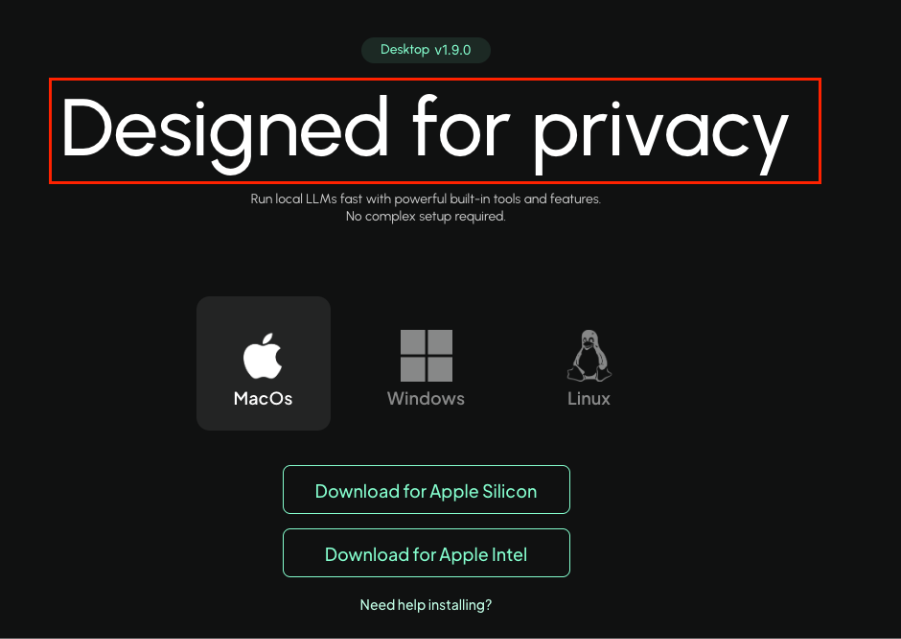

Let’s go to the obtain web page, it’s out there for Linux/Home windows/Mac. Discover how express and clear they’re about their promise to keep up privateness proper off the bat:

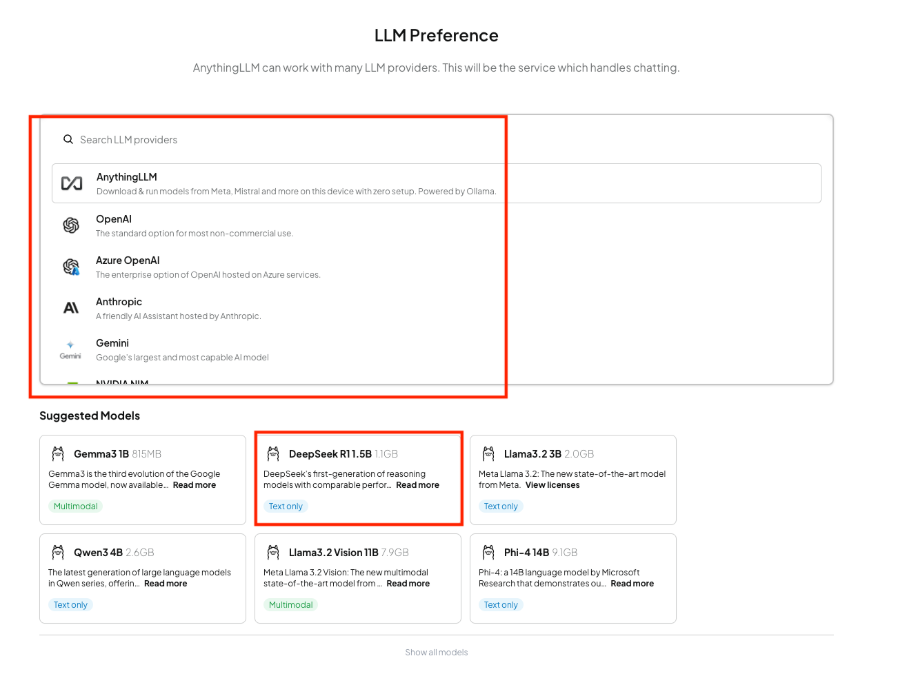

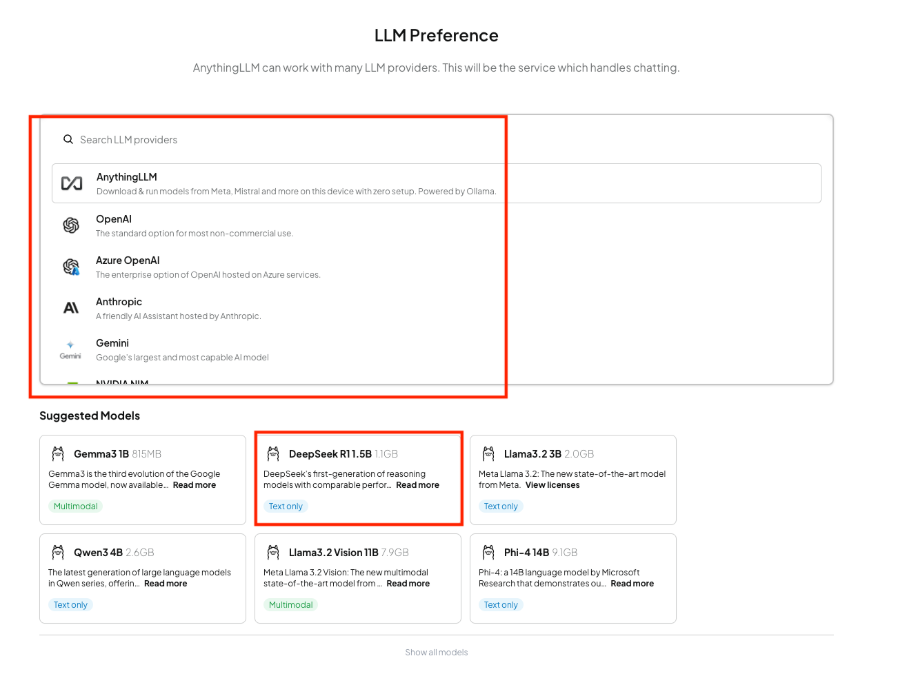

As soon as arrange, you may select your mannequin supplier and your mannequin.

There are all types of fashions out there, from Google’s Gemma to Qwen, Phi, DeepSeek and what not. And for suppliers, you will have choices equivalent to AnythingLLM, OpenAI, Anthropic, Gemini, Nvidia and the record goes on!

Listed here are the privateness settings:

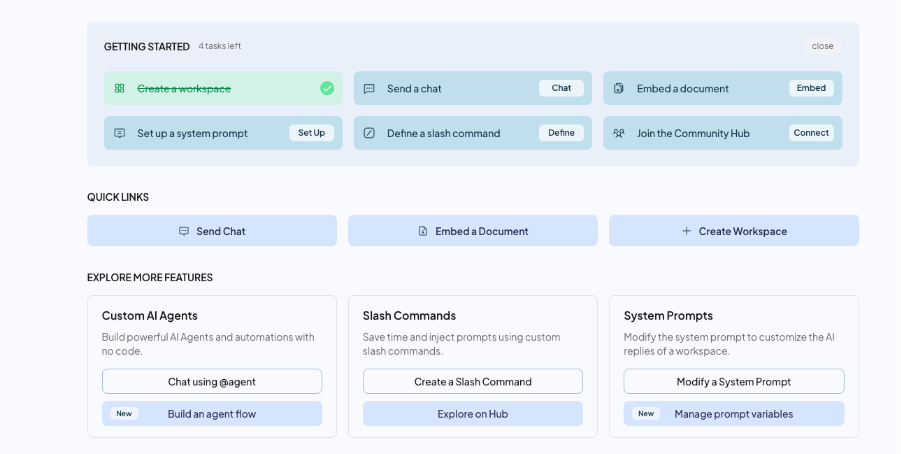

One good thing is that this instrument is just not solely restricted to solely chat, however you are able to do different helpful stuff equivalent to make Brokers, RAG, and what not.

And right here is how the chat interface appears like:

What I appreciated?

- Works completely with Ollama: full native setup, no cloud stuff hiding anyplace.

- Let’s you join actual knowledge (PDFs, notes, and so forth.) so the mannequin really is aware of one thing helpful.

- Easy to make use of, clear interface, and doesn’t want a PhD in devops to run.

- Handed my WiFi-off check with flying colors by being completely offline and completely personal.

Full Information: What’s AnythingLLM and Find out how to Use it?

Honorable Mentions: llama.cpp, OpenWeb UI

A fast shoutout to a few different instruments that deserve some love:

- llama.cpp: the actual OG behind most of those native setups. It’s not flashy, however it’s ridiculously environment friendly. If Ollama is the polished wrapper, llama.cpp is the uncooked muscle doing the heavy lifting beneath. You may run it straight from the terminal, tweak each parameter, and even compile it to your particular {hardware}. Pure management.

- Open WebUI: consider it as a wonderful, browser-based layer to your native fashions. It really works with Ollama and others, provides you a clear chat interface, reminiscence, and multi-user assist. Form of like internet hosting your personal personal ChatGPT, however with none of your knowledge leaving the machine.

Each aren’t precisely beginner-friendly, however when you like tinkering, they’re completely price exploring.

Additionally Learn: 5 Methods to Run LLMs Regionally on a Laptop

Privateness, Safety, and the Larger Image

Now, the entire level of working these regionally is privateness.

Once you use cloud LLMs, your knowledge is processed elsewhere. Even when the corporate guarantees to not retailer it, you’re nonetheless trusting them.

With native fashions, that equation flips. Every thing stays in your machine. You may audit logs, sandbox it, even block community entry totally.

That’s enormous for individuals in regulated industries, or simply for anybody who values private privateness.

And it’s not simply paranoia, it’s about sovereignty. Proudly owning your mannequin weights, your knowledge, your compute; that’s highly effective.

Ultimate Ideas

I attempted just a few instruments for working LLMs regionally, and truthfully, every one has its personal vibe. Some really feel like engines, some like dashboards, and a few like private assistants.

Right here’s a fast snapshot of what I seen:

| Device | Finest For | Privateness / Offline | Ease of Use | Particular Edge |

| Ollama | Fast setup, prototyping | Very sturdy, totally native when you toggle Airplane mode | Tremendous simple, CLI + non-compulsory GUI | Light-weight, environment friendly, API-ready |

| LM Studio | Exploring, experimenting, multi-model UI | Sturdy, principally offline | Reasonable, GUI-heavy | Stunning interface, sliders, multi-tab chat |

| AnythingLLM | Utilizing your personal paperwork, context-aware chat | Sturdy, offline embeddings | Medium, wants backend setup | Connects LLM to PDFs, notes, data bases |

Working LLMs regionally is now not a nerdy experiment, it’s sensible, personal, and surprisingly enjoyable.

Ollama seems like a workhorse, LM Studio is a playground, and AnythingLLM really makes the AI helpful with your personal information. Honorable mentions like llama.cpp or Open WebUI fill the gaps for tinkerers and energy customers.

For me, it’s about mixing and matching: velocity, experimentation, and usefulness; all whereas holding all the pieces by myself laptop computer.

That’s the magic of native AI in 2025: management, privateness, and the bizarre satisfaction of watching a mannequin assume…in your personal machine.

Login to proceed studying and revel in expert-curated content material.