Large language models (LLMs) are now more accessible than ever. Google’s Gemini API offers a powerful and versatile tool for developers and creators. This guide explores the practical applications you can build with the free Gemini API. We will walk through several hands-on examples. You’ll learn key Gemini API prompting techniques, from simple queries to complex tasks. We will cover zero-shot and few-shot prompting, and you will also learn how to perform tasks such as basic code generation with Gemini, making your workflow more efficient.

How to Get Your Free Gemini API Key

Before we begin, you need to set up your environment. The process is simple and takes only a few minutes. You will need a Google AI Studio API key to start.

First, install the required Python library. This package lets you communicate with the Gemini API.

!pip install -U -q "google-genai>=1.0.0"

Next, configure your API key. You can get a free key from Google AI Studio. Store it securely and use the following code to initialize the client. This setup is the foundation for all the examples that follow.

from google import genai
from IPython.display import Markdown, display  # used to render responses in a notebook

# Make sure to keep your API key secure,
# for example by using userdata in Colab:
# from google.colab import userdata
# GOOGLE_API_KEY = userdata.get('GOOGLE_API_KEY')

client = genai.Client(api_key="YOUR_API_KEY")
MODEL_ID = "gemini-1.5-flash"

Part 1: Foundational Prompting Techniques

Let’s start with two fundamental Gemini API prompting techniques. These methods form the basis for more advanced interactions.

Technique 1: Zero-Shot Prompting for Quick Answers

Zero-shot prompting is the simplest way to interact with an LLM. You ask a question directly without providing any examples. This method works well for simple tasks where the model’s existing knowledge is sufficient. For effective zero-shot prompting, clarity is key.

Let’s use it to classify the sentiment of a customer review.

Prompt:

prompt = """
Classify the sentiment of the following review as positive, negative, or neutral:
Review: "I go to this restaurant every week, I love it so much."
"""

response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
)

print(response.text)

This simple approach gets the job done quickly. It is one of the most common things you can do with Gemini’s free API.
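If you classify many reviews, it can help to build the zero-shot prompt from a small function rather than editing the string by hand. The sketch below is only an illustration: `join_labels` and `build_zero_shot_prompt` are hypothetical helper names, not part of the Gemini SDK, and the wording of the instruction simply mirrors the prompt above.

```python
def join_labels(labels: list[str]) -> str:
    """Join labels in natural English: 'a', 'a or b', 'a, b, or c'."""
    if not labels:
        raise ValueError("at least one label is required")
    if len(labels) == 1:
        return labels[0]
    if len(labels) == 2:
        return f"{labels[0]} or {labels[1]}"
    return ", ".join(labels[:-1]) + f", or {labels[-1]}"


def build_zero_shot_prompt(review: str, labels: list[str]) -> str:
    """Return an instruction plus the input text, with no worked examples."""
    return (
        f"Classify the sentiment of the following review as {join_labels(labels)}:\n"
        f'Review: "{review}"\n'
    )


# Hypothetical usage: the resulting string is what you would pass as `contents`.
prompt = build_zero_shot_prompt(
    "I go to this restaurant every week, I love it so much.",
    ["positive", "negative", "neutral"],
)
print(prompt)
```

Keeping the label list in one place makes the instruction explicit, which is the main thing zero-shot prompting depends on.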

Technique 2: Few-Shot Prompting for Custom Formats

Sometimes you need the output in a specific format. Few-shot prompting guides the model by providing a few examples of input and expected output. This technique helps the model understand your requirements precisely.

Here, we’ll extract cities and their countries into JSON format.

Prompt:

from google.genai import types

prompt = """
Extract cities from the text and include the country they are in.
USER: I visited Mexico City and Poznan last year
MODEL: {"Mexico City": "Mexico", "Poznan": "Poland"}
USER: She wanted to visit Lviv, Monaco and Maputo
MODEL: {"Lviv": "Ukraine", "Monaco": "Monaco", "Maputo": "Mozambique"}
USER: I am currently in Austin, but I will be moving to Lisbon soon
MODEL:
"""

# We also specify that the response should be JSON
config = types.GenerateContentConfig(response_mime_type="application/json")

response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
    config=config,
)

display(Markdown(f"```json\n{response.text}\n```"))

By providing examples, you teach the model the exact structure you want. This is a powerful step up from basic zero-shot prompting.
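When the examples live in your own data, you can assemble the few-shot prompt programmatically and validate the reply as JSON before using it. This is a minimal sketch under stated assumptions: `build_few_shot_prompt` and `parse_json_object` are hypothetical helpers, and the sample reply string is hand-written to stand in for a model response.

```python
import json


def build_few_shot_prompt(instruction, examples, query):
    """Lay out (input, expected output) pairs as USER/MODEL turns, then the new input."""
    lines = [instruction]
    for user_text, expected in examples:
        lines.append(f"USER: {user_text}")
        lines.append(f"MODEL: {json.dumps(expected)}")
    lines.append(f"USER: {query}")
    lines.append("MODEL:")  # left open for the model to complete
    return "\n".join(lines)


def parse_json_object(raw):
    """Parse a reply and insist that it is a JSON object (a dict)."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data


examples = [
    ("I visited Mexico City and Poznan last year",
     {"Mexico City": "Mexico", "Poznan": "Poland"}),
]
prompt = build_few_shot_prompt(
    "Extract cities from the text and include the country they are in.",
    examples,
    "I am currently in Austin, but I will be moving to Lisbon soon",
)
print(prompt)

# A hand-written stand-in for a model reply, used only to exercise the parser.
sample_reply = '{"Austin": "USA", "Lisbon": "Portugal"}'
print(parse_json_object(sample_reply))
```

Parsing the reply right away surfaces malformed output early instead of letting it propagate through your application.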

Part 2: Guiding the Model’s Behavior and Knowledge

You can control the model’s persona and provide it with specific knowledge. These Gemini API prompting techniques make your interactions more targeted.

Technique 3: Role Prompting to Define a Persona

You can assign a role to the model to influence its tone and style. This makes the response feel more authentic and tailored to a specific context.

Let’s ask for museum recommendations from the perspective of a German tour guide.

Prompt:

from google.genai import types

system_instruction = """
You are a German tour guide. Your task is to give recommendations
to people visiting your country.
"""

prompt = "Could you give me some recommendations on art museums in Berlin and Cologne?"

# The system instruction sets the persona for this request
response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
    config=types.GenerateContentConfig(system_instruction=system_instruction),
)

display(Markdown(response.text))

The model adopts the persona, making the response more engaging and helpful.

Technique 4: Adding Context to Answer Niche Questions

LLMs don’t know everything. You can provide specific information in the prompt to help the model answer questions about new or private data. This is a core concept behind Retrieval-Augmented Generation (RAG).

Here, we give the model a table of Olympic athletes to answer a specific query.

Prompt:

prompt = """
QUERY: Provide a list of athletes that competed in the Olympics exactly 9 times.
CONTEXT:
Table title: Olympic athletes and number of times they've competed
Ian Millar, 10
Hubert Raudaschl, 9
Afanasijs Kuzmins, 9
Nino Salukvadze, 9
Piero d'Inzeo, 8
"""

response = client.models.generate_content(model=MODEL_ID, contents=prompt)

display(Markdown(response.text))

The model draws on the provided context to give an accurate answer.
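Because the context here is small, structured data, you can build the prompt from the rows and also compute the expected answer locally to compare against the model’s reply. The sketch below assumes the table is held as a list of `(name, count)` tuples; `build_context_prompt` and `athletes_with_count` are hypothetical helper names.

```python
def build_context_prompt(query, table_title, rows):
    """Place the question first, then the supporting rows under a CONTEXT label."""
    context_lines = [f"{name}, {count}" for name, count in rows]
    return "\n".join(
        [f"QUERY: {query}", "CONTEXT:", f"Table title: {table_title}", *context_lines]
    )


def athletes_with_count(rows, target):
    """Local ground truth: names whose count equals the target."""
    return [name for name, count in rows if count == target]


rows = [
    ("Ian Millar", 10),
    ("Hubert Raudaschl", 9),
    ("Afanasijs Kuzmins", 9),
    ("Nino Salukvadze", 9),
    ("Piero d'Inzeo", 8),
]

prompt = build_context_prompt(
    "Provide a list of athletes that competed in the Olympics exactly 9 times.",
    "Olympic athletes and number of times they've competed",
    rows,
)
expected = athletes_with_count(rows, 9)
print(expected)  # ['Hubert Raudaschl', 'Afanasijs Kuzmins', 'Nino Salukvadze']
```

A check like this is only possible when the context is machine-readable, but it is a cheap way to spot replies that stray from the supplied data.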

Technique 5: Providing Base Cases for Clear Boundaries

It is important to define how a model should behave when it cannot fulfill a request. Providing base cases or default responses prevents unexpected or off-topic answers.

Let’s create a vacation assistant with a limited set of responsibilities.

Prompt:

from google.genai import types

system_instruction = """
You are an assistant that helps tourists plan their vacation. Your responsibilities are:
1. Helping book the hotel.
2. Recommending restaurants.
3. Warning about potential dangers.
If another request is asked, return "I cannot help with this request."
"""

rules_config = types.GenerateContentConfig(system_instruction=system_instruction)

# On-topic request
response_on_topic = client.models.generate_content(
    model=MODEL_ID,
    contents="What should I look out for on the beaches in San Diego?",
    config=rules_config,
)
print("--- ON-TOPIC REQUEST ---")
display(Markdown(response_on_topic.text))

# Off-topic request
response_off_topic = client.models.generate_content(
    model=MODEL_ID,
    contents="What bowling places do you recommend in Moscow?",
    config=rules_config,
)
print("\n--- OFF-TOPIC REQUEST ---")
display(Markdown(response_off_topic.text))
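A fixed default response is also easy to detect in application code, so you can route refused requests elsewhere. The following is a rough sketch, not a guaranteed contract: `is_fallback` is a hypothetical helper, and it assumes the model returns the default sentence more or less verbatim, which is likely but not certain.

```python
def is_fallback(response_text: str, fallback: str) -> bool:
    """True when the reply is the default response, ignoring whitespace, quotes and case."""
    normalized = response_text.strip().strip('"').strip().casefold()
    return normalized == fallback.strip().casefold()


# Must match the default sentence written in your system instruction.
FALLBACK = "I cannot help with this request."

print(is_fallback(' "I cannot help with this request."\n', FALLBACK))  # True
print(is_fallback("Watch for rip currents and check the flags.", FALLBACK))  # False
```

An exact-match check keeps the logic simple; if the model paraphrases the refusal, the function returns False and the reply is treated as a normal answer.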

Part 3: Unlocking Advanced Reasoning

For complex problems, you need to guide the model’s thinking process. These reasoning techniques improve accuracy for multi-step tasks.

Technique 6: Basic Reasoning for Step-by-Step Solutions

You can instruct the model to break down a problem and explain its steps. This is useful for mathematical or logical problems where the process is as important as the answer.

Here, we ask the model to solve for the area of a triangle and show its work.

Prompt:

from google.genai import types

system_instruction = """
You are a teacher solving mathematical problems. Your task:
1. Summarize given conditions.
2. Identify the problem.
3. Provide a clear, step-by-step solution.
4. Provide an explanation for each step.
"""

math_problem = "Given a triangle with base b=6 and height h=8, calculate its area."

response = client.models.generate_content(
    model=MODEL_ID,
    contents=math_problem,
    config=types.GenerateContentConfig(system_instruction=system_instruction),
)

display(Markdown(response.text))

Output:

This structured output is evident and straightforward to observe.

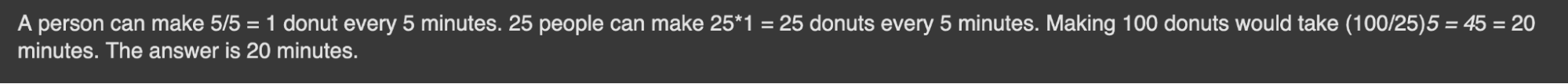
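For a problem this small, it is worth checking the model’s final number yourself. The snippet below is an independent check using the standard formula, area = ½ × base × height; `triangle_area` is just an illustrative helper name.

```python
def triangle_area(base: float, height: float) -> float:
    """Area of a triangle from its base and the height perpendicular to that base."""
    return 0.5 * base * height


# The values from the prompt: b = 6, h = 8.
print(triangle_area(6, 8))  # 24.0
```

If the model’s step-by-step solution ends anywhere other than 24, one of its intermediate steps is wrong.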

Technique 7: Chain-of-Thought for Complex Problems

Chain-of-thought (CoT) prompting encourages the model to think step by step. Research from Google shows that this significantly improves performance on complex reasoning tasks. Instead of just giving the final answer, the model explains its reasoning path.

Let’s solve a logic puzzle using CoT.

Prompt:

prompt = """
Question: 11 factories can make 22 cars per hour. How much time would it take 22 factories to make 88 cars?
Answer: A factory can make 22/11=2 cars per hour. 22 factories can make 22*2=44 cars per hour. Making 88 cars would take 88/44=2 hours. The answer is 2 hours.
Question: 5 people can create 5 donuts every 5 minutes. How much time would it take 25 people to make 100 donuts?
Answer:
"""

response = client.models.generate_content(model=MODEL_ID, contents=prompt)

display(Markdown(response.text))

Output:

The model follows the pattern, breaking the problem down into logical steps.

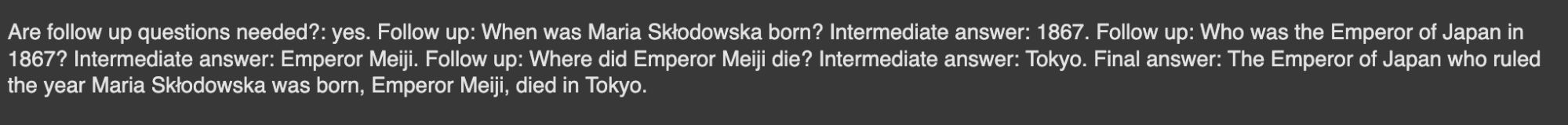
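Both puzzles follow the same rate arithmetic, so you can verify the reasoning chain with a few lines of code. This is a small sketch for checking the answer, with `time_to_produce` as a hypothetical helper name; it uses exact fractions to avoid rounding.

```python
from fractions import Fraction


def time_to_produce(workers, items, ref_workers, ref_items, ref_time):
    """Time for `workers` to make `items`, given that `ref_workers` make `ref_items` in `ref_time`."""
    # Step 1: output of a single worker per unit of time.
    rate_per_worker = Fraction(ref_items, ref_workers * ref_time)
    # Step 2: combined output of all workers per unit of time.
    total_rate = rate_per_worker * workers
    # Step 3: time needed at that combined rate.
    return Fraction(items) / total_rate


# Worked example from the prompt: 11 factories make 22 cars per hour.
print(time_to_produce(22, 88, ref_workers=11, ref_items=22, ref_time=1))  # 2 (hours)

# The open question: 5 people make 5 donuts every 5 minutes.
print(time_to_produce(25, 100, ref_workers=5, ref_items=5, ref_time=5))  # 20 (minutes)
```

Each person makes one donut every 5 minutes, so 25 people make 25 donuts per 5 minutes and need 20 minutes for 100 donuts.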

Technique 8: Self-Ask Prompting to Deconstruct Questions

Self-ask prompting is similar to CoT. The model breaks down a main question into smaller follow-up questions. It answers each sub-question before arriving at the final answer.

Let’s use this to solve a multi-part historical question.

Prompt:

prompt = """
Question: Who was the president of the United States when Mozart died?
Are follow up questions needed?: yes.
Follow up: When did Mozart die?
Intermediate answer: 1791.
Follow up: Who was the president of the United States in 1791?
Intermediate answer: George Washington.
Final answer: When Mozart died George Washington was the president of the USA.

Question: Where did the Emperor of Japan, who ruled the year Maria Skłodowska was born, die?
"""

response = client.models.generate_content(model=MODEL_ID, contents=prompt)

display(Markdown(response.text))

This structured thinking process helps improve accuracy on complex queries.
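Self-ask replies contain the intermediate steps as well as the conclusion, so an application usually wants to pull out only the last line. Here is a minimal sketch, assuming the model keeps a `Final answer:` label like the worked example in the prompt; `extract_final_answer` is a hypothetical helper, and the label is a parameter in case your prompt uses different wording.

```python
import re


def extract_final_answer(text: str, label: str = "Final answer"):
    """Return the text after the last '<label>:' line, or None if no such line exists."""
    pattern = re.compile(
        rf"^{re.escape(label)}:\s*(.+)$", flags=re.MULTILINE | re.IGNORECASE
    )
    matches = pattern.findall(text)
    return matches[-1].strip() if matches else None


# The worked example from the prompt, used here as sample input.
sample = """Follow up: When did Mozart die?
Intermediate answer: 1791.
Follow up: Who was the president of the United States in 1791?
Intermediate answer: George Washington.
Final answer: When Mozart died George Washington was the president of the USA."""

print(extract_final_answer(sample))
```

Returning None when the label is missing lets the caller decide whether to retry or to show the full reply.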

Part 4: Real-World Applications with Gemini

Now let’s see how these techniques apply to common real-world tasks. These examples show the wide variety of things you can do with Gemini’s free API.

Technique 9: Classifying Text for Moderation and Analysis

You can use Gemini to automatically classify text. This is useful for tasks like content moderation, where you need to identify spam or abusive comments. Few-shot prompting helps the model learn your specific categories.

Prompt:

classification_template = """
Topic: Where can I buy a cheap phone?
Comment: You have just won an iPhone 15 Pro Max!!! Click the link!!!
Category: Spam

Topic: How long do you boil eggs?
Comment: Are you stupid?
Category: Offensive

Topic: {topic}
Comment: {comment}
Category:
"""

spam_topic = "I am looking for a vet in our neighbourhood."
spam_comment = "You can win 1000$ by just following me!"
spam_prompt = classification_template.format(topic=spam_topic, comment=spam_comment)

response = client.models.generate_content(model=MODEL_ID, contents=spam_prompt)

display(Markdown(response.text))

Output:

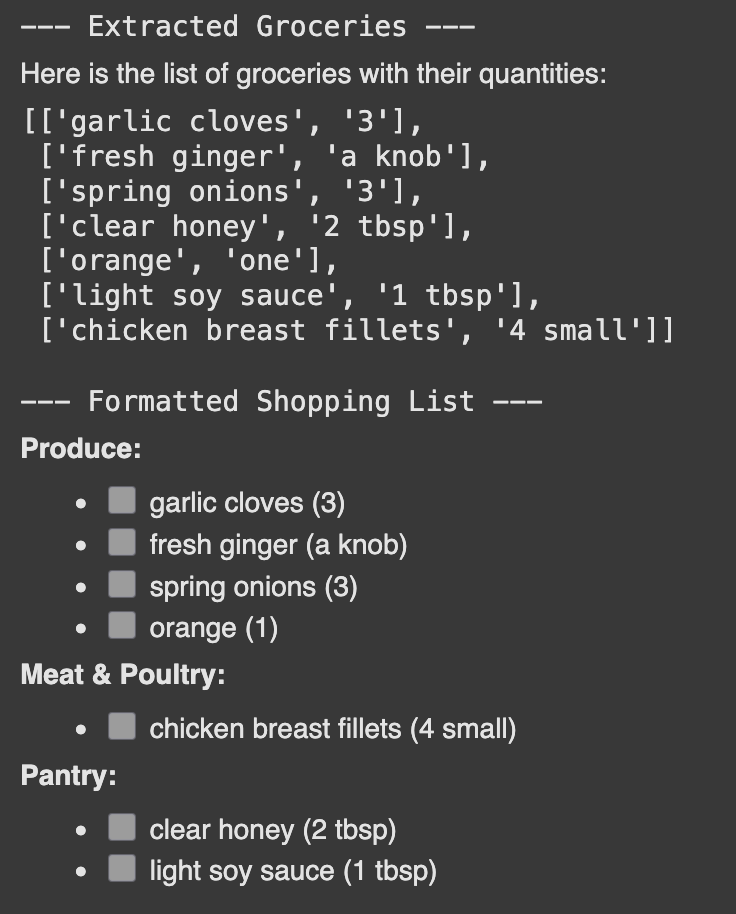
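In a moderation pipeline, it is safer to map the model’s reply onto a fixed set of labels than to trust the raw text. The sketch below shows one way to do that. `normalize_category` is a hypothetical helper, and the `Neutral` label and the `Unknown` default are assumptions added for illustration; the prompt above only demonstrates `Spam` and `Offensive`.

```python
ALLOWED_CATEGORIES = ("Spam", "Offensive", "Neutral")  # "Neutral" is an assumed extra label


def normalize_category(raw: str, allowed=ALLOWED_CATEGORIES, default: str = "Unknown") -> str:
    """Map a raw reply onto one of the allowed labels, or fall back to a default."""
    cleaned = raw.strip().strip(".").casefold()
    for category in allowed:
        if cleaned == category.casefold():
            return category
    return default


print(normalize_category(" spam\n"))              # Spam
print(normalize_category("Offensive."))           # Offensive
print(normalize_category("I think it is fine"))   # Unknown
```

Anything that lands in the default bucket can be logged or sent for manual review rather than silently accepted.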

Technique 10: Extracting Information from Unstructured Text

Gemini can extract specific pieces of information from unstructured text. This helps you convert plain text, like a recipe, into a structured format, like a shopping list.

Prompt:

from google.genai import types

recipe = """
Grind 3 garlic cloves, a knob of fresh ginger, and 3 spring onions to a paste.
Add 2 tbsp of clear honey, juice from one orange, and 1 tbsp of light soy sauce.
Pour the mixture over 4 small chicken breast fillets.
"""

# Step 1: Extract the list of groceries
extraction_prompt = f"""
Your task is to extract to a list all the groceries with their quantities based on the provided recipe.
Make sure that groceries are in the order of appearance.
Recipe:{recipe}
"""

extraction_response = client.models.generate_content(
    model=MODEL_ID,
    contents=extraction_prompt,
)
grocery_list = extraction_response.text

print("--- Extracted Groceries ---")
display(Markdown(grocery_list))

# Step 2: Format the extracted list into a shopping list
formatting_system_instruction = "Organize groceries into categories for easier shopping. List each item with a checkbox []."

formatting_prompt = f"""
LIST: {grocery_list}
OUTPUT:
"""

formatting_response = client.models.generate_content(
    model=MODEL_ID,
    contents=formatting_prompt,
    config=types.GenerateContentConfig(system_instruction=formatting_system_instruction),
)

print("\n--- Formatted Shopping List ---")
display(Markdown(formatting_response.text))

Output:

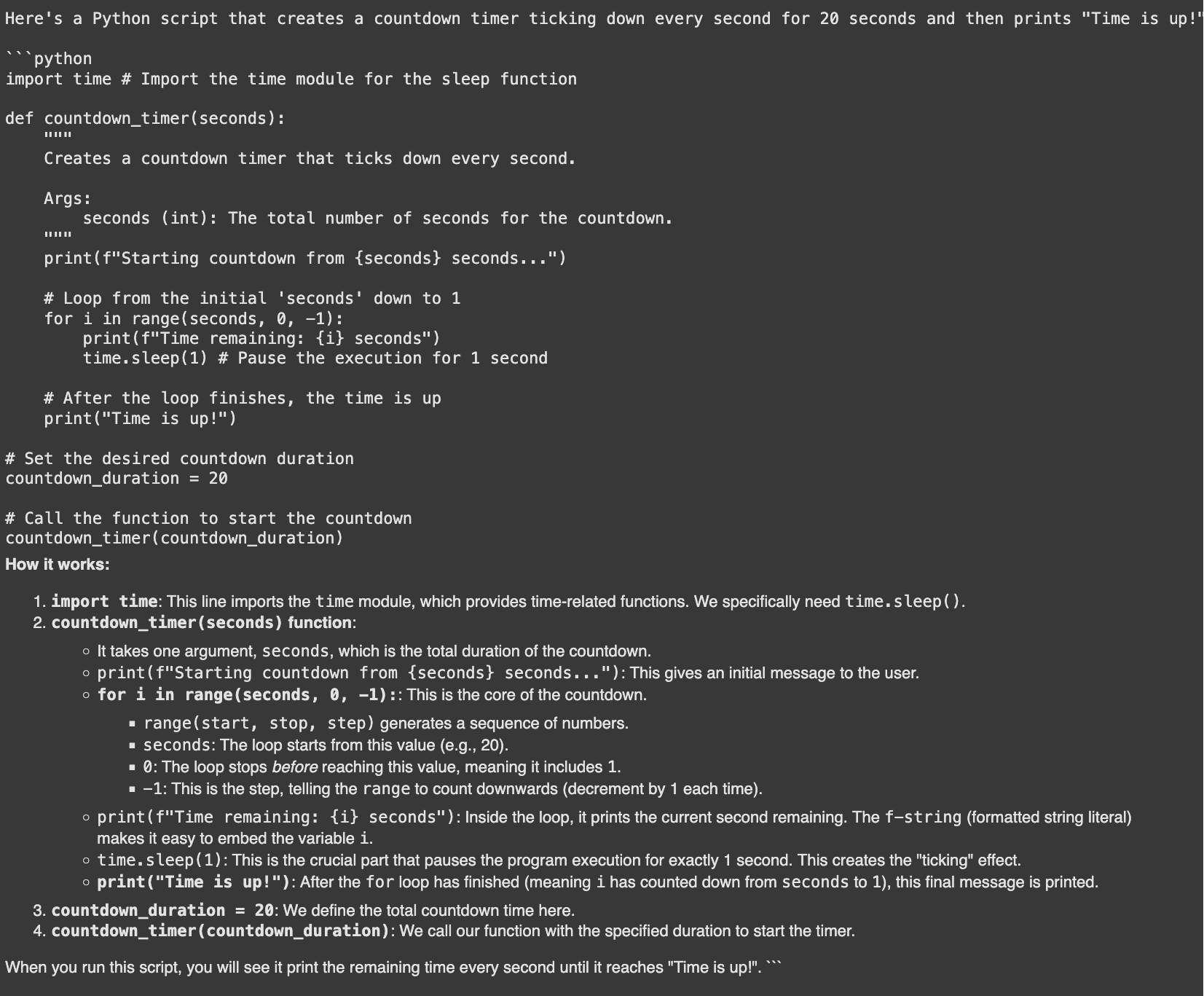
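The example above is a two-step chain: the output of the extraction call becomes the input of the formatting call. One way to keep such a chain testable is to pass the model call in as a function. The sketch below assumes a hypothetical `generate(system_instruction, prompt)` callable that returns text; `make_shopping_list` and `fake_generate` are illustrative names, and the fake stands in for the real API so the flow can be checked offline.

```python
from typing import Callable


def make_shopping_list(recipe: str, generate: Callable[[str, str], str]) -> str:
    """Chain two calls: extract groceries first, then format them as a shopping list."""
    # Step 1: extraction (no system instruction needed).
    extraction_prompt = (
        "Your task is to extract to a list all the groceries with their quantities "
        "based on the provided recipe.\n"
        f"Recipe:{recipe}"
    )
    grocery_list = generate("", extraction_prompt)

    # Step 2: formatting, fed with the output of step 1.
    formatting_instruction = (
        "Organize groceries into categories for easier shopping. "
        "List each item with a checkbox []."
    )
    formatting_prompt = f"LIST: {grocery_list}\nOUTPUT:"
    return generate(formatting_instruction, formatting_prompt)


# A fake model call that records its inputs, so the chain can be tested without the API.
calls = []


def fake_generate(system_instruction: str, prompt: str) -> str:
    calls.append((system_instruction, prompt))
    return f"out{len(calls)}"


result = make_shopping_list("Add 2 tbsp of clear honey.", fake_generate)
print(result)  # out2
```

In a notebook, `generate` could be a thin wrapper around `client.models.generate_content` that passes the system instruction through `types.GenerateContentConfig` and returns `response.text`.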

Technique 11: Basic Code Generation and Debugging

A powerful application is basic code generation with Gemini. It can write code snippets, explain errors, and suggest fixes. GitHub research found that developers using AI coding tools can be up to 55% faster.

Let’s ask Gemini to generate a Python script for a countdown timer.

Prompt:

code_generation_prompt = """
Create a countdown timer in Python that ticks down every second and prints
"Time is up!" after 20 seconds.
"""

response = client.models.generate_content(model=MODEL_ID, contents=code_generation_prompt)

display(Markdown(f"```python\n{response.text}\n```"))

Output:

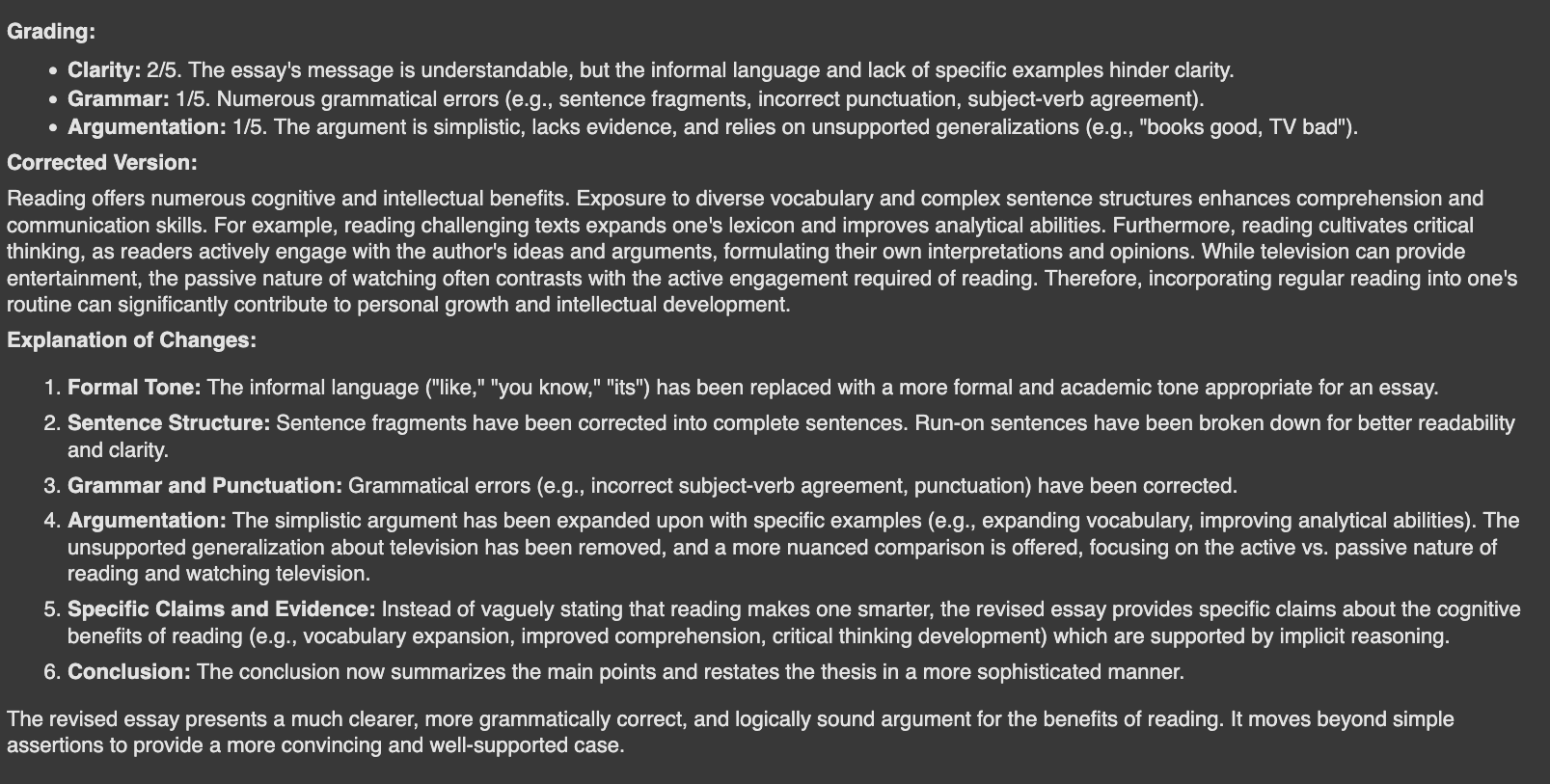
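Generated code often arrives wrapped in a Markdown fence with explanatory text around it. Before saving or running it, you may want to pull out just the code and confirm that it at least parses. This is a minimal sketch with hypothetical helpers `extract_code` and `is_valid_python`; a syntax check does not prove the code is correct or safe, so still review it before executing.

```python
import ast
import re

FENCE = "`" * 3  # three backticks, built this way so the example embeds cleanly


def extract_code(text: str) -> str:
    """Return the contents of the first fenced block, or the whole text if none is found."""
    pattern = re.compile(FENCE + r"[\w+-]*\n(.*?)" + FENCE, flags=re.DOTALL)
    match = pattern.search(text)
    return (match.group(1) if match else text).strip()


def is_valid_python(code: str) -> bool:
    """Check that the code parses; nothing is executed."""
    try:
        ast.parse(code)
        return True
    except SyntaxError:
        return False


# A hand-written stand-in for a model reply.
sample = "Here you go:\n" + FENCE + "python\nprint('hi')\n" + FENCE + "\nEnjoy!"
code = extract_code(sample)
print(code)                   # print('hi')
print(is_valid_python(code))  # True
```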

Technique 12: Evaluating Text for Quality Assurance

You can even use Gemini to evaluate text. This is useful for grading, providing feedback, or ensuring content quality. Here, we’ll have the model act as a teacher and grade a poorly written essay.

Prompt:

from google.genai import types

teacher_system_instruction = """
As a teacher, you are tasked with grading a student's essay.
1. Evaluate the essay on a scale of 1-5 for clarity, grammar, and argumentation.
2. Write a corrected version of the essay.
3. Explain the changes made.
"""

# The essay is intentionally poorly written so the model has something to correct
essay = "Reading is like, a really good thing. It’s useful, you know? Like, a lot. When you read, you learn new words and that's good. Its like a secret code to being smart. I read a book last week and I know it was making me smarter. Therefore, books good, TV bad. So everyone should read more."

response = client.models.generate_content(
    model=MODEL_ID,
    contents=essay,
    config=types.GenerateContentConfig(system_instruction=teacher_system_instruction),
)

display(Markdown(response.text))

Conclusion

The Gemini API is a flexible and powerful resource. We’ve explored a wide range of things you can do with the free Gemini API, from simple queries to advanced reasoning and code generation. By mastering these Gemini API prompting techniques, you can build smarter, more efficient applications. The key is to experiment. Use these examples as a starting point for your own projects and discover what you can create.

Frequently Asked Questions

A. You can perform text classification, information extraction, and summarization. It is also excellent for tasks like basic code generation and complex reasoning.

A. Zero-shot prompting involves asking a question directly without examples. Few-shot prompting provides the model with a few examples to guide its response format and style.

A. Yes, Gemini can generate code snippets, explain programming concepts, and debug errors. This helps accelerate the development process for many programmers.

A. Assigning a role gives the model a specific persona and context. This results in responses that have a more appropriate tone, style, and domain knowledge.

A. Chain-of-thought prompting is a technique in which the model breaks down a problem into smaller, sequential steps. This helps improve accuracy on complex tasks that require reasoning.
