Giant Language Fashions (LLMs) are the center of Agentic programs and RAG programs. And constructing with LLMs is thrilling till the size makes them costly. There may be all the time a tradeoff for value vs high quality, however on this article we’ll discover the ten greatest methods in accordance with me that may slash prices for the LLM utilization whereas specializing in sustaining the standard of the system. Additionally notice I’ll be utilizing OpenAI API for the inference however the methods may very well be utilized to different mannequin suppliers as properly. So with none additional ado let’s perceive the associated fee equation and see methods of LLM value optimization.

Prerequisite: Understanding the Price Equation

Earlier than we begin, it’s higher we get higher versed with about prices, tokens and context window:

- Tokens: These are the small models of the textual content. For all sensible functions you possibly can assume 1,000 tokens is roughly 750 phrases.

- Immediate Tokens: These are the enter tokens that we ship to the mannequin. They’re typically cheaper.

- Completion Tokens: These are the tokens generated by the mannequin. They’re usually 3-4 occasions costlier than enter tokens.

- Context Window: This is sort of a short-term reminiscence (It might probably embrace the previous Inputs + Outputs). When you exceed this restrict, the mannequin leaves out the sooner elements of the dialog. When you ship 10 earlier messages within the context window, then these depend as Enter Tokens for the present request and can add to the prices.

- Complete Price: (Enter Tokens x Per Enter Token Price) + (Output Tokens x Per Output Token Price)

Notice: For OpenAI you should utilize the billing dashboard to trace prices: https://platform.openai.com/settings/group/billing/overview

To learn to get the OpenAI API learn this article.

1. Route Requests to the Proper Mannequin

Not each job requires the very best, state-of-the-art mannequin, you possibly can experiment with a less expensive mannequin or attempt utilizing a few-shot prompting with a less expensive mannequin to duplicate a much bigger mannequin.

Configure the API key

from google.colab import userdata

import os

os.environ['OPENAI_API_KEY']=userdata.get('OPENAI_API_KEY') Outline the features

from openai import OpenAI

shopper = OpenAI()

SYSTEM_PROMPT = "You're a concise, useful assistant. You reply in 25-30 phrases"

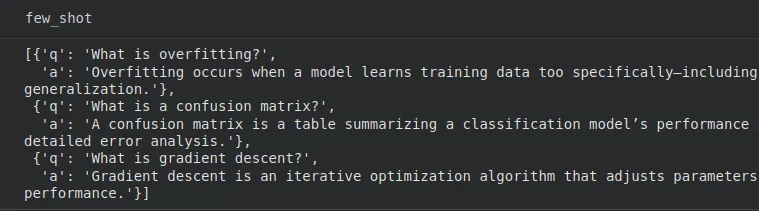

def generate_examples(questions, n=3):

examples = []

for q in questions[:n]:

response = shopper.chat.completions.create(

mannequin="gpt-5.1",

messages=[{"role": "system", "content": SYSTEM_PROMPT},

{"role": "user", "content": q}]

)

examples.append({"q": q, "a": response.decisions[0].message.content material})

return examplesThis operate makes use of the bigger GPT-5.1 and solutions the query in 25-30 phrases.

# Instance utilization

questions = [

"What is overfitting?",

"What is a confusion matrix?",

"What is gradient descent?"

]

few_shot = generate_examples(questions, n=3)

Nice, we received our question-answer pairs.

def build_prompt(examples, query):

immediate = ""

for ex in examples:

immediate += f"Q: {ex['q']}nA: {ex['a']}nn"

return immediate + f"Q: {query}nA:"

def ask_small_model(examples, query):

immediate = build_prompt(examples, query)

response = shopper.chat.completions.create(

mannequin="gpt-5-nano",

messages=[{"role": "system", "content": SYSTEM_PROMPT},

{"role": "user", "content": prompt}]

)

return response.decisions[0].message.content materialRight here, we’ve got a operate that makes use of smaller ‘gpt-5-nano’ and one other operate that makes the immediate utilizing the question-answer pairs for the mannequin.

reply = ask_small_model(few_shot, "Clarify regularization in ML.")

print(reply)Let’s go a query to the mannequin.

Output:

Regularization provides a penalty to the loss for mannequin complexity to scale back overfitting. Widespread varieties embrace L1 (lasso) selling sparsity and L2 (ridge) shrinking weights; elastic internet blends.

Nice! We’ve used a less expensive mannequin (gpt-5-nano) to get our output, however certainly we are able to’t use the cheaper mannequin for each job.

2. Use Fashions in accordance with the duty

The concept right here is to make use of a smaller mannequin for routine duties, and utilizing the bigger fashions just for complicated reasoning. So how can we do that? Right here we’ll outline a classifier that returns “easy” or “complicated” and route the queries accordingly. That is assist us save prices on routine prices.

Instance:

from openai import OpenAI

shopper = OpenAI()

def get_complexity(query):

immediate = f"Price the complexity of the query from 1 to 10 for an LLM to reply. Present solely the quantity.nQuestion: {query}"

res = shopper.chat.completions.create(

mannequin="gpt-5.1",

messages=[{"role": "user", "content": prompt}],

)

return int(res.decisions[0].message.content material.strip())

print(get_complexity("Clarify convolutional neural networks"))Output:

4

So our classifier says the complexity is 4, don’t fear concerning the further LLM name as that is producing solely a single quantity. This complexity quantity can be utilized to route the duties, like: complexity then path to a smaller mannequin, else a bigger mannequin.

3. Utilizing Immediate Caching

If the LLM-system makes use of cumbersome system directions or numerous few-shot examples throughout many calls, then ensure to put them on the begin of your message.

Few essential factors right here:

- Make sure the prefix is precisely similar throughout requests (together with all of the characters, whitespace included).

- Based on OpenAI the supported fashions will routinely profit from Caching however the immediate must be longer than 1,024 tokens.

- Requests utilizing Immediate Caching have a

cached_tokensworth as part of the response.

4. Use the Batch API for Duties that may wait

Many duties don’t require speedy responses, that is the place we are able to use the asynchronous Batch endpoint for the inference. By submitting a file of requests and giving OpenAI upto 24 hours time to course of them, will scale back 50% prices on token prices in comparison with the same old OpenAI API calls.

5. Trim the Outputs with max_tokens and Stops parameters

What we’re attempting to do right here is cease the untrolled token era, Let’s say you want a 75-word abstract or a particular JSON object, don’t let the mannequin hold producing pointless textual content. As a substitute we are able to make use of the parameters:

Instance:

from openai import OpenAI

shopper = OpenAI()

response = shopper.chat.completions.create(

mannequin="gpt-5.1",

messages=[

{

"role": "system",

"content": "You are a data extractor. Output only raw JSON."

}

],

max_tokens=100,

cease=["nn", "}"]

)We’ve set max_tokens as 100 because it’s roughly 75 phrases.

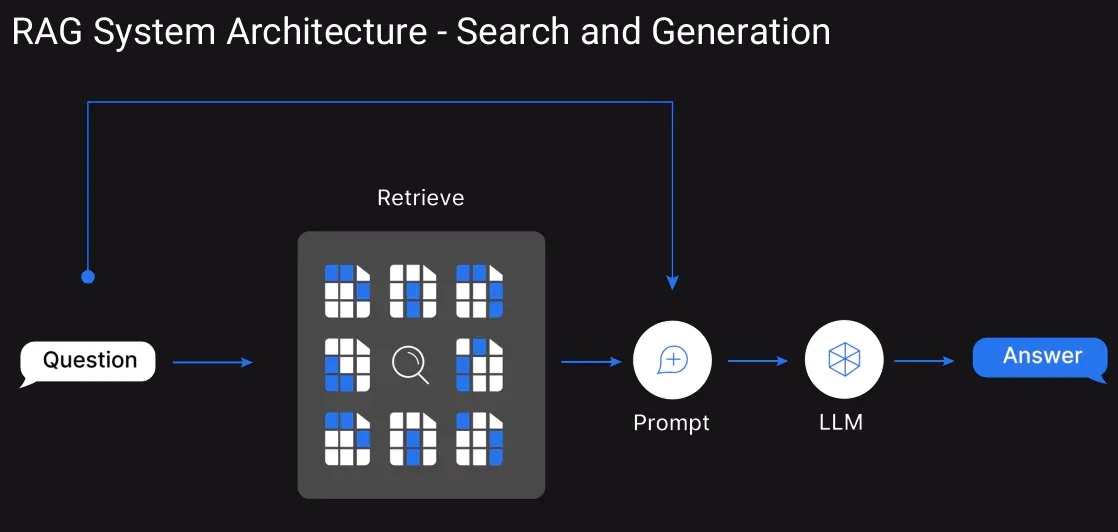

6. Make Use of RAG

As a substitute of flooding the context window, we are able to use Retrieval-Augmented Era. This may assist convert the data base into embeddings and retailer them in a vector database. When a person queries, then all of the context received’t be within the context window however the retrieved high few related textual content chunks might be handed for context.

7. At all times Handle the Dialog Historical past

Right here our focus is on the dialog historical past the place we go the older inputs and outputs. As a substitute of iteratively including the conversations we are able to implement a “sliding window” method.

Right here we drop the oldest messages as soon as the context will get too lengthy (set a threshold), or summarize earlier turns right into a single system message earlier than persevering with. Make sure that the lively context window just isn’t too lengthy because it’s essential for long-running periods.

Perform for summarization

from openai import OpenAI

shopper = OpenAI()

SYSTEM_PROMPT = "You're a concise assistant. Summarize the chat historical past in 30-40 phrases."

def summarize_chat(history_text):

response = shopper.chat.completions.create(

mannequin="gpt-5.1",

messages=[

{"role": "system", "content": SYSTEM_PROMPT},

{"role": "user", "content": history_text}

]

)

return response.decisions[0].message.content materialInference

chat_history = """

Consumer: Hello, I am attempting to grasp how embeddings work.

Assistant: Embeddings flip textual content into numeric vectors.

Consumer: Can I take advantage of them for similarity search?

Assistant: Sure, that’s a standard use case.

Consumer: Good, present me easy code.

Assistant: Positive, here is a brief instance...

"""

abstract = summarize_chat(chat_history)Consumer requested what embeddings are; assistant defined they convert textual content to numeric vectors. Consumer then requested about utilizing embeddings for similarity search; assistant confirmed and supplied a brief instance code snippet demonstrating primary similarity search.

We now have a abstract which could be added to the mannequin’s context window when the enter tokens are above an outlined threshold.

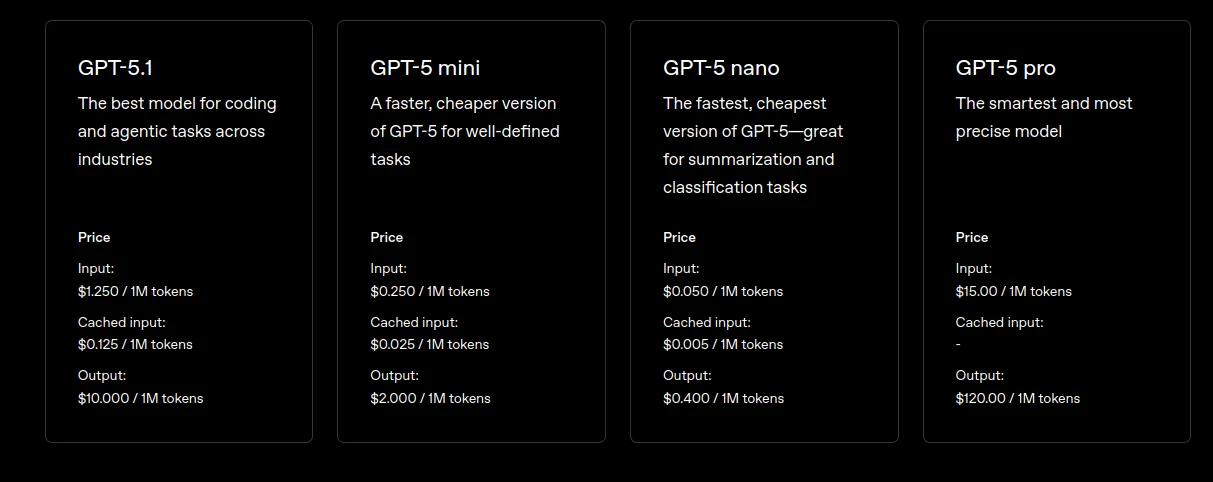

8. Improve to Environment friendly Mannequin Modes

OpenAI regularly releases optimized variations of their fashions. At all times verify for newer “Mini,” or “Nano” variants of the most recent fashions. These are particularly made for effectivity, usually delivering related efficiency for sure duties at a fraction of the associated fee.

9. Implement Structured Outputs (JSON)

Whenever you want information extracted or formatted. Defining a strict schema forces the mannequin to chop the pointless tokens and returns solely the precise information fields requested. Denser responses imply fewer generated tokens in your invoice.

Imports and Construction Definition

from openai import OpenAI

import json

shopper = OpenAI()

immediate = """

You're an extraction engine. Output ONLY legitimate JSON.

No explanations. No pure language. No further keys.

Extract these fields:

- title (string)

- date (string, format: YYYY-MM-DD)

- entities (array of strings)

Textual content:

"On 2025-12-05, OpenAI launched Structured Outputs, permitting builders to implement strict JSON schemas. This improved reliability was welcomed by many engineers."

Return JSON on this precise format:

{

"title": "",

"date": "",

"entities": []

}

"""Inference

response = shopper.chat.completions.create(

mannequin="gpt-5.1",

messages=[{"role": "user", "content": prompt}]

)

information = response.decisions[0].message.content material

json_data = json.masses(information)

print(json_data)Output:

{'title': 'OpenAI Introduces Structured Outputs', 'date': '2025-12-05', 'entities': ['OpenAI', 'Structured Outputs', 'JSON', 'developers', 'engineers']}

As we are able to see solely the required dictionary with the required particulars is returned. Additionally the output is neatly structured as key-value pairs.

10. Cache Queries

Not like our earlier thought of caching, that is fairly totally different. If the customers regularly ask the very same questions, cache the LLM’s response in your personal database. Test this database earlier than calling the API. This cached response is quicker for the person and is virtually free. Additionally if working with LangGraph for Brokers then you possibly can discover this for Node-level-caching: Caching in LangGraph

Conclusion

Constructing with LLMs is highly effective however the scale can shortly make them costly, so understanding the associated fee equation turns into important.By making use of the right combination of mannequin routing, caching, structured outputs, RAG, and environment friendly context administration, we are able to considerably slash inference prices. These methods assist keep the standard of the system whereas guaranteeing the general LLM utilization stays sensible and cost-effective. Don’t overlook to take verify the billing dashboard for the prices after implementing every method.

Often Requested Questions

A. A token is a small unit of textual content, the place roughly 1,000 tokens correspond to about 750 phrases.

A. As a result of output tokens (from the mannequin) are sometimes a number of occasions costlier per token than enter (immediate) tokens.

A. The context window is the short-term reminiscence (earlier inputs and outputs) despatched to the mannequin; an extended context will increase token utilization and thus value.

Login to proceed studying and revel in expert-curated content material.